2026-02-13 19:45:00

I got an email from a reader describing the feeling of blogging as having “the itch, whether or not others read it”.

The itch. I think that’s such a great way to put it. Like not blogging isn’t really an option, unless you’re okay with constantly feeling itchy.

I have quit blogging more than once, but I’ve always ended up coming back to it. Even if I’ve never thought about it that way before, I think what’s brought me back every time has been the itch.

There’s so much more to blogging than just writing posts.

It’s a way to learn more about yourself and others. It puts things into perspective. It’s hard to beat as a creative outlet, in so many different ways.

I’m sure I’ll quit blogging again. But next time I’ll call it pausing instead, because I know the itch will return.

2026-02-13 15:53:00

It's difficult to take care of myself when things are not so bad.

I mean, when there isn't anything inherently wrong with my situation—it’s just a dull period. I follow the routine: eat, sleep, go to work, see my friends occasionally. I live unbothered.

Throughout these periods, I set myself to autopilot mode and live as if life were just a chore. I just complete tasks without truly experiencing any of them.

I don’t think that I should look for problems where there are none; of course, I appreciate the easier times. I just think I need to practice presence even when it doesn't feel like a necessity.

When I’m sad or in trouble, I have to be more alert, so staying present is easier. But in the quiet times, if I'm not careful, I slip away.

The value of any moment is determined by whether or not you are there to witness it.

P.S. I suppose writing is a useful way to interrupt the endless flow of chores and slow down. To write things. To be more present.

2026-02-13 13:31:00

Look, I use AI every single day. I've written about it before -- how it helps me code faster, automate scripts, orchestrate workflows. These tools just work. They save me hours of grunt work and make me more productive.

But here's what bothers me: the breathless exaggeration everywhere I look.

Scroll through X for five minutes and you'll see "essays" claiming AI has "judgment" or "taste" or "intuition." That it "knows the right call." Come on. What feels like judgment is actually careful prompting, context management, skills.md, memory.md, and statistical pattern matching -- all designed by the meat bags.

This hype misleads people. It inflates expectations and warps how the public understands what these tools actually do.

What really gets me are the non-technical people writing these dramatic thinkpieces. You know the ones: "AI has reached a new level of judgment..." followed by the same tired predictions about replacing writers and coders we've been hearing for years. It's just clickbait.

And then there's the practical insanity this hype creates. People are rushing out to buy Mac minis to run something like OpenClaw (which is still bloated and messy) without even testing it. They don't spin up a $5 VPS or use a container to validate whether it works for their use case. They just drop hundreds of dollars on hardware because the hype train says "you need this NOW" and common sense goes out the window.

The problem is simple: hype-men hype. When the AI outputs something coherent or stylish, they say "bUt iT fEeLs dIfFeReNt tHiS tImE". You’ve been feeling that every time a new AI model drops, buddy.

Here's what AI actually is: human design, massive datasets, and careful optimization. It's impressive, sure. But it's not magic. It improves workflows and generates useful solutions, but it doesn't "decide" or "judge" like we do. It's a tool -- a really good one -- but still just a tool that depends entirely on us.

Want to celebrate what it does? Go on. But stop pretending it's something it's not. Please talk about AI honestly -- get excited about real capabilities, not made-up ones.

And respectfully, shove the taste and agency up yours, pal.

2026-02-13 08:28:00

If you're reading this and you don't have a Facebook or Xitter account (because you either deleted it or never had one to begin with), give yourself a pat on the back. This shit is hard, as evidenced by the fact that Facebook alone still has three billion active users in any given month.

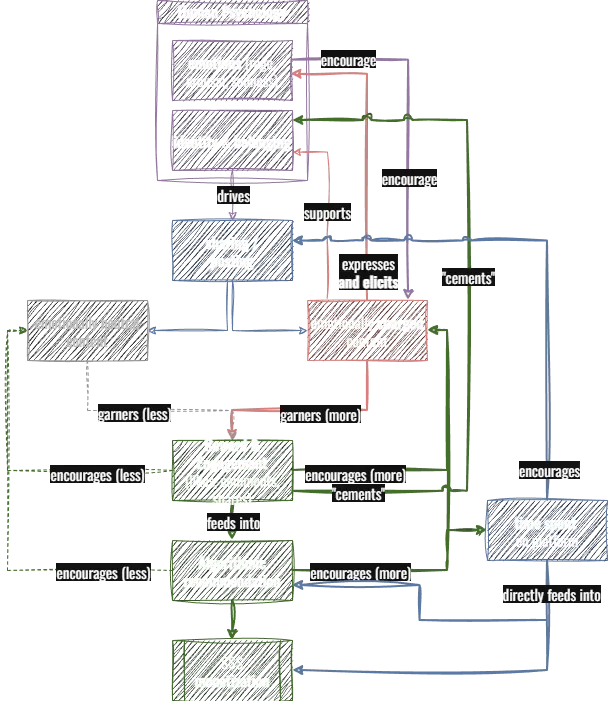

We'll take a look at the vicious cycle of:

And how this can cause harm to us as individuals and to our society.

We'll end on a brief note on how non-algorithmic alternatives do exist, and why it may still be hard for people to leave the existing platforms.

The situation we currently find ourselves in is that, somehow, the billionaire-owned, walled-garden "social networks" are still popular.

At this point, "social media" may be a bit of a misnomer. I like a term I recently came across in the show notes of a podcast I enjoy:¹ let's call them "algorithmic media" instead. I find that this name is a better fit, for a couple of reasons:

At this moment in time, more so than ever, algorithmic media may no longer be serving us, their users: not serving us as individual humans, not serving us as a society, not serving us in how we govern ourselves politically.

If you follow privacy, digital-sovereignty, or anti-surveillance conversations, none of this will be news to you. But if you’re newer here (welcome!) or are sharing this with someone just beginning to explore alternatives to billionaire-owned algorithmic media, here's a quick recap of the lowlights:

As things stand, many of us find ourselves "locked in" to platforms that keep us in a cycle much like this one:

A flowchart visualizing the relationships between human psychology, content sharing, engagement, rewards, and monetization. (Which, in case you can't tell, is not AI-generated; I created it by hand using draw.io, for better or worse.) I have done my best to provide a detailed verbal description of the flow below.

My attempt at a verbal summary of the above flowchart is:

Short answer: No.

For a number of reasons. One of the most critical is that the algorithmic media platforms themselves did not start out this way. YouTube did not have ads when it launched. Instagram had a non-algorithmic, chronological Most Recent feed until 2016. Xitter didn't show promoted content in people's timelines until 2010. Facebook, according to Cory Doctorow, began as an alternative to MySpace that promised it wouldn't spy on you.

This illustrates two things:

Cool! So now we have the CliffsNotes version of why people may want to look beyond these billionaire-owned platforms for alternatives: alternatives that are more privacy-friendly, that show you the content you signed up to see, and whose explicit goal is not to keep you glued to the screen for as long as possible while doing a number on your mental health along the way. Great news:

The alternatives exist. They are out there: ActivityPub, the Fediverse, Mastodon, Pixelfed, Ghost, and many others. You can join them today.

And yet... Even folks who know all of the above, even folks who agree with all of the above, even folks who continue to have shoddy experiences on the algorithmic networks often can't seem to shake themselves loose of those platforms.

Why is that? Because of Network Effects. More on those in the next post!

As always, please feel free to get in touch with thoughts or comments! You can email or find me on Mastodon.


¹ Dr. Thomas Schwenke in Episode 145 of "Auslegungssache" by c't.

² Side note: There is a lot more to this, like social goals, negativity biases, etc., but I didn't want to explode the flowchart right at the first node. If you are interested in a more nuanced approach, click some of the reference links in this article and explore from there!

2026-02-13 02:42:00

No, seriously, I do. It’s not perfect; in fact, it’s downright fucked at times, and there have been many times in my life when I’ve wished it away in the dead of night.

But when I truly sit back and think about it, my life has made me who I am and there is only one of me.

I will return to stardust when it’s my time. For now, I’ll take the good times with the bad, because I know it’ll be alright in the end.

2026-02-13 00:35:00

There I was, minding my own business, plodding through the working day, getting on with my tasks.

A colleague asked me to proofread his report and offer feedback. I am the kind of guy who lets a lot of things slip. I appreciate that we all write with different styles, grammar, and tone; we all mangle punctuation to suit.

If I am asked to critique, I prefer a constructive approach rather than a "hang on while I rewrite this in my own style" one. Today I simply suggested that the report open with a summary paragraph introducing the topic, since it had launched straight into a discussion, assuming the reader was already familiar with the subject.

I kindly offered an opening paragraph of a few sentences.

His reply, and I quote:

Thanks for that. Totally agree. Your opening paragraph is amazing and sets the scene perfectly. Did you run it through AI?

Well, you can imagine my reaction upon reading this. I was incandescent with rage.

How 🤬 dare someone assume that I've used a machine to write this. I am more than capable of stringing a few sentences together. I am paid to do it. I am not paid to force words into a sausage machine and accept the resultant diatribe.

I put my flowery language to the side and offered a quick response, in a jocular fashion:

Hi Bob (fake name), have you ever met me? How long have we worked together? Surely you know my feelings towards AI?

Rest assured, those are all my own words. No super-computer, consuming megawatts of energy, was needed. Just my little brain.

Glad I could help with the report. It's a good read.

And breathe!

Is this what the world is becoming? Everyone assuming that we are all sitting at our screens, eagerly anticipating what the artificial monsters will say?

Can I get off the world please?!
