2026-03-06 00:30:58

When we build software systems, one of the most important goals is making sure they can handle large amounts of work efficiently.

High-throughput systems are capable of processing vast quantities of data or transactions in a given timeframe. Throughput refers to the amount of work a system completes in a specific time period. For example, a web server might process 10K requests per second, or a database might handle 50K transactions per minute. The higher the throughput, the more work gets done in the same amount of time.

Throughput is different from latency. Latency measures how long it takes to complete a single operation, from start to finish, while throughput measures the volume of operations the system handles over time. For example, a system can have low latency but low throughput if it processes each request quickly but can only handle a few at once. Conversely, a system might have high throughput but high latency if it processes many requests simultaneously but each request takes longer to complete.

There is often a tradeoff between these two metrics. When we batch multiple operations together, we increase throughput because the system processes many items at once. However, this batching introduces waiting time for individual operations, which increases latency. Similarly, processing every request immediately reduces latency but may limit throughput if the system becomes overwhelmed.
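To make the batching tradeoff concrete, here is a small back-of-the-envelope model. It rests on a hypothetical cost model that is not from this article: each batch pays a fixed overhead, each item adds a per-item cost, and requests arrive at a steady gap, so the first request in a batch waits for the rest of the batch to arrive.

```python
def batch_metrics(batch_size: int, overhead_ms: float = 10.0,
                  per_item_ms: float = 1.0, arrival_gap_ms: float = 2.0):
    """Return (throughput in items/s, worst-case latency in ms) for one batch size.

    Hypothetical cost model: a batch takes overhead_ms + batch_size * per_item_ms
    to process, and a new request arrives every arrival_gap_ms.
    """
    batch_time_ms = overhead_ms + batch_size * per_item_ms
    # Throughput: items completed per second while the processor is busy.
    throughput = batch_size / batch_time_ms * 1000.0
    # The first request in a batch waits for the other batch_size - 1 requests
    # to arrive, then for the whole batch to be processed.
    worst_latency_ms = (batch_size - 1) * arrival_gap_ms + batch_time_ms
    return throughput, worst_latency_ms


for size in (1, 10, 50):
    tput, lat = batch_metrics(size)
    print(f"batch={size:>3}  throughput={tput:7.1f} items/s  worst latency={lat:6.1f} ms")
```

With these assumed numbers, a batch size of 1 caps throughput at about 91 items/s with an 11 ms worst-case latency, while a batch of 50 raises throughput to about 833 items/s but makes the first request in each batch wait 158 ms.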

In this article, we will go through the fundamental concepts and practical strategies for building systems that can handle high volumes of work without breaking down under pressure.

2026-03-05 00:30:49

Building with LangChain agents is easy. Running them reliably in production is not. As agent workflows grow in complexity, you need visibility, fault tolerance, retries, scalability, and human oversight. Orkes Conductor provides a durable orchestration layer that manages multi-agent workflows with state management, error handling, observability, and enterprise-grade reliability. Instead of stitching together fragile logic, you can coordinate agents, tools, APIs, and human tasks through a resilient workflow engine built for scale. Learn how to move from experimental agents to production-ready systems with structured orchestration.

2026 has already started strong. In January alone, Moonshot AI open-sourced Kimi K2.5, a trillion-parameter model built for multimodal agent workflows. Alibaba shipped Qwen3-Coder-Next, an efficient coding model designed for agentic coding. OpenAI launched a macOS app for its Codex coding assistant. These are recent moves in trends that have been building for months.

This article covers five key trends that will likely shape how teams build with AI this year.

Early language models like GPT-4 generated answers directly. You asked a question, and the model started producing text token by token. This works for simple tasks, but it often fails on harder problems where the first attempt is wrong, like advanced math or multi-step logic.

Newer models, starting with OpenAI’s o1, changed this by spending time “thinking” before answering. Instead of jumping straight to the final response, they generate intermediate steps and then produce the answer. The model spends more time and computing power, but it can solve much harder problems in logic and multi-step planning.

After o1, many teams focused on training reasoning models. By early 2026, most major AI labs had either released a reasoning model or added reasoning to their main product.

A key method that made training reasoning models practical at scale was Reinforcement Learning with Verifiable Rewards (RLVR). Although RLVR was first introduced in AI2’s Tülu 3, DeepSeek-R1 brought the approach to mainstream attention by applying it at scale. To understand how RLVR improves on previous methods, it helps to look at the standard training pipeline.

LLM training has two main stages: pre-training and post-training. During post-training, a Reinforcement Learning (RL) algorithm lets the model practice. The model generates responses, and the algorithm updates its weights so better responses become more likely over time.

To decide which responses are better, AI labs traditionally trained a separate reward model as a proxy for human preferences. This involved collecting preference data from humans, training the reward model on that data, and using it to guide the LLM. This approach is known as Reinforcement Learning from Human Feedback (RLHF).

RLHF creates a bottleneck. It depends on humans labeling data, which is slow and expensive at large scale. It also gets harder when the task is complex, because people cannot reliably judge long reasoning traces.

RLVR removes this bottleneck. It still uses reinforcement learning, but the reward comes from checking correctness instead of predicting what a human would prefer. In domains like math or coding, many tasks have answers that can be checked automatically. The system checks if the code runs or if the math solution matches the ground truth. If it does, the model gets a reward. No separate reward model is needed.
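Here is a minimal sketch of what a verifiable reward can look like, assuming exact-match checking for math answers and test-based checking for code. The function names and the binary 0/1 reward are illustrative assumptions, not DeepSeek's or AI2's actual implementation, and a real system would run generated code in an isolated sandbox rather than with `exec`.

```python
def math_reward(model_answer: str, ground_truth: str) -> float:
    """Return 1.0 if the final answer matches the reference, else 0.0."""
    # Normalize surrounding whitespace so "42 " and "42" count as the same answer.
    return 1.0 if model_answer.strip() == ground_truth.strip() else 0.0


def code_reward(source: str, func_name: str, tests: list[tuple[tuple, object]]) -> float:
    """Return 1.0 if the generated function passes every test case, else 0.0."""
    namespace: dict = {}
    try:
        # Illustration only: untrusted code must run in an isolated sandbox in practice.
        exec(source, namespace)
        func = namespace[func_name]
        return 1.0 if all(func(*args) == expected for args, expected in tests) else 0.0
    except Exception:
        # Code that fails to compile or crashes earns no reward.
        return 0.0


candidate = "def add(a, b):\n    return a + b\n"
print(math_reward("42", " 42 "))                                   # 1.0
print(code_reward(candidate, "add", [((1, 2), 3), ((-1, 1), 0)]))  # 1.0
```

Both checks are cheap and fully automatic, so they can score millions of sampled responses without a human labeler or a learned reward model.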

RLVR enables scalable training because correctness checks can run quickly and automatically. The model can practice on millions of problems with immediate feedback. DeepSeek-R1 showed that this approach could reach frontier-level reasoning, shifting the main bottleneck from human labeling to available compute.

Today, most major AI labs use reasoning in training, and many use RLVR. As a result, reasoning alone is no longer a differentiator. The focus has shifted to efficiency.

AI teams are now working on adaptive reasoning, where the model adjusts its effort based on how hard a prompt is. Instead of spending many tokens on a simple greeting, models reserve deep thinking for problems that actually need it. Gemini 3 is a concrete example. It supports a thinking_level control and uses dynamic thinking by default, so it can vary how much reasoning it applies across prompts. This focus on efficiency will make reasoning models practical for real-world use cases where speed and cost matter.

Early language models were good at generating text, but they could not take actions. If you asked a model to book a flight, it could describe the steps but could not use a booking system. And because it could not check the real world, it often guessed. If you asked “Is the restaurant open right now?”, it might answer from old information instead of checking live hours.

These limits led to the rise of AI agents. An agent combines an LLM with tools and runs it in a loop, allowing it to plan and act. Instead of directly generating the final answer, an agent can take a goal, break it into steps, run tools, and use the results to decide what to do next.

Most agents share the same structure. A language model interprets the request and picks the next step. Tools connect the model to external systems like search, calendars, files, or APIs. A loop runs actions, inspects results, and retries or changes course when something fails.
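The loop described here can be sketched without any framework. In this sketch the "model" is a hypothetical stand-in function that picks the next step from the conversation so far; a real agent would call an LLM at that point. The tool name, the action format, and the step limit are assumptions for illustration.

```python
from typing import Callable


# Hypothetical tool: a plain function the agent is allowed to call.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # stub result; a real tool would call a weather API


TOOLS: dict[str, Callable[[str], str]] = {"get_weather": get_weather}


def fake_model(history: list[dict]) -> dict:
    """Stand-in for an LLM: call a tool once, then answer using its result."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        return {"type": "tool_call", "tool": "get_weather", "arg": "SF"}
    return {"type": "final", "content": f"Answer: {tool_results[-1]['content']}"}


def run_agent(goal: str, model=fake_model, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": goal}]
    for _ in range(max_steps):
        action = model(history)                # 1. the model picks the next step
        if action["type"] == "final":
            return action["content"]           # 2. stop once the goal is met
        tool = TOOLS.get(action["tool"])
        if tool is None:                       # 3. unknown tool: report it so the model can change course
            history.append({"role": "tool", "content": f"error: no tool {action['tool']}"})
            continue
        history.append({"role": "tool", "content": tool(action["arg"])})  # 4. act, then observe
    return "Stopped: step limit reached"


print(run_agent("What's the weather in SF?"))  # Answer: Sunny in SF
```

The step limit matters: without it, a model that never produces a final answer would loop forever.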

Agents are no longer experimental. They are shipping in real products. OpenAI’s ChatGPT agent can browse the web and complete tasks on your behalf. Anthropic’s Claude can use tools, write and run code, and work through multi-step problems.

Three developments made this possible. First, reasoning improved. Models got better at planning multi-step work, keeping track of intermediate results, and choosing the next action instead of jumping to a final answer.

Second, tool connections became easier. In the past, every tool integration was custom. Protocols like Anthropic’s Model Context Protocol (MCP) reduced the friction of connecting models to external systems. Adding a new tool now takes just a few lines of code.

Third, frameworks like LangChain and LlamaIndex matured. They made it easier to build agents without starting from scratch. They provide ready-made components for tool use, multi-step flows, and logging. This lowered the barrier and let more teams experiment with agents.

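```python
from langchain_core.tools import tool
from langchain_ollama import ChatOllama
from langchain.agents import create_agent


# Example tools (stubs for illustration): replace the bodies with real API calls.
@tool
def get_weather(city: str) -> str:
    """Get the current weather for a city."""
    return f"It is sunny in {city}."


@tool
def web_search(query: str) -> str:
    """Search the web and return a short summary of the results."""
    return f"No live results for {query!r} (stub)."


# Create an LLM instance (the model must support tool calling in Ollama)
llm = ChatOllama(model="gemma3:1b")

# Create your tool list
tools = [get_weather, web_search]

# Create your agent
agent = create_agent(llm, tools)

# Call your agent using agent.invoke; the result holds the full message history
result = agent.invoke({"messages": [{"role": "user", "content": "Events in SF"}]})
print(result["messages"][-1].content)
```

Agents are good at short workflows, but they still struggle when tasks run long. Over dozens of steps, they can lose context and make mistakes that compound. They are also limited by their default access. Many agents run in sandboxed environments and cannot see your email, files, or local apps unless you connect them.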

A likely trend in 2026 is persistent agents that address both problems. These are always-on assistants designed to handle longer workflows over extended periods. Many will run locally, making it easier to connect with your files, apps, and system settings while keeping data under your control. OpenClaw is an early example of this shift toward personal agents that run on your own hardware.

More access also increases risk. When agents can read personal data and take actions, mistakes matter more. So a major focus in 2026 will be reliability and security. Reliability means staying on track, recovering from errors, and behaving predictably over long tasks. Security means protecting data, resisting prompt injection, and avoiding irreversible actions without explicit approval.
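One simple way to enforce "no irreversible actions without explicit approval" is to route every tool call through a gate that asks for confirmation first. This is a minimal sketch under stated assumptions: the set of irreversible tool names and the `approve` callback are hypothetical, and a real assistant would collect approval through its user interface.

```python
from typing import Callable

# Hypothetical tools that change the outside world and cannot be undone.
IRREVERSIBLE = {"send_email", "delete_file", "make_payment"}


def execute_tool(name: str, args: dict, tools: dict[str, Callable[..., str]],
                 approve: Callable[[str, dict], bool]) -> str:
    """Run a tool call, but require explicit approval for irreversible actions."""
    if name not in tools:
        return f"refused: unknown tool {name}"
    if name in IRREVERSIBLE and not approve(name, args):
        # The agent sees the refusal and must choose a different next step.
        return f"refused: {name} needs user approval"
    return tools[name](**args)


tools = {
    "read_file": lambda path: f"contents of {path}",
    "delete_file": lambda path: f"deleted {path}",
}
deny_all = lambda name, args: False
print(execute_tool("read_file", {"path": "notes.txt"}, tools, deny_all))    # contents of notes.txt
print(execute_tool("delete_file", {"path": "notes.txt"}, tools, deny_all))  # refused: delete_file needs user approval
```

Read-only tools pass straight through, so the gate adds friction only where a mistake would be costly.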

AI started helping software engineers with simple autocomplete. But the capability was limited. The model could only see the immediate area around your cursor, maybe a few lines before and after. It did not understand the full codebase, the project structure, or what you were trying to build.

That changed when AI labs applied the agent approach to coding. Rather than relying on general-purpose models, they trained specialized LLMs through extensive fine-tuning on code repositories, documentation, and programming patterns. They also replaced generic tools with coding-specific ones like read_file, search_codebase, edit_file, run_terminal_command, and execute_tests.

The result is a model that understands software engineering practices like project structure, dependencies, and debugging, and knows how to use its tools to complete tasks. When you give it a complex task, it decides which tools to call and in what order to finish the job.
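To make coding-specific tools concrete, here is a minimal sketch of three of them (`read_file`, `search_codebase`, `edit_file`) built on the Python standard library. The signatures and behavior are assumptions for illustration; Claude Code, Codex, and other coding agents define their own tools.

```python
import tempfile
from pathlib import Path


def read_file(root: Path, rel_path: str) -> str:
    """Return the text of a file inside the repository."""
    return (root / rel_path).read_text()


def search_codebase(root: Path, needle: str, pattern: str = "*.py") -> list[str]:
    """Return 'path:line_no: line' for every line containing needle."""
    hits = []
    for path in sorted(root.rglob(pattern)):
        for no, line in enumerate(path.read_text().splitlines(), start=1):
            if needle in line:
                hits.append(f"{path.relative_to(root)}:{no}: {line.strip()}")
    return hits


def edit_file(root: Path, rel_path: str, old: str, new: str) -> bool:
    """Replace exactly one occurrence of old with new; refuse ambiguous edits."""
    path = root / rel_path
    text = path.read_text()
    if text.count(old) != 1:
        return False  # zero or multiple matches: the model must be more specific
    path.write_text(text.replace(old, new))
    return True


# Usage on a throwaway repository with one typo to fix.
with tempfile.TemporaryDirectory() as tmp:
    repo = Path(tmp)
    (repo / "app.py").write_text("def greet():\n    return 'helo'\n")
    print(search_codebase(repo, "helo"))
    print(edit_file(repo, "app.py", "'helo'", "'hello'"))  # True
    print(read_file(repo, "app.py"))
```

Requiring a unique match in `edit_file` is one way to keep a model from silently changing the wrong spot in a large file.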

Powerful proprietary coding agents like Anthropic’s Claude Code and OpenAI’s Codex are driving this shift. They can read an entire repository and understand complex project structures. At the same time, open-source models have narrowed the gap. Qwen3-Coder-Next, an 80B-parameter model released in early 2026, reached performance close to top closed models while running locally on consumer hardware.

Coding agents are one of the most visible places where AI has already changed day-to-day work. Engineers can ask for repo-level fixes and improvements and get working patches much faster. These tools also lowered the barrier to entry. People with less coding experience can build working apps using services built on top of these agents, like Replit and Lovable.

The baseline for coding agents is no longer just writing code. It is managing software at scale. Three areas will likely see the most progress.

Deeper repository-level understanding. Current agents sometimes lose track of how files relate to each other in large codebases. Better tracking of dependencies, architecture, and cross-file context will let agents handle bigger and more complex projects reliably.

Security-aware coding. As agents write more production code, catching vulnerabilities before they ship becomes critical. Expect agents to build security scanning and automated test generation directly into their workflow, rather than treating them as separate steps.

Faster completions. Today’s agents can be slow on complex tasks, sometimes taking minutes to plan and execute a multi-file change. AI labs are actively working on reducing the time from request to working code, making agents practical for more real-time development work.

For the first few years of the LLM era, the most capable models were closed. If you wanted top performance, you used APIs from labs like OpenAI, Anthropic, or Google. You could not access the weights, run models locally, or fine-tune them. Open-weight models existed, but they lagged behind.

That gap did not last long. It narrowed faster than most people expected in two phases: a defining DeepSeek moment, followed by rapid momentum.

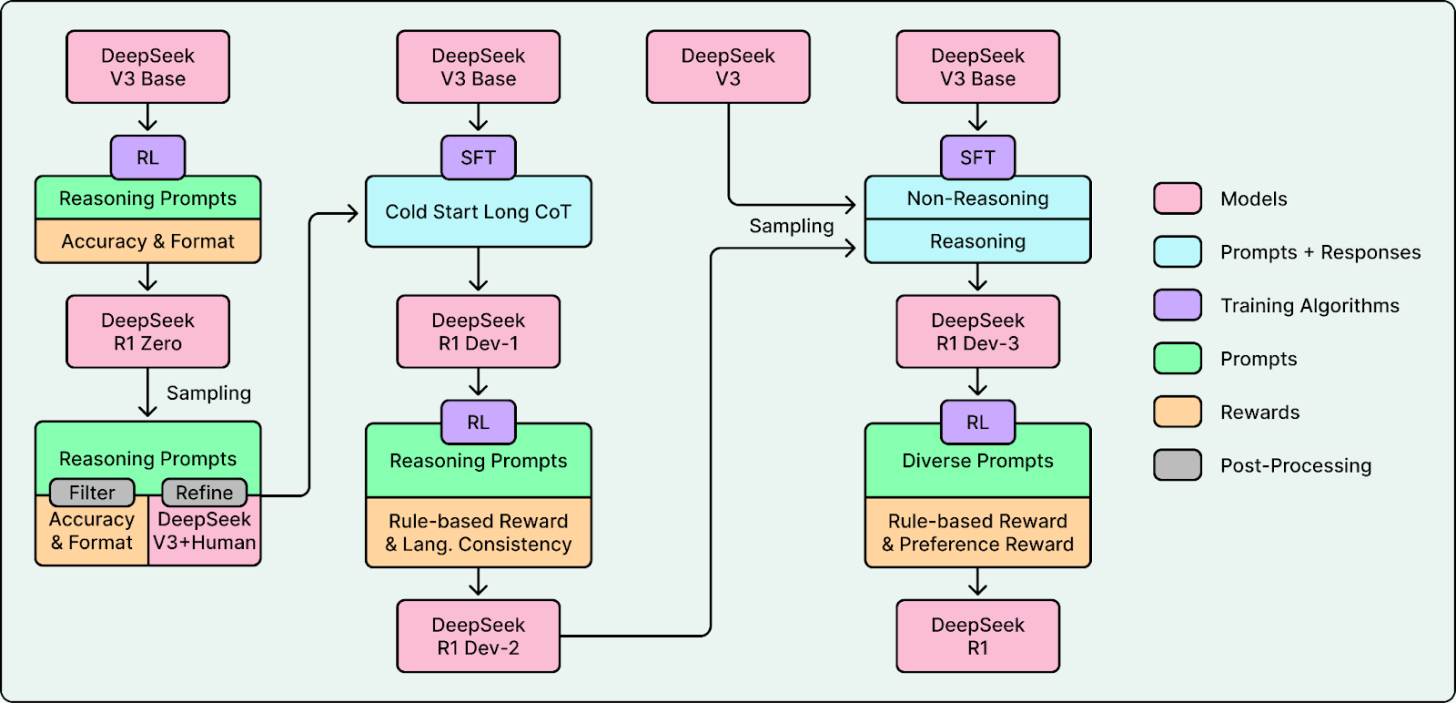

In January 2025, DeepSeek released DeepSeek-R1 and open-sourced its weights, code, and training approach. The reasoning model matched or exceeded closed competitors on key benchmarks. It showed that frontier-level reasoning did not require a proprietary API. People started calling similar breakthroughs a “DeepSeek moment.”

A key reason R1 stood out was its training approach. Before this, many chatbots leaned heavily on RLHF during post-training, the approach popularized by early ChatGPT. DeepSeek leaned heavily on RLVR, which scales better on verifiable tasks like math and coding. That made it easier to train reasoning ability with much less human labeling.

After that, more labs released full weights and training details. Alibaba’s Qwen family became a major base for open development. GLM from Z.ai pushed multilingual and multimodal capability into the open ecosystem. Moonshot’s Kimi family shipped strong agentic and tool-use features. With this momentum, more teams entered and the open-weight ecosystem got much stronger.

In August 2025, OpenAI released gpt-oss, its first open-weight models since GPT-2. The release included 120B- and 20B-parameter models under the Apache 2.0 license. Mistral, Meta, and the Allen Institute also shipped competitive releases.

With detailed technical reports and working recipes, techniques spread quickly. Teams replicated results, improved them, and shipped variants. Today, open-weight models are close to top closed models on many standard benchmarks.

In 2026, open-weight releases are no longer surprising. The next wave of progress will focus less on scale and more on efficiency, practical deployment, and agent capabilities.

Architectural Efficiency. Architectures are getting more efficient, often pairing sparse mixture-of-experts (MoE) designs, where only a small part of the model is active per token, with long context windows. Qwen3-Coder-Next is one example, with an ultra-sparse setup and a 256k native context window.
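The idea that only a small part of the model is active per token can be sketched as top-k expert routing. This toy version assumes scalar inputs, four toy experts, and hand-picked gate scores; real MoE layers use learned gating networks over vectors, and this is not the routing scheme of Qwen3-Coder-Next or any specific model.

```python
import math
from typing import Callable


def moe_forward(x: float, gate_logits: list[float],
                experts: list[Callable[[float], float]], k: int = 2) -> tuple[float, list[int]]:
    """Route input x to the top-k experts and mix their outputs.

    Only k experts run; the rest are skipped entirely, which is where the
    compute savings of sparse MoE come from.
    """
    # Pick the k experts with the highest gate scores (stable order on ties).
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected experts' scores only.
    m = max(gate_logits[i] for i in top)
    weights = [math.exp(gate_logits[i] - m) for i in top]
    total = sum(weights)
    out = sum(w / total * experts[i](x) for w, i in zip(weights, top))
    return out, top


experts = [lambda v, s=s: v * s for s in (1.0, 2.0, 3.0, 4.0)]  # 4 toy experts
y, used = moe_forward(1.0, gate_logits=[0.1, 2.0, 2.0, -1.0], experts=experts, k=2)
print(used, y)  # [1, 2] 2.5
```

Here half of the experts do no work for this input; in a large model with many experts and a small k, the active fraction per token is much smaller.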

Agent Readiness. Open-weight models are being trained for agent use, not just chat. Tool use, structured outputs, and long-context reasoning are designed in from the start. As agents become central to how AI delivers value, agent-ready open-weight models will power more autonomous workflows.

Easier deployment. Lower barriers to running these models are emerging through new inference formats and compression techniques. Hardware vendors are also stepping up with direct support for open-weight models at launch, treating them as first-class deployment targets.
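Quantization is one widely used compression technique behind easier deployment. The sketch below shows symmetric per-tensor int8 quantization of a short list of weights; it is a simplified illustration, not the format any particular vendor or inference runtime ships.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 values in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values."""
    return [v * scale for v in q]


w = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(w)
print(q)  # [50, -127, 0, 100]
```

Each weight now needs one byte instead of four for float32, and because the scale comes from the largest magnitude, every dequantized value lands within half a quantization step (`scale / 2`) of the original.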

Most early chatbots were text-in, text-out. Even as they improved, they stayed text-centric. Images, audio, and video were often handled by separate systems. Early image generators could produce striking visuals, but results were inconsistent and hard to control.

This changed in two ways: chatbots became natively multimodal, and generation models improved dramatically.

The era of text-only models ended as leading models became natively multimodal. Gemini 3 and GPT-5 can handle text and images in a single system, and their products also support richer media interactions. On the open-weight side, Qwen2.5-VL shows similar vision-language capability with strong visual understanding across modalities.

This unified approach enables more natural interactions and new use cases. For example, you can upload a diagram, ask questions about specific elements, and get answers that reference visual details, all within one conversation.

Image and video generation also improved, moving from demos to real tools. OpenAI’s Sora 2 showed video generation at a level that forced the industry to take it seriously. Google’s Veo 3.1, released in October 2025 and updated in January 2026, pushed video generation with richer audio and stronger editing controls like object insertion. Nano Banana Pro (Gemini 3 Pro Image), launched in November 2025, improved image generation and editing, especially text rendering and control.

Two trends will likely define the next phase of multimodal progress: physical AI and world models.

Physical AI systems such as robots are moving from research into real deployments. CES 2026 featured a wave of humanoid robot demos across many companies. Boston Dynamics unveiled its electric Atlas and announced a partnership with Google DeepMind to integrate Gemini Robotics models. Tesla has also said it plans to ramp Optimus, targeting very high production over time.

These systems combine vision-language understanding, reinforcement learning, and planning. As Jensen Huang put it around CES 2026, “The ChatGPT moment for robotics is here,” pointing to physical AI models that can understand the real world and plan actions.

The video generation systems described above are learning something deeper than how to produce realistic pixels. They are building basic models of how the physical world works, systems that can simulate physics, predict outcomes, and reason about the real world.

In November 2025, Yann LeCun left Meta to launch AMI Labs, raising €500M to build AI systems that understand physics rather than just predicting text. Google DeepMind released Genie 3, the first real-time interactive world model generating persistent 3D environments. NVIDIA’s Cosmos Predict 2.5, trained on 200 million curated video clips, unifies text-to-world, image-to-world, and video-to-world generation for training robots and autonomous vehicles in simulated environments.

Training better world models will likely continue through 2026. If models can simulate environments reliably, they become a foundation for training robots, autonomous vehicles, and other systems that must operate in the physical world. Video generation, robotics, and simulation are starting to converge into one direction. 2026 will show whether that convergence accelerates or stalls.

2026 will not be defined by a single breakthrough. It will be shaped by capabilities that now exist together and reinforce each other. These capabilities are already combining to enable new workflows, from autonomous code refactoring to robots learning tasks through simulated environments. It will be an interesting year to watch.

2026-03-04 00:30:27

Start reading the latest version of the book everyone is talking about.

The second edition of Designing Data-Intensive Applications was just published – with significant revisions for AI and cloud-native!

Martin Kleppmann and Chris Riccomini help you navigate the options and tradeoffs for processing and storing data for data-intensive applications. Whether you’re exploring how to design data-intensive applications from the ground up or looking to optimize an existing real-time system, this guide will help you make the right choices for your application.

Peer under the hood of the systems you already use, and learn how to use and operate them more effectively

Make informed decisions by identifying the strengths and weaknesses of different approaches and technologies

Understand the distributed systems research upon which modern databases are built

Go behind the scenes of major online services and learn from their architectures

We’re offering 3 complete chapters. Start reading it today.

Agoda generates and processes millions of financial data points (sales, costs, revenue, and margins) every day.

These metrics are fundamental to daily operations, reconciliation, general ledger activities, financial planning, and strategic evaluation. They not only enable Agoda to predict and assess financial outcomes but also provide a comprehensive view of the company’s overall financial health.

Given the sheer volume of data and the diverse requirements of different teams, the Data Engineering, Business Intelligence, and Data Analysis teams each developed their own data pipelines to meet their specific demands. The appeal of separate data pipeline architectures lies in their simplicity, clear ownership boundaries, and ease of development.

However, Agoda soon discovered that maintaining separate financial data pipelines, each with its own logic and definitions, could introduce discrepancies and inconsistencies that could impact the company’s financial statements. In other words, there was no single source of truth, which is a serious problem for financial data.

In this article, we will look at how the Agoda engineering team built a single source of truth for its financial data and the challenges encountered.

Disclaimer: This post is based on publicly shared details from the Agoda Engineering Team. Please comment if you notice any inaccuracies.

A data pipeline is an automated system that extracts data from source systems, transforms it according to business rules, and loads it into databases where analysts can use it.
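A minimal sketch of the extract, transform, and load steps, using Python's built-in `sqlite3` as both source and destination. The table names, columns, and the rule "net revenue is price minus refund, cancelled bookings excluded" are hypothetical stand-ins for real business rules, not Agoda's.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE bookings (id INTEGER, price REAL, refund REAL, status TEXT);
    INSERT INTO bookings VALUES (1, 100.0, 0.0, 'confirmed'),
                                (2, 80.0, 20.0, 'confirmed'),
                                (3, 50.0, 0.0, 'cancelled');
    CREATE TABLE fact_revenue (booking_id INTEGER, net_revenue REAL);
""")

# Extract: read raw rows from the source system.
rows = conn.execute("SELECT id, price, refund, status FROM bookings").fetchall()

# Transform: apply the (hypothetical) business rule.
transformed = [(bid, price - refund) for bid, price, refund, status in rows
               if status == "confirmed"]

# Load: write the result into the table analysts query.
conn.executemany("INSERT INTO fact_revenue VALUES (?, ?)", transformed)
conn.commit()

print(conn.execute("SELECT SUM(net_revenue) FROM fact_revenue").fetchone()[0])  # 160.0
```

If a second team wrote its own transform step with a slightly different rule, for example without excluding cancellations, the two pipelines would report different revenue from the same source table.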

The high-level architecture of multiple data pipelines, each owned by different teams, introduced several fundamental problems that affected both data quality and operational efficiency.

First, duplicate sources became a significant issue. Many pipelines pulled data from the same upstream systems independently. This led to redundant processing, synchronization issues, data mismatches, and increased maintenance overhead. When three different teams all read from the same booking database but at slightly different times or with different queries, this wastes computational resources and can lead to scenarios where teams are looking at slightly different versions of the same underlying data.

Second, inconsistent definitions and transformations created confusion across the organization. Each team applied its own logic and assumptions to the same data sources. As a result, financial metrics could differ depending on which pipeline produced them. For instance, the Finance team might calculate revenue differently from the Analytics team, leading to conflicting reports when executives ask fundamental questions about business performance. This undermined data reliability and created a lack of trust in the numbers.

Third, the lack of centralized monitoring and quality control resulted in inconsistent practices across teams. The absence of a unified system for tracking pipeline health and enforcing data standards meant that each team implemented its own quality checks. This resulted in duplicated effort, inconsistent practices, and delays in identifying and resolving issues. When problems did occur, it was difficult to determine which pipeline was the source of the issue.

See the diagram below:

During a recent review, Agoda observed that differences in data handling and transformation across these pipelines led to inconsistencies in reporting, as well as operational delays.

AI coding tools are fast, capable, and completely context-blind. Even with rules, skills, and MCP connections, they generate code that misses your conventions, ignores past decisions, and breaks patterns. You end up paying for that gap in rework and tokens.

Unblocked changes the economics.

It builds organizational context from your code, PR history, conversations, docs, and runtime signals. It maps relationships across systems, reconciles conflicting information, respects permissions, and surfaces what matters for the task at hand. Instead of guessing, agents operate with the same understanding as experienced engineers.

You can:

Generate plans, code, and reviews that reflect how your system actually works

Reduce costly retrieval loops and tool calls by providing better context up front

Spend less time correcting outputs for code that should have been right in the first place

To overcome these challenges, Agoda developed a centralized financial data pipeline known as FINUDP, which stands for Financial Unified Data Pipeline.

This system delivers both high data availability and data quality. Built on Apache Spark, FINUDP processes all financial data from millions of bookings each day. It makes this data reliably available to downstream teams for reconciliation, ledger, and financial activities.

The architecture consists of several key components.

Source tables contain raw data from upstream systems such as bookings and payments.

The execution layer is where the actual data processing happens, running on Apache Spark for distributed computing power. This layer sends email and Slack alerts when jobs fail and uses an internally developed tool called GoFresh to monitor if data is updated on schedule.

The data lake is where processed data is stored, with validation mechanisms ensuring data quality.

Finally, downstream teams (including Finance, Planning, and Ledger teams) consume the data for their specific needs.

See the diagram below:

For this centralized pipeline, three non-functional requirements stood out.

Data freshness: The pipeline is designed to update data every hour, aligned with strict service level agreements for downstream consumers. To ensure these targets are never missed, Agoda uses GoFresh, which monitors table update timestamps. If an update is delayed, the system flags it, prompting immediate action to prevent any breaches of these agreements.
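GoFresh is internal to Agoda and its interface is not public, so the sketch below only illustrates the idea described here: compare each table's last update timestamp against an allowed lag and flag anything stale. The table names and the one-hour limit are assumptions.

```python
from datetime import datetime, timedelta, timezone


def stale_tables(last_updated: dict[str, datetime], max_lag: timedelta,
                 now: datetime) -> list[str]:
    """Return the tables whose last update is older than the allowed lag."""
    return sorted(t for t, ts in last_updated.items() if now - ts > max_lag)


now = datetime(2026, 3, 4, 12, 0, tzinfo=timezone.utc)
updates = {
    "fin_bookings": now - timedelta(minutes=20),
    "fin_payments": now - timedelta(hours=2),  # missed its hourly refresh
}
print(stale_tables(updates, max_lag=timedelta(hours=1), now=now))  # ['fin_payments']
```

Passing `now` in explicitly keeps the check deterministic and easy to test; a scheduled job would pass the current UTC time.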

Reliability: Data quality checks run automatically as soon as new data lands in a table. A suite of automated validation checks investigates each column against predefined rules. Any violation triggers an alert, stopping the pipeline and allowing the team to address data issues before they cascade downstream.
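A minimal sketch of column-level checks that stop the pipeline on any violation. The rules and column names are hypothetical; the source does not describe the rule format Agoda actually uses.

```python
from typing import Callable

# Hypothetical per-column rules: each returns True when a value is valid.
RULES: dict[str, Callable[[object], bool]] = {
    "booking_id": lambda v: isinstance(v, int) and v > 0,
    "currency": lambda v: isinstance(v, str) and len(v) == 3,
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
}


class DataQualityError(Exception):
    """Raised to halt the pipeline before bad data reaches downstream tables."""


def validate(rows: list[dict]) -> None:
    violations = [
        f"row {i}: {col}={row.get(col)!r}"
        for i, row in enumerate(rows)
        for col, rule in RULES.items()
        if not rule(row.get(col))
    ]
    if violations:
        # A real pipeline would also send an alert here; this sketch only stops.
        raise DataQualityError("; ".join(violations))


validate([{"booking_id": 1, "currency": "USD", "amount": 12.5}])  # passes silently
try:
    validate([{"booking_id": 2, "currency": "US", "amount": -3}])
except DataQualityError as err:
    print(err)  # row 0: currency='US'; row 0: amount=-3
```

Raising an exception, rather than logging and continuing, is what keeps a bad batch from cascading into downstream tables.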

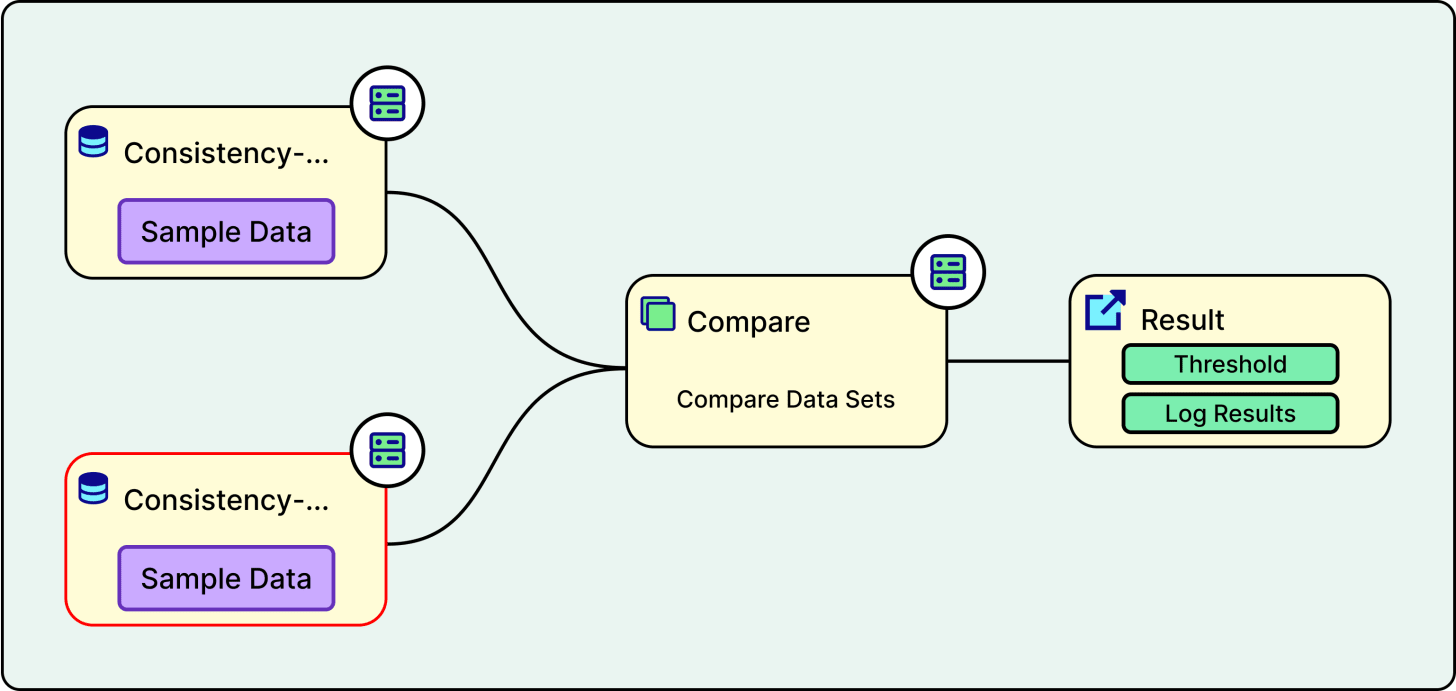

Maintainability: Each change begins with a strong, peer-reviewed design. Code reviews are mandatory, and all changes undergo shadow testing, which runs on real data in a non-production environment for comparison.

Agoda implemented several technical practices to ensure the reliability of FINUDP. Understanding these practices provides insight into how production-grade data systems are built and maintained.

Shadow testing is one of the most important practices. When a developer makes a change to the pipeline code, the system runs both the old version and the new version on the same production data in a test environment. The outputs from both versions are then compared, and a summary of the differences is shared directly within the code review process. This provides reviewers with clear visibility into the impact of proposed changes on the data. It is an excellent way to catch unexpected side effects before they reach production.
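The comparison step of shadow testing can be sketched as a keyed diff between the old and new outputs. The key column and the shape of the summary are assumptions for illustration; the source does not describe Agoda's diff tooling.

```python
def shadow_diff(old_rows: list[dict], new_rows: list[dict], key: str) -> dict:
    """Summarize how a new pipeline version's output differs from the old one."""
    old = {r[key]: r for r in old_rows}
    new = {r[key]: r for r in new_rows}
    common = old.keys() & new.keys()
    changed = sorted(k for k in common if old[k] != new[k])
    return {
        "only_in_old": sorted(old.keys() - new.keys()),
        "only_in_new": sorted(new.keys() - old.keys()),
        "changed": changed,
        "unchanged": len(common) - len(changed),
    }


old_out = [{"id": 1, "revenue": 100.0}, {"id": 2, "revenue": 60.0}, {"id": 3, "revenue": 5.0}]
new_out = [{"id": 1, "revenue": 100.0}, {"id": 2, "revenue": 80.0}, {"id": 4, "revenue": 9.0}]
print(shadow_diff(old_out, new_out, key="id"))
# {'only_in_old': [3], 'only_in_new': [4], 'changed': [2], 'unchanged': 1}
```

A summary like this, posted on the code review, shows reviewers at a glance that the change altered one existing row, dropped one, and added one.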

See the diagram below:

The staging environment serves as a safety net between development and production. It closely mirrors the production setup, allowing Agoda to test new features, pipeline logic, schema changes, and data transformations in a controlled setting before releasing them to all users. By running the full pipeline with realistic data in staging, the team can identify and resolve issues such as data quality problems, integration errors, or performance bottlenecks without risking the integrity of production data. This approach reduces the likelihood of unexpected failures and builds confidence that every change has been thoroughly validated before going live.

Proactive monitoring for data reliability includes several mechanisms.

Daily snapshots are taken to preserve historical states of the data.

Partition count checks ensure that all expected data partitions are present. A partition is a way of dividing large tables into smaller chunks, typically by date, so queries only need to scan relevant portions of the data.

Automated alerts detect spikes in corrupted data and check that row counts align with expectations.
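The partition-count and row-count checks in this list can be sketched as follows. Hourly partitions, the `date/hour` partition naming, and the 50% tolerance are assumptions for illustration.

```python
from datetime import date


def missing_partitions(present: set[str], day: date) -> list[str]:
    """Return the hourly partitions (e.g. '2026-03-04/07') missing for a day."""
    expected = [f"{day.isoformat()}/{h:02d}" for h in range(24)]
    return [p for p in expected if p not in present]


def row_count_ok(count: int, baseline: float, tolerance: float = 0.5) -> bool:
    """Return False when count drifts more than tolerance (default 50%) from baseline."""
    return abs(count - baseline) <= tolerance * baseline


day = date(2026, 3, 4)
present = {f"2026-03-04/{h:02d}" for h in range(24) if h != 7}
print(missing_partitions(present, day))     # ['2026-03-04/07']
print(row_count_ok(1_000, baseline=1_100))  # True
print(row_count_ok(5_000, baseline=1_100))  # False
```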

The multi-level alerting system ensures that failures are caught quickly and the right people are notified:

Email alerts are the first level, where any pipeline failure triggers an email to developers via the coordinator, enabling quick awareness and response.

Slack alerts form the second level, where a dedicated backend service monitors all running jobs and sends notifications about successes or failures directly to the development team’s Slack channels, categorized by hourly and daily frequency.

The third level involves GoFresh continuously checking the freshness of specific columns in target tables. If data is not updated within a predetermined timeframe, GoFresh notifies the Network Operations Center, a 24/7 support team that monitors all critical Agoda alerts, notifies the correct team, and coordinates emergency response sessions.

Data integrity is verified using a third-party data quality tool called Quilliup. Agoda executes predefined test cases that utilize SQL queries to compare data in target tables with their respective source tables. Quilliup measures the variation between source and target data and alerts the team if the difference exceeds a set threshold. This ensures consistency between the original data and its downstream representation.
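Quilliup is a third-party tool and its configuration is not shown in the source, so the sketch below only mirrors the idea: run an aggregate SQL query against a source table and a target table, then alert when the relative difference exceeds a threshold. The table names and the 0.1% threshold are hypothetical.

```python
import sqlite3


def relative_variance(conn: sqlite3.Connection, source_sql: str, target_sql: str) -> float:
    """Return |target - source| / |source| for two single-value queries."""
    src = conn.execute(source_sql).fetchone()[0]
    tgt = conn.execute(target_sql).fetchone()[0]
    if src == 0:
        return 0.0 if tgt == 0 else float("inf")
    return abs(tgt - src) / abs(src)


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_bookings (amount REAL);
    CREATE TABLE fin_bookings (amount REAL);
    INSERT INTO src_bookings VALUES (100.0), (250.0), (650.0);
    INSERT INTO fin_bookings VALUES (100.0), (250.0), (640.0);
""")
THRESHOLD = 0.001  # alert if totals differ by more than 0.1%
variance = relative_variance(conn, "SELECT SUM(amount) FROM src_bookings",
                             "SELECT SUM(amount) FROM fin_bookings")
if variance > THRESHOLD:
    print(f"ALERT: source/target variance {variance:.2%}")  # ALERT: source/target variance 1.00%
```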

Data contracts establish formal agreements with upstream teams that provide source data. These contracts define required data rules and structure. If incoming source data violates the contract, the source team is immediately alerted and asked to resolve the issue. There are two types of data contracts.

Detection contracts monitor real production data and alert when violations occur.

Preventative contracts are integrated into the continuous integration pipelines of upstream data producers, catching issues before data is even published.
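A data contract can be as simple as a declared schema plus rules that both sides agree on. This sketch uses a hypothetical contract for a payments feed; the same check could run on production data as a detection contract or in the producer's CI as a preventative contract. The field names and rules are assumptions.

```python
# Hypothetical contract for an upstream payments feed.
PAYMENTS_CONTRACT = {
    "payment_id": {"type": str, "required": True},
    "amount": {"type": float, "required": True, "min": 0.0},
    "currency": {"type": str, "required": True, "allowed": {"USD", "EUR", "THB"}},
}


def contract_violations(record: dict, contract: dict) -> list[str]:
    """Return human-readable contract violations for one record."""
    problems = []
    for field, spec in contract.items():
        if field not in record:
            if spec.get("required"):
                problems.append(f"missing {field}")
            continue
        value = record[field]
        if not isinstance(value, spec["type"]):
            problems.append(f"{field}: expected {spec['type'].__name__}")
        elif "min" in spec and value < spec["min"]:
            problems.append(f"{field}: below {spec['min']}")
        elif "allowed" in spec and value not in spec["allowed"]:
            problems.append(f"{field}: {value!r} not allowed")
    return problems


# In a producer's CI (preventative) a non-empty result would fail the build;
# on production data (detection) it would alert the source team.
print(contract_violations({"payment_id": "p1", "amount": -5.0, "currency": "JPY"},
                          PAYMENTS_CONTRACT))
# ['amount: below 0.0', "currency: 'JPY' not allowed"]
```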

Lastly, anomaly detection utilizes machine learning models to monitor data patterns and identify unusual fluctuations or spikes in the data. When anomalies are detected, the team investigates the root cause and provides feedback to improve model accuracy, distinguishing between valid alerts and false positives.
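The source says Agoda uses machine learning models for anomaly detection but does not describe them. As a much simpler stand-in, a z-score check flags a value that sits far outside its recent history; the three-standard-deviation threshold is a common default, assumed here.

```python
import statistics


def is_anomaly(history: list[float], value: float, z_threshold: float = 3.0) -> bool:
    """Flag value if it is more than z_threshold standard deviations from the mean."""
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > z_threshold


daily_revenue = [100.0, 102.0, 98.0, 101.0, 99.0, 100.0, 100.0]
print(is_anomaly(daily_revenue, 101.5))  # False
print(is_anomaly(daily_revenue, 140.0))  # True
```

When a flagged value turns out to be a legitimate change, such as a holiday spike, tuning the threshold or the history window plays the role of the feedback step that separates valid alerts from false positives.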

Throughout the journey of building FINUDP and migrating multiple data pipelines into one, Agoda encountered several key challenges:

Stakeholder management proved to be more complex than anticipated. Centralizing multiple data pipelines into a single platform meant that each output still served its own set of downstream consumers. This created a broad and diverse stakeholder landscape across product, finance, and engineering teams.

Performance issues emerged as a significant technical challenge. Bringing together several large pipelines and consolidating high-volume datasets into FINUDP initially incurred a performance cost. The end-to-end runtime was approximately five hours, which was far from ideal for daily financial reporting. Through a series of optimization cycles covering query tuning, partitioning strategy, pipeline orchestration, and infrastructure adjustments, Agoda was able to reduce the runtime from five hours to approximately 30 minutes.

Data inconsistency was perhaps the most conceptually challenging problem. Each legacy pipeline had evolved with its own set of assumptions, business rules, and data definitions. When these were merged into FINUDP, those differences surfaced as data inconsistencies and mismatches. To address this, Agoda went back to first principles. The team documented the intended meaning of each key metric and field, conducted detailed gap analyses comparing the outputs of different pipelines, and facilitated workshops with stakeholders to agree on a single definition for each dataset.

Centralizing data pipelines came with clear benefits but also required navigating key trade-offs between competing priorities:

The most significant trade-off involved velocity. In the previous decentralized approach, independent small datasets could move through their separate pipelines without waiting on each other. With the centralized setup, Agoda established data dependencies to ensure that all upstream datasets are ready before proceeding with the entire data pipeline.

Data quality requirements also affected development speed. Because the unified pipeline includes multiple data sources and components, a change to any one component requires testing and a data quality check of the whole pipeline. This slows development, but Agoda accepted the trade-off in exchange for the benefits of a unified pipeline: consistency, reliability, and a single source of truth.

Data governance became more rigorous and time-consuming. Migrating to a single pipeline required that every transformation be meticulously documented and reviewed. Since financial data is at stake, full alignment and approval from all stakeholders are needed before changing upstream processes. The need for thorough vetting and consensus slowed implementation, but ultimately built a foundation for trust and integrity in the new unified system. In essence, centralization increases reliability and transparency, but it demands tighter coordination and careful change management at every step.

Consolidating financial data pipelines at Agoda has made a real difference in how the company handles and trusts its financial metrics.

Through FINUDP, Agoda has established a single source of truth for all financial metrics. By introducing centralized monitoring, automated testing, and robust data quality checks, the team has significantly improved both the reliability and availability of data. This setup means downstream teams always have access to accurate and consistent information.

Last year, the data pipeline achieved 95.6% uptime (with a goal to reach 99.5% data availability). Maintaining such high data standards is always a work in progress, but with these systems in place, Agoda is better equipped to catch issues early and collaborate across teams.
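The arithmetic below shows how many hours of unavailability each of those two levels allows. It assumes availability is measured over a full 365-day year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours

def downtime_hours(availability_pct):
    """Hours per year a dataset may be unavailable at a given availability percentage."""
    return HOURS_PER_YEAR * (1 - availability_pct / 100)

for pct in (95.6, 99.5):
    print(f"{pct}% available -> about {downtime_hours(pct):.0f} hours unavailable per year")
```

Going from 95.6% to 99.5% means cutting yearly unavailability from roughly 385 hours to about 44.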


2026-03-03 00:30:50

npx workos launches an AI agent, powered by Claude, that reads your project, detects your framework, and writes a complete auth integration directly into your existing codebase. It’s not a template generator. It reads your code, understands your stack, and writes an integration that fits.

The WorkOS agent then typechecks and builds, feeding any errors back to itself to fix.

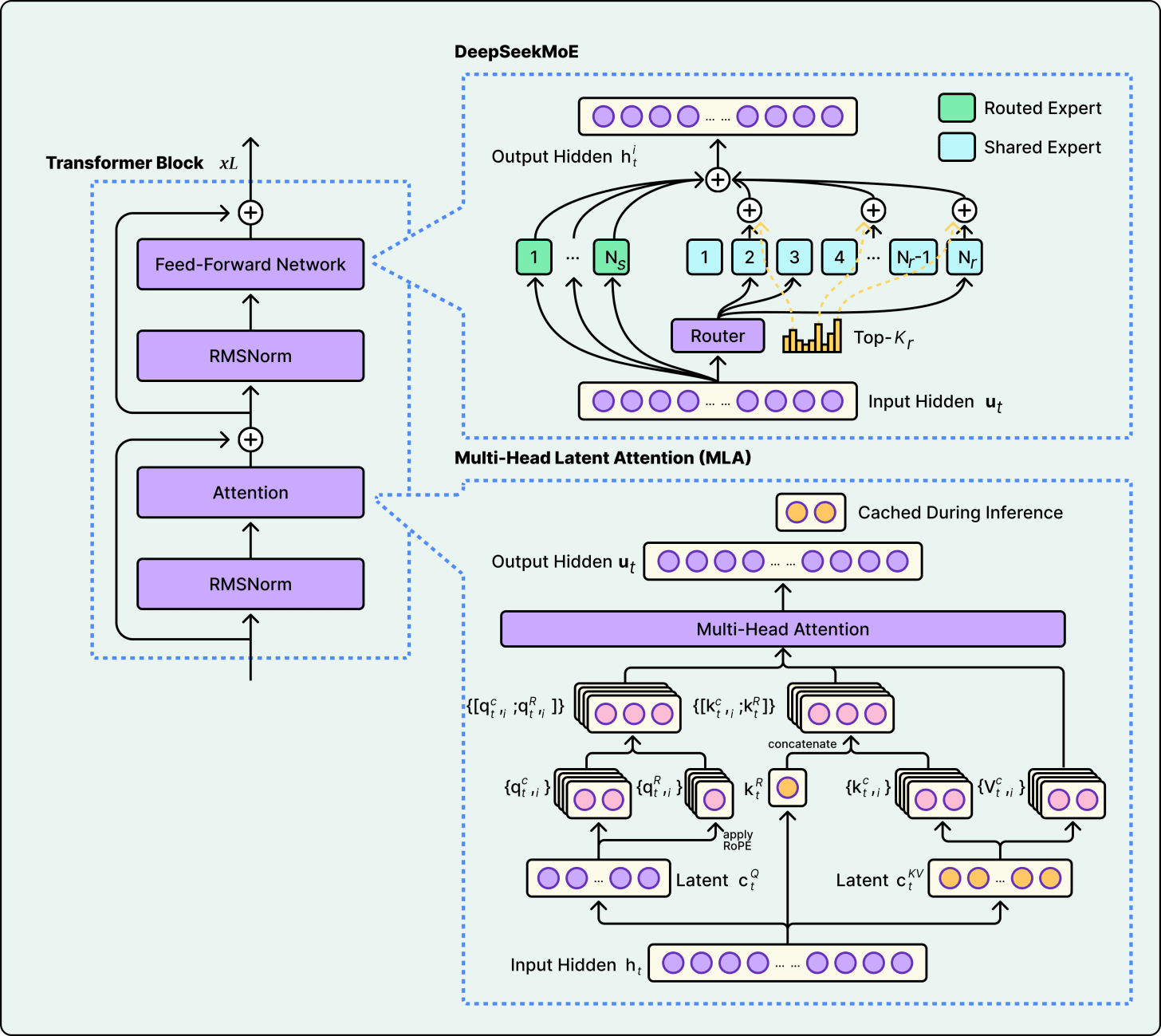

In December 2024, DeepSeek released V3 with the claim that it had trained a frontier-class model for $5.576 million. That figure covers the final training run, not prior research and experiments. DeepSeek used an attention mechanism called Multi-Head Latent Attention that slashed memory usage. A load-balancing strategy for expert routing avoided the usual performance penalty, and aggressive FP8 training cut costs further.

Within months, Moonshot AI’s Kimi K2 team openly adopted DeepSeek’s architecture as their starting point. They scaled it to a trillion parameters and invented a new optimizer to solve a training-stability problem that emerged at that scale. The resulting model was competitive across major benchmarks.

Then, in February 2026, Zhipu AI’s GLM-5 integrated DeepSeek’s sparse attention mechanism into their own design while contributing a novel reinforcement learning framework.

This is how the open-weight ecosystem actually works: teams build on each other’s innovations in public, and the pace of progress compounds. To understand why, you need to look at the architecture.

In this article, we will cover six leading open-weight models and the engineering bets that define each one.

Every major open-weight LLM released at the frontier in 2025 and 2026 uses a Mixture-of-Experts (MoE) transformer architecture.

See the diagram below that shows the concept of the MoE architecture:

The reason is that dense transformers activate all parameters for every token. If you add parameters to make a dense model smarter, the compute for every token grows in proportion. At hundreds of billions of parameters, this becomes prohibitive.

MoE solves this by replacing the monolithic feed-forward layer in each transformer block with multiple smaller “expert” networks and a learned router that decides which experts handle each token. The result is a model that can, for example, store the knowledge of 671 billion parameters but compute with only 37 billion per token.
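Here is a toy MoE layer in plain Python that makes the routing step concrete. It is a minimal sketch, not any specific model’s implementation. The router and experts are random rather than trained, and each “expert” is a single linear map instead of a full feed-forward network. The gates use a softmax over the top-k scores, which is one common variant among several.

```python
import math
import random

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

class ToyMoELayer:
    """Toy MoE feed-forward layer: a router picks top_k of num_experts for each token."""

    def __init__(self, dim, num_experts, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one scoring vector per expert (random here; learned in a real model).
        self.router = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
        # Each "expert" is one dim x dim linear map, standing in for a small FFN.
        self.experts = [[[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
                        for _ in range(num_experts)]

    def forward(self, x):
        scores = [sum(w * xi for w, xi in zip(row, x)) for row in self.router]
        chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.top_k]
        gates = softmax([scores[i] for i in chosen])  # weights over the chosen experts only
        out = [0.0] * len(x)
        for gate, e in zip(gates, chosen):  # only the chosen experts do any work
            for r, row in enumerate(self.experts[e]):
                out[r] += gate * sum(w * xi for w, xi in zip(row, x))
        return out, chosen

    def param_counts(self):
        per_expert = len(self.experts[0]) ** 2
        return per_expert * len(self.experts), per_expert * self.top_k  # (total, active)

layer = ToyMoELayer(dim=4, num_experts=8, top_k=2)
y, used = layer.forward([1.0, 0.5, -0.2, 0.3])
print("experts used for this token:", used)
print("expert params (total, active):", layer.param_counts())  # (128, 32)
```

All eight experts are stored, but only two run for this token.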

This is why two numbers matter for every model:

Total parameters (memory footprint, knowledge capacity)

Active parameters (inference speed, per-token cost)

Think of a specialist hospital with 384 doctors on staff, but only 8 in the room for any given patient. You benefit from the knowledge of 384 specialists while only paying for 8 at a time. The triage nurse (the router) decides who gets called.

This is also why a trillion-parameter model and a 235-billion-parameter model can cost roughly the same per query. Kimi K2 activates 32 billion parameters per token, while Qwen3’s 235-billion-parameter model activates 22 billion. When comparing per-query cost, compare the active counts, not the totals.
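A back-of-the-envelope check with the figures quoted in this article shows how small the active slice is. The Kimi K2 total is rounded to 1,000 billion here for simplicity.

```python
def active_share(total_b, active_b):
    """Fraction of an MoE model's parameters that run for each token."""
    return active_b / total_b

# (total, active) parameter counts in billions, as quoted above.
models = {"DeepSeek V3": (671, 37), "Kimi K2": (1000, 32), "Qwen3 235B": (235, 22)}
for name, (total, active) in models.items():
    print(f"{name}: {active}B of {total}B active ({active_share(total, active):.1%})")
```

The active shares come out to roughly 5.5%, 3.2%, and 9.4%. Per-token compute tracks the 37B, 32B, and 22B figures, not the totals.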

Take your meeting context to new places

If you’re already using Claude or ChatGPT for complex work, you know the drill: you feed it research docs, spreadsheets, project briefs... and then manually copy-paste meeting notes to give it the full picture.

What if your AI could just access your meeting context automatically?

Granola’s new Model Context Protocol (MCP) integration connects your meeting notes to your AI app of choice.

Ask Claude to review last week’s client meetings and update your CRM. Have ChatGPT extract tasks from multiple conversations and organize them in Linear. Turn meeting insights into automated workflows without missing a beat.

Perfect for engineers, PMs, and operators who want their AI to actually understand their work.

-> Try the MCP integration for free here or use the code BYTEBYTEGO

Almost every model marketed as “open source” is technically open weight. This means that the trained parameters are public, but the training data and often the full training code are not. In traditional software, however, “open source” means code is available, modifiable, and redistributable.

What does this mean in practice?

You can download, fine-tune, and commercially deploy all six of these models. However, you cannot see or audit their training data, and you cannot reproduce their training runs from scratch. For most engineering teams, the first part is what matters. But the distinction is worth knowing.

The license landscape also varies. DeepSeek V3 and GLM-5 use the MIT license, and Qwen3 and Mistral Large 3 use Apache 2.0. Both licenses are fully permissive for commercial use. Kimi K2 uses a modified MIT license. Llama 4 uses a custom community license that restricts usage for companies with over 700 million monthly active users and prohibits using the model to train competing models.

Transparency also varies. Some teams publish detailed technical reports with architecture diagrams, ablation studies, and hyperparameters. Others provide weights and a blog post with less architectural detail. More transparency enables the “borrow and build” dynamic described above, which is part of why it works.

Every time a model generates a token, it needs to “remember” keys and values for all previous tokens in the conversation. This storage, called the KV-cache, grows linearly with sequence length and becomes a memory bottleneck for long contexts. Different models use three different strategies to deal with it.
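The linear growth is easy to quantify. A common estimate per token is 2 (one key and one value) × layers × KV heads × head dimension × bytes per value. The config below is hypothetical. It was chosen only to show the scale for a model with standard multi-head attention.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2, batch=1):
    """Bytes needed to cache keys and values for every previous token."""
    per_token = 2 * num_layers * num_kv_heads * head_dim * bytes_per_value  # 2 = one K + one V
    return per_token * seq_len * batch

# Hypothetical config: 32 layers, 32 KV heads of size 128, fp16 values (2 bytes).
for seq_len in (4_096, 32_768, 131_072):
    gib = kv_cache_bytes(seq_len, 32, 32, 128) / 2**30
    print(f"{seq_len:>7} tokens -> {gib:.0f} GiB")
```

At 128K tokens, this hypothetical model needs 64 GiB of cache for a single sequence. That is why long-context models work so hard to shrink the cache.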

Grouped-Query Attention (GQA) shares key-value pairs across groups of query heads. It’s the industry default, offering straightforward implementation and moderate memory savings. Qwen3 and Llama 4 both use GQA.
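Here is a minimal sketch of the sharing pattern for one query position with a tiny head dimension. Query heads are split into equal groups, and every head in a group reads the same cached keys and values. The function names are illustrative. With 4 query heads and 2 KV heads, the cache holds half as many key-value vectors as standard multi-head attention would.

```python
import math

def attend(q, keys, values):
    """Scaled dot-product attention for one query vector over cached keys/values."""
    scale = 1 / math.sqrt(len(q))
    scores = [sum(a * b for a, b in zip(q, k)) * scale for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total for d in range(dim)]

def gqa_step(queries, kv_cache):
    """queries: one vector per query head; kv_cache: one (keys, values) pair per KV head.
    Each consecutive group of query heads shares a single cached KV head."""
    group_size = len(queries) // len(kv_cache)
    return [attend(q, *kv_cache[h // group_size]) for h, q in enumerate(queries)]

queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]  # 4 query heads
kv_cache = [  # 2 KV heads, each caching 3 previous tokens
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]),
    ([[0.0, 1.0], [1.0, 0.0], [1.0, -1.0]], [[0.0, 1.0], [1.0, 0.0], [2.0, 2.0]]),
]
outputs = gqa_step(queries, kv_cache)
print(len(outputs), "head outputs from", len(kv_cache), "cached KV heads")
```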

Multi-Head Latent Attention (MLA) compresses key-value pairs into a low-dimensional latent space before caching, then decompresses when needed. It was introduced in DeepSeek V2 and used in both DeepSeek V3 and Kimi K2. MLA saves more memory than GQA but adds computational overhead for the compress/decompress step.
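The sketch below illustrates only the caching idea. It stores one small latent vector per token, then rebuilds keys and values from it with up-projection matrices when attention needs them. It is a simplification with random weights and made-up dimensions. DeepSeek’s actual MLA includes further details, such as how positional information is handled, that are omitted here.

```python
import random

def matvec(matrix, vec):
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]

class ToyLatentKVCache:
    """Caches one small latent vector per token instead of full keys and values."""

    def __init__(self, d_model, d_latent, d_kv, seed=0):
        rng = random.Random(seed)
        self.w_down = rand_matrix(d_latent, d_model, rng)  # compress hidden state -> latent
        self.w_up_k = rand_matrix(d_kv, d_latent, rng)     # decompress latent -> key
        self.w_up_v = rand_matrix(d_kv, d_latent, rng)     # decompress latent -> value
        self.latents = []

    def append(self, hidden):
        self.latents.append(matvec(self.w_down, hidden))  # only the latent is stored

    def keys_values(self):
        # Extra compute at read time: rebuild keys and values from the latents.
        keys = [matvec(self.w_up_k, c) for c in self.latents]
        values = [matvec(self.w_up_v, c) for c in self.latents]
        return keys, values

cache = ToyLatentKVCache(d_model=64, d_latent=8, d_kv=64)
for _ in range(10):
    cache.append([1.0] * 64)
keys, values = cache.keys_values()
stored = sum(len(c) for c in cache.latents)                     # 10 tokens x 8 floats
full = sum(len(k) for k in keys) + sum(len(v) for v in values)  # 10 x (64 + 64)
print(f"cached {stored} floats instead of {full}")  # cached 80 floats instead of 1280
```

The price of the smaller cache is the extra matrix multiplications in `keys_values()`. This is the compress/decompress overhead mentioned above.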

Sparse Attention does not attend to every previous token. Instead, it selects only the most relevant ones. DeepSeek introduced DeepSeek Sparse Attention (DSA) in V3.2, and GLM-5 openly adopted DSA in its architecture. Sparse attention optimizes the attention layers while MoE optimizes the feed-forward layers, so the two techniques compound, and GLM-5 benefits from both.
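Here is a toy version of the selection step. It scores every cached token, keeps the top k, and runs softmax attention over only those. This is an illustration, not DSA. The selection here reuses the full attention score, so it saves nothing. A practical design needs a much cheaper way to choose tokens, and k would typically be far larger than 2.

```python
import math

def topk_sparse_attention(q, keys, values, k):
    """Attend only to the k cached tokens with the highest scores (toy selection rule)."""
    scores = [sum(a * b for a, b in zip(q, key)) / math.sqrt(len(q)) for key in keys]
    selected = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    m = max(scores[i] for i in selected)
    weights = {i: math.exp(scores[i] - m) for i in selected}  # softmax over selected only
    total = sum(weights.values())
    dim = len(values[0])
    out = [sum(weights[i] * values[i][d] for i in selected) / total for d in range(dim)]
    return out, sorted(selected)

keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
values = [[10.0], [20.0], [30.0], [40.0]]
out, used = topk_sparse_attention([1.0, 0.0], keys, values, k=2)
print(used)  # [0, 2]
```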

See the diagram below that shows DeepSeek’s Multi-Head Latent Attention approach:

The tradeoff comes down to what matters most in your deployment. GQA is simpler and proven. MLA is more memory-efficient but more complex to engineer. Sparse attention reduces compute for long contexts but requires careful design to avoid missing important tokens. The strategy a model chooses depends on whether the bottleneck is memory, compute, or context length.

The six models range from 16 to 384 experts, reflecting a fundamental disagreement about how far sparsity should be pushed.

Kimi K2’s technical report finds that, at a fixed compute budget, increasing the number of experts can improve both training and validation loss. However, the report also notes that this gain comes with increased infrastructure complexity. More total experts means more total parameters stored in memory, and Kimi K2’s trillion parameters require a multi-GPU cluster regardless of how few fire per token. By contrast, Llama 4 Scout’s 109 billion total parameters can fit on a single high-memory server.
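Rough arithmetic shows why total parameters dominate deployment. The sketch counts weight memory only and assumes 1 byte per parameter for FP8 and 2 bytes for BF16. The 8 × 80 GB server is a hypothetical reference point. KV-cache and activations would add more memory on top.

```python
def weights_gb(total_params_billion, bytes_per_param):
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return total_params_billion * bytes_per_param

NODE_GB = 8 * 80  # hypothetical server: 8 GPUs with 80 GB each

for name, params_b in (("Kimi K2", 1000), ("Llama 4 Scout", 109)):
    for precision, nbytes in (("FP8", 1), ("BF16", 2)):
        need = weights_gb(params_b, nbytes)
        verdict = "fits" if need <= NODE_GB else "does not fit"
        print(f"{name} @ {precision}: ~{need:,} GB of weights, {verdict} in {NODE_GB} GB")
```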

Two other design choices stand out:

First, the shared expert question. DeepSeek V3, Llama 4, and Kimi K2 include a shared expert that processes every token, providing a baseline capability floor. Qwen3’s technical report notes that, unlike the earlier Qwen2.5-MoE, Qwen3 drops the shared expert, but it doesn’t explain why. There is no consensus in the field on whether shared experts are worth the compute cost.

Second, Llama 4 takes a unique approach. Rather than making every layer MoE, Llama 4 alternates between dense and MoE layers, and it routes each token to only 1 expert (plus the shared expert) rather than 8. This means fewer active experts per token, but each expert is larger.
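This small sketch counts how many feed-forward networks one token passes through under each design. It assumes a simple interleaving in which every second layer is MoE; the exact ratio is an assumption here. It then compares one routed expert plus a shared expert against eight routed experts plus a shared expert in every layer.

```python
def layer_plan(num_layers, moe_every=2):
    """Label each layer dense or MoE; moe_every=2 alternates, moe_every=1 makes every layer MoE."""
    return ["moe" if (i + 1) % moe_every == 0 else "dense" for i in range(num_layers)]

def ffns_per_token(plan, routed_top_k, shared=1):
    """Feed-forward networks one token passes through: one per dense layer,
    shared + routed_top_k per MoE layer."""
    return sum(1 if kind == "dense" else shared + routed_top_k for kind in plan)

alternating = layer_plan(8)           # dense, moe, dense, moe, ...
all_moe = layer_plan(8, moe_every=1)  # moe in every layer
print(ffns_per_token(alternating, routed_top_k=1))  # 4 dense + 4 x (1 shared + 1 routed) = 12
print(ffns_per_token(all_moe, routed_top_k=8))      # 8 x (1 shared + 8 routed) = 72
```

The count is not a FLOP count. As described above, each of Llama 4’s experts is larger, so each of those 12 passes does more work.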

See the diagram below that shows Llama’s approach:

Architecture determines capacity, but training determines what a model actually does with it.

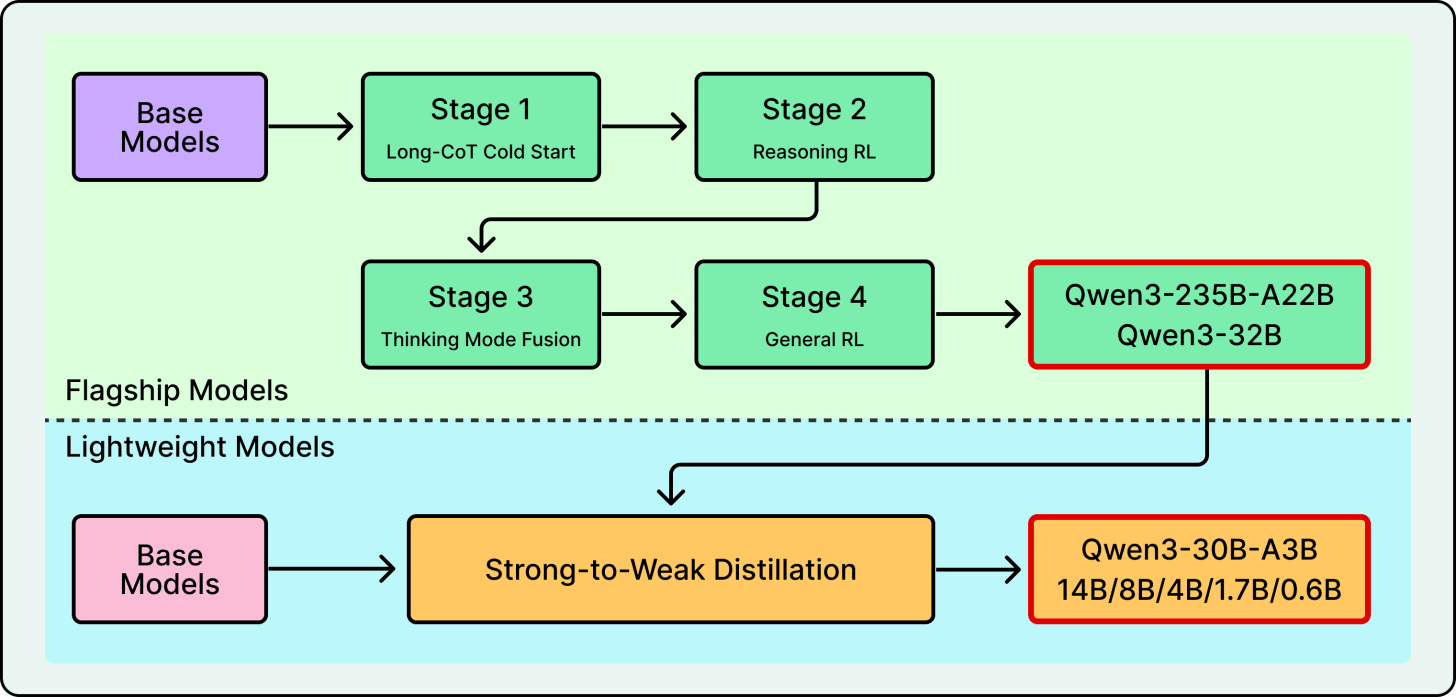

Pre-training, where the model learns by predicting the next token across trillions of tokens, gives the model its base knowledge. The scale varies (14.8 trillion tokens for DeepSeek V3, up to 36 trillion for Qwen3), but the approach is similar. Post-training is where models diverge, and it’s now the primary differentiator.

Reinforcement learning with verifiable rewards scores the model on whether its output is objectively correct.

Did the code compile? Is the math answer right? The model is rewarded for correct outputs and penalized for wrong ones. This was the breakthrough behind DeepSeek R1, and elements of it were distilled into DeepSeek V3.
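A verifiable reward can be a plain function. This minimal sketch assumes the model is prompted to end with “Answer: <number>”, which is a hypothetical output format. It returns 1.0 only when that answer matches the reference exactly. Production setups use more careful parsing, and some assign a negative reward to wrong answers instead of 0.

```python
from fractions import Fraction

def math_reward(model_output: str, reference: str) -> float:
    """1.0 if the text after the last 'Answer:' equals the reference numerically, else 0.0."""
    marker = "Answer:"
    if marker not in model_output:
        return 0.0  # no parseable answer earns nothing
    candidate = model_output.rsplit(marker, 1)[1].strip()
    try:
        return 1.0 if Fraction(candidate) == Fraction(reference) else 0.0
    except (ValueError, ZeroDivisionError):
        return 0.0  # malformed numbers count as wrong

print(math_reward("Half of the pie is left. Answer: 0.5", "1/2"))  # 1.0
print(math_reward("Answer: 0.4", "1/2"))                           # 0.0
```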

Distillation from a larger teacher means training a massive model and using its outputs to teach smaller ones. Llama 4 co-distilled from Behemoth, a 2-trillion-parameter teacher model, during pre-training itself. Qwen3 distills from its flagship down to the smaller models in the family.
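In the classic form of distillation, the student learns to match the teacher’s full next-token distribution, not just the single correct token. Below is a minimal sketch of that soft-target loss. It computes the KL divergence on temperature-softened probabilities, scaled by T², which is a standard choice from the knowledge-distillation literature. It is not Meta’s or Qwen’s exact recipe, which the article doesn’t specify.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions, scaled by T^2."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.5]
print(distillation_loss([4.0, 1.0, 0.5], teacher))       # 0.0: student matches teacher
print(distillation_loss([0.5, 1.0, 4.0], teacher) > 0)   # True: mismatch is penalized
```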

See the diagram below that shows Qwen’s post-training flow:

Synthetic agentic data involves building simulated environments loaded with real tools like APIs, shells, and databases, then rewarding the model for completing tasks in those environments. For example, Kimi K2’s technical report describes a large-scale pipeline that systematically generates tool-use demonstrations across simulated and real-world environments.
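Here is a deliberately tiny version of that loop, built on a hypothetical in-memory environment with two tools. A scripted function stands in for the model. The reward comes from checking the environment’s final state, not from grading the text of a reply.

```python
class ToyInventoryEnv:
    """Hypothetical simulated environment exposing two 'tools' over an in-memory database."""

    def __init__(self):
        self.db = {"widgets": 3}

    def call(self, tool, **kwargs):
        if tool == "get_stock":
            return self.db.get(kwargs["item"], 0)
        if tool == "restock":
            self.db[kwargs["item"]] = self.db.get(kwargs["item"], 0) + kwargs["qty"]
            return "ok"
        raise ValueError(f"unknown tool: {tool}")

def run_episode(policy, env, goal_item, goal_qty):
    """Execute the policy's tool calls, then reward 1.0 if the final state meets the goal."""
    for tool, kwargs in policy(env):
        env.call(tool, **kwargs)
    return 1.0 if env.db.get(goal_item, 0) >= goal_qty else 0.0

def scripted_policy(env):
    """Stands in for the model: check stock, then restock up to 10."""
    have = env.call("get_stock", item="widgets")
    if have < 10:
        yield "restock", {"item": "widgets", "qty": 10 - have}

print(run_episode(scripted_policy, ToyInventoryEnv(), "widgets", 10))  # 1.0
print(run_episode(lambda env: [], ToyInventoryEnv(), "widgets", 10))   # 0.0
```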

Novel RL infrastructure can be a contribution in itself. GLM-5 developed “Slime,” a new asynchronous reinforcement learning framework that improves training throughput for post-training, enabling more iterations within the same compute budget.
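Slime’s internals aren’t described here, so the sketch below shows only the general asynchronous pattern, not its design or API. Rollout workers keep generating trajectories into a queue while the trainer consumes batches and updates the policy. The trade-off is staleness: some trajectories were produced by an older policy version than the one being trained.

```python
import asyncio

async def rollout_worker(worker_id, queue, policy, n):
    """Generate trajectories with whatever policy version is current, without waiting for training."""
    for i in range(n):
        await asyncio.sleep(0)  # stands in for slow LLM generation
        await queue.put({"worker": worker_id, "episode": i, "policy_version": policy["version"]})

async def trainer(queue, policy, batch_size, num_batches):
    """Consume trajectories in batches and update the policy as soon as a batch is ready."""
    staleness = []
    for _ in range(num_batches):
        batch = [await queue.get() for _ in range(batch_size)]
        staleness.extend(policy["version"] - t["policy_version"] for t in batch)
        policy["version"] += 1  # stands in for a gradient step
    return staleness

async def main():
    queue = asyncio.Queue(maxsize=8)
    policy = {"version": 0}
    workers = [rollout_worker(w, queue, policy, n=6) for w in range(2)]  # 12 trajectories total
    staleness, *_ = await asyncio.gather(trainer(queue, policy, 4, 3), *workers)
    return policy["version"], staleness

version, staleness = asyncio.run(main())
print("policy updates:", version, "max staleness:", max(staleness))
```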

Training stability also deserves attention here. At this scale, a single training crash can waste days of GPU time. To counter this, Kimi K2 developed the MuonClip optimizer specifically to prevent exploding attention logits, enabling them to train on 15.5 trillion tokens without a single loss spike. DeepSeek V3 similarly reported zero irrecoverable loss spikes across its entire training run. These engineering contributions may prove more reusable than any particular architectural choice.
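The Kimi K2 report calls the core mechanism in MuonClip QK-Clip. When the largest attention logit exceeds a threshold τ, the query and key projection weights are shrunk so the logits come back under it. The sketch below is a simplified single-head version with made-up weights. It omits the Muon optimizer itself and the per-head and MLA-specific details.

```python
import math

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def max_logit(w_q, w_k, xs):
    """Largest pre-softmax attention logit q_i . k_j / sqrt(d) over all token pairs."""
    qs = [matvec(w_q, x) for x in xs]
    ks = [matvec(w_k, x) for x in xs]
    d = len(qs[0])
    return max(sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for q in qs for k in ks)

def scale(matrix, factor):
    return [[factor * a for a in row] for row in matrix]

def qk_clip(w_q, w_k, xs, tau):
    """If the max logit exceeds tau, shrink W_q and W_k by sqrt(tau / s_max) each.
    Logits are bilinear in W_q and W_k, so every logit shrinks by tau / s_max
    and the largest one lands on tau."""
    s_max = max_logit(w_q, w_k, xs)
    if s_max <= tau:
        return w_q, w_k  # nothing to do
    factor = math.sqrt(tau / s_max)
    return scale(w_q, factor), scale(w_k, factor)

w_q = [[3.0, 0.0], [0.0, 3.0]]
w_k = [[3.0, 0.0], [0.0, 3.0]]
xs = [[2.0, 1.0], [1.0, 2.0]]
print(round(max_logit(w_q, w_k, xs), 2))  # 31.82, above the threshold
new_q, new_k = qk_clip(w_q, w_k, xs, tau=10.0)
print(round(max_logit(new_q, new_k, xs), 2))  # 10.0
```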

Architectures are converging. Everyone is building MoE transformers. Training approaches are diverging, with teams placing different bets on reinforcement learning, distillation, synthetic data, and new optimizers.

The specific models covered here will be overtaken in months. However, the framework for evaluating them likely won’t change.

The important questions stay the same. What is the active parameter count, not just the total? What attention tradeoff did the team make, and does it match your context-length needs? How many experts fire per token, and can your infrastructure handle the total? How was the model post-trained, and does that approach align with your use case? What does the license actually permit?


2026-03-01 00:30:26

AI coding tools are fast, capable, and completely context-blind. Even with rules, skills, and MCP connections, they generate code that misses your conventions, ignores past decisions, and breaks patterns. You end up paying for that gap in rework and tokens.

Unblocked changes the economics.

It builds organizational context from your code, PR history, conversations, docs, and runtime signals. It maps relationships across systems, reconciles conflicting information, respects permissions, and surfaces what matters for the task at hand. Instead of guessing, agents operate with the same understanding as experienced engineers.

You can:

Generate plans, code, and reviews that reflect how your system actually works

Reduce costly retrieval loops and tool calls by providing better context up front

Spend less time correcting outputs for code that should have been right in the first place

This week’s system design refresher:

11 Ways To Use AI To Increase Your Productivity

AI Topics to Learn before Taking AI/ML Interviews

PostgreSQL versus MySQL

Why Does AI Need GPUs and TPUs?

Network Protocols Explained

AI is changing how we work. People who use AI get more done in less time. You do not need to code. You need to know which tool to use and when.

For example, instead of reading long technical blogs, you can upload them to Google’s NotebookLM and ask it to summarize the key points.

Or you can use Otter.ai to turn meeting transcripts into action items, decisions, and highlights.

Here is a list of 19 tools that can speed up your daily workflow across different areas. Save this for the next time you feel stuck getting started.

Over to you: What’s the underrated AI tool that others might not know about?

AI interviews often test fundamentals, not tools. This visual splits them into two buckets that show up repeatedly: Traditional AI and Modern AI.

Traditional AI focuses on fundamental ML topics, mostly from before neural networks became dominant.

Modern AI focuses on neural network foundations and newer concepts like transformers, RAG, and post-training.

Interviewers generally expect you to know both: how each technique works, when it breaks, and what its trade-offs are.

Use this as a checklist, and make sure you can explain each topic clearly before your next AI interview.

Over to you: which topic here do you find hardest to explain under interview pressure? What else is missing?

Built using the C language, PostgreSQL uses a process-based architecture.

You can think of it like a factory with a manager (Postmaster) coordinating specialized workers. Each connection gets its own process and shares a common memory pool. Background workers handle tasks like writing data, vacuuming, and logging independently.

MySQL takes a thread-based approach. Imagine a single multi-tasking brain.

It uses a layered design with one server handling multiple connections through threads. The magic happens using pluggable storage engines (such as InnoDB, MyISAM) that you can swap based on your needs.

Over to you: Which database do you prefer?

When AI processes data, it’s essentially multiplying massive arrays of numbers. This can mean billions of calculations happening simultaneously.

CPUs handle these calculations largely sequentially, a few at a time. For each one, the CPU must fetch an instruction, retrieve data from memory, execute the operation, and write the result back. This constant transfer of information between the processor and memory is not very efficient. It’s like having one very smart person solve a giant puzzle alone.

GPUs change the game with parallel processing. They split the work across thousands of simple cores that perform the same operation on different pieces of data at once, cutting processing time dramatically.

TPUs take this even further with a systolic array architecture built specifically for matrix multiplication. Each cell in the array holds a weight. It multiplies that weight by the input value flowing in, adds the product to a running sum flowing down its column, and passes the input on to its neighbor. Values move directly between neighboring cells instead of round-tripping through memory, which cuts I/O costs and reduces processing times even further.
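A simplified cycle-by-cycle simulation makes the dataflow concrete. It models a weight-stationary array computing a vector-matrix product. Each cell keeps one weight, inputs enter from the left edge staggered by one cycle per row, and finished sums leave the bottom of each column. Real TPUs are far larger and pipeline many inputs back to back. This sketch handles a single input vector.

```python
def systolic_matvec(x, w):
    """Cycle-by-cycle simulation of a weight-stationary systolic array computing y = x @ w.
    Cell (i, j) holds w[i][j]; x[i] enters row i (delayed by i cycles) and flows right;
    partial sums flow down each column and exit at the bottom."""
    rows, cols = len(w), len(w[0])
    x_out = [[None] * cols for _ in range(rows)]    # value each cell passes to its right neighbor
    sum_out = [[None] * cols for _ in range(rows)]  # partial sum each cell passes downward
    y = [None] * cols
    for cycle in range(rows + cols - 1):
        new_x = [[None] * cols for _ in range(rows)]
        new_sum = [[None] * cols for _ in range(rows)]
        for i in range(rows):
            for j in range(cols):
                if j == 0:
                    x_in = x[i] if cycle == i else None  # staggered injection at the left edge
                else:
                    x_in = x_out[i][j - 1]               # from the left neighbor, last cycle
                if x_in is None:
                    continue  # nothing reaches this cell on this cycle
                sum_in = 0.0 if i == 0 else sum_out[i - 1][j]  # from the cell above, last cycle
                new_x[i][j] = x_in
                new_sum[i][j] = sum_in + w[i][j] * x_in  # multiply by stored weight, accumulate
        x_out, sum_out = new_x, new_sum
        for j in range(cols):
            if sum_out[rows - 1][j] is not None:
                y[j] = sum_out[rows - 1][j]  # finished sum leaves the bottom of column j
    return y

print(systolic_matvec([1.0, 2.0, 3.0], [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))  # [4.0, 5.0]
```

No cell writes an intermediate result back to memory. Partial sums move only between neighbors, and that is where the I/O savings come from.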

Over to you: What else will you add to explain the need for GPUs and TPUs to run AI workloads?

Every time you type a URL and hit Enter, half a dozen network protocols quietly come to life. We usually talk about HTTP or HTTPS, but that’s just the tip of the iceberg. Under the hood, the web runs on a carefully layered stack of protocols, each solving a very specific problem.

At the top is HTTP. It defines the request-response model that powers browsers, APIs, and microservices. Simple, stateless, and everywhere.

When security is added, HTTP becomes HTTPS, wrapping every request in TLS so data is encrypted and the server is authenticated before anything meaningful is exchanged.

Before any of that can happen, DNS steps in. Humans think in domain names, machines think in IP addresses. DNS bridges that gap, resolving names into routable IPs so packets know where to go.
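In Python, the standard library’s `socket.getaddrinfo` asks the operating system’s resolver for the addresses behind a name. The example uses `localhost` so it runs offline. The answer therefore usually comes from the local hosts file rather than a DNS server. Swap in a real domain to trigger an actual DNS lookup.

```python
import socket

def resolve(hostname, port=443):
    """Return the sorted, de-duplicated IP addresses the system resolver reports for hostname."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})  # info[4] is the socket address tuple

print(resolve("localhost"))
```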

Then comes the transport layer. TCP sets up a connection, performs the three-way handshake, retransmits lost packets, and ensures everything arrives in order. It’s reliable, but that reliability comes with overhead.
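The handshake and retransmission logic live in the operating system, so application code never sees them. In this minimal loopback sketch, `create_connection()` returns only after the kernel has completed the three-way handshake. TCP guarantees that the echo arrives intact and in order.

```python
import socket
import threading

def recv_all(sock):
    """Read until the peer closes its sending side; TCP delivers a byte stream, not messages."""
    chunks = []
    while chunk := sock.recv(4096):
        chunks.append(chunk)
    return b"".join(chunks)

def tcp_echo_roundtrip(message: bytes) -> bytes:
    server = socket.create_server(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    port = server.getsockname()[1]

    def serve():
        conn, _ = server.accept()  # hands back a connection whose handshake is complete
        with conn:
            conn.sendall(recv_all(conn))  # echo everything back, then close

    worker = threading.Thread(target=serve)
    worker.start()
    # connect() returns once the kernel has finished SYN, SYN-ACK, ACK.
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(message)
        client.shutdown(socket.SHUT_WR)  # tell the server we're done sending
        reply = recv_all(client)
    worker.join()
    server.close()
    return reply

print(tcp_echo_roundtrip(b"hello over TCP"))  # b'hello over TCP'
```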

UDP skips all of that. No handshakes, no guarantees, just fast datagrams. That’s why it’s used for streaming, gaming, and newer protocols like QUIC.
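For contrast, here is UDP on the same loopback interface. There is no connection and no handshake, just a datagram sent to an address. The timeout is there because nothing guarantees the datagram arrives. On loopback it does in practice, but over a real network the application would have to handle loss itself.

```python
import socket

def udp_roundtrip(message: bytes) -> bytes:
    """Send one datagram to a loopback socket and read it back: no connection, no handshake."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
        receiver.bind(("127.0.0.1", 0))
        receiver.settimeout(2.0)  # no delivery guarantee, so never wait forever
        with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
            sender.sendto(message, receiver.getsockname())  # fire and forget
        data, _ = receiver.recvfrom(2048)
        return data

print(udp_roundtrip(b"hello over UDP"))  # b'hello over UDP'
```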

At the bottom sits IP. This is the postal system of the internet. It doesn’t care about reliability or order. Its only job is to move packets from one network to another through routers, hop by hop, until they reach the destination.

Each layer is deliberately limited in scope. DNS doesn’t care about encryption. TCP doesn’t care about HTTP semantics. IP doesn’t care if packets arrive at all. That separation is exactly why the internet scales.

Over to you: When something breaks, which layer do you usually blame first, DNS, TCP, or the application itself?

2026-02-27 00:30:39

If a database stores data on three servers in three different cities, and we write a new value, when exactly is that write “done”? Does it happen when the first server saves it? Or when all three have it? Or when two out of three confirm?
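A toy model makes the question concrete. It assumes three replicas, one of which is unreachable, and checks whether the same write counts as “done” under each rule from the questions above. It is illustrative only, not how any particular database implements replication.

```python
class Replica:
    def __init__(self, name, reachable=True):
        self.name = name
        self.reachable = reachable
        self.balance = None  # last value this replica has stored

def replicate_write(replicas, value, acks_required):
    """Apply the write on every reachable replica; report whether enough of them confirmed."""
    acks = 0
    for replica in replicas:
        if replica.reachable:
            replica.balance = value
            acks += 1
    return acks >= acks_required

replicas = [Replica("A"), Replica("B"), Replica("C", reachable=False)]  # C is cut off
n = len(replicas)
for rule, needed in (("first ack", 1), ("majority", n // 2 + 1), ("all", n)):
    print(f"{rule:>9}: {'done' if replicate_write(replicas, 500, needed) else 'not done'}")
print("a reader routed to C sees:", replicas[2].balance)  # None: it never got the write
```

Under “first ack” and “majority”, the write succeeds while replica C still holds the old value. A reader routed to C sees stale data unless reads are also coordinated. Under “all”, the write can’t be confirmed while C is unreachable.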

The answer to this question is quite important. Consider a simple bank transfer where we move $500 from savings to checking. We see the updated balance on our phone. But our partner, checking the same account from their laptop in another city, still sees the old balance. For a few seconds, the household has two different versions of the truth. For a like count on social media, that kind of temporary disagreement is harmless. For a bank balance, it’s a different story.

The guarantee that every reader sees the most recent write, no matter where or when they read, is what distributed systems engineers call strong consistency. It sounds straightforward. Making it work across machines, data centers, and continents is one of the hardest problems in distributed systems, because it requires those machines to coordinate, and coordination has a cost governed by physics.

In a previous article, we looked at eventual consistency. In this article, we will look at what strong consistency actually means, how systems deliver it, and what it really costs.