2026-01-28 02:38:37

Arm’s 7-series cores started out as the company’s highest performance offerings. After Arm introduced the performance oriented X-series, 7-series cores have increasingly moved into a density focused role. Like Intel’s E-Cores, Arm’s 7-series cores today derive their strength from numbers. A hybrid core setup can ideally use density-optimized cores to achieve high multithreaded performance at lower power and area costs than a uniform big-core design. The Cortex A725 is the latest in Arm’s 7-series, and arrives when Arm definitely wants a strong density optimized core. Arm would like SoC makers to license their cores rather than make their own. Arm probably hopes their big+little combination can compare well with Qualcomm’s custom cores. Beyond that, Arm’s efforts to expand into the x86-64 dominated laptop segment would benefit from a good density optimized core too.

Here, I’ll be looking into the Cortex A725 in Nvidia’s GB10. GB10 has ten A725 cores and ten X925 cores split across two clusters, with five of each core type in each. The A725 cores run at 2.8 GHz, while the high performance X925 cores reach 3.9 to 4 GHz. As covered before, one of GB10’s clusters has 8 MB of L3, while the other has 16 MB. GB10 will provide a look at A725’s core architecture, though implementation choices will obviously influence performance. For comparison, I’ll use older cores from Arm’s 7-series line as well as Intel’s recent Skymont E-Core.

A massive thanks to Dell for sending over two of their Pro Max with GB10 systems for testing. In our testing, the Dell Pro Max units stayed quite quiet even under a Linpack load, which speaks to Dell’s thermal design keeping the GB10 SoC within reasonable temperatures.

Cortex A725 is a 5-wide out-of-order core with reordering capacity roughly on par with Intel’s Skylake or AMD’s Zen 2. Its execution engine is therefore large enough to dip deep into the benefits of out-of-order execution, but stops well short of high performance core territory. The core connects to the rest of the system through Arm’s DynamIQ Shared Unit 120 (DSU-120) with 256-bit read and write paths. Arm’s DSU-120 acts as a cluster level interconnect and contains the shared L3 cache.

In Arm tradition, A725 offers a number of configuration options to let implementers fine tune area, power, and performance. These options include:

Cortex A710’s branch predictor already showed capabilities on par with older Intel high performance cores, and A725 inherits that strength. In a test where branch direction correlates with increasingly long random patterns, A725 trades blows with A715 rather than being an overall improvement. With a single branch, latencies start going up once the random pattern length exceeds 2048, versus 3072 on A710. With 512 branches in play, both A710 and A725 see higher latencies once the pattern length exceeds 32. However, A725’s latencies rise gradually, while A710’s jump abruptly.
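The pattern-length test can be sketched as a generator of the branch outcomes the predictor sees. This is a minimal illustration, not the actual microbenchmark: it assumes each branch repeats its own random taken/not-taken pattern every `pattern_len` iterations, and the function name, seed, and interleaving order are hypothetical.

```python
import random

def branch_directions(num_branches: int, pattern_len: int, iterations: int, seed: int = 0):
    """Return the taken (True) / not-taken (False) sequence seen by the predictor.

    Each branch gets its own random pattern of length `pattern_len`. The loop
    visits every branch once per iteration, so branch b on iteration i resolves
    to patterns[b][i % pattern_len].
    """
    rng = random.Random(seed)
    patterns = [[rng.random() < 0.5 for _ in range(pattern_len)]
                for _ in range(num_branches)]
    outcomes = []
    for i in range(iterations):
        for b in range(num_branches):
            outcomes.append(patterns[b][i % pattern_len])
    return outcomes

# 512 branches with 32-long patterns: the predictor must track 512 * 32 outcomes
seq = branch_directions(num_branches=512, pattern_len=32, iterations=64)
```

A predictor that learns every pattern has to effectively remember `num_branches * pattern_len` outcomes, which helps explain why the 512-branch case runs out of capacity at far shorter pattern lengths than the single-branch case.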

Arm’s 7-series cores historically used branch target buffers (BTBs) with very low cycle count latencies, which helped make up for their low clock speeds. A725’s BTB setup is fine on its own, but regresses compared to A710. Each BTB level is smaller than its A710 counterpart, and the larger BTB levels are slower too.

A725’s taken branch performance trades blows with Skymont. Tiny loops favor A725, because Skymont can’t do two taken branches per cycle. Moderate branch counts give Skymont a win, because Intel’s 1024 entry L1 BTB has single cycle latency, while getting a target out of A725’s 512 entry L1 BTB adds a pipeline bubble. Past that, both cores have similar cycle count latencies to an 8192 entry L2 BTB, but that favors Skymont because of its higher clock speed.

Returns from calls, which Arm implements with branch-with-link instructions, are predicted on A725 using a 16 entry return stack. A call+return pair has a latency of two cycles, so each branch likely executes with single cycle latency.
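A return stack works like a small hardware call stack: each call pushes its return address, and each return pops the predicted target. The sketch below models a 16 entry stack that overwrites its oldest entry on overflow. That overflow policy is an assumption; the text only gives the entry count.

```python
class ReturnStack:
    """Circular return-address stack; the oldest entry is overwritten on overflow (assumed policy)."""

    def __init__(self, entries: int = 16):
        self.entries = entries
        self.buf = [None] * entries
        self.top = 0      # index of the next free slot
        self.depth = 0    # number of valid entries, capped at `entries`

    def push(self, return_addr: int) -> None:
        self.buf[self.top] = return_addr
        self.top = (self.top + 1) % self.entries
        self.depth = min(self.depth + 1, self.entries)

    def pop(self):
        if self.depth == 0:
            return None   # stack exhausted: no prediction available
        self.top = (self.top - 1) % self.entries
        self.depth -= 1
        return self.buf[self.top]


def correct_return_predictions(call_depth: int, entries: int = 16) -> int:
    """Nest `call_depth` calls, unwind them, and count correctly predicted returns."""
    ras = ReturnStack(entries)
    addrs = [0x1000 + 4 * i for i in range(call_depth)]
    for a in addrs:
        ras.push(a)
    return sum(ras.pop() == a for a in reversed(addrs))

print(correct_return_predictions(20))  # 16
```

In this model, returns beyond the 16th nesting level have fallen off the stack, so they would need some other prediction source or be mispredicted.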

In SPEC CPU2017, A725 holds the line in workloads that are easy on the branch predictor while sometimes delivering improvements in difficult tests. 541.leela is the most impressive case. In that workload, A725 manages a 6.61% decrease in mispredicts per instruction compared to Neoverse N2. Neoverse N2 is a close relative of Cortex A710, and was tested here in Azure’s Cobalt 100. A725’s gains are less clear in SPEC CPU2017’s floating point workloads, where it trades blows with Neoverse N2. Still, it’s an overall win for A725 because SPEC’s floating point workloads are less branchy and put more emphasis on core throughput.

Comparing with Skymont is more difficult because there’s no way to match the instruction stream due to ISA differences. It’s safe to say the two cores land in the same ballpark, and both have excellent branch predictors.

After the branch predictor has decided where to go, the frontend has to fetch instructions and decode them into the core’s internal format. Arm’s cores decode instructions into macro-operations (MOPs), which can be split further into micro-operations (uOPs) in later pipeline stages. Older Arm cores could cache MOPs in a MOP cache, much like AMD and Intel’s high performance CPUs. A725 drops the MOP cache and relies exclusively on a conventional fetch and decode path for instruction delivery. The fetch and decode path can deliver 5 MOPs per cycle, which can correspond to 6 instructions if the frontend can fuse a pair of instructions into a single MOP. With a stream of NOPs, A725’s frontend delivers five instructions per cycle until test sizes spill out of the 32 entry instruction TLB. Frontend bandwidth then drops to under 2 IPC, and stays there until the test spills out of L3.

For comparison, A710 could use its MOP cache to hit 10 IPC if each MOP corresponds to a fused instruction pair. That’s easy to see with a stream of NOPs, because A710 can fuse pairs of NOPs into a single MOP. A725’s decoders can no longer fuse pairs of adjacent NOPs, though they still support other fusion cases like CMP+branch.
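The gap between 5 MOPs and 6 instructions per cycle can be illustrated with a greedy decode model. This sketch assumes a 5 MOP per cycle limit and at most one fused pair per cycle (consistent with the 6 instruction ceiling described above). The `FUSIBLE` set is a hypothetical stand-in containing only a compare+conditional branch pair; as on A725, NOP pairs are not fusible.

```python
FUSIBLE = {("cmp", "b.cond")}  # hypothetical fusion rule set, for illustration only

def cycles_to_deliver(instrs, mops_per_cycle=5, fusions_per_cycle=1):
    """Greedy model: each cycle emits up to `mops_per_cycle` MOPs, fusing an
    adjacent pair into one MOP when it is in FUSIBLE and the per-cycle fusion
    budget allows."""
    i, cycles = 0, 0
    while i < len(instrs):
        mops, fused = 0, 0
        while mops < mops_per_cycle and i < len(instrs):
            pair = tuple(instrs[i:i + 2])
            if fused < fusions_per_cycle and pair in FUSIBLE:
                i += 2          # two instructions become one MOP
                fused += 1
            else:
                i += 1          # one instruction, one MOP
            mops += 1
        cycles += 1
    return cycles

nops = ["nop"] * 60
pairs = ["cmp", "b.cond"] * 30
print(len(nops) / cycles_to_deliver(nops))    # 5.0
print(len(pairs) / cycles_to_deliver(pairs))  # 6.0
```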

Arm’s decision to ditch the MOP cache deserves discussion. I think it was long overdue, because Arm cores already took plenty of measures to mitigate decode costs. Chief among these is a predecode scheme. Instructions are partially decoded when they’re filled into the instruction cache. L1I caches on cores up to the Cortex A78 and Cortex X1 stored instructions in a 36 or 40-bit intermediate format. It’s a compromise between area-hungry micro-op caching and fully decoding instructions with every fetch. Combining predecode and micro-op caching is overkill, especially on low clocked cores where timing should be easier. Seeing A710 hit ridiculous throughput in an instruction bandwidth microbenchmark was fun, but it’s a corner case. Arm likely determined that the core rarely encountered more than one instruction fusion candidate per cycle, and I suspect they’re right.

A725’s Technical Reference Manual suggests Arm uses a different predecode scheme now, with 5 bits of “sideband” predecode data stored alongside each 32-bit aarch64 instruction. Sideband bit patterns indicate a valid opcode. Older Arm CPUs used the intermediate format to deal with the large and non-contiguous undefined instruction space. A725’s sideband bits likely cover the same purpose, along with aiding the decoders for valid instructions.

MOPs from the frontend go to the rename/allocate stage, which can handle five MOPs per cycle. Besides doing classical register renaming to remove false dependencies, modern CPUs can pull a variety of tricks to expose additional parallelism. A725 can recognize moving an immediate value of 0 to a register as a zeroing idiom, and doesn’t need to allocate a physical register to hold that instruction’s result. Move elimination is supported too, though A725 can’t eliminate chained MOVs across all renamer slots like AMD and Intel’s recent cores. In a test with independent MOVs, A725 allocates a physical register for every instruction. Arm’s move elimination possibly fails if the renamer encounters too many MOVs in the same cycle. That’s what happens on Intel’s Haswell, but I couldn’t verify it on A725 because Arm doesn’t have performance events to account for move elimination efficacy.
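Zeroing idioms and move elimination both work by updating the rename map instead of allocating a new physical register. The toy renamer below illustrates the generic technique, not Arm’s implementation: `mov xd, #0` maps the destination to a hypothetical always-zero physical register, and `mov xd, xs` points the destination at the source’s existing physical register. It has no per-cycle elimination limit, which is exactly the kind of restriction A725 might impose.

```python
class ToyRenamer:
    ZERO_PREG = 0  # hypothetical physical register that always reads as zero

    def __init__(self, num_arch_regs: int = 31):
        # Start with each architectural register x0..x30 mapped to its own physical register.
        self.map = {f"x{i}": i + 1 for i in range(num_arch_regs)}
        self.next_preg = num_arch_regs + 1
        self.allocations = 0
        # Reference counting needed to free shared physical registers is omitted.

    def _alloc(self) -> int:
        preg = self.next_preg
        self.next_preg += 1
        self.allocations += 1
        return preg

    def rename(self, op: str, dst: str, src=None) -> None:
        if op == "mov" and src == "#0":
            self.map[dst] = self.ZERO_PREG      # zeroing idiom: no allocation
        elif op == "mov" and src in self.map:
            self.map[dst] = self.map[src]       # move elimination: share the physical register
        else:
            self.map[dst] = self._alloc()       # ordinary result gets a fresh physical register

r = ToyRenamer()
r.rename("mov", "x1", "#0")
r.rename("mov", "x2", "x3")
r.rename("add", "x4")
print(r.allocations)  # 1
```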

Memory renaming is another form of move elimination, where the CPU carries out zero-cycle store forwarding if both the load and store use the same address register. Intel first did this on Ice Lake, and AMD first did so on Zen 2. Skymont has memory renaming too. I tested for but didn’t observe memory renaming on A725.

Arm historically took a conservative approach to growing reordering capacity on their 7-series cores. A larger reordering window helps the core run further ahead of a stalled instruction to extract instruction level parallelism. Increasing the reordering window can improve performance, but runs into diminishing returns. A725 gets a significant jump in reorder buffer capacity, from 160 to 224 entries. Reordering capacity is limited by whichever structure fills first, so sizing up other structures is necessary to make the most of the ROB size increase.
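The point that capacity is limited by whichever structure fills first can be made concrete. This sketch estimates the in-flight instruction limit as the minimum, over all tracked structures, of capacity divided by the fraction of instructions that need an entry in it. Only the 224 entry ROB comes from the text; the other capacities and the instruction mix are placeholders.

```python
def max_in_flight(capacities: dict, usage_fraction: dict) -> tuple:
    """Return (instructions in flight before the first structure fills, bottleneck name).

    capacities:      entries per structure
    usage_fraction:  fraction of instructions that consume one entry of it
    """
    limits = {
        name: cap / usage_fraction[name]
        for name, cap in capacities.items()
        if usage_fraction.get(name, 0) > 0
    }
    bottleneck = min(limits, key=limits.get)
    return limits[bottleneck], bottleneck

caps = {"rob": 224, "int_regs": 150, "loads": 80}   # only "rob" is from the text
mix = {"rob": 1.0, "int_regs": 0.8, "loads": 0.3}   # hypothetical instruction mix
print(max_in_flight(caps, mix))
```

With these made-up numbers the integer register file fills at roughly 187 instructions, well before the 224 entry ROB, which is why a bigger ROB pays off only when the other structures grow with it.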

A725 gets a larger integer register file and deeper memory ordering queues, but not all structures get the same treatment. The FP/vector register file has more entries, but has fewer renames available for 128-bit vector results. FP/vector register file entries are likely 64-bit now, compared to 128-bit from before. SVE predicate register capacity decreases slightly too. Vector execution tends to be area hungry and only benefits a subset of applications, so Arm seems to be de-prioritizing vector execution to favor density.

A725’s execution pipe layout looks a lot like A710’s. Four pipes service integer operations, and are each fed by a 20 entry scheduling queue. Two separate ports handle branches. I didn’t measure an increase in scheduling capacity when mixing branches and integer adds, so the branch ports likely share scheduling queues with the ALU ports. On A710, one of the pipes could only handle multi-cycle operations like madd. That changes with A725, and all four pipes can handle simple, single cycle operations. Integer multiplication remains a strong point in Arm’s 7-series architecture. A725 can complete two integer multiplies per cycle, and achieves two cycle latency for integer multiplies.
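The multiply figures also imply how much independent work the core needs to keep both multipliers busy. By Little’s law, operations in flight equal throughput times latency; this small sketch applies that to the figures above.

```python
def ops_in_flight(throughput_per_cycle: float, latency_cycles: float) -> float:
    """Little's law: independent operations needed to sustain full throughput."""
    return throughput_per_cycle * latency_cycles

# A725 integer multiply: 2 per cycle, 2 cycle latency (figures from the text)
print(ops_in_flight(2, 2))  # 4
```

A single chain of dependent multiplies, by contrast, completes only one multiply every two cycles.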

Two pipes handle floating point and vector operations. Arm has used a pair of mostly symmetrical FP/vector pipes dating back to the Cortex A57. A725 is a very different core, but a dual pipe FPU still looks like a decent balance for a density optimized core. Each FP/vector pipe is fed by a 16 entry scheduling queue, and both share a non-scheduling queue with approximately 23 entries. A single pipe can use up to 18 entries in the non-scheduling queue after its scheduler fills.

Compared to A710, A725 shrinks the FP scheduling queues and compensates with a larger non-scheduling queue. A non-scheduling queue is simpler than a scheduling one because it doesn’t have to check whether operations are ready to execute. It’s simply an in-order queue that sends MOPs to the scheduler when entries free up. A725 can’t examine as many FP/vector MOPs for execution readiness as A710 can, but retains similar ability to look past FP/vector operations in the instruction stream to find other independent operations. Re-balancing entries between the schedulers and non-scheduling queue is likely a power and area optimization.

A725 has a triple AGU pipe setup for memory address calculation, and seems to feed each pipe with a 16 entry scheduling queue. All three AGUs can handle loads, and two of them can handle stores. Indexed addressing carries no latency penalty.

Addresses from the AGU pipes head to the load/store unit, which ensures proper ordering for memory operations and carries out address translation to support virtual memory. Like prior Arm cores, A725 has a fast path for forwarding either half of a 64-bit store to a subsequent 32-bit load. This fast forwarding has 5 cycle latency per dependent store+load pair. Any other alignment gives 11 cycle latency, likely with the CPU blocking the load until the store can retire.

A725 is more sensitive to store alignment than A710, and seems to handle stores in 32 byte blocks. Store throughput decreases when crossing a 32B boundary, and takes another hit if the store crosses a 64B boundary as well. A710 takes the same penalty as A725 does at a 64B boundary, and no penalty at a 32B boundary.
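Whether a store crosses one of these boundaries depends only on its address and size. A minimal checker, assuming power-of-two block sizes and byte addresses; the penalty labels summarize the behavior described above.

```python
def crosses(addr: int, size: int, block: int) -> bool:
    """True if the bytes [addr, addr + size) span more than one `block`-byte block."""
    assert size > 0 and block & (block - 1) == 0, "block must be a power of two"
    return addr // block != (addr + size - 1) // block

def store_class(addr: int, size: int) -> str:
    # Any 64B crossing is also a 32B crossing, since 64 is a multiple of 32.
    if crosses(addr, size, 64):
        return "crosses 64B (penalized on A710 and A725)"
    if crosses(addr, size, 32):
        return "crosses 32B only (penalized on A725, not A710)"
    return "within a 32B block"

# A 16-byte store at a few offsets within a cache line
for offset in (0, 24, 56):
    print(offset, store_class(offset, 16))
```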

Arm grew the L1 DTLB from 32 entries in A710 to 48 entries in A725, and keeps it fully associative. DTLB coverage thus increases from 128 to 192 KB. Curiously, Arm did the reverse on the instruction side and shrank A710’s 48 entry instruction TLB to 32 entries on A725. Applications typically have larger data footprints than code footprints, so shuffling TLB entries to the data side could help performance. Intel’s Skymont takes the opposite approach, with a 48 entry DTLB and a 128 entry ITLB.

A725’s L2 TLB has 1536 entries and is 6-way set associative in its standard configuration, or has 1024 entries and is 4-way set associative in a reduced area configuration. The standard option is an upgrade over the 1024 entry 4-way structure in A710, while the area reduced one is no worse. Hitting the L2 TLB adds 5 cycles of latency over an L1 DTLB hit.
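TLB reach is entry count times page size. The sketch below reproduces the coverage figures above, assuming 4 KB pages and no coalescing.

```python
PAGE_KB = 4  # assumes 4 KB pages, no coalescing or larger pages

def reach_kb(entries: int, page_kb: int = PAGE_KB) -> int:
    return entries * page_kb

tlbs = {
    "A710 L1 DTLB": 32,
    "A725 L1 DTLB": 48,
    "A710 L1 ITLB": 48,
    "A725 L1 ITLB": 32,
    "A725 L2 TLB (standard)": 1536,
    "A725 L2 TLB (reduced area)": 1024,
}
for name, entries in tlbs.items():
    print(f"{name}: {reach_kb(entries)} KB")
```

Under these assumptions the L2 TLB covers 6 MB in its standard configuration and 4 MB in the reduced area one.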

Intel’s Skymont has a much larger 4096 entry L2 TLB, but A725 can make up some of the difference by tracking multiple 4 KB pages with a single TLB entry. Arm’s Technical Reference Manual describes a “MMU coalescing” setting that can be toggled via a control register. The register lists “8x32” and “4x2048” modes, but Arm doesn’t go into further detail about what they mean. Arm is most likely doing something similar to AMD’s “page smashing”. Cores in AMD’s Zen line can “smash” together pages that are consecutive in virtual and physical address spaces and have the same attributes. “Smashed” pages act like larger pages and opportunistically extend TLB coverage, while letting the CPU retain the flexibility and compatibility benefits of a smaller page size. “8x32” likely means A725 can combine eight consecutive 4 KB pages to let a TLB entry cover 32 KB. For comparison, AMD’s Zen 5 can combine four consecutive 4 KB pages to cover 16 KB.
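The coalescing condition described above (pages consecutive in both virtual and physical address space, with matching attributes) can be sketched as a check over a group of page table entries. The group size of eight, the 32 KB alignment requirement, and the `Pte` type are all assumptions based on the likely meaning of the “8x32” mode, not documented A725 behavior.

```python
from dataclasses import dataclass

PAGE = 4096
GROUP = 8  # assumed: eight 4 KB pages combine into one 32 KB TLB entry

@dataclass(frozen=True)
class Pte:  # hypothetical page table entry
    vpn: int    # virtual page number
    pfn: int    # physical frame number
    attrs: str  # permissions/memory type, compared as a whole

def can_coalesce(ptes: list) -> bool:
    """True if the group maps GROUP consecutive, aligned pages with identical attributes."""
    if len(ptes) != GROUP:
        return False
    first = ptes[0]
    if first.vpn % GROUP or first.pfn % GROUP:  # assumed: group must be 32 KB aligned
        return False
    return all(
        p.vpn == first.vpn + i and p.pfn == first.pfn + i and p.attrs == first.attrs
        for i, p in enumerate(ptes)
    )

good = [Pte(vpn=16 + i, pfn=800 + i, attrs="rw") for i in range(GROUP)]
print(can_coalesce(good))  # True: one entry could cover 8 * 4 KB = 32 KB
```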

A725 has a 64 KB 4-way set associative data cache. It’s divided into 16 banks to service multiple accesses, and achieves 4 cycle load-to-use latency. The L1D uses a pseudo-LRU replacement scheme, and like the L1D on many other CPUs, is virtually indexed and physically tagged (VIPT). Bandwidth-wise, the L1D can service three loads per cycle, all of which can be 128-bit vector loads. Stores go through at two per cycle. Curiously, Arm’s Technical Reference Manual says the L1D has four 64-bit write paths. However, sustaining four store instructions per cycle would be impossible with only two store AGUs.

The L2 cache is 8-way set associative, and has two banks. Nvidia selected the 512 KB option, which is the largest size that supports 9 cycle latency. Going to 1 MB would bump latency to 10 cycles, which is still quite good. Because L2 misses incur high latency costs, Arm uses a more sophisticated “dynamic biased” cache replacement policy. L2 bandwidth can reach 32 bytes per cycle, but curiously not with read accesses. With either a read-modify-write or write-only access pattern, A725 can sustain 32 bytes per cycle out to L3 sizes.
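Per-cycle bandwidth figures convert to GB/s by multiplying by clock speed. Using GB10’s 2.8 GHz A725 clock and the per-cycle figures from the last two paragraphs (16 bytes per 128-bit load), this sketch gives theoretical ceilings, not measured results.

```python
def gb_per_s(bytes_per_cycle: float, ghz: float) -> float:
    # bytes/cycle * (ghz * 1e9) cycles/s / 1e9 bytes/GB = bytes/cycle * ghz
    return bytes_per_cycle * ghz

A725_GHZ = 2.8  # GB10's A725 clock, from the text

l1d_loads = 3 * 16  # three 128-bit loads per cycle
l2_peak = 32        # L2, write-only or read-modify-write patterns

print(f"L1D loads: {gb_per_s(l1d_loads, A725_GHZ):.1f} GB/s")  # 134.4
print(f"L2:        {gb_per_s(l2_peak, A725_GHZ):.1f} GB/s")    # 89.6
```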

Arm’s optimization guide details A725’s cache performance characteristics. Latencies beyond L2 rely on assumptions that don’t match GB10’s design, but the figures do emphasize the expensive nature of core to core transfers. Reaching out to another core incurs a significant penalty at the DSU compared to getting data from L3, and takes several times longer than hitting in core-private caches. Another interesting detail is that hitting in a peer core’s L2 gives better latency than hitting in its L1. A725’s L2 is strictly inclusive of L1D contents, which means L2 tags could be used as a snoop filter for the L1D. Arm’s figures suggest the core first checks L2 when it receives a snoop, and only probes L1D after an L2 hit.
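The snoop order suggested by Arm’s figures can be sketched as follows. Because L2 is strictly inclusive of L1D, an L2 tag miss proves the line is absent from L1D too, so only L2 hits need an L1D probe. Representing each cache as a set of line addresses is a simplification for illustration.

```python
def handle_snoop(line_addr: int, l2_lines: set, l1d_lines: set):
    """Return (where the line was found, whether L1D was probed), checking L2 tags first.

    Assumes strict inclusion: every line in l1d_lines is also in l2_lines.
    """
    if not l1d_lines <= l2_lines:
        raise ValueError("L2 must be inclusive of L1D")
    if line_addr not in l2_lines:
        return "miss", False   # filtered by L2 tags: L1D is never disturbed
    # L2 hit: the line may also live in L1D, so probe it
    if line_addr in l1d_lines:
        return "l1d", True
    return "l2", True

print(handle_snoop(0x200, {0x100}, set()))  # ('miss', False)
```

Since the L1D probe only happens after the L2 lookup, a line found in a peer’s L1D pays for both steps, which fits the latency ordering in Arm’s figures.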

A725’s SPEC CPU2017 performance brackets Intel’s Crestmont as tested in their Meteor Lake mobile design. Neoverse N2 scores slightly higher. Core performance is heavily dependent on how it’s implemented, and low clock speeds in GB10’s implementation mean its A725 cores struggle to climb past older density optimized designs.

Clock speed tends to heavily influence core-bound workloads. 548.exchange2 is one example. Excellent core-private cache hitrates mean IPC (instructions per cycle) doesn’t change with clock speed. A725 manages a 10.9% IPC increase over Neoverse N2, showing that Arm has improved their core architecture. However, Neoverse N2’s 3.4 GHz clock speed translates to a 17% performance advantage over A725. Crestmont presents a more difficult comparison because of different executed instruction counts. 548.exchange2 executed 1.93 trillion instructions on the x86-64 cores, but required 2.12 trillion on aarch64 cores. A725 still achieves higher clock normalized performance, but Crestmont’s 3.8 GHz clock speed puts it 14.5% ahead. Other high IPC, core bound workloads show a similar trend. Crestmont chokes on something in 538.imagick though.

On the other hand, clock speed means little in workloads that suffer a lot of last level cache misses. DRAM doesn’t keep pace with clock speed increases, meaning that IPC gets lower as clock speed gets higher. A725 remains very competitive in those workloads despite its lower clock speed. In 520.omnetpp, it matches the Neoverse N2 and takes a 20% lead over Crestmont. A725 even gets close to Skymont’s performance in 549.fotonik3d.

A725 only gains entries in the most important out-of-order structures compared to A710. Elsewhere, Arm preferred to rebalance resources, or even cut back on them. It’s impressive to see Arm trim the fat on a core that didn’t have a lot of fat to begin with, and it’s hard to argue with the results. A725’s architecture looks like an overall upgrade over A710 and Neoverse N2. I suspect A725 would come out on top if both ran at the same clock speeds and were supported by identical memory subsystems. In GB10 though, the A725 cores don’t clock high enough to beat older designs.

Arm’s core design and Nvidia’s implementation choices contrast with AMD and Intel’s density optimized strategies. Intel’s Skymont is almost a P-Core, and only makes concessions in the most power and area hungry features like vector execution. AMD takes their high performance architecture and targets lower clock speeds, taking whatever density gains they can get in the process. Now that Arm’s cores are moving into higher performance devices like Nvidia’s GB10, it’ll be interesting to see how their density-optimized strategy plays out against Intel and AMD’s.

Again, a massive thank you to Dell for sending over the two Pro Max with GB10s for testing.

If you like the content then consider heading over to the Patreon or PayPal if you want to toss a few bucks to Chips and Cheese, also consider joining the Discord.

2026-01-07 05:32:40

Hello you fine Internet folks,

Here at CES 2026, AMD showed off their upcoming Venice series of server CPUs and their upcoming MI400 series of datacenter accelerators. AMD talked about the specifications of both Venice and the MI400 series at their Advancing AI event back in June 2025, but this is the first time AMD has shown off the silicon for both product lines.

Starting with Venice, the first thing to notice is that the packaging connecting the CCDs to the IO dies is different. Instead of running the wires between the CCDs and the IO dies through the package’s organic substrate, as AMD has done since EPYC Rome, Venice appears to use a more advanced form of packaging similar to Strix Halo or MI250X. Another change is that Venice appears to have two IO dies instead of the single IO die that prior EPYC CPUs had.

Venice has 8 CCDs, each with 32 cores, for a total of up to 256 cores per Venice package. Measuring each of the dies, each CCD comes out to approximately 165mm² of N2 silicon. If AMD has stuck to 4MB of L3 per core, then each of these CCDs has 32 Zen 6 cores and 128MB of L3 cache, along with the die to die interface for CCD <-> IO die communication. At approximately 165mm² per CCD, a Zen 6 core plus its 4MB of L3 comes to about 5mm², which is similar to Zen 5’s approximately 5.34mm² on N3 when counting both the Zen 5 core and 4MB of L3 cache.
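The per-core estimate is straightforward division. This sketch uses the measured ~165mm² CCD and the same assumption as above of 32 cores with 4MB of L3 each; the die to die interface is not subtracted, so the per-core figure slightly overstates the core plus L3 area.

```python
ccd_mm2 = 165.0      # measured CCD area (approximate)
cores_per_ccd = 32
l3_mb_per_core = 4   # assumption: AMD keeps 4MB of L3 per core
ccds = 8

area_per_core = ccd_mm2 / cores_per_ccd
l3_per_ccd = cores_per_ccd * l3_mb_per_core
total_cores = ccds * cores_per_ccd
print(f"{area_per_core:.2f} mm² per core + L3 slice, {l3_per_ccd} MB L3 per CCD")
# 5.16 mm² per core + L3 slice, 128 MB L3 per CCD
```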

Moving to the IO dies, they each appear to be approximately 353mm² for a total of just over 700mm² of silicon dedicated to the IO dies. This is a massive increase from the approximately 400mm² that prior EPYC CPUs dedicated to their IO dies. The two IO dies appear to use some form of advanced packaging, similar to the CCDs. Next to the IO dies are 8 small dies, 4 on each side of the package, which are likely either structural silicon or deep trench capacitor dies meant to improve power delivery to the CCDs and IO dies.

Shifting from Venice to the MI400 accelerator, this is a massive package with 12 HBM4 dies and “twelve 2 nanometer and 3 nanometer compute and IO dies”. It appears as if there are two base dies, just like MI350. But unlike MI350, there also appear to be two extra dies on the top and bottom of the base dies. These two extra dies are likely for off-package IO such as PCIe, UALink, etc.

Calculating the die sizes of the base dies and the IO dies, each of the two base dies is approximately 747mm², with the off-package IO dies each being approximately 220mm². As for the compute dies, the packaging precludes any visual demarcation between them, but it is likely that there are 8 compute dies, with 4 on each base die. So while we can’t figure out the exact size of the compute dies, the maximum size is approximately 180mm². The compute chiplet is likely in the 140mm² to 160mm² range, but that is a best guess that will have to wait for confirmation.

The MI455X and Venice are the two SoCs that will power AMD’s Helios AI rack, but they aren’t the only new Zen 6 and MI400 series products that AMD announced at CES. AMD announced a third member of the MI400 family, the MI440X, joining the MI430X and MI455X. The MI440X is designed to fit into 8-way UBB boxes as a direct replacement for the MI300/350 series.

AMD also announced Venice-X, which is likely going to be a V-Cache version of Venice. This is interesting because not only did AMD skip Turin-X, but if there is a 256 core version of Venice-X, this would be the first time that a high core count CCD supports a V-Cache die. If AMD sticks to the same ratio of base die cache to V-Cache die cache, then each 32 core CCD would have up to 384MB of L3 cache, which equates to 3GB of L3 cache across the chip.
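The 384MB figure follows from the ratio on current X3D parts, where a 64MB V-Cache die sits on a CCD with 32MB of L3, so stacked cache is twice the base die L3. Assuming that ratio carries over to a 128MB Venice CCD:

```python
base_l3_mb = 128         # per 32 core Venice CCD (assumes 4MB per core)
vcache_ratio = 64 / 32   # stacked : base cache on current X3D CCDs (64MB on 32MB)
ccds = 8

per_ccd_mb = base_l3_mb * (1 + vcache_ratio)
total_gb = per_ccd_mb * ccds / 1024
print(per_ccd_mb, total_gb)  # 384.0 3.0
```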

Both Venice and the MI400 series are due to launch later this year and I can’t wait to learn more about the underlying architectures of both SoCs.


2026-01-05 05:31:26

Hello you fine Internet folks,

Today we are talking about Qualcomm's upcoming X2 GPU architecture with Eric Demers, Qualcomm's GPU team lead. We talk about the changes from the prior X1 generation of GPUs, along with why some of those changes were made.

Hope y'all enjoy!

The transcript below has been edited for readability and conciseness.

George: Hello you fine Internet Folks. We’re here in San Diego at Qualcomm headquarters at their first architecture day and I have with me Eric Demers. What do you do?

Eric: I am an employee here at Qualcomm, I lead our GPU team, that’s most of the hardware and some of the software. It’s all in one and it’s a great team we’ve put together and I have been here for fourteen years.

George: Give us a quick 60 second boildown of what is new in the X2 GPU versus the X1 GPU.

Eric: Well, it’s the next generation for us, meaning that we looked at everything we were building on the X1 and we said “we can do one better”, and fundamentally improved the performance. As I shared with you, it is a noticeable improvement in performance but not necessarily the same increase in power. So it is a very power-efficient core: you effectively get much more performance at a slightly higher power cost, as opposed to doubling the performance and doubling the power cost. So that’s one big improvement. Second, we wanted to be a full-featured DirectX 12.2 Ultimate part, with all the features of DirectX 12.2 that most GPUs on Windows already support, so we’re part of that gang.

George: And in terms of API, what will you support for the X2 GPU?

Eric: Obviously we’ll have DirectX 12.2 and all the DirectX versions behind that, so we’ll be fully compatible there. But we also plan to introduce native Vulkan 1.4 support. There’s a version of that which Windows supplies, but we’ll be supplying a native version that is the same codebase as we use for our other products. We’ll also be introducing native OpenCL 3.0 support, also as used by our other products. And then in the first quarter of 2026 we’d like to introduce SYCL support, and SYCL is a higher-end compute-focused API and shading language for a GPU. It’s an open standard, other companies support it, and it helps us attack some of the GPGPU use-cases that exist on Windows for Snapdragon.

George: Awesome, going into the architecture a little bit. With the X2 GPU, you now have what’s called HPM or High-Performance Memory? What exactly is that for and how is it set up in hardware?

Eric: So our GPUs have always had memory attached to them. We were a tiling architecture to start with on mobile, which minimises memory bandwidth requirements, and it does that by keeping as much on chip as possible before you send it to DRAM. Well, we’ve taken that to the next level and said “let’s make it really big”: instead of tiling the screen, just render it normally, but render it to an on-chip SRAM that is big enough to actually hold that whole surface or most of that surface. So particularly for the X2 Extreme Edition, we can do a QHD+ resolution or 1600p resolution, 2K resolutions, all natively on die, and all that rendering, so the color ROPs, all the Z-buffer, all of that is done at full speed on die and doesn’t use any DRAM bandwidth. That frees the bandwidth up for reading on the GPU, CPU, or NPU. It gives you a performance boost, but saving all that bandwidth gives you a power boost as well, so it’s a performance per Watt improvement for us. It isn’t just for rendering; it’s a general purpose large memory attached to your GPU, so you can use it for compute. You could render to it and then do post-processing with it still in the HPM using your shaders. There’s all types of flexible use-cases that we’ve come up with or that we will come up with over time.

George: Awesome, going into the HPM, there’s 21 megabytes of HPM on an X2-90 GPU. How is that split up? Is it 5.25 MB per slice and that slice can only access that 5.25 MB or is it shared across the whole die?

Eric: So physically it is implemented per slice. So we have 5.25 MB per slice. But no, there’s a full crossbar because if you think about it as a frame buffer there’s abilities to go fetch out of anywhere. You have random access to the whole surface from any of the slices. We have a full crossbar at full bandwidth that allows the HPM to be used by any of the slices, even though it is physically implemented inside the slice.

George: And how is the HPM set up? Is it a cache, or is it a scratchpad?

Eric: So the answer to that is yes, it is under software control. You can allocate parts of it to be a cache. Up to 3 MB of color and Z-cache. Roughly 1/3rd to 2/3rds and that’s typically if you’re rendering to DRAM then you’ll use it as a cache. The cache there is for the granularity fetches so you’ll prefetch data and bring them on chip and decompress the data and pull it into the HPM and use it locally as much as you can then cache it back out when you evict that cache line. The rest of the HPM will then be reserved for software use. It could be used to store nothing, it could be used to store render targets, you could have multiple render targets, it could store just Z-buffers, just color buffers. It could store textures from a previous render that you’re going to use as part of the rendering. It’s really a general scratchpad in that sense that you can use as you see fit, from both an application and a driver standpoint.

George: What was the consideration to allow only three megabytes of the SRAM to be used as cache and leave the other 2.25 MB as a sort of scratchpad SRAM? Why not allow all of it to be cache?

Eric: Yeah, that’s actually a question that we asked ourselves internally. First of all, making everything cache with the granularity we have has a cost. Your tag memory, which is the memory that tells you what’s going on in your cache, grows with the size of your cache. So there’s a cost for that; it’s not like it’s free and we could just throw it in. There’s actually a substantial cost because it also affects your timing: you have to do a tag lookup, and the bigger your tag is, the more complex the design has to be. So there’s a desired limit beyond which it’s much more work and more cost to put in. But we looked at it, and what we found is that if you hold the whole frame buffer in HPM, you get 100% efficiency. But as soon as you drop below holding all of it, your efficiency flattens out: your cache hit rate doesn’t get much worse unless the cache gets really small, and it doesn’t get much better until you get to the full size. So there’s a plateau, and what we found is that right at the bottom of that plateau is roughly the size that we planned. So it gets really good cache hit-rates, and if we had made it twice as big it wouldn’t have been much better but it would have been costlier, so we made the right trade-off on this particular design. It may be something we revisit in the future, particularly if the use-cases for HPM change, but for now it’s the right balance for us.
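For reference, the HPM numbers discussed above work out as follows. This takes George’s framing that the 3 MB cache limit applies per slice, which Eric did not state explicitly.

```python
hpm_total_mb = 21.0    # X2-90 GPU
slices = 4             # implied by 21 MB total at 5.25 MB per slice
cache_limit_mb = 3.0   # max color/Z cache (per-slice reading assumed)

per_slice = hpm_total_mb / slices
scratchpad_min = per_slice - cache_limit_mb
print(f"{per_slice} MB per slice, at least {scratchpad_min} MB scratchpad, "
      f"cache up to {cache_limit_mb / per_slice:.0%} of a slice")
# 5.25 MB per slice, at least 2.25 MB scratchpad, cache up to 57% of a slice
```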

George: And sort of digging even deeper into the slice architecture. Previously, you had a micro-SP, which is part of the slice. There’s two micro-SPs per SP and then there’s two SPs per slice.

Eric: Yeah.

George: The micro-SP would issue wave128 instructions and now you have moved to wave64, but you still have 128 ALUs in a micro-SP. How are you dealing with issuing only 64 threads per wave to 128 ALUs?

Eric: I am not sure this is very different from others, but we dual-issue. So we will dual-issue two waves at a time to keep all those ALUs busy. In a way it just means that we’re able to deal with wave64, but we’re also more efficient because they are wave64. If you do a branch, the smaller the wave, the less granularity loss you’ll have, and the fewer of the potential branches you have to take. Wave64 is generally a more efficient use of resources than one large wave, and in fact two waves are more efficient than one wave, so for us keeping more waves in flight is simply an efficiency improvement, even if it doesn’t affect your peak throughput, for example. But it comes with overhead: you have to have more context, more information, more meta information on the side to have two waves in flight. One of the consequences is in the GPRs, our general purpose registers, where the data for the waves is stored. We had to grow them roughly 30%, from 96k to 128k. We grew that in part to have more waves, both to deal with the dual-issue and because just having more waves is generally more efficient.
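Eric’s point about branch granularity can be illustrated with a simple SIMT model: a wave must execute every path that at least one of its threads takes, with the other lanes masked off. The sketch compares lane utilization for wave64 and wave128 on the same per-thread branch outcomes. The outcome pattern is hypothetical, both paths are assumed to cost the same, and reconvergence details are ignored.

```python
def lane_utilization(taken: list, wave_size: int) -> float:
    """Fraction of issued lane slots doing useful work for a two-way branch.

    Each wave issues the taken path if any thread takes it, and the not-taken
    path if any thread falls through; each issued path occupies wave_size lanes.
    Assumes len(taken) is a multiple of wave_size.
    """
    useful = len(taken)  # every thread executes exactly one path
    issued = 0
    for start in range(0, len(taken), wave_size):
        wave = taken[start:start + wave_size]
        paths = int(any(wave)) + int(not all(wave))
        issued += paths * wave_size
    return useful / issued

# Threads 0-63 take the branch, threads 64-127 don't (hypothetical pattern)
outcomes = [True] * 64 + [False] * 64
print(lane_utilization(outcomes, 128))  # 0.5: one wave runs both paths
print(lane_utilization(outcomes, 64))   # 1.0: each wave runs a single path
```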

George: So how often are you seeing your GPU being able to dual-issue? How often are you seeing two waves go down at the same time?

Eric: All the time, it almost always operates that way. I guess there could be cases where there are bubbles in the shader execution where they are both waiting and in which case neither will run. You could have one that gets issued and is ready earlier, but generally we have so many waves in flight that there’s always space for two to run.

George: Okay.

Eric: It would really only be the case if one wave used a lot of GPRs and we did not have enough. Normally we have enough for two of those, but you might be throttling more often in those cases because you just don’t have enough waves to cover all the latency of the memory fetches. But that’s a fairly corner case of very, very complex shaders that is not typical.

George: So the dual-issue mechanism doesn’t have very many restrictions on it?

Eric: No, no restrictions.

George: Cool, thank you so much for this interview. And my final question is, what is your favourite type of cheese?

Eric: That’s a good question. I love Brie, I will always love Brie … and Mozzarella curds. So, I grew up in Canada and poutine was a big thing, and I freaking loved Mozzarella curds, and it’s hard to find them. The only places I’ve found them are Whole Foods sometimes and, surprisingly, Amazon. I have to go order them so that we can make home-made poutine.

George: What’s funny is Ian, who’s behind the camera. He and I, he’s Potato, I’m Cheese.

Eric: There you go.

George: We run a show called the Tech Poutine, and the guest is the Gravy.

Eric: Ah, there you go, and the gravy is available. Either Swiss Chalet, or I forget the other one, but they’re all good. And Five Guys in San Diego makes the fries that are closest to typical poutine fries. And I actually have Canadian friends who have gone to Costco and gotten the cheese there, then gone to Five Guys, gotten the fries there, and made the sauce just to eat that.

George: Thank you so much for this interview.

Eric: You’re welcome.

George: If you like content like this, hit like and subscribe. It does help the channel. If you would like a transcript of this, that will be on chipsandcheese.com, and links to the Patreon and PayPal are down below in the description. Thank you so much, Eric.

Eric: You’re welcome, thank you.

George: And have a good one, folks.

2025-12-31 18:30:35

GB10 is a collaboration between Nvidia and Mediatek that brings Nvidia’s Blackwell architecture into an integrated GPU. GB10’s GPU has 48 Blackwell SMs, matching the RTX 5070 in core count. The CPU side has 10 Cortex X925 and 10 Cortex A725 cores and is therefore quite powerful. Feeding all of that compute power requires a beefy memory subsystem, and can lead to difficult tradeoffs. Analyzing GB10’s memory subsystem from the CPU side will be the focus of this article. To keep article length manageable, I’ll further focus on Nvidia and Mediatek’s memory subsystems and design decisions. Core architecture and GB10’s GPU will be an exercise for another time.

We’d like to thank Zach at ZeroOne Technology for allowing us SSH access to his DGX Spark unit for CPU testing.

CPU cores on GB10 are split into two clusters. Each cluster has five A725 cores and five X925 cores. Core numbering starts with the A725 cores within each cluster, and the two clusters come after each other. All of the A725 cores run at 2.8 GHz. X925 cores clock up to 3.9 GHz on the first cluster, and up to 4 GHz on the second.
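A minimal sketch of that numbering, assuming Linux exposes the cores in exactly the order described (A725 cores first within each cluster, Cluster 0 before Cluster 1). The helper names are hypothetical, and `lscpu` or `/sys/devices/system/cpu` on a real unit should be checked before relying on the mapping.

```python
# Hypothetical helpers mapping a GB10 logical CPU number to (cluster, core type).
# Assumption: within each cluster the five A725 cores come first, then the
# five X925 cores, and cluster 1's CPUs follow cluster 0's.

CORES_PER_TYPE = 5
CORES_PER_CLUSTER = 2 * CORES_PER_TYPE
NUM_CLUSTERS = 2
NUM_CPUS = CORES_PER_CLUSTER * NUM_CLUSTERS


def describe_cpu(cpu):
    """Return (cluster, core_type) for a logical CPU number."""
    if not 0 <= cpu < NUM_CPUS:
        raise ValueError(f"cpu {cpu} out of range")
    cluster, index = divmod(cpu, CORES_PER_CLUSTER)
    core_type = "A725" if index < CORES_PER_TYPE else "X925"
    return cluster, core_type


def cluster_cpus(cluster, core_type=None):
    """Set of logical CPUs in a cluster, optionally filtered by core type."""
    result = set()
    for cpu in range(NUM_CPUS):
        c, t = describe_cpu(cpu)
        if c == cluster and (core_type is None or t == core_type):
            result.add(cpu)
    return result


if __name__ == "__main__":
    for cpu in range(NUM_CPUS):
        print(cpu, *describe_cpu(cpu))
```

On Linux, passing a set such as `cluster_cpus(1, "X925")` to `os.sched_setaffinity(0, ...)` would restrict the calling process to Cluster 1's X925 cores, under the same numbering assumption.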

Arm’s A725 and X925 have configurable cache capacities. GB10 opts for 64 KB L1 instruction and data caches on both cores. All A725 cores get 512 KB L2 caches, and all X925 cores get 2 MB of L2. A725’s L2 is 8-way set associative and has just 9 cycles of latency. In actual time, that comes out to 3.2 nanoseconds, which is good considering the low 2.8 GHz clock speed. However, L3 latency is poor at over 21 ns, or >60 cycles.

Testing cores across both clusters indicates that the first CPU cluster has 8 MB of L3, while the second has 16 MB. I’ll refer to these as Cluster 0 and Cluster 1 respectively. Both clusters have the same L3 latency from an A725 core, despite the capacity difference. 512 KB isn’t a lot of L2 capacity when L3 latency is this high. Likely, selecting the 512 KB L2 option reduces core area and lets GB10 implement more cores. Doing so makes sense considering that A725 cores aren’t meant to individually deliver high single threaded performance. That task is best left to the X925 cores.

GB10’s X925 cores have 2 MB, 8-way set associative L2 caches with 12 cycle latency. L3 latency is surprisingly much better at ~56 cycles or ~14 ns, even though the A725 and X925 cores share the same L3. While it’s not a spectacular L3 latency result, it’s at least similar to Intel’s Arrow Lake L3 in nanosecond terms. Combined with the larger L2, that gives GB10’s X925 cores a cache setup that’s better balanced to deliver high performance.
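Converting between cycles and nanoseconds only needs the clock, since one cycle at f GHz lasts 1/f ns. A small sketch using the clocks quoted above: 2.8 GHz for A725, and 4 GHz as a round figure for X925 (an approximation, since the first cluster's X925 cores top out at 3.9 GHz).

```python
def cycles_to_ns(cycles, clock_ghz):
    """One cycle at f GHz lasts 1/f ns."""
    return cycles / clock_ghz


def ns_to_cycles(ns, clock_ghz):
    """Inverse conversion: ns * GHz gives cycles."""
    return ns * clock_ghz


if __name__ == "__main__":
    print(round(cycles_to_ns(9, 2.8), 2))    # A725 L2: 3.21 ns
    print(round(cycles_to_ns(12, 4.0), 2))   # X925 L2: 3.0 ns
    print(round(cycles_to_ns(56, 4.0), 2))   # X925 L3: 14.0 ns
    print(round(ns_to_cycles(14, 2.8), 1))   # 14 ns seen from a 2.8 GHz core: 39.2 cycles
```

The last line is a useful sanity check: if the shared L3 served both core types equally fast, 14 ns would be only about 39 cycles on a 2.8 GHz A725. Measuring roughly 60 cycles instead suggests the A725's path to L3 is slower in absolute terms, not merely penalized by a lower clock.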

A 16 MB system level cache (SLC) sits after L3. It’s hard to see from latency plots due to its small capacity relative to the CPU L3 caches. Latency data from cluster 0 suggests SLC latency is around 42 or 47 ns, depending on whether it’s accessed from an X925 or A725 core respectively. System level caches aren’t tightly coupled to any compute block, which typically means lower performance in exchange for being able to service many blocks across the chip. Nvidia states that the system level cache “enables power-efficient data sharing between engines” in addition to serving as the CPU’s L4 cache. Allowing data exchange between the CPU and GPU without a round trip to DRAM may well be the SLC’s most important function.

AMD’s Zen 5 in Strix Halo has smaller but faster core-private caches. GB10’s X925 and A725 cores have good cycle count latencies, but Zen 5 can clock so much higher that its caches end up being faster, if just barely so at L2. AMD’s L3 design continues to impress, delivering lower latency even though it has twice as much capacity.

DRAM latency is a bright spot for GB10. 113 ns might feel slow coming from a typical DDR5-equipped desktop, but it’s excellent for LPDDR5X. Strix Halo for comparison has over 140 ns of DRAM latency, as does Intel’s Meteor Lake. Faster LPDDR5X may play a role in GB10’s latency figures. Hot Chips slides say GB10 can run its memory bus at up to 9400 MT/s, and dmidecode reports 8533 MT/s. Placing CPU cores on the same die as the memory controllers may also contribute to lower latency.

Core-private bandwidth figures are straightforward. A725 cores can read from L1 at 48 bytes per cycle, and appear to have a 32B/cycle datapath to L2. A single A725 core can read from L3 at ~55 GB/s. X925 has more impressive bandwidth. It can read 64B/cycle from L1D, likely has a 64B/cycle path to L2, and can sustain nearly 90 GB/s of read bandwidth from L3. Single-core DRAM bandwidth is also higher from an X925 core, at 38 GB/s compared to 26 GB/s from an A725 core.
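Bytes per cycle and GB/s are related by the clock: at f GHz, B bytes per cycle is B × f GB/s. A sketch applying that to the figures above, again treating X925 as a 4 GHz part, which is an approximation.

```python
def to_gb_per_s(bpc, clock_ghz):
    """bpc bytes/cycle at f GHz = bpc * f GB/s (decimal GB)."""
    return bpc * clock_ghz


def to_bytes_per_cycle(gb_s, clock_ghz):
    """Inverse conversion: GB/s divided by GHz gives bytes/cycle."""
    return gb_s / clock_ghz


if __name__ == "__main__":
    print(round(to_gb_per_s(48, 2.8), 1))         # A725 L1 reads: 134.4 GB/s
    print(round(to_gb_per_s(64, 4.0), 1))         # X925 L1 reads at 4 GHz: 256.0 GB/s
    print(round(to_bytes_per_cycle(55, 2.8), 1))  # A725 L3 reads: 19.6 B/cycle
    print(round(to_bytes_per_cycle(90, 4.0), 1))  # X925 L3 reads at 4 GHz: 22.5 B/cycle
```

Run in reverse, ~55 GB/s of L3 bandwidth at 2.8 GHz works out to under 20 bytes per cycle for an A725 core, well below its apparent 32B/cycle L2 path.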

A single AMD Zen 5 or Zen 4 core can pull over 50 GB/s from DRAM, or well north of 100 GB/s from L3. It’s an interesting difference that suggests AMD lets a single core queue up more memory requests, but low threaded workloads rarely demand that much bandwidth and I suspect it doesn’t make a big difference.
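Little's law gives a rough way to quantify that queuing difference: data in flight equals bandwidth times latency. The sketch below assumes 64-byte cache lines and plugs in the idle DRAM latencies quoted earlier (113 ns for GB10, 140 ns for Strix Halo). Latency under load is higher than idle latency, so these are lower bounds on outstanding requests, and hardware prefetchers generate part of them.

```python
LINE_BYTES = 64  # assumption: 64-byte cache lines


def lines_in_flight(bandwidth_gb_s, latency_ns, line_bytes=LINE_BYTES):
    """Little's law: occupancy = throughput * latency.

    GB/s times ns gives bytes (the 1e9 and 1e-9 cancel); dividing by the
    line size turns that into outstanding cache-line requests.
    """
    return bandwidth_gb_s * latency_ns / line_bytes


if __name__ == "__main__":
    print(round(lines_in_flight(26, 113), 1))  # GB10 A725: 45.9
    print(round(lines_in_flight(38, 113), 1))  # GB10 X925: 67.1
    print(round(lines_in_flight(50, 140), 1))  # Zen 5 on Strix Halo: 109.4
```

On these inputs, a single A725 core keeps roughly 46 lines in flight, an X925 about 67, and a Zen 5 core at least 109, which fits the idea that AMD lets one core queue up more memory requests.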

Shared components in the memory hierarchy face more pressure in multithreaded workloads, because having more cores active tends to multiply bandwidth demands. Normally I test multithreaded bandwidth by having each thread traverse a separate array. That prevents access combining, because no two threads will request the same address. It also shows the sum of cache capacities, because each core can keep a different part of the test data footprint in its private caches. GB10 has 12.5 MB of L2 across the cores in each cluster (5 × 512 KB plus 5 × 2 MB), but only 8 or 16 MB of L3. Any size that fits within L3 will have a substantial part contained within L2.
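An idealized sketch of why an L3-sized test still lands largely in L2 when each thread uses a private array. It assumes each of a cluster's ten threads gets an equal slice of the footprint and that each core's L2 keeps as much of its own slice as fits; it ignores L1, replacement policy, and L2/L3 inclusion. The names are hypothetical.

```python
KB = 1024
MB = 1024 * KB

# One GB10 cluster as described above: five A725 (512 KB L2) + five X925 (2 MB L2).
CLUSTER_L2 = [512 * KB] * 5 + [2 * MB] * 5


def bytes_in_l2(footprint, l2_sizes=CLUSTER_L2):
    """Idealized: each thread gets an equal private slice of the footprint,
    and each core's L2 keeps as much of its own slice as fits."""
    per_thread = footprint / len(l2_sizes)
    return sum(min(per_thread, size) for size in l2_sizes)


if __name__ == "__main__":
    print(sum(CLUSTER_L2) / MB)  # total L2 per cluster in MB
    for l3_mb in (8, 16):
        in_l2 = bytes_in_l2(l3_mb * MB)
        print(l3_mb, round(in_l2 / MB, 2), f"{in_l2 / (l3_mb * MB):.0%}")
```

Under these assumptions, an 8 MB footprint spread over one cluster's ten cores leaves about 6.5 MB (roughly 80%) resident in L2, and even a 16 MB footprint keeps about 10.5 MB in L2.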

Pointing all threads to the same array carries the risk of overestimating bandwidth if accesses get combined, but that seems to happen only after a shared cache. I initially validated bandwidth testing methodologies on Zen 2 and Skylake. There, shared array results generally aligned with L3 performance counter data. Using shared array results on GB10 provides another data point to evaluate L3 performance. Taken together with results using thread-private arrays, they suggest GB10 has much lower L3 bandwidth than AMD’s Strix Halo. However, it’s still respectable at north of 200 GB/s and likely to be adequate.

GB10’s two CPU clusters have asymmetrical external bandwidth, in addition to L3 capacity. Cluster 0 feels a bit like a Strix Halo CCX (Core Complex). Cluster 1 gives off AMD GMI-Wide vibes, with over 100 GB/s of read bandwidth. Switching to a 1:1 ratio of reads and writes dramatically increases measured bandwidth, suggesting the clusters have independent read and write paths with similar width. GB10’s CPU clusters are built using Arm’s DynamIQ Shared Unit 120 (DSU-120), which can be configured with up to four 256-bit CHI interfaces, so perhaps the two clusters have different interface counts.

Much like Strix Halo, GB10’s CPU side enjoys more bandwidth than a typical client setup, but can’t fully utilize the 256-bit LPDDR5X bus. CPU workloads tend to be more latency sensitive and less bandwidth hungry. Memory subsystems in both large iGPU chips reflect that, and emphasize caching to improve CPU performance.
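Peak bandwidth of the 256-bit LPDDR5X bus follows from bus width times transfer rate. A sketch using the two data rates mentioned earlier (8533 MT/s reported by dmidecode, up to 9400 MT/s per the Hot Chips slides), reporting decimal GB/s.

```python
def peak_dram_gb_s(bus_width_bits, mt_per_s):
    """Theoretical peak: (bus width in bytes) x (transfers per second), in decimal GB/s."""
    return bus_width_bits / 8 * mt_per_s * 1e6 / 1e9


if __name__ == "__main__":
    for rate in (8533, 9400):
        print(rate, round(peak_dram_gb_s(256, rate), 1))  # 273.1 and 300.8 GB/s
```

At 8533 MT/s the bus tops out around 273 GB/s, so Cluster 1's 100-odd GB/s of read bandwidth is well under half of it, while the 231 GB/s the GPU pulls in the iGPU loading test is roughly 85% of that peak.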

Observations above point to Cluster 1 being performance optimized, while Cluster 0 focuses on density. Cache is one of the biggest area consumers in a modern chip, so cutting L3 capacity to 8 MB is almost certainly an area concession. Cluster 0 may also have a narrower external interface, as running fewer wires outside the cluster could save area as well. But Nvidia and Mediatek stop short of fully specializing each cluster.

Both Cluster 0 and Cluster 1 have the same core configuration of five X925 cores and five A725 cores. The X925 cores focus on the highest performance whereas the A725 cores focus on density. As a result the A725 cores feel out of place on a high performance cluster, especially with 512 KB of L2 in front of an L3 with over 20 ns of latency.

I wonder if going all-in on cluster specialization would be a better idea, by concentrating the ten A725 cores into Cluster 0 for density and the ten X925 cores into Cluster 1 for high performance. Going from two heterogeneous clusters to two homogeneous clusters would simplify the OS scheduler’s job as well. For example, the scheduler would have an easier time containing workloads to a single cluster, letting the hardware clock or power down the second cluster.

Latency and bandwidth can go hand in hand. High bandwidth demands cause requests to back up in various queues throughout the memory subsystem, pushing up average request latency. Ideally, a memory subsystem can provide high bandwidth while preventing bandwidth hungry threads from starving out latency sensitive ones. Here, I’m testing latency from an X925 core with various combinations of other cores generating bandwidth load from within the same cluster.
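A textbook M/M/1 queue, swapped in here purely for intuition and not as a model of GB10's fabric, shows why latency climbs with bandwidth demand: mean queueing delay grows as ρS/(1 − ρ), where ρ is utilization and S is a per-request service time. The service time below is a hypothetical parameter, and 113 ns is simply GB10's idle DRAM latency reused as a baseline.

```python
def loaded_latency_ns(idle_ns, service_ns, utilization):
    """Toy M/M/1 view: idle latency plus mean queueing delay rho*S/(1-rho).

    service_ns is a hypothetical per-request service time at the bottleneck;
    utilization (rho) is the fraction of that bottleneck's capacity in use.
    """
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    return idle_ns + utilization * service_ns / (1.0 - utilization)


if __name__ == "__main__":
    for rho in (0.0, 0.5, 0.8, 0.95):
        print(rho, round(loaded_latency_ns(113, 10, rho), 1))
```

With a hypothetical 10 ns service time, queueing adds 10 ns at 50% utilization but about 190 ns at 95%. Real memory subsystems add arbitration and prioritization that this toy ignores, which is exactly where designs differ in how well they protect latency sensitive requests.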

Both clusters hit their maximum bandwidth figures with all A725 cores generating bandwidth load. Adding bandwidth demands from X925 cores decreases aggregate bandwidth while pushing latency up. Reversing the core load order suggests the X925 cores specifically cause contention in the memory subsystem. Latency reaches a maximum with four X925 cores asking for as much bandwidth as they can get. It’s almost like the X925 cores don’t know when to slow down to avoid monopolizing memory subsystem resources. When the A725 cores come into play, GB10 seems to realize what’s going on and starts to balance bandwidth demands better. Bandwidth improves, and surprisingly, latency does too.

Cluster 1 is worse at controlling latency despite having more bandwidth on tap. It’s unexpected after testing AMD’s GMI-Wide setup, where higher off-cluster bandwidth translated to better latency control under high bandwidth load.

Loading cores across both clusters shows GB10 maintaining lower latency than Strix Halo over the achieved bandwidth range. GB10’s combination of lower baseline latency and high external bandwidth from Cluster 1 puts it well ahead.

Throwing GB10’s iGPU into the mix presents an additional challenge. Increasing bandwidth demands from the iGPU drive up CPU-side latency, much like on Strix Halo. GB10 enjoys better baseline DRAM latency than Strix Halo, and maintains better latency at modest GPU bandwidth demands.

However, GB10 does let high GPU bandwidth demands squeeze out the CPU. Latency from the CPU side goes beyond 351 ns with the GPU pulling 231 GB/s.

I only generate bandwidth load from the GPU in the test above, and run a single CPU latency test thread. Mixing high CPU and GPU bandwidth demands makes the situation more complicated.

With two X925 cores on cluster 1 pulling as much bandwidth as they can get their hands on, and the GPU doing the same, latency from the highest performance X925 core goes up to nearly 400 ns. Splitting out achieved bandwidth from the CPU and GPU further shows the GPU squeezing out the CPU bandwidth test threads.

Memory accesses typically traverse the cache hierarchy in a vertical fashion, where cache misses at one level go through to a lower level. However, the memory subsystem may have to carry out transfers between caches at the same level in order to maintain cache coherency. Doing so can be rather complex. The memory subsystem has to determine which peer cache, if any, might have a more up-to-date copy of a line. Arm’s DSU-120 has a Snoop Control Unit, which uses snoop filters to orchestrate peer-to-peer cache transfers within a core complex. Nvidia/Mediatek’s High Performance Coherent Fabric is responsible for maintaining coherency across clusters.

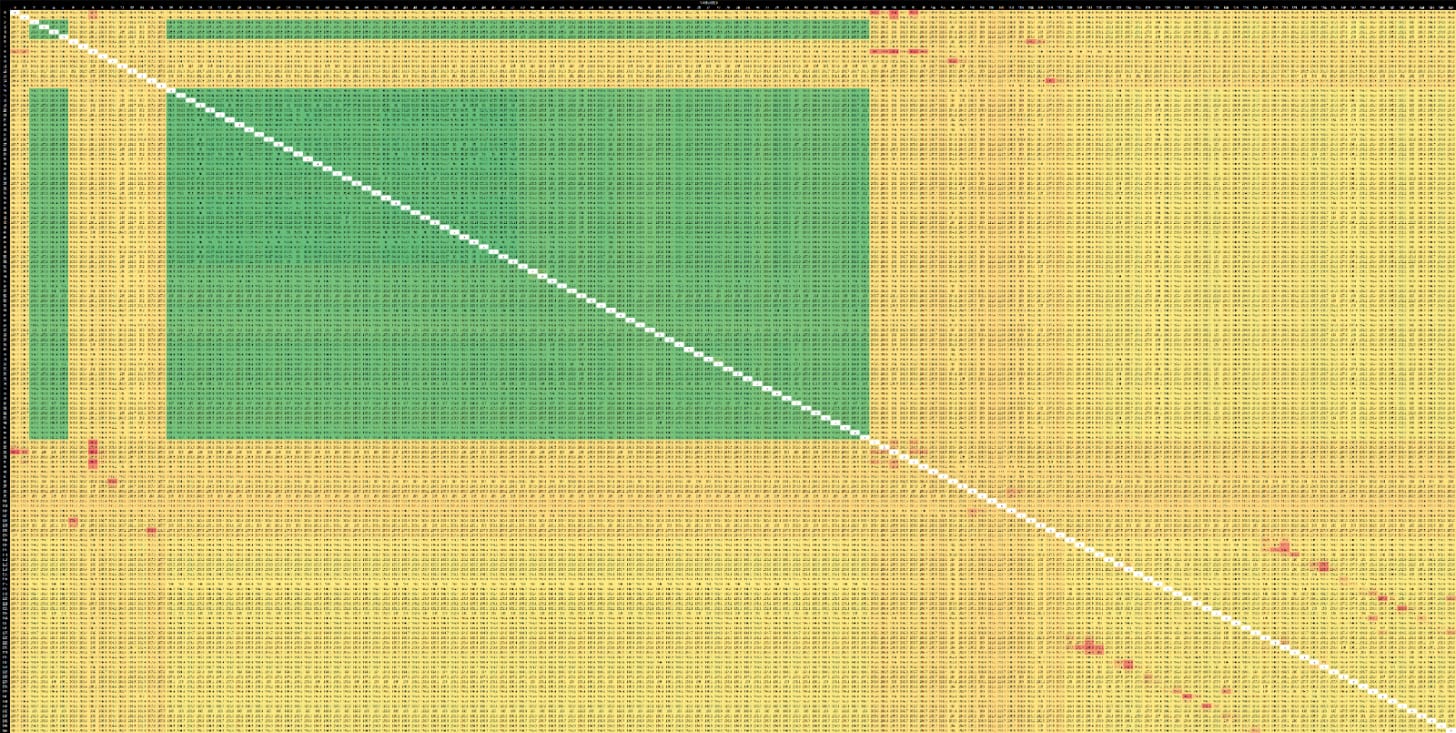

GB10’s cluster boundaries are clearly visible with a coloring scheme applied across all result points. Results within each cluster are also far from uniform. Setting separate color schemes for intra-cluster and cross-cluster points highlights this. X925 cores in general give better intra-cluster latency results. Best case latencies involve transfers between X925 cores in the same cluster. Worst case latencies show up between A725 cores on different clusters, and can reach 240 ns.

Compared to Strix Halo, GB10’s core-to-core latency figures are high overall. Strix Halo manages to keep cross-cluster latencies at around 100 ns. It’s worse than what AMD achieves on desktop parts, but is substantially better than the 200 ns seen on GB10. Within clusters, AMD keeps everything below 50 ns while GB10 only manages 50-60 ns in the best case.

GB10’s CPU setup feels very density optimized compared to Strix Halo’s. GB10 has 20 CPU cores to Strix Halo’s 16, and gets there using a highly heterogeneous CPU configuration that’s light on cache. My initial thought is that all else being equal, I would prefer 32 MB of fast cache in a single level over 16 MB of L3 and 16 MB of slower system level cache. That said, performance is a complicated topic and I’m still working on benchmarking both Strix Halo and GB10. GB10’s memory subsystem has bright points too. Its DRAM latency is outstanding for an LPDDR5X implementation. Mediatek has also seen fit to give one cluster over 100 GB/s of external read bandwidth, which is something AMD hasn’t done on any client design to date.

CPU-side bandwidth is another interesting detail, and has a lot of common traits across both chips. On both GB10 and Strix Halo, CPU cores can’t access the full LPDDR5X bandwidth. The 256-bit memory bus is aimed at feeding the GPU, not the CPU. High GPU bandwidth demands can squeeze out the CPU in both memory subsystems. Perhaps Nvidia/Mediatek and AMD both optimized for workloads that don’t simultaneously demand high CPU and GPU performance.

I hope to see Nvidia and AMD continue to iterate on large iGPU designs. Products like GB10 and Strix Halo allow smaller form factors, and sidestep VRAM capacity issues that plague current discrete GPUs. They’re fascinating from an enthusiast point of view. Hopefully both companies will improve their designs and make them more affordable going forward.

Again, we’d like to thank Zach at ZeroOne for providing SSH access to his Spark. If you like the content then consider heading over to the Patreon or PayPal if you want to toss a few bucks to Chips and Cheese, also consider joining the Discord.

2025-12-20 06:21:52

Hello you fine Internet folks,

Today we have an interview with the CEO of Cornelis Networks, Lisa Spelman, where we talk about what makes OmniPath different from other solutions on the market, along with what steps Cornelis has taken in support of Ultra Ethernet.

Hope y’all enjoy!

If you like the content then consider heading over to the Patreon or PayPal if you want to toss a few bucks to Chips and Cheese. Also consider joining the Discord.

This transcript has been lightly edited for readability and conciseness.

George: Hello, you fine internet folks. We’re here at Supercomputing 2025 at the Cornelis Networks booth. So, with me I have, Lisa Spelman, CEO of Cornelis. Would you like to tell us about what Cornelis is and what you guys do?

Lisa: I would love to! So, thanks for having me, it’s always good, the only thing we’re missing is some cheese. We got lots of cheese.

George: There was cheese last night!

Lisa: Oh, man! Okay, well, yeah, we were here at the kickoff last night. It was a fun opening. So, Cornelis Networks is a company that is laser-focused on delivering the highest-performance networking solutions for the highest-performance applications in your data center. So that’s your HPC workloads, your AI workloads, and everything that just has intense data demands and benefits a lot from a parallel processing type of use case.

So that’s where all of our architecture, all of our differentiation, all of our work goes into.

George: Awesome. So, Cornelis Networks has their own networking, called OmniPath.

Lisa: Yes.

George: Now, some of you may know OmniPath used to be an Intel technology. But Cornelis, I believe, bought the IP from Intel. So could you go into a little bit about what OmniPath is and the difference between OmniPath, Ethernet, and InfiniBand.

Lisa: Yes, we can do that. So you’re right, Cornelis spun out of Intel with an OmniPath architecture.

George: Okay.

Lisa: And so this OmniPath architecture, I should maybe share, too, that we’re a full-stack, full-solution company. So we design both a NIC, a SuperNIC ASIC, we design a Switch ASIC... Look at you- He’s so good! He’s ready to go!

George: I have the showcases!

Lisa: Okay, so we have... we design our SuperNIC ASIC, we design our Switch ASIC, we design the card for the add-in card for the SuperNIC and the switchboard, all the way up, you know, top of rack, as well as all the way up to a big old director-class system that we have sitting here.

All of that is based on our OmniPath architecture, which was incubated and built at Intel then, like you said, spun out and acquired by Cornelis. So the foundational element of the OmniPath architecture is this lossless and congestion-free design.

So it was... it was built, you know, in the last decade, focused on: how do you take all of the data movement happening in highly parallel workloads and bring together a congestion-free environment that does not lose packets? So it was specifically built to address these modern workloads, the growth of AI, the massive scale of data being put together, learning from the past but letting go of legacy: other networks that may be designed more for storage systems or for... you know, just other use cases. That’s not what they were inherently designed for.

George: The Internet.

Lisa: Yeah.

George: Such as Ethernet.

Lisa: A more modern development. I mean, Ethernet, I mean, amazing, right? But it’s 50 years old now. So what we did was, in this architecture, built in some really advanced capabilities and features like your credit-based flow controls and your dynamic lane scaling. So it’s the performance, as well as adding reliability to the system. And so the network plays a huge role, not only increasing your compute utilization of your GPU or your CPU, but it also can play a really big role in increasing the uptime of your overall system, which has huge economic value.

George: Yeah.

Lisa: So that’s the OmniPath architecture, and the way that it comes to life, and the way that people experience it, is lowest latency in the industry on all of these workloads. You know, we made sure on micro benchmarks, like ping pong latency, all the good micros. And then the highest message rates in the industry. We have two and a half X higher than the closest competitor. So that works really great for those, you know, message-dependent, really fast-rate workloads.

And then on top of that, we’re all going to operate at the same bandwidth. I mean, bandwidth is not really a differentiator anymore. And so we measure ourselves on how fast we get to half-bandwidth, and how quickly we can launch and start the data movement, the packet movement. So fastest to half-bandwidth is one of our points of pride for the architecture as well.

George: So, speaking of sort of Ethernet. I know there’s been the new Ultra Ethernet Consortium in order to update Ethernet to a more... to the standard of today.

Lisa: Yeah.

George: What has Cornelis done to support that, especially with some of your technologies?

Lisa: So we think this move to Ultra Ethernet is really exciting for the industry. And it was obviously time, I mean, you know, it takes a big need and requirement to get this many companies to work together, and kind of put aside some differences, and come together to come up with a consortium and a capability, a definition that actually does serve the workloads of today.

So we’re very excited and motivated towards it. And the reason that we are so is because we see so much of what we’ve already built in OmniPath being reflected in the requirements of Ultra Ethernet. We also have a little bit of a point of pride in that the software standard for Ultra Ethernet is built on top of libfabric, which is an open source project that, you know, we developed actually, and we’re maintainers of. So we’re all in on Ultra Ethernet, and in fact, we’ve just announced our next generation of products.

George: Speaking of which, the CN6000, the successor to the CN5000: what exactly does it support in terms of networking protocols, and what do you sort of see in terms of industry uptake?

Lisa: Yes. So this is really cool. We think it’s going to be super valuable for our customers. So with our next generation, our CN6000, that’s our 800 gig product, that one is going to be a multi-protocol NIC. So our super NIC there, it will have OmniPath native in it, and we have customers who absolutely want the highest performance that they can get through OmniPath, and it works great for them. But we’re also adding RoCE v2 into it. So Ethernet performance, as well as Ultra Ethernet, the 1.0, you know, the spec, the Ultra Ethernet compliance as well.

So you’re going to get this multi-modal NIC, and what we’re doing, what our differentiation is, is that you’re moving to that Ethernet transport layer, but you’re still behind the scenes getting the benefits of the OmniPath architecture.

George: OK.

Lisa: So it’s not like it’s two totally separate things. We’re actually going to take your packet, run it through the OmniPath pipes and that architecture benefit, but spit it out as Ethernet, as a protocol or the transport layer.

George: Cool. And for Ultra Ethernet, I know there are sort of two specs. There’s what’s colloquially known as the “AI spec” and the “HPC spec”, which have different requirements. For the CN6000, will it be the AI spec or the HPC spec?

Lisa: Yeah. So we’re focusing on making sure the Ultra Ethernet transport layer absolutely works. But we are absolutely intending to deliver to both HPC performance and AI workload performance. And one of the things I like to kind of point out is, it’s not that they’re so different; AI workloads and HPC workloads have a lot of similar demands on the network. They just pull on them in different ways. So it’s like, message rate, for example: message rate is hugely important in things like computational fluid dynamics.

George: Absolutely.

Lisa: But it also plays a role in inference. Now, it might be the top determiner of performance in a CFD application, and it might be the third or fourth in an AI application. So you need that same, you know, the latency, the message rates, the bandwidth, the overlap, the communications, you know, all that type of stuff. Just workloads pull on them a little differently.

So we’ve built a well-rounded solution that addresses all. And then, by customer use case, you can pull on what you need.

George: Awesome. And as you can see here [points to network switch on table], you guys make your own switches.

Lisa: We do.

George: And you make your own NICs. But one of the questions I have is, can you use the CN6000 NIC with any switch?

Lisa: OK, so that’s a great point. And yes, you can. So one of our big focuses as we expand the company, the customer base, and serve more customers and workloads, is becoming much more broadly industry interoperable.

George: OK.

Lisa: So we think this is important for larger-scale customers that want to maybe run multi-vendor environments. So we’re already doing work on the 800 gig to ensure that it works across a variety of, you know, standard industry-available switches. And that gives customers a lot of flexibility.

Of course, they can still choose to use both the super NIC and the switch from us. And that’s great, we love that, but we know that there’s going to be times when there’s like, a partner or a use case, where having our NIC paired with someone else’s switch is the right move. And we fully support it.

George: So then I guess sort of the flip-side of that is if I have, say, another NIC, could I attach that to an OmniPath switch?

Lisa: You will be able to, not in a CN6000, but stay tuned, I’ll have more breaking news! That’s just a little sneak peek of the future future.

George: Well, and sort of to round this off with the most important question. What’s your favorite type of cheese, Lisa?

Lisa: OK, I am from Portland, Oregon. So I have to go with our local, to the state of Oregon, our Rogue Creamery Blues.

George: Oh, OK.

Lisa: I had a chance this summer to go down to Grants Pass, where they’re from, and headquartered, and we did the whole cheese factory tour- I thought of you. We literally got to meet the cows! So it was very nice, it was very cool. And so that’s what I have to go with.

George: One of my favorite cheeses is Tillamook.

Lisa: OK, yes! Yes, another local favorite.

George: Thank you so much, Lisa!

Lisa: Thank you for having me!

George: Yep, have a good one, folks.

2025-12-16 04:56:51

Nvidia has dominated the GPU compute scene ever since it became mainstream. The company’s Blackwell B200 GPU is the next to take up the mantle of being the go-to compute GPU. Unlike prior generations, Blackwell can’t lean heavily on process node improvements. TSMC’s 4NP process likely provides something over the 4N process used in the older Hopper generation, but it’s unlikely to offer the same degree of improvement as prior full node shrinks. Blackwell therefore moves away from Nvidia’s tried-and-tested monolithic die approach, and uses two reticle sized dies. Both dies appear to software as a single GPU, making the B200 Nvidia’s first chiplet GPU. Each B200 die physically contains 80 Streaming Multiprocessors (SMs), which are analogous to cores on a CPU. B200 enables 74 SMs per die, giving 148 SMs across the GPU. Clock speeds are similar to the H100’s high power SXM5 variant.

I’ve listed H100 SXM5 specifications in the table above, but data in the article below will be from the H100 PCIe version unless otherwise specified.

A massive thank you goes to Verda (formerly DataCrunch) for providing an instance with 8 B200s which are all connected to each other via NVLink. Verda gave us about 3 weeks with the instance to do with as we wished. We previously covered the CPU part of this VM if you want to check that part out as well.

B200’s cache hierarchy feels immediately familiar coming from the H100 and A100. L1 and Shared Memory are allocated out of the same SM-private pool. L1/Shared Memory capacity is unchanged from H100 and stays at 256 KB. Possible L1/Shared Memory splits have not changed either. Shared Memory is analogous to AMD’s Local Data Share (LDS) or Intel’s Shared Local Memory (SLM), and provides software managed on-chip storage local to a group of threads. Through Nvidia’s CUDA API, developers can advise on whether to prefer a larger L1 allocation, prefer equal, or prefer more Shared Memory. Those options appear to give 216, 112, and 16 KB of L1 cache capacity, respectively.

In other APIs, Shared Memory and L1 splits are completely up to Nvidia’s driver. OpenCL gets the largest 216 KB data cache allocation with a kernel that doesn’t use Shared Memory, which is quite sensible. Vulkan gets a slightly smaller 180 KB L1D allocation. L1D latency as tested with array indexing in OpenCL comes in at a brisk 19.6 ns, or 39 cycles.

As with A100 and H100, B200 uses a partitioned L2 cache. However, it’s now much larger with 126 MB of total capacity. For perspective, H100 had 50 MB of L2, and A100 had 40 MB. L2 latency to the directly attached L2 partition is similar to prior generations at about 150 ns. Latency dramatically increases as test sizes spill into the other L2 partition. B200’s cross-partition penalty is higher than its predecessors, but only slightly so. L2 partitions on B200 almost certainly correspond to its two dies. If so, the cross-die latency penalty is small, and outweighed by a single L2 partition having more capacity than H100’s entire L2.

B200 acts like it has a triple level cache setup from a single thread’s perspective. The L2’s partitioned nature can be shown by segmenting the pointer chasing array and having different threads traverse each segment. Curiously, I need a large number of threads before I can access most of the 126 MB capacity without incurring cross-partition penalties. Perhaps Nvidia’s scheduler tries to fill one partition’s SMs before going to the other.
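Pointer chasing stores, in each array slot, the index of the next slot to visit, so every load depends on the previous one and latency cannot be hidden. The Python sketch below only builds and walks such a chain; it cannot measure GPU latency, and a real test would run the walk inside a kernel. It uses Sattolo's algorithm, one common way to get a single random cycle but not necessarily the one used for these results, and splits the array into independent segments so each thread stays inside its own slice. Function names are hypothetical.

```python
import random


def sattolo_cycle(n, rng):
    """Random permutation forming one cycle through all n slots (Sattolo)."""
    nxt = list(range(n))
    for i in range(n - 1, 0, -1):
        j = rng.randrange(i)  # 0 <= j < i, unlike Fisher-Yates' 0 <= j <= i
        nxt[i], nxt[j] = nxt[j], nxt[i]
    return nxt


def build_segmented_chain(n, segments, seed=0):
    """Array where chain[i] is the next index to load. The array is split into
    equal contiguous segments, each closed into its own cycle, so a thread
    that starts in one segment never leaves it."""
    if n % segments:
        raise ValueError("n must divide evenly into segments")
    rng = random.Random(seed)
    size = n // segments
    chain = [0] * n
    for s in range(segments):
        base = s * size
        for i, j in enumerate(sattolo_cycle(size, rng)):
            chain[base + i] = base + j
    return chain


def chase(chain, start, steps):
    """Dependent loads: each index comes from the previous load's result."""
    idx = start
    for _ in range(steps):
        idx = chain[idx]
    return idx


if __name__ == "__main__":
    chain = build_segmented_chain(1 << 16, segments=4)
    seg = len(chain) // 4
    # Thread t would start at t * seg and stay inside its own quarter.
    print([chase(chain, t * seg, seg) == t * seg for t in range(4)])  # [True, True, True, True]
```

With one segment, a single thread eventually touches the whole footprint, including data that lives in the far L2 partition. Spreading many small segments across SMs on both dies gives each SM a smaller slice to keep close by, which is consistent with needing many threads before most of the 126 MB becomes reachable without cross-partition penalties.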

AMD’s Instinct MI300X has a true triple-level cache setup, which trades blows with B200’s. Nvidia has larger and faster L1 caches. AMD’s L2 trades capacity for a latency advantage compared to Nvidia. Finally, AMD’s 256 MB last level cache offers an impressive combination of latency and high capacity. Latency is lower than Nvidia’s “far” L2 partition.

One curiosity is that both the MI300X and B200 show more uniform latency across the last level cache when I run multiple threads hitting a segmented pointer chasing array. However, the reasons behind the single-threaded latency increase differ. On AMD, the increase past 64 MB appears to be caused by TLB misses, because testing with a 4 KB stride shows a latency increase at the same point. Launching more threads brings more TLB instances into play, mitigating address translation penalties. Cutting out TLB miss penalties also lowers measured VRAM latency on the MI300X. On B200, splitting the array didn’t lower measured VRAM latency, suggesting TLB misses either weren’t a significant factor with a single thread, or bringing on more threads didn’t reduce TLB misses. B200 thus appears to have higher VRAM latency than the MI300X, as well as the older H100 and A100. Just as with the L2 cross-partition penalty, the modest nature of the latency regression versus H100/A100 suggests Nvidia’s multi-die design is working well.

OpenCL’s local memory space is backed by Nvidia’s Shared Memory, AMD’s LDS, or Intel’s SLM. Testing local memory latency with array accesses shows B200 continuing the tradition of offering excellent Shared Memory latency. Accesses are faster than on any AMD GPU I’ve tested so far, including high-clocked members of the RDNA line. AMD’s CDNA-based GPUs have much higher local memory latency.

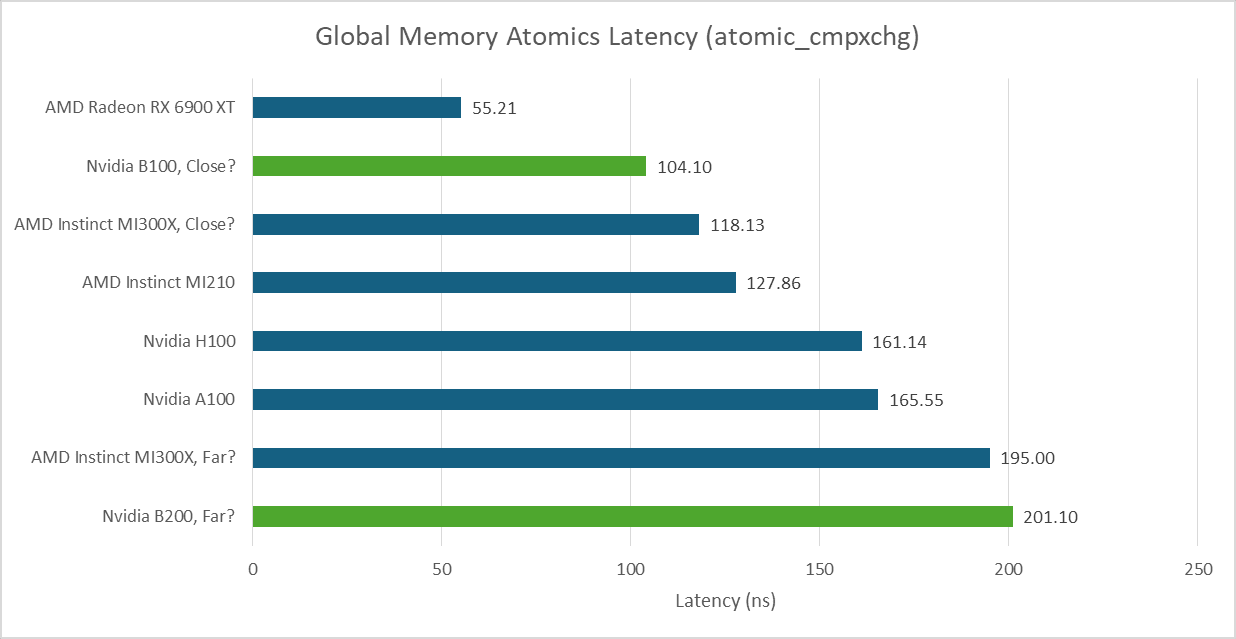

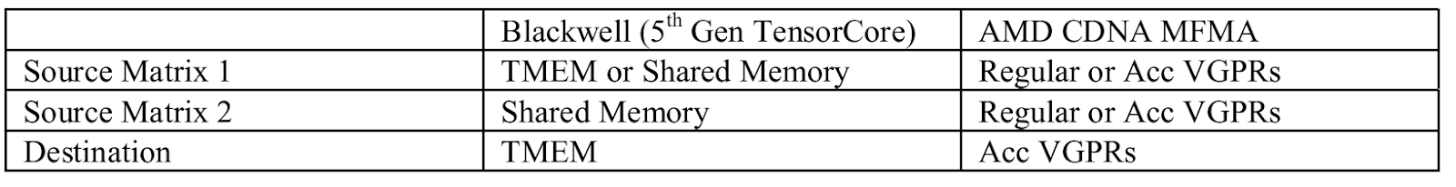

Atomic operations on local memory can be used to exchange data between threads in the same workgroup. On Nvidia, that means threads running on the same SM. Bouncing data between threads using atomic_cmpxchg shows latency on par with AMD’s MI300X. As with pointer chasing latency, B200 gives small incremental improvements over prior generations. AMD’s RDNA line does very well in this test compared to big compute GPUs.

Modern GPUs use dedicated atomic ALUs to handle operations like atomic adds and increments. Testing with atomic_add gives a throughput of 32 operations per cycle, per SM on the B200. I wrote this test after MI300X testing was concluded, so I only have data from the MI300A. Like GCN, AMD’s CDNA3 Compute Units can sustain 16 atomic adds per cycle. That lets the B200 pull ahead despite having a lower core count.
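
A quick back-of-the-envelope check on the per-clock comparison, in C++. The SM and CU counts (148 for B200, 228 for MI300A) are assumptions taken from public specifications rather than from this testing, and the result ignores clock speed differences, so it only compares work per cycle.

```cpp
// Per-clock atomic add throughput across the whole GPU = units * atomics per unit.
// ASSUMPTION: 148 enabled SMs on B200 and 228 enabled CUs on MI300A come from
// public specifications, not from the measurements in this article.
constexpr int kB200Sms = 148;
constexpr int kB200AtomicsPerSm = 32;    // measured with atomic_add
constexpr int kMi300aCus = 228;
constexpr int kMi300aAtomicsPerCu = 16;  // CDNA3 CU rate, same as GCN

constexpr int atomics_per_clock(int units, int per_unit) { return units * per_unit; }

constexpr int kB200PerClock = atomics_per_clock(kB200Sms, kB200AtomicsPerSm);        // 4736
constexpr int kMi300aPerClock = atomics_per_clock(kMi300aCus, kMi300aAtomicsPerCu);  // 3648

// Fewer cores, but twice the per-core rate, still leaves B200 ahead per clock.
static_assert(kB200Sms < kMi300aCus, "B200 has fewer cores");
static_assert(kB200PerClock > kMi300aPerClock, "B200 leads in atomics per clock");
```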

A higher SM count gives B200 a large L1 cache bandwidth advantage over its predecessors. In OpenCL testing, it also catches up to AMD’s MI300X. Older, smaller consumer GPUs like the RX 6900XT are left in the dust.

Local memory offers the same bandwidth as L1 cache hits on the B200, because both are backed by the same block of storage. That leaves AMD’s MI300X with a large bandwidth lead. Local memory is more difficult to take advantage of because developers must explicitly manage data movement, while a cache automatically takes advantage of locality. But it is an area where the huge MI300X continues to hold a lead.

Nemez’s Vulkan-based benchmark provides an idea of what L2 bandwidth is like on the B200. Smaller data footprints contained within the local L2 partition achieve 21 TB/s of bandwidth. That drops to 16.8 TB/s when data starts crossing between the two partitions. AMD’s MI300X has no graphics API support and can’t run Vulkan compute. However, AMD has specified that the 256 MB Infinity Cache provides 14.7 TB/s of bandwidth. The MI300X doesn’t need the same degree of bandwidth from Infinity Cache because the 4 MB L2 instances in front of it should absorb a good chunk of L1 miss traffic.

The B200 offers a large bandwidth advantage over the outgoing H100 at all levels of the cache hierarchy. Thanks to HBM3E, B200 also gains a VRAM bandwidth lead over the MI300X. While the MI300X also has eight HBM stacks, it uses older HBM3 and tops out at 5.3 TB/s.

AMD’s MI300X showed varying latency when using atomic_cmpxchg to bounce values between threads. Its complex multi-die setup likely contributed to this. The same applies to B200. Here, I’m launching as many single-thread workgroups as GPU cores (SMs or CUs), and selecting different thread pairs to test. I’m using an access pattern similar to that of a CPU-side core to core latency test, but there’s no way to influence where each thread gets placed. Therefore, this isn’t a proper core to core latency test and results are not consistent between runs. But it is enough to display latency variation, and show that the B200 has a bimodal latency distribution.

Latency is 90-100 ns in good cases, likely when threads are on the same L2 partition. Bad cases land in the 190-220 ns range and likely represent communication that crosses L2 partition boundaries. Results on AMD’s MI300X range from ~116 to ~202 ns. B200’s good case is slightly better than AMD’s, while the bad case is slightly worse.

Thread to thread latency is generally higher on datacenter GPUs compared to high clocked consumer GPUs like the RX 6900XT. Exchanging data across a GPU with hundreds of SMs or CUs is challenging, even in the best cases.
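
For comparison with CPU-side core to core tests, here is a minimal sketch of the same ping-pong idea using C++ `std::atomic` compare-and-swap in place of OpenCL’s `atomic_cmpxchg`. It assumes two ordinary `std::thread` workers and, like the GPU version, makes no attempt to control where each thread runs. `ping_pong` and `PingPongResult` are hypothetical names.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <cstdint>
#include <thread>

struct PingPongResult {
    double ns_per_hop;          // average time for one thread-to-thread handoff
    std::uint64_t final_value;  // counter value after all handoffs
};

// Bounce a counter between two threads with compare-and-swap. Thread 0 only
// advances even values and thread 1 only advances odd values, so every
// increment must first observe the other thread's write.
PingPongResult ping_pong(std::uint64_t iterations) {
    std::atomic<std::uint64_t> shared{0};
    const std::uint64_t target = 2 * iterations;

    auto worker = [&](std::uint64_t parity) {
        std::uint64_t expected = parity;
        while (expected < target) {
            std::uint64_t cur = expected;
            // Succeeds only when the counter holds our value; otherwise spin.
            if (shared.compare_exchange_weak(cur, expected + 1,
                                             std::memory_order_acq_rel)) {
                expected += 2;  // our next turn comes after the other thread's
            }
        }
    };

    const auto start = std::chrono::steady_clock::now();
    std::thread t0(worker, std::uint64_t{0});
    std::thread t1(worker, std::uint64_t{1});
    t0.join();
    t1.join();
    const auto stop = std::chrono::steady_clock::now();

    // Sanity check: every handoff happened exactly once.
    assert(shared.load() == target);
    const double ns = std::chrono::duration<double, std::nano>(stop - start).count();
    return {target ? ns / static_cast<double>(target) : 0.0, shared.load()};
}
```

Calling `ping_pong(100000)` and reading `ns_per_hop` gives a rough one-way latency. Thread creation is included in the timing, so larger iteration counts give more representative numbers.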

Atomic adds on global memory are usually handled by dedicated ALUs at a GPU-wide shared cache level. Nvidia’s B200 can sustain just short of 512 such operations per cycle across the GPU. AMD’s MI300A does poorly in this test, achieving lower throughput than the consumer oriented RX 6900XT.

Increased SM count gives the B200 higher compute throughput than the H100 across most vector operations. However, FP16 is an exception. Nvidia’s older GPUs could do FP16 operations at twice the FP32 rate. B200 cannot.

AMD’s MI300X can also do double-rate FP16 compute. Nvidia likely decided to focus on handling FP16 with the Tensor Cores, or matrix multiplication units. Stepping back, the MI300X’s massive scale blows out both the H100 and B200 for most vector operations. Despite using older process nodes, AMD’s aggressive chiplet setup still holds advantages.

B200 targets AI applications, and no discussion would be complete without covering its machine learning optimizations. Nvidia has used Tensor Cores, or dedicated matrix multiplication units, since the Volta and Turing generations years ago. GPUs expose a SIMT programming model where developers can treat each lane as an independent thread, at least from a correctness perspective. Tensor Cores break the SIMT abstraction by requiring a certain matrix layout across a wave, or vector. Blackwell’s 5th generation Tensor Cores go one step further, and have a matrix span multiple waves in a workgroup (CTA).

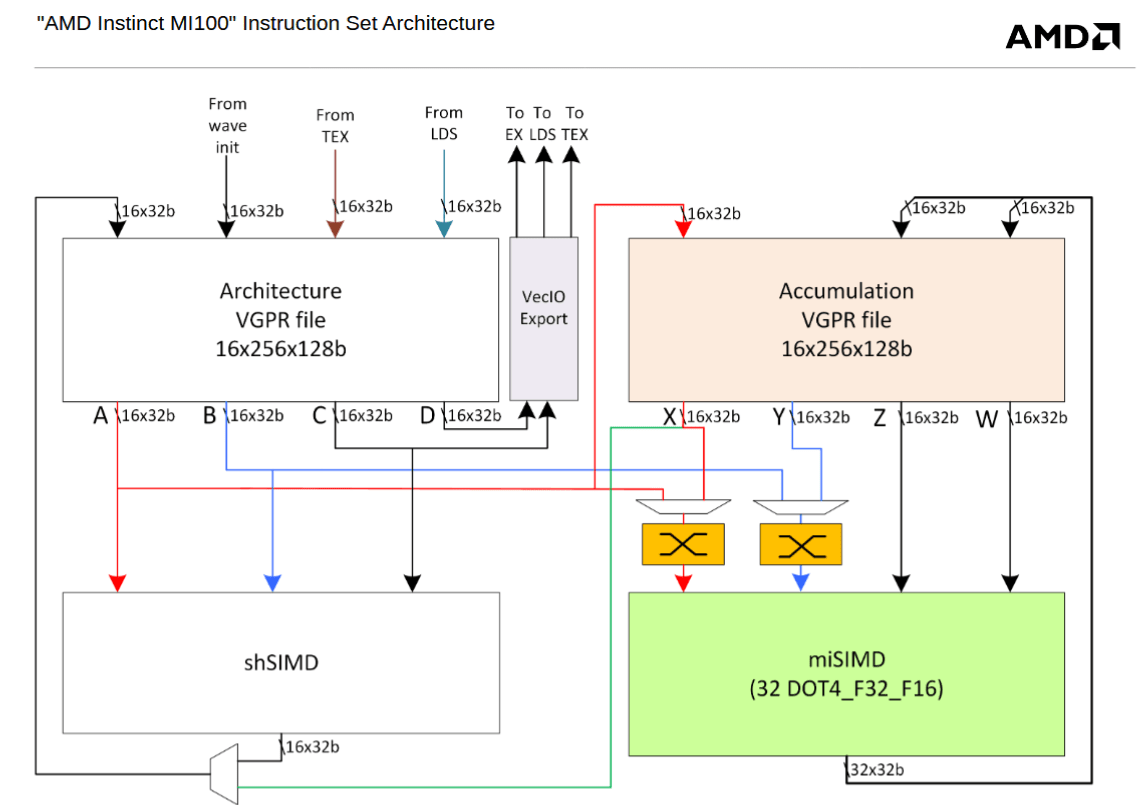

Blackwell also introduces Tensor Memory, or TMEM. TMEM acts like a register file dedicated to the Tensor Cores. Developers can store matrix data in TMEM, and Blackwell’s workgroup level matrix multiplication instructions use TMEM rather than the register file. TMEM is organized as 512 columns by 128 rows with 32-bit cells. Each wave can only access 32 TMEM rows, determined by its wave index. That implies each SM sub-partition has a 512 column by 32 row TMEM partition. A “TensorCore Collector Buffer” can take advantage of matrix data reuse, taking the role of a register reuse cache for TMEM.
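
The TMEM dimensions above translate directly into capacity. A short C++ check, assuming four sub-partitions per SM (128 rows split into 32-row slices, one slice per sub-partition), confirms the 64 KB per-partition figure.

```cpp
// TMEM geometry as described: 128 rows x 512 columns of 32-bit cells per SM,
// with each wave restricted to a 32-row slice.
constexpr int kTmemRows = 128;
constexpr int kTmemCols = 512;
constexpr int kCellBytes = 4;              // 32-bit cells
constexpr int kRowsPerSubpartition = 32;

constexpr int kSubpartitions = kTmemRows / kRowsPerSubpartition;                          // 4
constexpr int kTmemBytesPerSm = kTmemRows * kTmemCols * kCellBytes;                       // 262144
constexpr int kTmemBytesPerSubpartition = kRowsPerSubpartition * kTmemCols * kCellBytes;  // 65536

static_assert(kTmemBytesPerSubpartition == 64 * 1024, "64 KB per sub-partition");
static_assert(kTmemBytesPerSm == kSubpartitions * kTmemBytesPerSubpartition,
              "sub-partitions add up to the whole SM");
```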

TMEM therefore works like the accumulator register file (Acc VGPRs) on AMD’s CDNA architecture. CDNA’s MFMA matrix instructions similarly operate on data in the Acc VGPRs, though MFMA can also take source matrices from regular VGPRs. On Blackwell, only the older wave-level matrix multiplication instructions take regular register inputs. Both TMEM and CDNA’s Acc VGPRs have 64 KB of capacity, giving both architectures a 64+64 KB split register file per execution unit partition. The regular vector execution units cannot take inputs from TMEM on Nvidia, or the Acc VGPRs on AMD.

While Blackwell’s TMEM and CDNA’s Acc VGPRs have similar high level goals, TMEM is a more capable and mature implementation of the split register file idea. CDNA had to allocate the same number of Acc and regular VGPRs for each wave. Doing so likely simplified bookkeeping, but creates an inflexible arrangement where mixing matrix and non-matrix waves would make inefficient use of register file capacity. TMEM in contrast uses a dynamic allocation scheme similar in principle to dynamic VGPR allocation on AMD’s RDNA4. Each wave starts with no TMEM allocated, and can allocate 32 to 512 columns in powers of two. All rows are allocated at the same time, and waves must explicitly release allocated TMEM before exiting. TMEM can also be loaded from Shared Memory or the regular register file, while CDNA’s Acc VGPRs can only be loaded through regular VGPRs. Finally, TMEM can optionally “decompress” 4 or 6-bit data types to 8 bits as data is loaded in.
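
To illustrate the allocation rules (power-of-two column counts from 32 to 512, all rows allocated together, explicit release before exit), here is a toy model of one TMEM partition’s column allocator. It is entirely hypothetical: the class name, the aligned first-fit placement policy, and the method names are assumptions for illustration and do not describe Nvidia’s hardware or PTX interface.

```cpp
#include <bitset>
#include <cassert>
#include <optional>

// Hypothetical model of column allocation within one 512-column TMEM partition.
// Rows are implicit: allocating columns grants all of the partition's rows.
class TmemColumnAllocator {
public:
    static constexpr int kColumns = 512;
    static constexpr int kGranule = 32;  // smallest allowed allocation

    // Returns the first allocated column, or nullopt if no aligned block fits.
    std::optional<int> allocate(int ncols) {
        // Only powers of two between 32 and 512 columns are legal requests.
        assert(ncols >= kGranule && ncols <= kColumns && (ncols & (ncols - 1)) == 0);
        const int granules = ncols / kGranule;
        // Aligned first-fit (an assumed policy): blocks start at a multiple of their size.
        for (int start = 0; start + granules <= kSlots; start += granules) {
            bool fits = true;
            for (int g = start; g < start + granules; ++g) fits = fits && !used_[g];
            if (fits) {
                for (int g = start; g < start + granules; ++g) used_[g] = true;
                return start * kGranule;
            }
        }
        return std::nullopt;
    }

    // A wave must hand back exactly what it allocated before it exits.
    void release(int first_col, int ncols) {
        for (int g = first_col / kGranule; g < (first_col + ncols) / kGranule; ++g) {
            assert(used_[g]);
            used_[g] = false;
        }
    }

    int free_columns() const {
        return (kSlots - static_cast<int>(used_.count())) * kGranule;
    }

private:
    static constexpr int kSlots = kColumns / kGranule;  // 16 granules of 32 columns
    std::bitset<kSlots> used_;
};
```

Under this placement policy, one wave holding 256 columns plus another holding 128 leaves only 128 free columns, so a second 256-column request fails until the first wave releases its allocation.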

Compared to prior Nvidia generations, adding TMEM helps reduce capacity and bandwidth pressure on the regular register file. Introducing TMEM is likely easier than expanding the regular register file. Blackwell’s CTA-level matrix instructions can sustain 1024 16-bit MAC operations per cycle, per partition. Because one matrix input always comes from Shared Memory, TMEM only has to read one row and accumulate into another row every cycle. The regular vector registers would have to sustain three reads and one write per cycle for FMA instructions. Finally, TMEM doesn’t have to be wired to the vector units. All of that lets Blackwell act like it has a larger register file for AI applications, while allowing hardware simplifications. Nvidia has used 64 KB register files since the Kepler architecture from 2012, so a register file capacity increase feels overdue. TMEM delivers that in a way.

On AMD’s side, CDNA2 abandoned dedicated Acc VGPRs and merged all of the VGPRs into a unified 128 KB pool. Going for a larger unified pool of registers can benefit a wider range of applications, at the cost of not allowing certain hardware simplifications.

Datacenter GPUs traditionally have strong FP64 performance, and that continues to be the case for B200. Basic FP64 operations execute at half the FP32 rate, making it much faster than consumer GPUs. In a self-penned benchmark, B200 continues to do well compared to both consumer GPUs and H100. However, the MI300X’s massive size shows through even though it’s an outgoing GPU.

In the workload above, I take a 2360x2250 FITS file with column density values and output gravitational potential values with the same dimensions. With 8-byte FP64 values for both the input and output arrays, the data footprint is therefore about 85 MB. Even without performance counter data, it’s safe to assume it fits in the last level cache on both MI300X and B200.
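
The 85 MB figure follows from the image dimensions, assuming FP64 values for both the input column density map and the output potential map (an assumption consistent with this being the FP64 benchmark).

```cpp
#include <cstdint>

// Input column density map plus output potential map, both FP64.
constexpr std::int64_t kWidth = 2360;
constexpr std::int64_t kHeight = 2250;
constexpr std::int64_t kBytesPerValue = 8;  // double
constexpr std::int64_t kArrays = 2;         // one input, one output

constexpr std::int64_t kFootprintBytes = kWidth * kHeight * kBytesPerValue * kArrays;  // 84,960,000

// Comfortably below B200's 126 MB L2, and far below MI300X's 256 MB Infinity Cache.
constexpr std::int64_t kB200L2Bytes = 126LL * 1000 * 1000;
static_assert(kFootprintBytes < kB200L2Bytes, "fits in B200's L2");
```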

FluidX3D is a different matter. Its benchmark uses a 256x256x256 cell configuration and 93 bytes per cell in FP32 mode, for a 1.5 GB memory footprint. Its access patterns aren’t cache friendly, based on testing with performance counters on Strix Halo. FluidX3D plays well into B200’s VRAM bandwidth advantage, and the B200 now pulls ahead of the MI300X.
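
The same arithmetic for FluidX3D’s benchmark, using the 93 bytes per cell FP32 figure quoted above, shows why its data cannot stay resident in either GPU’s last level cache.

```cpp
#include <cstdint>

// 256^3 lattice at 93 bytes per cell in FP32 storage mode.
constexpr std::int64_t kCells = 256LL * 256 * 256;                 // 16,777,216 cells
constexpr std::int64_t kBytesPerCell = 93;
constexpr std::int64_t kFluidFootprintBytes = kCells * kBytesPerCell;  // 1,560,281,088 (~1.56 GB)

// Even MI300X's 256 MB Infinity Cache, the larger of the two LLCs, is far smaller.
constexpr std::int64_t kLargestLlcBytes = 256LL * 1024 * 1024;
static_assert(kFluidFootprintBytes > 5 * kLargestLlcBytes, "over 5x the largest LLC");
```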

FluidX3D can also use 16-bit floating point formats for storage, reducing memory capacity and bandwidth requirements. Computations still use FP32 and format conversion costs extra compute, so the FP16 formats result in a higher compute to bandwidth ratio. That typically improves performance because FluidX3D is so bandwidth bound. When using IEEE FP16 for storage, AMD’s MI300A closes the gap slightly, but the B200 stays ahead by a large margin.

Another format, FP16C, reduces the accuracy penalty associated with 16-bit storage. It’s a custom floating point format without hardware support, which further drives up the compute to bandwidth ratio.

With compute front and center again, AMD’s MI300A pulls ahead. The B200 doesn’t do badly, but it can’t compete with the massive compute throughput that AMD’s huge chiplet GPU provides.

We encountered three GPU hangs over several weeks of testing. The issue manifested with a GPU process getting stuck. Then, any process trying to use any of the system’s eight GPUs would also hang. None of the hung processes could be terminated, even with SIGKILL. Attaching GDB to one of them would cause GDB to freeze as well. The system remained responsive for CPU-only applications, but only a reboot restored GPU functionality. nvidia-smi would also get stuck. Kernel messages indicated the Nvidia unified memory kernel module, or nvidia_uvm, took a lock with preemption disabled.

The stack trace suggests Nvidia might be trying to free allocated virtual memory, possibly on the GPU. Taking a lock makes sense because Nvidia probably doesn’t want other threads accessing a page freelist while it’s being modified. Why it never leaves the critical section is anyone’s guess. Perhaps it makes a request to the GPU and never gets a response. Or perhaps it’s purely a software deadlock bug on the host side.

```
# nvidia-smi -r
The following GPUs could not be reset:
GPU 00000000:03:00.0: In use by another client
GPU 00000000:04:00.0: In use by another client
GPU 00000000:05:00.0: In use by another client
GPU 00000000:06:00.0: In use by another client
GPU 00000000:07:00.0: In use by another client
GPU 00000000:08:00.0: In use by another client
GPU 00000000:09:00.0: In use by another client
GPU 00000000:0A:00.0: In use by another client
```

Hangs like this aren’t surprising. Hardware acceleration adds complexity, which translates to more failure points. But modern hardware stacks have evolved to handle GPU issues without a reboot. Windows’s Timeout Detection and Recovery (TDR) mechanism for example can ask the driver to reset a hung GPU. nvidia-smi does offer a reset option. But frustratingly, it doesn’t work if the GPUs are in use. That defeats the purpose of offering a reset option. I expect Nvidia to iron out these issues over time, especially if the root cause lies purely with software or firmware. But hitting this issue several times within such a short timespan isn’t a good sign, and it would be good if Nvidia could offer ways to recover from such issues without a system reboot.

Nvidia has made the chiplet jump without any major performance concessions. B200 is a straightforward successor to H100 and A100, and software doesn’t have to care about the multi-die setup. Nvidia’s multi-die strategy is conservative next to the 12-die monster that is AMD’s MI300X. MI300X retains some surprising advantages over Nvidia’s latest GPU, despite being an outgoing product. AMD’s incoming datacenter GPUs will likely retain those advantages, while catching up in areas that the B200 has pulled ahead in. The MI350X for example will bring VRAM bandwidth to 8 TB/s.

But Nvidia’s conservative approach is understandable. Their strength doesn’t lie in being able to build the biggest, baddest GPU on the block. Rather, Nvidia benefits from their CUDA software ecosystem. GPU compute code is typically written for Nvidia GPUs first. Non-Nvidia GPUs are an afterthought, if they’re thought about at all. Hardware isn’t useful without software to run on it, and quick ports won’t benefit from the same degree of optimization. Nvidia doesn’t need to match the MI300X or its successors in every area. They just have to be good enough to prevent people from filling in the metaphorical CUDA moat. Trying to build a monster to match the MI300X is risky, and Nvidia has every reason to avoid risk when they have a dominant market position.

Still, Nvidia’s strategy leaves AMD with an opportunity. AMD has everything to gain from being ambitious and taking risks. GPUs like the MI300X are impressive showcases of hardware engineering, and demonstrate AMD’s ability to take on ambitious design goals. It’ll be interesting to see whether Nvidia’s conservative hardware strategy and software strength will result in its continued dominance.

Again, a massive thank you goes to Verda (formerly DataCrunch), without which this article would not be possible! If you want to try out the B200 or test other NVIDIA GPUs yourself, Verda is offering free trial credits specifically for Chips & Cheese readers. Simply enter the code “CHEESE-B200” to redeem $50 worth of credit, and follow these instructions on how to redeem coupon credits.