2026-02-14 04:58:15

A new update to ChapterPod is out now, and it includes a couple of nice little features.

The first is the ability to import your own subtitle files, if you've already generated them. If you didn't know, ChapterPod can generate subtitles for you, and after it's done, it gives you a nice interface to add chapters via the transcript. I've found this to be very quick, and it's now my go-to way to lock in chapter timings on my shows. However, I always generate subtitles in Quick Subtitles first, so it's annoying to have to wait for ChapterPod to generate a new transcript when I already have one.

Now I can just drag in my existing SRT file, and ChapterPod will bring it into the app so I can add chapters against that transcript. It's super simple, but it works, saves me some time, and gives me a better transcript to work with.

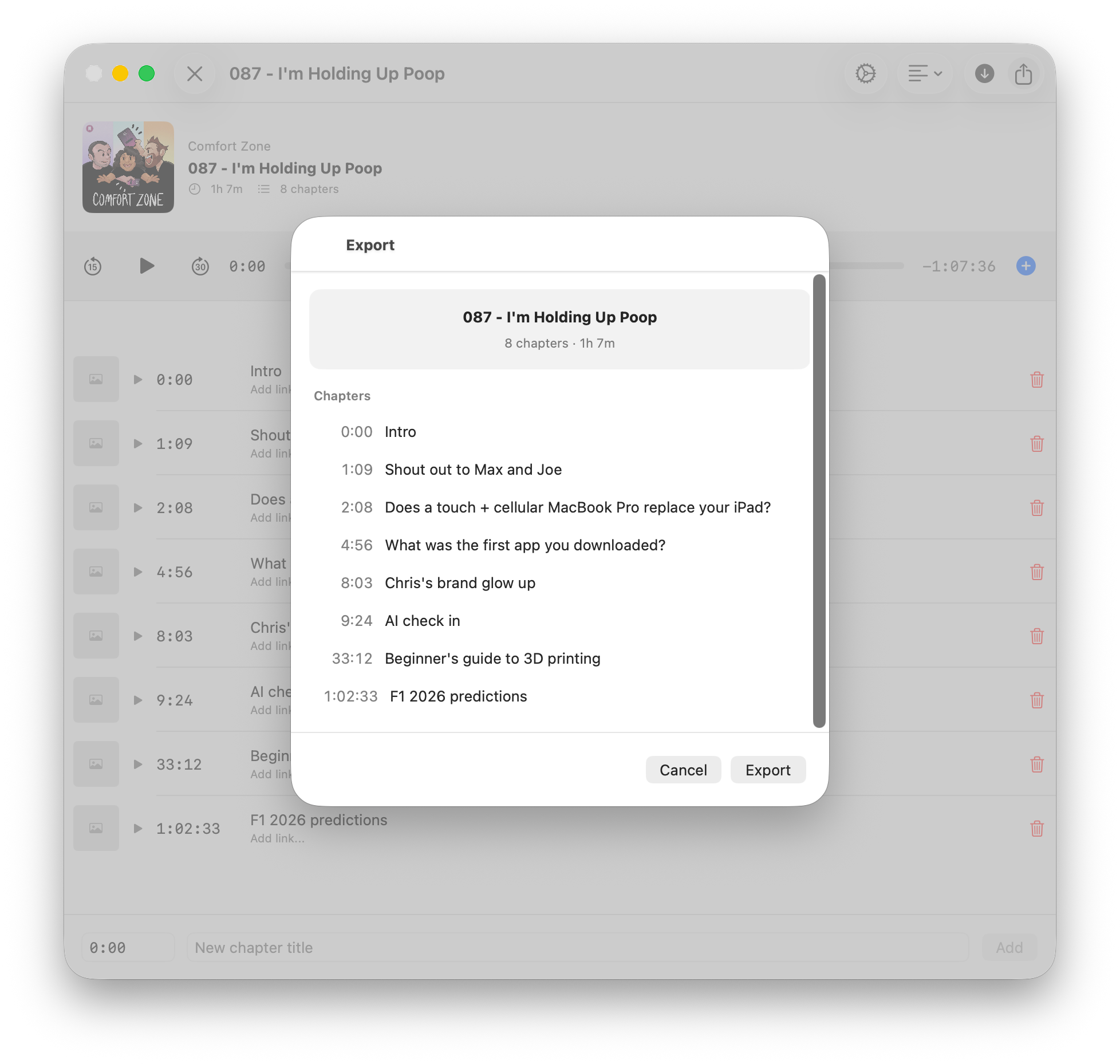

The second change is that when you go to export an episode, the app now shows a summary view that lets you double-check that your titles are correct, your timings are good, your links are there, and everything is as you would expect before saving the file.

There are a couple of other little things I wanted to call out in this update. First, the app icon got a refresh. This is specifically because some people in app review thought the old one was too close to Apple's Podcasts icon and could be confusing to users. That led to occasional build rejections, depending on who was looking at the build, which was strange. I also happen to think the new one looks nicer, so that's good.

Also, in one of the previous point updates, I added the ability to drag chapters around in the transcript view, which makes it very easy to fine-tune the location of those chapters.

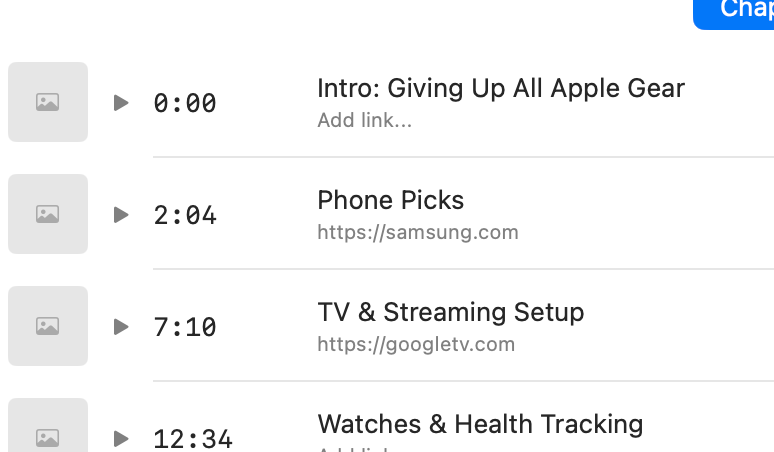

Finally, I got some feedback that links were kind of hidden away, especially on the Mac interface. In other apps, it's easier to see which chapters have links and check that those links are correct, rather than having to click into each chapter. Now you can see your chapter titles and links all at once.

That's ChapterPod 1.3. The update is available now for the iPhone, iPad, and Mac, and of course, it's a free update for all existing users.

2026-02-13 04:19:12

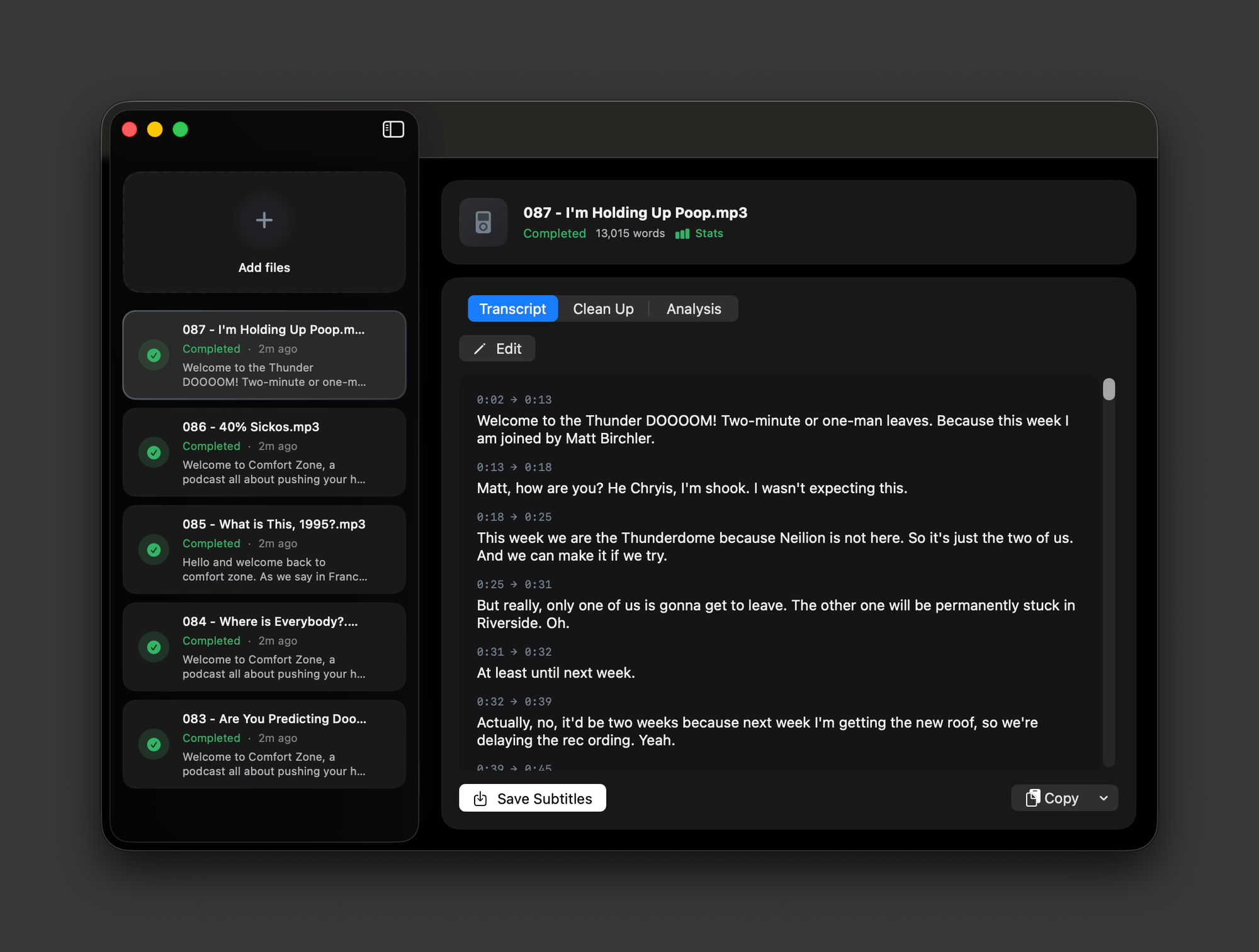

I'm thrilled to announce that Quick Subtitles 2.0 is now available for iPhone, iPad, and Mac. This update focuses on three key improvements, all of which make the app better than ever and, I believe, the best (and most cost-effective) way to generate subtitles for your audio and video projects.

First, Quick Subtitles is now a multi-document application, meaning you can work on multiple projects simultaneously. This change came directly from user feedback, and it also addresses a personal frustration I had. I often wanted to work on several projects in parallel, but Quick Subtitles previously only allowed one project at a time. Now, you can import your audio or video file, generate subtitles, and work on them at your own pace. You can delete projects when they're finished, or use the auto-delete feature to remove them automatically after a set time. Because of this new workflow, the dedicated batch processing page is no longer necessary, as you can simply drag multiple files into the UI and they will process in sequence.

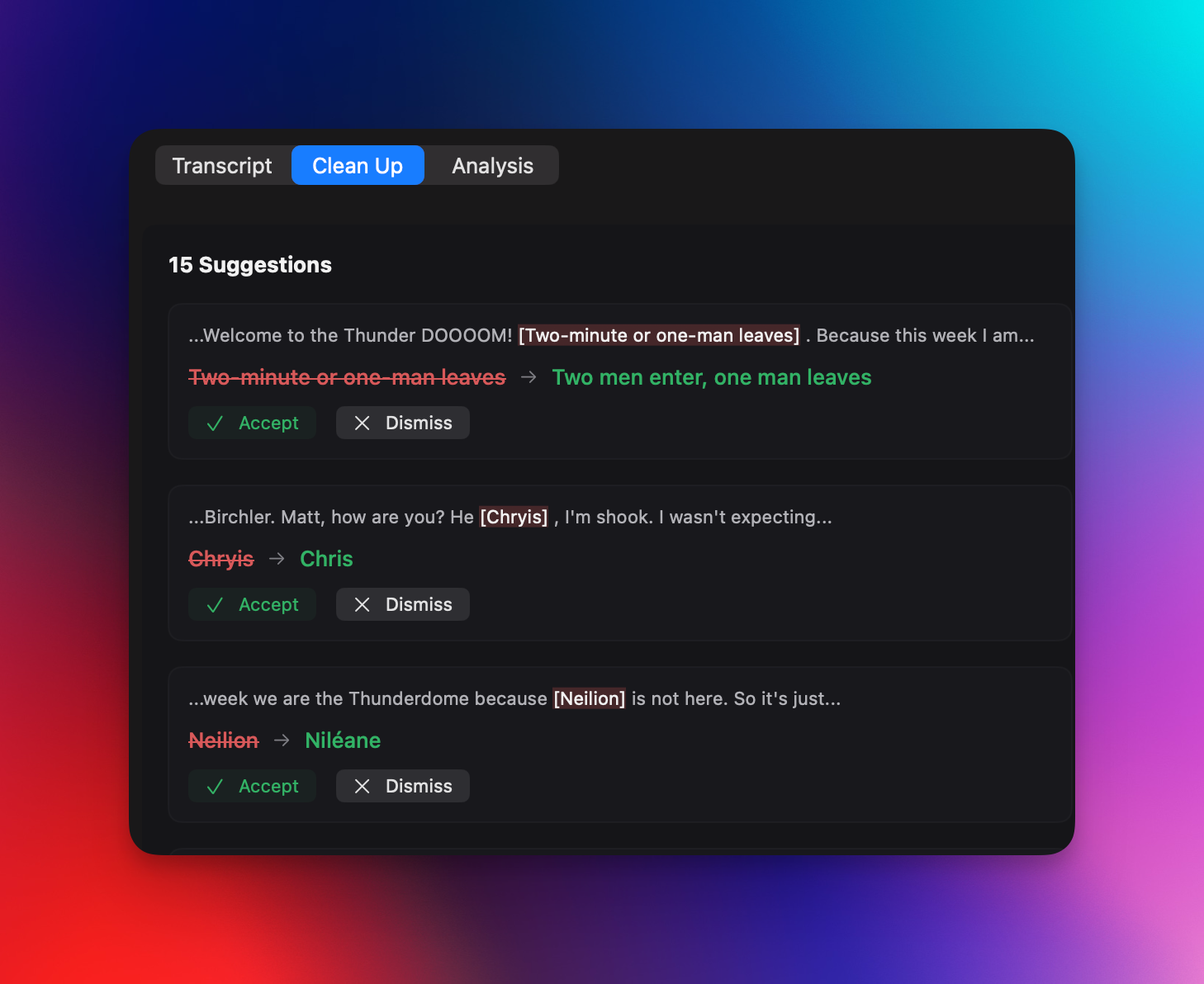

The second major update involves modifying the transcript, and this comes in two forms. After a transcript is created, the segments are now displayed in the UI rather than just raw text, and you can edit the transcript directly. So, if the transcription gets something slightly wrong, you can easily go in and fix it. For those using their own Gemini API key or a Quick Subtitles AI subscription, there's a new "clean up" feature. This analyzes the generated transcript, identifies potential errors, and gives you the option to correct them. This is presented in an intuitive UI that allows you to tell the LLM whether its suggestions are accurate or not. In my experience, it's correct over 90% of the time, and I usually approve all of its suggested changes. If you're confident in its accuracy, you can enable "YOLO mode" in the AI settings, which automatically applies all changes. While these transcription models are very impressive, and significantly better than previous options for generating subtitles, especially on-device, they aren't perfect. These new tools provide more freedom to address any issues that may arise.

The third key feature is a new model selection option. Previously, Quick Subtitles exclusively used Apple's on-device speech-to-text analyzer, which was fast and of high quality. Now, you have another option: NVIDIA's Parakeet V3. This model performs the same function as Apple's, but in some cases, it's more accurate, and in many cases, it's up to twice as fast at generating the transcript. The results I've seen have been genuinely shocking, especially considering how impressed I was with Apple's model just eight months ago. You can enable this in the app settings under the new model selector. Note that you'll need to perform a several-hundred-megabyte download to install it on your device. As always, this model is 100% locally processed, so your audio files never leave your device or use a cloud service. On a side note, the performance and quality of this model have impressed me so much that I'm considering incorporating it into QuickNotes to enhance that app as well.

If you're using the Mac app, there are a couple of niceties worth calling out. While a transcript is being generated, a progress bar appears on the app icon in the dock so you can keep an eye on things without switching back to the app (take that, Final Cut 😉).

You can also drag files directly onto the dock icon to start transcribing them immediately, which makes it easy to kick off a new project without even opening the app first.

Quick Subtitles 2.0 is available now for all Apple platforms and is a free upgrade for existing users. There's never been a better time to get started.

2026-02-12 21:00:00

Ted Lieu: Here's a video. Like former Prince Andrew, Donald Trump attended various parties with Jeffrey Epstein. I want to know: were there any underage girls at that party or at any party that Trump attended with Jeffrey Epstein.

Long pause

Pam Bondi: This is so ridiculous. And that they are trying to deflect from all the great things Donald Trump has done. There is no evidence that Donald Trump has committed a crime. Everyone knows that. This has been the most transparent presidency.

Absolutely wild stuff in this testimony. I'm not even saying Trump was guilty of everything, but it's not great when the AG is asked point blank if the sitting POTUS partied with underage girls, and the response is "um, let's change the subject."

2026-02-12 09:52:47

God damn, Jacob Geller is the best of us. He's an astounding essayist, and his work is consistently amazing.

2026-02-12 08:35:32

Jay Peters: Apple keeps hitting bumps with its overhauled Siri

Nearly a year ago, Apple delayed planned features for Siri that would let it understand your personal context and take action for you based on what’s on your screen. Apple had planned to launch those features with iOS 26.4, which is set to launch in March, but “testing uncovered fresh problems with the software,” according to Bloomberg. Apple has apparently told engineers to use iOS 26.5, scheduled for May, to test new Siri features instead.

Two things…

One, making a meaningfully better, LLM-enhanced Siri seems to be very tricky.

Two, I've seen a few "how can it be delayed if it was never publicly announced?" posts on socials, and I genuinely wonder about these folks. Set aside that these features were advertised as coming in 2024, and we can still mention that internal products are planned and delayed 👏🏻 all 👏🏻 the 👏🏻 time 👏🏻 at companies, regardless of whether they gave customers timelines. Stop acting like this is a clever take when stories like this come out.

2026-02-11 21:00:00

Aruna Ranganathan, Xingqi Maggie Ye: AI Doesn’t Reduce Work—It Intensifies It

In our in-progress research, we discovered that AI tools didn’t reduce work, they consistently intensified it.

Two quick things on this.

First, this resonates with me. Because there are things you can do quicker, suddenly you can do much more of them. That's just going to be a lot to think about and manage, regardless of whether you have an AI.

Second, I don't buy these suggestions that AI will be such a productivity gain that it will lead to four-day work weeks or, as people like Elon Musk have suggested, no one ever working again. As we've seen time and time and time again, as technology increases productivity, people are more likely to find ways to do more in the same amount of time rather than to take more time off.