2026-01-26 08:00:00

I was listening to a podcast recently where someone pointed out something curious: machines have been better at playing chess than humans for three decades now, and nobody cares. People still watch human chess players. If anything, chess is experiencing a renaissance.

I don’t know anyone who wants to watch robots play chess. But I know plenty of people who love watching humans play. I’m not a big chess player myself (heck, I could hardly win any game), but I catch myself watching matches on YouTube.

The reason is that the game is way more than moving pieces on a board. There’s a background story to every player and the match is a truly human experience where people don’t only compete against an opponent but also against themselves. That’s what makes it so interesting, not the fact that there’s an algorithm which has 3646 Elo. Humans can still contribute!

And I think it’s the same with writing.

Everyone wants to read personal thoughts from real human beings, but no one writes them anymore. What we get instead is slop, and that’s hardly a good read. The moment I notice I’m reading autogenerated text, I care less.

That’s why I keep writing. My personal blog is a weird mix of ramblings about reviewing code, the best programmers I know, and random thoughts.

But you know what? People are reading it and from time to time I get an email from someone who found one of my articles helpful.

Time and again, when I talk to friends, they share the same experience. My friend Thomas wrote his first article about his Experience with Atlassian and many people found it helpful and reached out. And even if no one does, writing is a joyful hobby and it helps me clear my thoughts.

I believe there has rarely been a better time to start writing.

2025-10-31 08:00:00

Over the years, I’ve gravitated toward two complementary ways to build robust software systems: building up and sanding down.

Building up means starting with a tiny core and gradually adding functionality. Sanding down means starting with a very rough idea and refining it over time.

Neither approach is inherently better; it’s almost a stylistic decision that depends on team dynamics and familiarity with the problem domain. On top of that, my thoughts on the topic are not particularly novel, but I wanted to summarize what I’ve learned over the years.

Building up focuses on creating a solid foundation first. I like to use it when working on systems I know well or when there is a clear specification I can refer to. For example, I use it for implementing protocols or when emulating hardware such as for my MOS 6502 emulator.

I prefer “building up” over “bottom-up” as the former evokes construction and upward growth. “Bottom-up” is more abstract and directional. Also “bottom-up” always felt like jargon while “building up” is more intuitive and very visual, so it could help communicate the idea to non-technical stakeholders.

There are a few rules I try to follow when building up:

When I collaborate with highly analytical people, this approach works well. People who have a background in formal methods or mathematics tend to think in terms of “building blocks” and proofs. I also found that functional programmers tend to prefer this approach.

In languages like Rust, the type system can help enforce invariants and make it easier to build up complex systems from simple components. Also, Rust’s trait system encourages composition, which aligns well with that line of thinking.
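To make that a bit more concrete, here is a minimal sketch of what I mean. The names `Port`, `InvalidPort`, `Describe`, and `Endpoint` are made up for illustration and don't come from any real crate. A small type guards one invariant, and a trait lets bigger components be composed from smaller ones.

```rust
/// A port number that is never zero.
/// The inner field is private, so code outside this module has to go
/// through `Port::new` and cannot skip the check.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Port(u16);

/// Error returned when the invariant would be violated.
#[derive(Debug, PartialEq, Eq)]
pub struct InvalidPort;

impl Port {
    /// The only public way to create a `Port`.
    pub fn new(value: u16) -> Result<Self, InvalidPort> {
        if value == 0 {
            Err(InvalidPort)
        } else {
            Ok(Port(value))
        }
    }

    pub fn get(self) -> u16 {
        self.0
    }
}

/// A tiny trait that serves as a building block.
pub trait Describe {
    fn describe(&self) -> String;
}

impl Describe for Port {
    fn describe(&self) -> String {
        format!("port {}", self.0)
    }
}

/// A larger component, composed from the smaller one.
pub struct Endpoint {
    pub host: String,
    pub port: Port,
}

impl Describe for Endpoint {
    fn describe(&self) -> String {
        // Reuses the implementation of the inner building block.
        format!("{} on {}", self.host, self.port.describe())
    }
}
```

Every layer can rely on the guarantees of the layer below it: an `Endpoint` never has to check whether its port is zero, because a `Port` that exists has already passed that check.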

The downside of the “build up” approach is that you end up spending a lot of time on the foundational layers before you can see any tangible results. It can be slow to get to an MVP this way. Some people also find this approach too rigid and inflexible, as it can be hard to pivot or change direction once you’ve committed to a certain architecture.

For example, say you’re building a web framework. There are a ton of questions at the beginning of the project:

In a building-up approach, you would start by answering these questions and designing the core abstractions first. Foundational components like the request and response types, the router, and the middleware system are the backbone of the framework and have to be rock solid.

Only after you’ve pinned down the core data structures and their interactions would you move on to building the public API. This can lead to a very robust and well-designed system, but it can also take a long time to get there.

For instance, here is the Request struct from the popular http crate:

```rust
#[derive(Clone)]
pub struct Request<T> {
    head: Parts,
    body: T,
}

/// Component parts of an HTTP `Request`
///
/// The HTTP request head consists of a method, uri, version, and a set of
/// header fields.
#[derive(Clone)]
pub struct Parts {
    /// The request's method
    pub method: Method,

    /// The request's URI
    pub uri: Uri,

    /// The request's version
    pub version: Version,

    /// The request's headers
    pub headers: HeaderMap<HeaderValue>,

    /// The request's extensions
    pub extensions: Extensions,

    _priv: (),
}
```

There are quite a few clever design decisions in this short piece of code:

- The Request struct is generic over the body type T, allowing for flexibility in how the body is represented (e.g., as a byte stream, a string, etc.).
- The Parts struct is separated from the Request struct, allowing for easy access to the request metadata without needing to deal with the body.
- Extensions can be used to store extra data derived from the underlying protocol.
- The _priv: () field has a zero-sized type and is used to prevent external code from constructing Parts directly. It enforces the use of the provided constructors and ensures that the invariants of the Parts struct are maintained.

With the exception of extensions, this design has stood the test of time. It has remained largely unchanged since the very first version in 2017.
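If the private-field trick is new to you, here is a stripped-down sketch of the same idea. It is not the code from the http crate: the `message` module, the `String` fields, and the constructor are simplified stand-ins I made up to show how the pieces fit together.

```rust
mod message {
    /// Request metadata with public fields plus one private marker field.
    #[derive(Debug, Clone, PartialEq)]
    pub struct Parts {
        pub method: String,
        pub path: String,
        // Private zero-sized field. Code outside this module cannot write
        // a `Parts { .. }` struct literal, so it has to call `Parts::new`.
        _priv: (),
    }

    impl Parts {
        /// The constructor is the one place where values get normalized.
        pub fn new(method: &str, path: &str) -> Self {
            Parts {
                method: method.to_uppercase(),
                path: path.to_string(),
                _priv: (),
            }
        }
    }

    /// Generic over the body type, like the struct discussed above.
    pub struct Request<T> {
        head: Parts,
        body: T,
    }

    impl<T> Request<T> {
        pub fn new(head: Parts, body: T) -> Self {
            Request { head, body }
        }

        /// Metadata can be inspected without touching the body.
        pub fn head(&self) -> &Parts {
            &self.head
        }

        /// Splits the request into metadata and body.
        pub fn into_parts(self) -> (Parts, T) {
            (self.head, self.body)
        }

        /// Transforms the body while keeping the metadata as it is.
        pub fn map<U>(self, f: impl FnOnce(T) -> U) -> Request<U> {
            Request {
                head: self.head,
                body: f(self.body),
            }
        }
    }
}
```

Outside of `message`, writing `message::Parts { method, path, _priv: () }` is a compile error because `_priv` is private. The marker costs nothing at runtime, since `()` has a size of zero bytes.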

The alternative approach, which I found to work equally well, is “sanding down.” In this approach, you start with a rough prototype (or vertical slice) and refine it over time. You “sand down” the rough edges over and over again, until you are happy with the result. It feels a bit like woodworking, where you start with a rough piece of wood and gradually refine it into a work of art. (Not that I have any idea what woodworking is like, but I imagine it’s something like that.)

Crucially, this is similar but not identical to prototyping. The difference is that you don’t plan on throwing away the code you write. Instead, you’re trying to exploit the iterative nature of the problem and purposefully work on “drafts” until you get to the final version. At any point in time you can stop and ship the current version if needed.

I find that this approach works well when working on creative projects which require experimentation and quick iteration. People with a background in game development or scripting languages tend to prefer this approach, as they are used to working in a more exploratory way.

When using this approach, I try to follow these rules:

This approach makes it easy to throw code away and try something new. I found that it can be frustrating for people who like to plan ahead and are very organized and methodical. The “chaos” seems to be off-putting for some people.

As an example, say you’re writing a game in Rust. You might want to tweak all aspects of the game and quickly iterate on the gameplay mechanics until they feel “just right.”

In order to do so, you might start with a skeleton of the game loop and nothing else. Then you add a player character that can move around the screen. You tweak the jump height and movement speed until it feels good. There is very little abstraction between you and the game logic at this point. You might have a lot of duplicated code and hardcoded values, but that’s okay for now. Once the core gameplay mechanics are pinned down, you can start refactoring the code.
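Here is a rough sketch of what one such sanding pass could look like. It is not taken from my game; `update_draft`, `Tuning`, and this `Player` are hypothetical names, and the physics is deliberately naive.

```rust
/// First draft: the movement speed is hardcoded right where it is used.
fn update_draft(x: f32, dt: f32) -> f32 {
    x + 200.0 * dt // 200.0 = movement speed in pixels per second
}

/// After one sanding pass: all values I keep tweaking live in one place.
#[derive(Debug, Clone, Copy)]
struct Tuning {
    move_speed: f32,
    jump_velocity: f32,
    gravity: f32,
}

#[derive(Debug, Default, Clone, Copy, PartialEq)]
struct Player {
    x: f32,
    y: f32,  // height above the ground, 0.0 means standing
    vy: f32, // vertical velocity
}

impl Player {
    /// `direction` is -1.0, 0.0, or 1.0; `dt` is the frame time in seconds.
    fn update(&mut self, direction: f32, jump: bool, tuning: &Tuning, dt: f32) {
        self.x += direction * tuning.move_speed * dt;

        // Only allow a jump while standing on the ground.
        if jump && self.y == 0.0 {
            self.vy = tuning.jump_velocity;
        }

        // Apply gravity and never fall below the ground.
        self.vy -= tuning.gravity * dt;
        self.y = (self.y + self.vy * dt).max(0.0);
        if self.y == 0.0 {
            self.vy = 0.0;
        }
    }
}
```

The behavior stays the same as in the draft, but tweaking the jump height is now a one-line change in `Tuning` instead of a hunt for magic numbers.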

I think Rust can get in the way if you use Bevy or other frameworks early on in the game design process. The entity component system can feel quite heavy and hinder rapid iteration. (At least that’s how I felt when I tried Bevy last time.)

I had a much better experience creating my own window and rendering loop using macroquad. Yes, the entire code was in one file and no, there were no tests. There also wasn’t any architecture to speak of.

And yet… working on the game felt amazing! I knew that I could always refactor the code later, but I wanted to stay in the moment and get the gameplay right first.

Here’s my game loop, which was extremely imperative and didn’t require learning a big framework to get started:

```rust
#[macroquad::main("Game")]
async fn main() {
    let mut player = Player::new();
    let input_handler = InputHandler::new();

    clear_background(BLACK);

    loop {
        // Get inputs - only once per frame
        let movement = input_handler.get_movement();
        let action = input_handler.get_action();

        // Update player with both movement and action inputs
        player.update(&movement, &action, get_frame_time());

        // Draw
        player.draw();

        next_frame().await
    }
}
```

You don’t have to be a Rust expert to understand this code.

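In every loop iteration, I simply:

- read the inputs once,
- update the player with the movement and action inputs,
- draw the player,
- wait for the next frame.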

It’s a very typical design for that type of work.

If I wanted to, I could now sand down the code and refactor it into a more modular design until it’s production-ready. I could introduce a “listener/callback” system to separate input handling from player logic or a scene graph to manage multiple game objects or an ontology system to manage game entities and their components. But why bother? For now, I care about the game mechanics, not the architecture.

Both variants can lead to correct, maintainable, and efficient systems. There is no better or worse approach.

I found that most people gravitate toward one approach or the other. However, it helps to be familiar with both approaches and know when to apply which mode. Choose wisely, because switching between the two approaches is quite tricky as you start from different ends of the problem.

2025-09-26 08:00:00

Since my professional writing on Rust has moved to the corrode blog, I can be a bit more casual on here and share some of my personal thoughts on the recent debate around using Rust in established software.

The two projects in question are git (kernel thread, Hacker News Discussion) and the recently rewritten coreutils in Rust, which will ship with Ubuntu 25.10 Questing Quokka.

What prompted me to write this post is a discussion on Twitter and a blog post titled “Are We Chasing Language Hype Over Solving Real Problems?”.

In both cases, the authors speculate about the motivations behind choosing Rust, and as someone who helps teams use Rust in production, I find those takes… hilarious.

Back when I started corrode, people always mentioned that Rust wasn’t used for anything serious. I knew about the production use cases from client work, but there was very little public information out there. As a consequence, we started the ‘Rust in Production’ podcast to show that companies indeed choose Rust for real-world applications. However, people don’t like to be proven wrong, so that conspiracy theory has now morphed into “Big Rust” trying to take over the world. 😆

Let’s look at some of the claims made in the blog post and Twitter thread and see how these could be debunked pretty easily.

“GNU Core Utils has basically never had any major security vulnerabilities in its entire existence”

If only that were true.

A quick CVE search shows multiple security issues over the decades, including buffer overflows and path traversal vulnerabilities. Just a few months ago, a heap buffer under-read was found in sort, which would cause a leak of sensitive data if an attacker sends a specially crafted input stream.

The GNU coreutils are one of the most widely used software packages worldwide with billions of installations and hundreds (thousands?) of developers looking at the code. Yes, vulnerabilities still happen. No, it is not easy to write correct, secure C code. No, not even if you’re extra careful and disciplined.

ls is five thousand lines long. (Check out the source code). That’s a lot of code for printing file names and metadata and a big attack surface!

“Rust can only ever match C performance at best and is usually slower”

Work by Trifecta shows that it is possible to write Rust code that is faster than C in some cases. Especially in concurrent workloads and with memory safety guarantees. If writing safe C code is too hard, try writing safe concurrent C code!

That’s where Rust shines.

You can achieve ridiculous levels of parallelization without worrying about security issues.

And no, you don’t need to litter your code with unsafe blocks.

Check out Steve Klabnik’s recent talk about Oxide where he shows that their bootloader and their preemptive multitasking OS, hubris – both pretty core systems code – only contain 5% of unsafe code each.

You can write large codebases in Rust with no unsafe code at all.
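As a small illustration, here is a sketch that splits a sum across several threads using only the standard library and no unsafe code. The function name `parallel_sum` and the chunking strategy are my own choices for this example.

```rust
use std::thread;

/// Sums `values` using up to `workers` threads.
fn parallel_sum(values: &[u64], workers: usize) -> u64 {
    if values.is_empty() {
        return 0;
    }

    // Round up so that all values are covered by at most `workers` chunks.
    let workers = workers.max(1);
    let chunk_size = (values.len() + workers - 1) / workers;

    // Scoped threads may borrow `values` directly. The compiler checks
    // that every thread is done before the borrow ends.
    thread::scope(|scope| {
        let handles: Vec<_> = values
            .chunks(chunk_size)
            .map(|chunk| scope.spawn(move || chunk.iter().sum::<u64>()))
            .collect();

        handles
            .into_iter()
            .map(|handle| handle.join().expect("worker thread panicked"))
            .sum::<u64>()
    })
}
```

Each thread only reads its own slice, and the results come back through `join`. If I tried to mutate shared state from two threads without synchronization here, the code would not compile.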

As a trivial example, I sat down to rewrite cat in Rust one day.

The result was 3x faster than GNU cat on my machine.

You can read the post here.

All I did was use splice to copy data, which saves one memory copy.

Performance is not only dependent on the language but on the algorithms and system calls you use.
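To be clear, the following is not the code from that post, which called splice directly. It is a minimal sketch of a `cat`-like copy built on `std::io::copy`. According to the standard library documentation, on Linux this function uses `copy_file_range`, `sendfile`, or `splice` to move data directly between file descriptors if possible, and falls back to an ordinary buffered copy otherwise.

```rust
use std::io::{self, Read, Write};

/// Copies everything from `input` to `output` and returns the number
/// of bytes that were copied.
fn cat<R: Read, W: Write>(mut input: R, mut output: W) -> io::Result<u64> {
    // Whether a kernel-side fast path applies depends on the platform
    // and on the concrete reader and writer types.
    let copied = io::copy(&mut input, &mut output)?;
    output.flush()?;
    Ok(copied)
}
```

Calling `cat(std::fs::File::open(path)?, std::io::stdout().lock())` gives the standard library the chance to pick a faster system call. With in-memory buffers, it simply copies through userspace.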

If you play into Rust’s strengths, you can match C’s performance. At least there is no technical limitation that would prevent this. And I personally feel more willing to aggressively optimize my code in Rust, because I don’t have to worry about introducing memory safety bugs. It feels like I’m not alone.

“We reward novelty over necessity in the industry”

This ignores that most successful companies (Google, Meta, etc.) primarily use battle-tested tech stacks, not bleeding-edge languages. These companies have massive codebases and cannot afford to rewrite everything in the latest trendy language. But they see the value of using Rust for new components and gradually rewriting existing ones. That’s because 70% of security vulnerabilities are memory safety issues and these issues are extremely costly to fix. If these companies could avoid switching to a new language to do so, they would.

Besides, Rust is not exactly new anymore. Rust 1.0 was released 10+ years ago! The industry is moving slowly, but not that slowly. You’d be surprised to find out how many established companies use Rust without even announcing it or thinking of it as “novelty”.

“100% orchestrated”

Multiple people in the Twitter thread were convinced this is some coordinated master plan rather than developers choosing better tools, while the very maintainers of git and coreutils openly discussed their motivations in public forums for everyone to see.

“They’re trying to replace/erase C. It’s not going to happen”

They are right. C is not going away anytime soon. There is just so much C/C++ code out there in the wild, and rewriting everything in Rust is not feasible. The good news is that you can incrementally rewrite C/C++ code in Rust, one component at a time. That’s what the git maintainers are planning, by using Rust for new components.

“They’re rewriting software with a GNU license into software with an MIT license”

Even if you use Rust, you can still license your code under GPL or any other license you want. Git itself remains GPL, and many Rust projects use various licenses, not only MIT. The license fear is often brought up by people who don’t understand how open source licensing works or it might just be FUD.

MIT code is still compatible with GPL code and you can use both of them in the same project without issues. It’s just that the end product (the thing you deliver to your users, i.e. binary executables) is now covered by GPL because of its virality.

“It’s just developers being bored and wanting to work with shiny new languages”

The aging maintainers of C projects are retiring, and there are fewer new developers willing to pick up C just to maintain legacy code in their free time. C developers are essentially going extinct. New developers want to work with modern languages and who can blame them? Or would you want to maintain a 40-year-old COBOL codebase or an old Perl script? We have to move on.

“Why not build something completely new instead of rewriting existing tools?”

It’s not that easy. The code is only part of the story. The other part is the ecosystem, the tooling, the integrations, the documentation, and the user base. All of that takes years to build. Users don’t want to change their workflows, so they want drop-in replacements. Proven interfaces and APIs, no matter how crude and old-fashioned, have a lot of value.

But yes, new tools are being built in Rust as well.

“They don’t know how to actually solve problems, just chase trends”

Talk about dismissing the technical expertise of maintainers who’ve been working on these projects for years or decades and understand the pain points better than anyone.

If they were just chasing trends, they wouldn’t be maintaining these projects in the first place! These people are some of the most experienced developers in the world, and yet people want to tell them how to do their jobs.

“It’s part of the woke mind virus infecting software”

Imagine thinking memory safety is a political conspiracy. Apparently preventing buffer overflows is now an ideological stance. The closest thing to this is the White House’s technical report, which recommends memory-safe languages for government software. And mandating memory safety for software receiving federal funding is a pretty reasonable take.

I could go on, but I think you get my point.

People who give Rust an honest chance know that it offers advantages in terms of memory safety, concurrency, and maintainability. It’s not about chasing hype but about long-term investment in software quality. As more companies successfully adopt Rust every day, it increasingly becomes the default choice for many new projects.

If you’re interested in learning more about using Rust in production, check out my other blog or listen to the Rust in Production podcast.

Oh, and if you know someone who posts such takes, stop arguing and send them a link to this post.

2025-08-06 08:00:00

I’ve been reviewing other people’s code for a while now, more than two decades to be precise. Nowadays, I spend around 50-70% of my time reviewing code in some form or another. It’s what I get paid to do, alongside systems design.

Over time, I learned a thing or two about how to review code effectively. I focus on different things now than when I started.

Bad reviews are narrow in scope. They focus on syntax, style, and minor issues instead of maintainability and extensibility.

Good reviews look at not only the changes, but also what problems the changes solve, what future issues might arise, and how a change fits into the overall design of the system.

I like to look at the lines that weren’t changed. They often tell the true story.

For example, often people forget to update a related section of the codebase or the docs. This can lead to bugs, confusion, breaking changes, or security issues.

Be thorough and look at all call-sites of the new code. Have they been correctly updated? Are the tests still testing the right thing? Are the changes in the right place?

Here’s a cheat sheet of questions I ask myself when reviewing code:

These questions have more to do with systems design than with the changes themselves. Don’t neglect the bigger picture because systems become brittle if you accept bad changes.

Code isn’t written in isolation. The role of more experienced developers is to reduce operational friction and handle risk management for the project. The documentation, the tests, and the data types are as important as the code itself.

Always keep an eye out for better abstractions as the code evolves.

I spend a big chunk of my time thinking about good names when reviewing code.

Naming things is hard, which is why it’s so important to get it right. Often, it’s the most important part of a code review.

It’s also the most subjective part, which makes it tedious because it’s hard to distinguish between nitpicking and important naming decisions.

Names encapsulate concepts and serve as “building blocks” in your code. Bad names are a code smell that hints at problems running deep. They increase cognitive overhead by one or more orders of magnitude.

For example, say we have a struct that represents a player’s stats in a game:

```rust
struct Player {
    username: String,
    score: i32,
    level: i32,
}
```

I often see code like this:

```rust
// Bad: using temporary/arbitrary names creates confusion
fn update_player_stats(player: Player, bonus_points: i32, level_up: bool) -> Player {
    let usr = player.username.trim().to_lowercase();
    let updated_score = player.score + bonus_points;
    let l = if level_up { player.level + 1 } else { player.level };
    let l2 = if l > 100 { 100 } else { l };

    Player {
        username: usr,
        score: updated_score,
        level: l2,
    }
}
```

This code is hard to read and understand.

What are usr, updated_score, and l2? Their purpose is not conveyed clearly.

This builds up cognitive load and makes it harder to follow the logic.

That’s why I always think of the most fitting names for variables, even if it feels like I’m being pedantic.

```rust
// Good: meaningful names that describe the transformation at each step
fn update_player_stats(player: Player, bonus_points: i32, level_up: bool) -> Player {
    // Each variable name describes what the value represents
    let username = player.username.trim().to_lowercase();
    let score = player.score + bonus_points;

    // Use shadowed variables to clarify intent
    let level = if level_up { player.level + 1 } else { player.level };
    let level = if level > 100 { 100 } else { level };

    // If done correctly, the final variable names
    // often match the struct's field names
    Player {
        username,
        score,
        level,
    }
}
```

Good names become even more critical in larger codebases where values are declared far away from where they’re used and where many developers have to have a shared understanding of the problem domain.

I have to decline changes all the time and it’s never easy. After all someone put in a lot of effort and they want to see their work accepted.

Avoid sugarcoating your decision or trying to be nice. Be objective, explain your reasoning and provide better alternatives. Don’t dwell on it, but focus on the next steps.

It’s better to say no than to accept something that isn’t right and will cause problems down the road. In the future it will get even harder to deny a change once you’ve set a precedent.

That’s the purpose of the review process: there is no guarantee that the code will be accepted.

In open source, many people will contribute code that doesn’t meet your standards. There needs to be someone who says “no” and this is a very unpopular job (ask any open source maintainer). However, great projects need gatekeepers because the alternative is subpar code and eventually unmaintainable projects.

At times, people will say “let’s just merge this and fix it later.” I think that’s a slippery slope. It can lead to tech debt and additional work later on. It’s hard to stand your ground, but it’s important to do so. If you see something that isn’t right, speak up.

When it gets hard, remember that you’re not rejecting the person, you’re rejecting the code. Remind people that you appreciate their effort and that you want to help them improve.

Even though you’ll develop an intuition for what to focus on in reviews, you should still back it up with facts. If you find yourself saying “no” to the same thing over and over again, consider writing a style guide or a set of guidelines for your team.

Be gracious but decisive; it’s just code.

Code reviews aren’t just about code; people matter too. Building a good relationship with your coworkers is important.

I make it a point to do the first couple of reviews together in a pair programming session if possible.

This way, you can learn from each other’s communication style. Building trust and getting to know each other works well this way. You should repeat that process later if you notice a communication breakdown or misunderstanding.

“Can you take a quick look at this PR? I want to merge it today.” There often is an expectation that code reviews are a one-time thing. That’s not how it works. Instead, code reviews are an iterative process. Multiple iterations should be expected to get the code right.

In my first iteration, I focus on the big picture and the overall design. Once I’m done with that, I go into the details.

The goal shouldn’t be to merge as quickly as possible, but to accept code that is of high quality. Otherwise, what’s the point of a code review in the first place? That’s a mindset shift that’s important to make.

Reviews aren’t exclusively about pointing out flaws; they’re also about creating a shared understanding of the code within the team. I often learn the most about writing better code by reviewing other people’s code. I’ve also gotten excellent feedback on my own code from great engineers.

These are invaluable “aha moments” that help you grow as a developer. Experts spent their valuable time reviewing my code, and I learned a lot from it. I think everybody should experience that once in their career.

From time to time, you’ll disagree with the author. Being respectful and constructive is important. Avoid personal attacks or condescending language. Don’t say “this is wrong.” Instead, say “I would do it this way.” If people are hesitant, ask a few questions to understand their reasoning.

These “Socratic questions”1 help the author think about their decisions and can lead to better designs.

People should enjoy receiving your feedback. If not, revisit your review style. Only add comments that you yourself would be happy to receive.

From time to time, I like to add positive comments like “I like this” or “this is a great idea.” Keeping the author motivated and showing that you appreciate their work goes a long way.

It’s easy to miss subtle details when you look at code for too long. Having a local copy of the code that I can play with helps me a lot.

I try to run the code, the tests, and the linters if I can. Checking out the branch, moving things around, breaking things, and trying to understand how it works is part of my review process.

User-facing changes like UI changes or error messages are often easier to spot when you run the code and try to break it.

After that, I revert the changes and, if needed, write down my findings in a comment. This approach often leads to a better understanding of the change.

Code reviews are often a bottleneck in the development process, because they can’t be fully automated: there’s a human in the loop who has to look at the code and provide feedback.

But waiting for colleagues to review your code can be frustrating. Avoid being the person who keeps others waiting.

Sometimes you won’t have time to review code and that is okay. If you can’t review the code in a reasonable time, let the author know.

I’m still working on this, but I try to be more proactive about my availability and set clear expectations.

Code reviews are my favorite way to learn new things. I learn new techniques, patterns, new libraries, but most importantly, how other people approach problems.

I try to learn one new thing with each review. It’s not wasted time if it helps the team improve and grow as a whole.

Formatters exist for a reason: leave whitespace and formatting to the tools. Save your energy for issues that truly matter.

Focus on logic, design, maintainability, and correctness. Avoid subjective preferences that don’t impact code quality.

Ask yourself: Does this affect functionality or would it confuse future developers? If not, let it go.

When reviewing code, focus on the reasoning behind the changes. This has a much better chance of success than pointing out flaws without any reasoning.

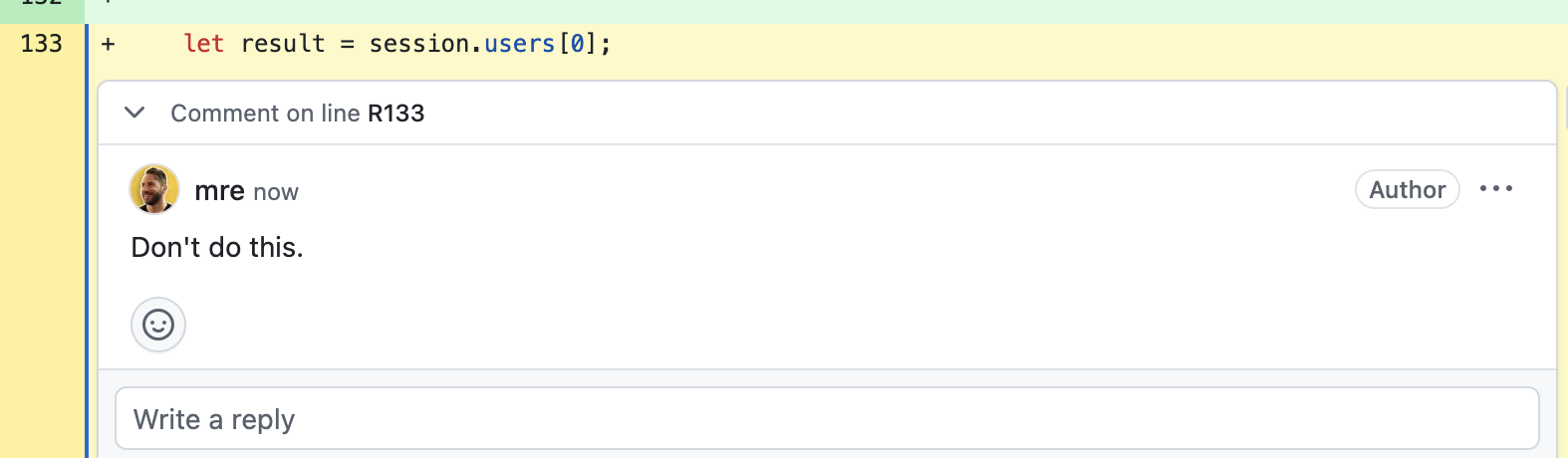

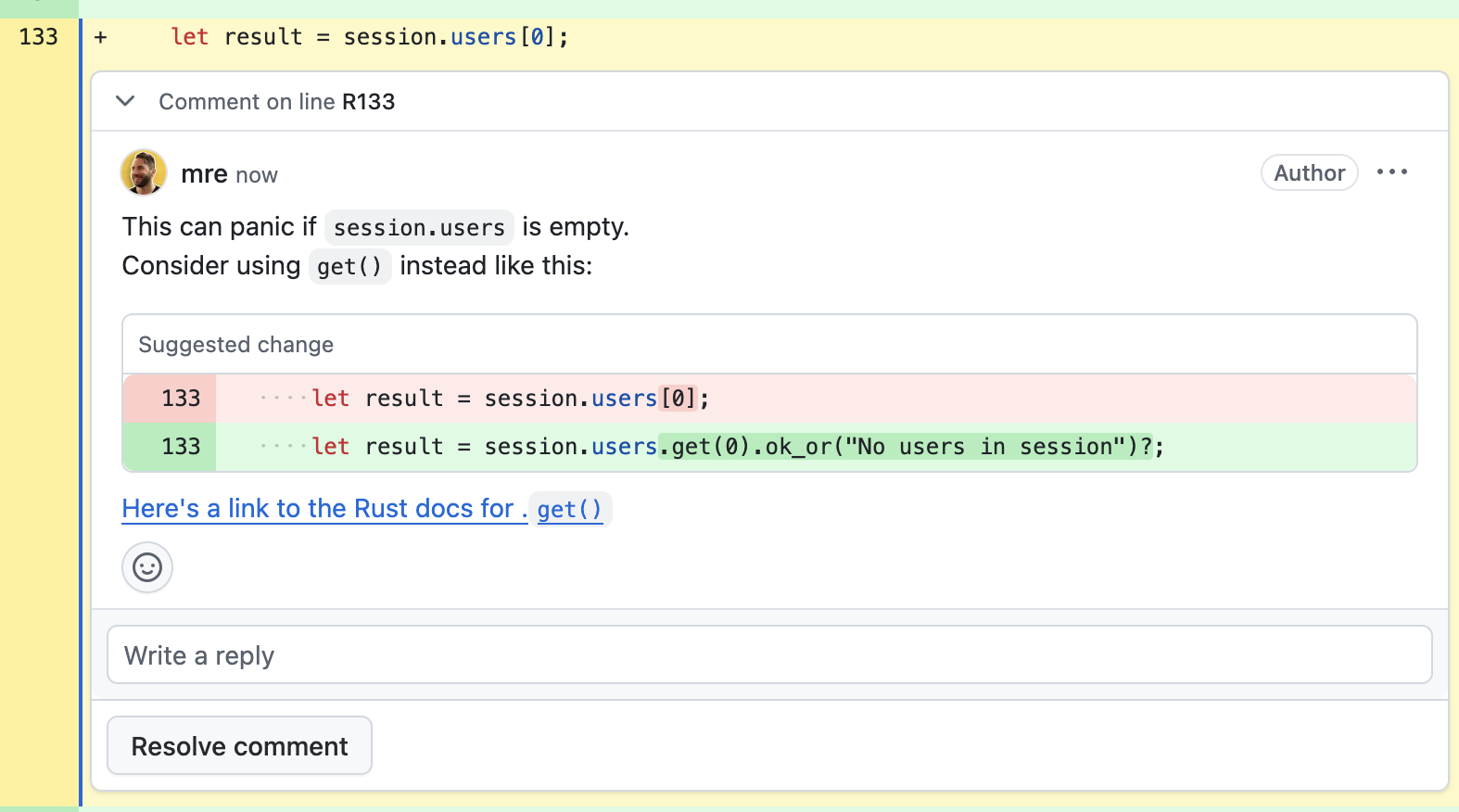

Consider the following two code review comments. The first one is unhelpful and dismissive.

The second suggests an alternative, links to the documentation, and explains why the change could lead to problems down the road.

Which one would you prefer to receive?

I realize that this requires more time and effort, but it’s worth it! Most of the time, the author will appreciate it and avoid making the same mistake in the future. There is a compound effect from helpful reviews over time.

Asking is better than assuming. If you don’t understand something, ask the author to explain it. Chances are, you’re not the only one who doesn’t get it.

Often, the author will be happy to explain their reasoning. This can lead to a better understanding of the code and the system as a whole. It can also help the author see things from a different perspective. Perhaps they’ll learn that their assumptions were wrong or that the system isn’t self-explanatory. Perhaps there’s missing documentation?

Asking great questions is a superpower.

From time to time, ask the author for feedback on your feedback:

Basically, you ask them to review your review process, heh.

Learning how to review code is a skill that needs constant practice and refinement. Good luck finding your own style.

1. Thanks for pointing out that term to me, Lucca!

2025-06-23 08:00:00

One of the most repeated pieces of advice throughout my career in software has been “don’t repeat yourself,” also known as the DRY principle. For the longest time, I took that at face value, never questioning its validity.

That was until I saw actual experts write code: they copy code all the time1. I realized that repeating yourself has a few great benefits.

The common wisdom is that if you repeat yourself, you have to fix the same bug in multiple places, but if you have a shared abstraction, you only have to fix it once.

Another reason why we avoid repetition is that it makes us feel clever. “Look, I know all of these smart ways to avoid repetition! I know how to use interfaces, generics, higher-order functions, and inheritance!”

Both reasons are misguided. There are many benefits of repeating yourself that might get us closer to our goals in the long run.

When you’re writing code, you want to keep the momentum going to get into a flow state. If you constantly pause to design the perfect abstraction, it’s easy to lose momentum.

Instead, if you allow yourself to copy-paste code, you keep your train of thought going and work on the problem at hand. You don’t introduce another problem of trying to find the right abstraction at the same time.

It’s often easier to copy existing code and modify it until it becomes too much of a burden, at which point you can go and refactor it.

I would argue that “writing mode” and “refactoring mode” are two different modes of programming. During writing mode, you want to focus on getting the idea down and stop your inner critic, which keeps telling you that your code sucks. During refactoring mode, you take the opposite role: that of the critic. You look for ways to improve the code by finding the right abstractions, removing duplication, and improving readability.

Keep these two modes separate. Don’t try to do both at the same time.2

When you start to write code, you don’t know the right abstraction just yet. But if you copy code, the right abstraction reveals itself; it’s too tedious to copy the same code over and over again, at which point you start to look for ways to abstract it away. For me, this typically happens after the first copy of the same code, but I try to resist the urge until the 2nd or 3rd copy.

If you start too early, you might end up with a bad abstraction that doesn’t fit the problem. You know it’s wrong because it feels clunky. Some typical symptoms include:

render_pdf_file instead of generate_invoice

We easily settle for the first abstraction that comes to mind, but most often, it’s not the right one. And removing the wrong abstraction is hard work, because now the data flow depends on it.

We also tend to fall in love with our own abstractions because they took time and effort to create. This makes us reluctant to discard them even when they no longer fit the problem—it’s a sunk cost fallacy.

It gets worse when other programmers start to depend on it, too. Then you have to be careful about changing it, because it might break other parts of the codebase. Once you introduce an abstraction, you have to work with it for a long time, sometimes forever.

If you had a copy of the code instead, you could just change it in one place without worrying about breaking anything else.

Duplication is far cheaper than the wrong abstraction

—Sandi Metz, The Wrong Abstraction

Better to wait until the last moment to settle on the abstraction, when you have a solid understanding of the problem space.3

Abstraction reduces code duplication, but it comes at a cost.

Abstractions can make code harder to read, understand, and maintain because you have to jump between multiple levels of indirection to understand what the code does. The abstraction might live in different files, modules, or libraries.

The cost of traversing these layers is high. An expert programmer might be able to keep a few levels of abstraction in their head, but we all have a limited context window (which depends on familiarity with the codebase).

When you copy code, you can keep all the logic in one place. You can just read the whole thing and understand what it does.

Sometimes, code looks similar but serves different purposes.

For example, consider two pieces of code that calculate a sum by iterating over a collection of items.

total = 0
for item in shopping_cart:
    total += item.price * item.quantity

And elsewhere in the code, we have

total = 0
for item in package_items:
    total += item.weight * item.rate

In both cases, we iterate over a collection and calculate a total. You might be tempted to introduce a helper function, but the two calculations are very different.
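To make the temptation concrete, here is a sketch of the kind of helper one might reach for. The `Item` dataclass and the name `sum_of_products` are hypothetical and only exist for this illustration.

```python
from dataclasses import dataclass
from operator import attrgetter


@dataclass
class Item:
    # Hypothetical item type, used only for this illustration.
    price: float = 0.0
    quantity: int = 0
    weight: float = 0.0
    rate: float = 0.0


def sum_of_products(items, left, right):
    """Generic helper: sum the product of two named attributes per item."""
    get_left, get_right = attrgetter(left), attrgetter(right)
    return sum(get_left(item) * get_right(item) for item in items)


shopping_cart = [Item(price=2.5, quantity=2), Item(price=1.0, quantity=3)]
package_items = [Item(weight=2.0, rate=1.5)]

cart_total = sum_of_products(shopping_cart, "price", "quantity")
shipping_total = sum_of_products(package_items, "weight", "rate")
```

The call sites still say what gets multiplied, but no longer why. The helper hides the fact that one total is money and the other is a shipping charge, and any rule that applies to only one of them now has nowhere obvious to live.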

After a few iterations, these two pieces of code might evolve in different directions:

def calculate_total_price(shopping_cart):
    if not shopping_cart:
        raise ValueError("Shopping cart cannot be empty")

    total = 0.0
    for item in shopping_cart:
        # Round for financial precision
        total += round(item.price * item.quantity, 2)

    return total

In contrast, the shipping cost calculation might look like this:

def calculate_shipping_cost(package_items, destination_zone):
    # Use higher of actual weight vs dimensional weight
    total_weight = sum(item.weight for item in package_items)
    total_volume = sum(item.length * item.width * item.height for item in package_items)
    dimensional_weight = total_volume / 5000  # FedEx formula
    billable_weight = max(total_weight, dimensional_weight)

    return billable_weight * shipping_rates[destination_zone]

Had we applied “don’t repeat yourself” too early, we would have lost the context and specific requirements of each calculation.

The DRY principle is often misinterpreted as a blanket rule to avoid any duplication at all costs, which can lead to unnecessary complexity.

When you try to avoid repetition by introducing abstractions, you have to deal with all the edge cases in a place far away from the actual business logic. You end up adding redundant checks and conditions to the abstraction, just to make sure it works in all cases. Later on, you might forget the reasoning behind those checks, but you keep them around “just in case” because you don’t want to break any callers. The result is dead code that adds complexity to the codebase; all because you wanted to avoid repeating yourself.
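As a sketch of how this plays out, imagine a single shared helper that has to serve both a cart and a shipping calculation. Everything here is hypothetical: the function name `calculate_total`, its flags, and the dict-based items are made up to show the shape of the problem, not taken from a real codebase.

```python
def calculate_total(items, value_key, factor_key,
                    round_each=False, allow_empty=True, minimum=None):
    """Hypothetical shared helper that grew one flag per caller."""
    # Added for the cart: an empty cart is an error there, but not for shipping.
    if not items and not allow_empty:
        raise ValueError("items cannot be empty")

    total = 0.0
    for item in items:
        value = item[value_key] * item[factor_key]
        # Added for the cart: round each line to two decimals.
        if round_each:
            value = round(value, 2)
        total += value

    # Added for shipping: never bill less than a minimum amount.
    if minimum is not None:
        total = max(total, minimum)
    return total


cart = [{"price": 19.99, "quantity": 3}]
parcel = [{"weight": 0.2, "rate": 4.0}]

cart_total = calculate_total(cart, "price", "quantity",
                             round_each=True, allow_empty=False)
shipping = calculate_total(parcel, "weight", "rate", minimum=5.0)
```

Every flag exists for exactly one caller, yet every caller has to read past all of them. Removing any one of them later means proving that nobody else relies on it.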

The common wisdom is that if you repeat yourself, you have to fix the same bug in multiple places. But that assumes the bug exists in all copies. In reality, each copy might have evolved in a different way, and the bug might only exist in one of them.

When you create a shared abstraction, a bug in that abstraction affects every caller and can break multiple features at once. With duplicated code, a bug is isolated to just one specific use case.

Knowing that you didn’t break anything in a shared abstraction is much harder than checking a single copy of the code. Of course, if you have a lot of copies, there is a risk of forgetting to fix all of them.

The key to making this work is to clean up afterwards. This can happen before you commit the code or during a code review.

At this stage, you can look at the code you copied and see if it makes sense to keep it as is or if you can see the right abstraction. I try to refactor code once I have a better understanding of the problem, but not earlier.

A trick to undo a bad abstraction is to inline the code back into the places where it was used. For a while, you end up “repeating yourself” again in the codebase, but that’s okay. Rethink the problem based on the new information you have. Often you’ll find an abstraction that fits the problem better.
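Here is a minimal sketch of that inlining step, using hypothetical names (`format_label`, `invoice_label`, `shipping_label`). The “before” is one shared helper with a mode flag; the “after” puts each branch back where it is used.

```python
# Before: one shared helper with a flag that selects the caller's behaviour.
def format_label(name, for_invoice):
    if for_invoice:
        return f"Invoice for {name.title()}"
    return f"SHIP TO: {name.upper()}"


# After: the helper is inlined into each caller. The duplication is back,
# but each function now contains only the logic it actually needs.
def invoice_label(name):
    return f"Invoice for {name.title()}"


def shipping_label(name):
    return f"SHIP TO: {name.upper()}"
```

Once both callers stand on their own again, each can change without the other noticing, and a better shared abstraction (if there is one) tends to be easier to spot.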

When the abstraction is wrong, the fastest way forward is back.

—Sandi Metz, The Wrong Abstraction

It’s fine to look for the right abstraction, but don’t obsess over it. Don’t be afraid to copy code when it helps you keep momentum and find the right abstraction.

It bears repeating: “Repeat yourself.”

1. For some examples, see Ferris working on Rustendo64 or tokiospliff working on a C++ game engine.

2. This is also how I write prose: I first write a draft and block my inner critic, and then I play the role of the editor/critic and “refactor” the text. This way, I get the best of both worlds: a quick feedback loop which doesn’t block my creativity, and a final product which is more polished and well-structured. Of course, I did not invent this approach. I recommend reading “Shitty first drafts” from Anne Lamott’s book Bird by Bird: Instructions on Writing and Life if you want to learn more about this technique.

3. This is similar to the OODA loop concept, which stands for “Observe, Orient, Decide, Act.” It was developed by military strategist John Boyd. Fighter pilots use it to wait until the last responsible moment to decide on a course of action, which allows them to make the best decision based on the current situation and available information.

2025-06-06 08:00:00

I watched the Champions League final the other day when it struck me: I’m basically watching millionaires all the time.

The players are millionaires, the coaches are millionaires, the club owners are millionaires. It’s surreal.

This week I watched John Wick Ballerina and, again, there’s Keanu Reeves, who is a millionaire, and Ana de Armas, who is as well.

Yesterday I heard about Trump and Musk fighting. They are not millionaires, they are billionaires!

As I’m writing this, I’m watching the Rock am Ring live stream, a music festival in Germany. Weezer is playing. These guys are all millionaires.

I don’t know what to make of it. It’s a strange realization, but one that feels worth sharing.

I could go down the road of how this fixation on elites distracts us from the people nearby, but that’s not quite it. What interests me more is how normalized this has become.

Maybe it’s just the power law in action: a few rise to the top, and we amplify them by watching. But most people in every field aren’t millionaires. We just don’t see them.

You’re on a tiny blog by a tiny man and if you made it this far, I appreciate you. It looks as if you care about the little stories as well.

If you’re anything like me, you’re not only enjoying the little stories, you’re actively seeking them out – but there are so few of them nowadays. Yes, there are still places where people share their stories, but you need to know where to look.

If anything, we all should share more. Write about the little things, the everyday moments, the people you meet, the things you care about. Don’t live anybody else’s life!

Rivers Cuomo, Weezer’s lead singer, once wrote:

My motivation is much different now than it was then: then I was terribly discontent and dreaming of being a classical composer, a writer, or basically anything that I wasn’t; now I just want to enjoy my life and do the responsible thing—graduate.

That’s from his Letter For Readmission To Harvard (2005).

Nobody forced him to go back to Harvard after so many years. He was a freaking millionaire rock star by then.

And yet, he did.

He stopped pretending and started living.

We don’t have to keep watching other people’s lives.

Live your own.