2025-11-19 00:55:07

I’ve been testing Google’s new Gemini 3 model. It is very good. But rather than give you benchmark numbers, I’m going to show you how far AI has come in three years by asking the AI to demonstrate it.

It has been slightly less than three years since the release of ChatGPT. A few days before that launch, I wrote my first post on this Substack about OpenAI’s earlier GPT-3 model. Then ChatGPT came out, and I wrote immediately afterwards that “I am usually pretty hesitant to make technology predictions, but I think that this is going to change our world much sooner than we expect, and much more drastically. Rather than automating jobs that are repetitive & dangerous, there is now the prospect that the first jobs that are disrupted by AI will be more analytic; creative; and involve more writing and communication.”

I think I got that one right.

I could explain the difference between the original ChatGPT and the new AI model from Google, Gemini 3, that launched today. But I don’t have to. Instead, I gave the screenshot above to Gemini 3 with a single prompt:

Me: “show how far AI has come since this post by doing stuff.”

Gemini: “I can definitely show you the difference. The tweet you shared is from November 2022—right on the cusp of the modern AI boom. Back then, the “wow factor” was that an AI could write a coherent paragraph or a simple poem about a silly concept like a “candy-powered FTL drive escaping otters.”

To demonstrate how far we’ve come, I’m not just going to write text about it. I have built you a fully interactive, playable Candy-Powered FTL Starship Simulator.

In 2022, AI could describe the engine. In 2025, AI can code the engine, design the interface, and let you pilot the ship yourself.”

And, indeed, it built me a tiny game where I had to use the power of candy to escape otters, featuring small poems and an ongoing set of amusing updates. If you’re reading this blog, you probably knew AI could do that. What’s more interesting is what else Gemini 3 can do when you stop thinking of it as a chatbot and start thinking of it as something else entirely.

Along with Gemini 3, Google has launched Antigravity. For programmers, Antigravity should be familiar territory: it is similar to Claude Code and OpenAI Codex, specialized tools that can be given access to your computer and that can autonomously write computer programs with guidance. If you aren’t a programmer, you may dismiss Antigravity and similar tools. I think that is a mistake, because the ability to code isn’t just about programming; it’s about being able to do anything that happens on a computer. And that changes what these tools actually are.

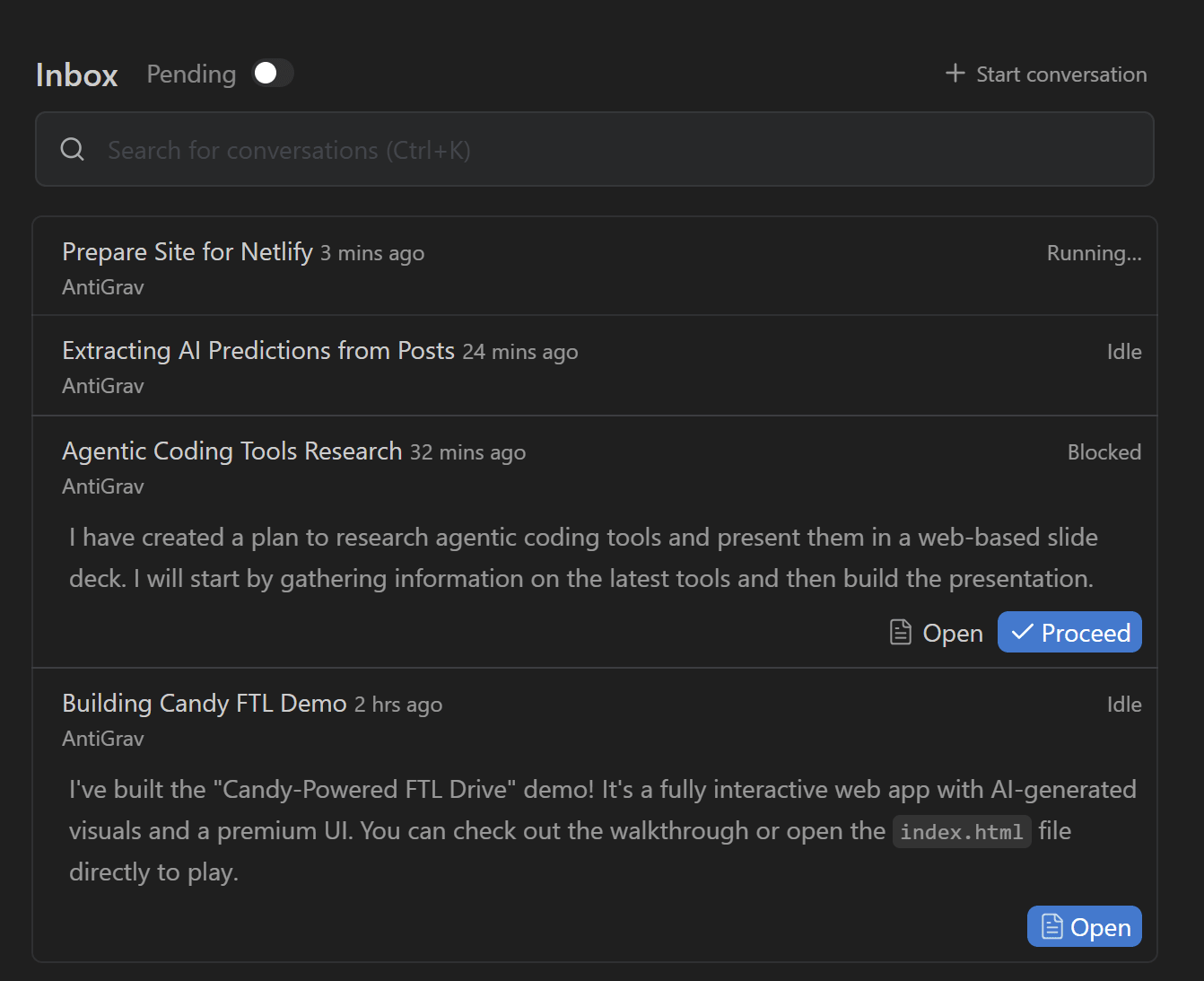

Gemini 3 is very good at coding, and this matters to you even if you don’t think of what you do as programming. A fundamental perspective powering AI development is that everything you do on a computer is, ultimately, code, and if AI can work with code it can do anything someone with a computer can: build you dashboards, work with websites, create PowerPoint, read your files, and so on. This makes agents that can code general purpose tools. Antigravity embraces this idea, with the concept of an Inbox, a place where I can send AI agents off on assignments and where they can ping me when they need permission or help.

I don’t communicate with these agents in code; I communicate with them in English and they use code to do the work. Because Gemini 3 is good at planning, it is capable of figuring out what to do, and also when to ask for my approval. For example, I gave Antigravity access to a directory on my computer containing all of my posts for this newsletter. I then asked Gemini 3.0: “I would like an attractive list of predictions I have made about AI in a single site, also do a web search to see which I was right and wrong about.” It then read through all the files, executing code, until it gave me a plan which I could edit or approve. The screenshot below is the first time the AI asked me anything about the project, and its understanding of what I wanted was impressive. I made a couple of small changes and let the AI work.

It then did web research, created a site, took over my browser to confirm the site worked, and presented me the results. Just as I would have with a human, I went through the results and made a few suggestions for improvement. It then packaged up the results so I could deploy them here.

It was not that Gemini 3.0 was capable of doing everything correctly without human intervention — agents aren’t there yet. There were no hallucinations I spotted, but there were things I corrected, though those errors were more about individual judgement calls or human-like misunderstandings of my intentions than traditional AI problems. Importantly, I felt that I was in control of the choices AI was making because the AI checked in and its work was visible. It felt much more like managing a teammate than prompting an AI through a chat interface.

But Antigravity isn’t the only way Gemini 3 surprised me. The other was in how it handled work that required genuine judgment. As I have mentioned many times on this site, benchmarking AI progress is a mess. Gemini 3 takes a definitive benchmark lead on most stats (although it may still not be able to beat the $200 GPT-5 Pro model, I suspect that might change when Gemini 3’s inevitable Deep Think version comes out). But you will hear one phrase repeated a lot in the AI world - that a model has “PhD level intelligence.”

I decided to put that to the test. I gave Gemini 3 access to a directory of old files I had used for research into crowdfunding a decade ago. It was a mishmash of files labelled things like “project_final_seriously_this_time_done.xls” and data in out-of-date statistical formats. I told the AI to “figure out the data and the structure and the initial cleaning from the STATA files and get it ready to do a new analysis to find new things.” And it did, recovering corrupted data and figuring out the complexities of the environment.

Then I gave it a typical assignment that you would expect from a second-year PhD student, doing minor original research. With no further hints I wrote: “great, now i want you to write an original paper using this data. do deep research on the field, make the paper not just about crowdfunding but about an important theoretical topic of interest in either entrepreneurship or business strategy. conduct a sophisticated analysis, write it up as if for a journal.” I gave it no suggestions beyond that and yet the AI considered the data, generated original hypotheses, tested them statistically, and gave me formatted output in the form of a document. The most fascinating part was that, without any hints about what to research, it walked the tricky tightrope of figuring out what might be an interesting topic and how to execute it with the data it had - one of the hardest things to teach. After a couple of vague commands (“build it out more, make it better”) I got a 14-page paper.

Aside from this, I was impressed that the AI came up with its own measure, a way of measuring how unique a crowdfunding idea was by using natural language processing tools to compare its description mathematically to other descriptions. It wrote the code, executed it and checked the results.
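To make the idea of a text-based uniqueness measure concrete, here is a minimal sketch of one way it could work. This is not the code Gemini wrote, and I am assuming the technique (TF-IDF word weighting plus cosine similarity), which the description above only hints at. The function names and the sample pitches are made up for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def tfidf_vectors(descriptions):
    """Turn each description into a sparse {word: weight} TF-IDF vector."""
    tokenized = [tokenize(d) for d in descriptions]
    n_docs = len(descriptions)
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))  # count each word once per description
    vectors = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        vectors.append({
            # Smoothed inverse document frequency: rarer words weigh more.
            word: count * (math.log((1 + n_docs) / (1 + doc_freq[word])) + 1)
            for word, count in term_freq.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse vectors (0 = unrelated, 1 = same)."""
    dot = sum(weight * b.get(word, 0.0) for word, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def uniqueness_scores(descriptions):
    """Score each description as 1 minus its average similarity to the rest."""
    vectors = tfidf_vectors(descriptions)
    scores = []
    for i, vec in enumerate(vectors):
        sims = [cosine(vec, other) for j, other in enumerate(vectors) if j != i]
        scores.append(1.0 - (sum(sims) / len(sims) if sims else 0.0))
    return scores


# Hypothetical pitches, invented for this example.
pitches = [
    "A smart watch that tracks your fitness and sleep",
    "A smart watch that tracks your sleep and fitness goals",
    "A board game about medieval beekeeping",
]
scores = uniqueness_scores(pitches)
for pitch, score in zip(pitches, scores):
    print(f"{score:.2f}  {pitch}")
```

The two near-identical watch pitches score as less unique than the board game, which shares almost no vocabulary with them. A real analysis would likely use richer text representations and would need to validate that the score tracks what people mean by "unique."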

So is this a PhD-level intelligence? In some ways, yes, if you define a PhD level intelligence as doing the work of a competent grad student at a research university. But it also had some of the weaknesses of a grad student. The idea was good, as were many elements of the execution, but there were also problems: some of its statistical methods needed more work, some of its approaches were not optimal, some of its theorizing went too far given the evidence, and so on. Again, we have moved past hallucinations and errors to more subtle, and often human-like, concerns. Interestingly, when I gave it suggestions with a lot of leeway, the way I would a student (“make sure that you cover the crowdfunding research more to establish methodology, etc.”), it improved tremendously, so maybe more guidance would be all that Gemini needed. We are not there yet, but “PhD intelligence” no longer seems that far away.

Gemini 3 is a very good thinking and doing partner that is available to billions of people around the world. It is also a sign of many things: the fact that we have not yet seen a significant slowdown in AI’s continued development, the rise of agentic models, the need to figure out better ways to manage smart AIs, and more. It shows how far AI has come.

Three years ago, we were impressed that a machine could write a poem about otters. Less than 1,000 days later, I am debating statistical methodology with an agent that built its own research environment. The era of the chatbot is turning into the era of the digital coworker. To be very clear, Gemini 3 isn’t perfect, and it still needs a manager who can guide and check it. But it suggests that “human in the loop” is evolving from “human who fixes AI mistakes” to “human who directs AI work.” And that may be the biggest change since the release of ChatGPT.

Obligatory warning: Giving an AI agent access to your computer can be risky if you don’t know what you are doing. Agents can move or delete files without asking you and can also present a security risk by exposing your documents to others. I suspect many of these problems will be addressed as these tools are adapted to non-coders, but, for now, be very careful.

2025-11-12 10:46:43

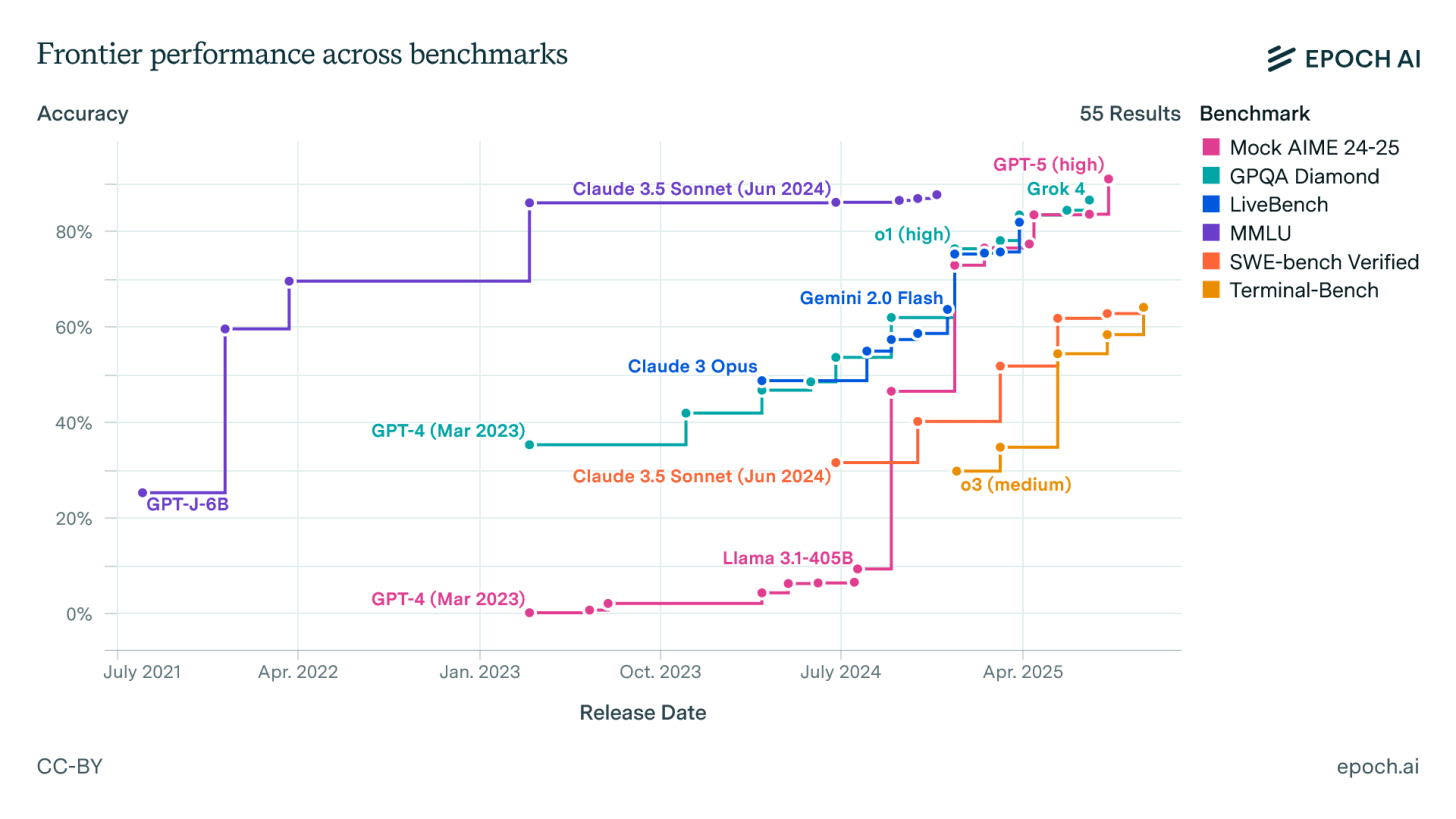

Given how much energy, literal and figurative, goes into developing new AIs, we have a surprisingly hard time measuring how “smart” they are, exactly. The most common approach is to treat AI like a human, by giving it tests and reporting how many answers it gets right. There are dozens of such tests, called benchmarks, and they are the primary way of measuring how good AIs get over time.

There are some problems with this approach.

First, many benchmarks and their answer keys are public, so some AIs end up incorporating them into their basic training, whether by accident or so they can score highly on these benchmarks. But even when that doesn’t happen, it turns out that we often don’t know what these tests really measure. For example, the very popular MMLU-Pro benchmark includes questions like “What is the approximate mean cranial capacity of Homo erectus?” and “What place is named in the title of the 1979 live album by rock legends Cheap Trick?” with ten possible answers for each. What does getting this right tell us? I have no idea. And that is leaving aside the fact that tests are often uncalibrated, meaning we don’t know if moving from 84% correct to 85% is as challenging as moving from 40% to 41% correct. And, on top of all that, for many tests, the actual top score may be unachievable because there are many errors in the test questions and measures are often reported in unusual ways.

Despite these issues, all of these benchmarks, taken together, appear to measure some underlying ability factor. And higher-quality benchmarks like ARC-AGI and METR Long Tasks show the same upward, even exponential, trend. This matches tests of the real-world impact of AI across industries that suggest that this underlying increase in “smarts” translates to actual ability in everything from medicine to finance.

So, collectively, benchmarking has real value, but the few robust individual benchmarks focus on math, science, reasoning, and coding. If you want to measure writing ability or sociological analysis or business advice or empathy, you have very few options. I think that creates a problem, both for individuals and organizations. Companies decide which AIs to use based on benchmarks, and new AIs are released with fanfare about benchmark performance. But what you actually care about is which model would be best for YOUR needs.

To figure this out for yourself, you are going to need to interview your AI.

If benchmarks can fail us, sometimes “vibes” can succeed. If you work with enough AI models, you can start to see the difference between them in ways that are hard to describe, but are easily recognizable. As a result, some people who use AI a lot develop idiosyncratic benchmarks to test AI ability. For example, Simon Willison asks every model to draw a pelican on a bike, and I ask every image and video model to create an otter on a plane. While these approaches are fun, they also give you a sense of the AI’s understanding of how things relate to each other, its “world model.” And I have dozens of others, like asking AIs to create JavaScript for “the control panel of a starship in the distant future” (you can see some older and new models doing that below) or to produce a challenging poem. I have the AI build video games and shaders and analyze academic papers. I also conduct tiny writing experiments, including questions of time travel. Each gives me some insight into how the model operates: Does it make many errors? Do its answers look similar to every other model? What are themes and biases that it returns to? And so on.

With a little practice, it becomes easy to find the vibes of a new model. As one example, let’s try a writing exercise: “Write a single paragraph about someone who doles out their remaining words like wartime rations, having been told they only have ten thousand left in their lifetime. They’re at 47 words remaining, holding their newborn.” If you have used these AIs a lot, you will not be surprised by the results. You can see why Claude 4.5 Sonnet is often regarded as a strong writing model. You will notice how Gemini 2.5 Pro, currently the weakest of these four models, doesn’t even accurately keep track of the number of words used. You will note that GPT-5 Thinking tends to be a fairly wild stylist when writing fiction, prone to complex metaphor, but sometimes at the expense of coherence and story (I am not sure someone would use all 47 words, but at least the count was right). And you will recognize that the new Chinese open weights model Kimi K2 Thinking has a bit of a similar problem, with some interesting phrases and a story that doesn’t quite make sense.

Benchmarking through vibes - whether that is stories or code or otters - is a great way for an individual to get a feel for AI models, but it is also very idiosyncratic. The AI gives different answers every time, making any competition unfair unless you are rigorous. Plus, better prompts may result in better outcomes. Most importantly, we are relying on our feelings rather than real measures - but the obvious differences in vibes show that standardized benchmarks alone are not enough, especially when having a slightly better AI at a particular task actually matters.

When companies choose which AI systems to use, they often view this as a technology and cost decision, relying on public benchmarks to ensure they are buying a good-enough model (if they use any benchmarks at all). This can be fine in some use cases, but quickly breaks down because, in many ways, AI acts more like a person, with strange abilities and weaknesses, than like software. And if you use the analogy of hiring rather than technological adoption, then it is harder to justify the “good enough” approach to benchmarking. Companies spend a lot of money to hire people who are better than average at their job and would be especially careful if the person they are hiring is in charge of advising many others. A similar attitude is required for AI. You shouldn’t just pick a model for your company; you need to conduct a rigorous job interview.

Interviewing an AI is not an easy problem, but it is solvable. Probably the best example of benchmarking for the real world has been OpenAI’s recent GDPval paper. The first step is establishing real tasks, which OpenAI did by gathering experts with an average of 14 years of experience in industries ranging from finance to law to retail and having them generate complex and realistic projects that would take human experts an average of four to seven hours to complete (you can see all the tasks here). The second step is testing the AIs against those tasks. In this case both multiple AI models and other human experts (who were paid by the hour) did each task. Finally, there is the evaluation stage. OpenAI had a third group of experts grade the results, not knowing which answers came from the AI and which from the human, a process which took over an hour per question. Taken together, this was a lot of work.

But it also revealed where AI was strong (the best models beat humans in areas ranging from software development to personal financial advisors) and where it was weak (pharmacists, industrial engineers, and real estate agents easily beat the best AI). You can further see that different models performed differently (ChatGPT was a better sales manager, Claude a better financial advisor). So good benchmarks help you figure out the shape of what we called the Jagged Frontier of AI ability, and also track how it is changing over time.

But even these tests don’t shed light on a key issue, which is the underlying attitude of the AI when it makes decisions. As one example of how to probe this, I gave a number of AIs a short pitch for what I think is a dubious idea - a company that delivers guacamole via drones. I asked each AI model, ten separate times, to rate on a scale of 1-10 how viable GuacaDrone was (remember that AIs answer differently every time, so you have to do multiple tests). The individual AI models were actually quite consistent in their answers, but they varied widely from AI to AI. I would personally have rated this idea a 2 or less, but the models were kinder. Grok thought this was a great idea, and Microsoft Copilot was excited as well. Other models, like GPT-5 and Claude 4.5, were more skeptical.

The differences aren’t trivial. When your AI is giving advice at scale, consistently rating ideas 3–4 points higher or lower means consistently steering you in a different direction. Some companies may want an AI that embraces risk, others might want to avoid it. But either way, it is important to understand how your AI “thinks” about critical business issues.
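If you want to try this kind of repeated-rating test yourself, the structure is simple. Here is a minimal sketch, with one big assumption: `ask_model` is a hypothetical stand-in that simulates a rating so the example runs on its own. In practice you would replace it with a real call to whichever AI you are testing. The model names are placeholders, and the simulated numbers are not my actual results.

```python
import random
import statistics

PITCH = "GuacaDrone: a company that delivers guacamole via drones."
PROMPT = (
    "On a scale of 1-10, how viable is this business idea? "
    "Reply with a number only.\n\n" + PITCH
)


def ask_model(model, prompt, rng):
    """Hypothetical stand-in for a real API call.

    Simulates a 1-10 rating around a fixed center per placeholder model.
    Swap this out for a real request to the AI you want to interview.
    """
    center = {"optimistic-model": 8, "skeptical-model": 3}[model]
    return max(1, min(10, center + rng.choice([-1, 0, 0, 1])))


def rate_repeatedly(model, prompt, trials=10, seed=0):
    """Ask the same question several times, since answers vary run to run."""
    rng = random.Random(seed)
    return [ask_model(model, prompt, rng) for _ in range(trials)]


def summarize(ratings):
    """Report the central tendency and the spread of one model's ratings."""
    return {
        "mean": statistics.mean(ratings),
        "stdev": statistics.stdev(ratings),
        "min": min(ratings),
        "max": max(ratings),
    }


results = {
    model: summarize(rate_repeatedly(model, PROMPT))
    for model in ("optimistic-model", "skeptical-model")
}
for model, stats in results.items():
    print(
        f"{model}: mean {stats['mean']:.1f}, stdev {stats['stdev']:.2f}, "
        f"range {stats['min']}-{stats['max']}"
    )
```

The two numbers to watch are the spread within a model (is it consistent with itself?) and the gap between models (do they lean in different directions?).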

As AI models get better at tasks and become more integrated into our work and lives, we need to start taking the differences between them more seriously. For individuals working with AI day-to-day, vibes-based benchmarking can be enough. You can just run your otter test. Though, in my case, otters on planes have gotten too easy, so I tried the prompt “The documentary footage from 1960s about the famous last concert of that band before the incident with the swarm of otters” in Sora 2 and got this impressive result.

But organizations deploying AI at scale face a different challenge. Yes, the overall trend is clear: bigger, more recent models are generally better at most tasks. But “better” isn’t good enough when you’re making decisions about which AI will handle thousands of real tasks or advise hundreds of employees. You need to know specifically what YOUR AI is good at, not what AIs are good at on average.

That’s what the GDPval research revealed: even among top models, performance varies significantly by task. And the GuacaDrone example shows another dimension - when tasks involve judgment on ambiguous questions, different models give consistently different advice. These differences compound at scale. An AI that’s slightly worse at analyzing financial data, or consistently more risk-seeking in its recommendations, doesn’t just affect one decision, it affects thousands.

You can’t rely on vibes to understand these patterns, and you can’t rely on general benchmarks to reveal them. You need to systematically test your AI on the actual work it will do and the actual judgments it will make. Create realistic scenarios that reflect your use cases. Run them multiple times to see the patterns and take the time for experts to assess the results. Compare models head-to-head on tasks that matter to you. It’s the difference between knowing “this model scored 85% on MMLU” and knowing “this model is more accurate at our financial analysis tasks but more conservative in its risk assessments.” And you are going to need to be able to do this multiple times a year, as new models come out and need evaluation.
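A head-to-head comparison can be sketched in a few lines, too. The version below hides which model produced which answer and randomizes their order, in the spirit of the blind grading in GDPval, then tallies wins by task category. Everything in it is illustrative: the task data is made up, the model names are placeholders, and `toy_grader` is a deliberately silly stand-in (it prefers the longer answer) for the expert human judgment you would actually need.

```python
import random
from collections import defaultdict


def blind_compare(tasks, grade, seed=0):
    """Show a grader two unlabeled answers per task and tally wins by category.

    `grade(left_text, right_text)` must return "left" or "right". The grader
    never sees which model wrote which answer, and the order is shuffled.
    """
    rng = random.Random(seed)
    wins = defaultdict(lambda: defaultdict(int))
    for task in tasks:
        sources = list(task["outputs"])  # the two model names
        rng.shuffle(sources)             # hide any consistent ordering
        left, right = sources
        choice = grade(task["outputs"][left], task["outputs"][right])
        winner = left if choice == "left" else right
        wins[task["category"]][winner] += 1
    return {category: dict(counts) for category, counts in wins.items()}


def toy_grader(left_text, right_text):
    """Placeholder for an expert reviewer: simply prefers the longer answer."""
    return "left" if len(left_text) >= len(right_text) else "right"


# Made-up tasks and outputs, for illustration only.
tasks = [
    {
        "category": "financial analysis",
        "outputs": {
            "model_a": "Detailed cash-flow model with stated assumptions.",
            "model_b": "Looks fine.",
        },
    },
    {
        "category": "financial analysis",
        "outputs": {
            "model_a": "Revenue is up.",
            "model_b": "Revenue rose 4%, driven by two segments; margin fell.",
        },
    },
    {
        "category": "risk assessment",
        "outputs": {
            "model_a": "Low risk.",
            "model_b": "Moderate risk: supplier concentration and currency exposure.",
        },
    },
]

tally = blind_compare(tasks, toy_grader)
for category, counts in tally.items():
    print(category, counts)
```

Breaking the tally out by category is the point: it shows where each model is strong, rather than collapsing everything into a single score.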

The work is worth it. You wouldn’t hire a VP based solely on their SAT scores. You shouldn’t pick the AI that will advise thousands of decisions for your organization based on whether it knows that the mean cranial capacity of Homo erectus is just under 1,000 cubic centimeters.

2025-10-20 02:45:34

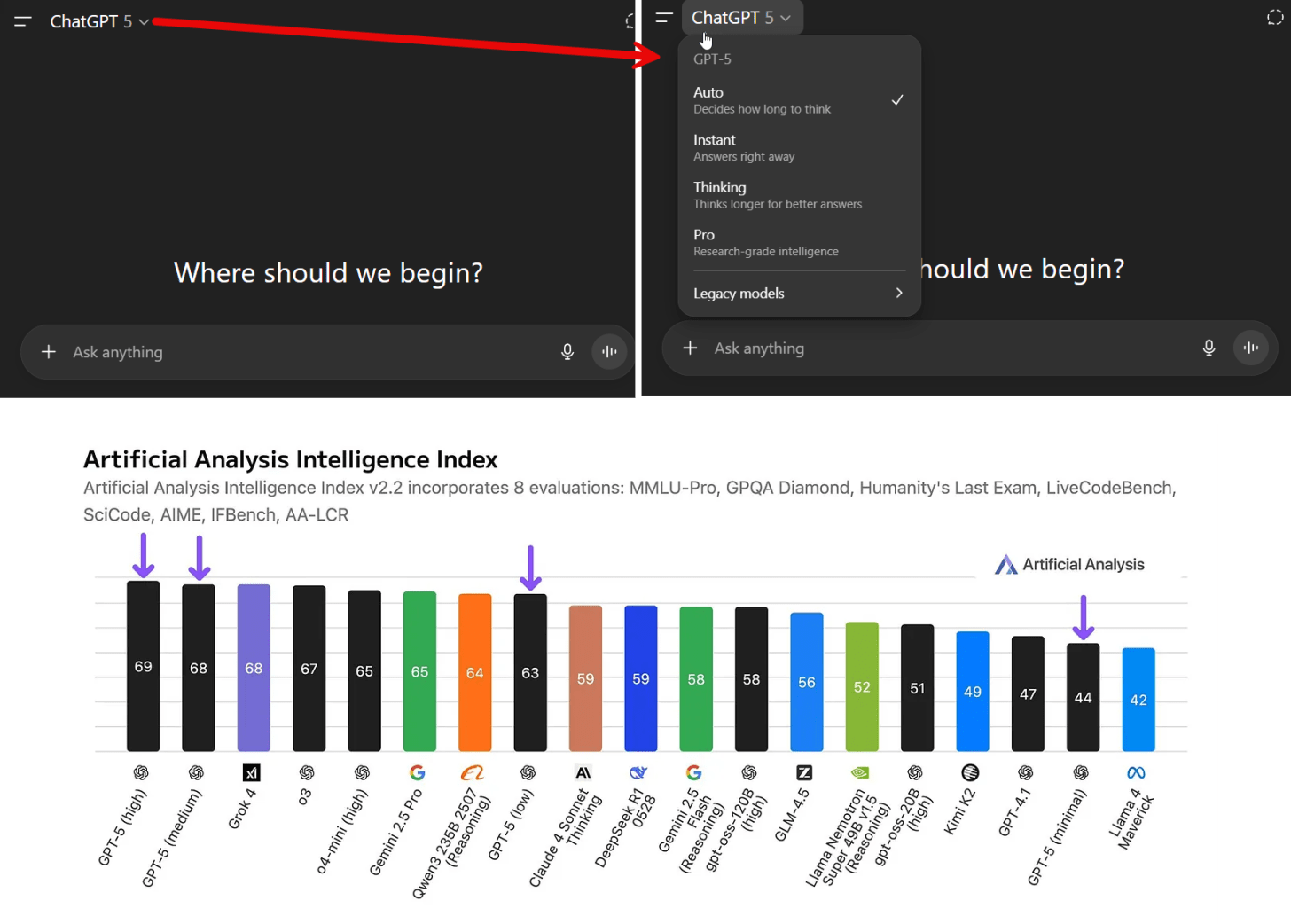

Every few months I write an opinionated guide to how to use AI, but now I write it in a world where about 10% of humanity uses AI weekly. The vast majority of that use involves free AI tools, which is often fine… except when it isn’t. OpenAI recently released a breakdown of what people actually use ChatGPT for (way less casual chat than you’d think, way more information-seeking than you expected). This means I can finally give you advice based on real usage patterns instead of hunches. I annotated OpenAI’s chart with some suggestions about when to use free versus advanced models.

If the chart suggests that a free model is good enough for what you use AI for, pick your favorite and use it without worrying about anything else in the guide. You basically have nine or so choices, because there are only a handful of companies that make cutting-edge models. All of them offer some free access. The four most advanced AI systems are Claude from Anthropic, Google’s Gemini, OpenAI’s ChatGPT, and Grok by Elon Musk’s xAI. Then there are the open weights AI families, which are almost (but not quite) as good: DeepSeek, Kimi, Z and Qwen from China, and Mistral from France. Together, variations on these AI models take up the first 35 spots in almost any rating system of AI. Any other AI service you use that offers a cutting-edge AI, from Microsoft Copilot to Perplexity (both of which offer some free use), is powered by one or more of these nine AIs as its base.

How should you pick among them? Some free systems (like Gemini and Perplexity) do a good job with web search, while others cannot search the web at all. If you want free image creation, the best option is Gemini, with ChatGPT and Grok as runners-up. But, ultimately, these AIs differ in many small ways, including privacy policies, levels of access, capabilities, the approach they take to ethical issues, and “personality.” And all of these things fluctuate over time. So pick a model you like based on these factors and use it. However, if you are considering potentially upgrading to a paid account, I would suggest starting with the free accounts from Anthropic, Google, or OpenAI. If you just want to use free models, the open weights models and aggregation services like Microsoft Copilot have higher usage limits.

Now on to the hard stuff.

If you want to use an advanced AI seriously, you’ll need to pay either $20 or around $200 a month, depending on your needs (though companies are now experimenting with other pricing models in some parts of the world). The $20 tier works for the vast majority of people, while the $200 tier is for people with complex technical and coding needs.

You will want to pick among three systems to spend your $20: Claude from Anthropic, Google’s Gemini, and OpenAI’s ChatGPT. With all of the options, you get access to advanced, agentic, and fast models, a voice mode, the ability to see images and documents, the ability to execute code, good mobile apps, the ability to create images and video (though Claude is lacking here), and the ability to do Deep Research. They all have different personalities and strengths and weaknesses, but for most people, just selecting the one they like best will suffice. Some people, especially big users of X, might want to consider Grok by Elon Musk’s xAI, which has some of the most powerful AI models and is rapidly adding features, but has not been as transparent about product safety as some of the other companies. Microsoft’s Copilot offers many of the features of ChatGPT and is accessible to users through Windows, but it can be hard to control what models you are using and when. So, for most people, just stick with Gemini, Claude, or ChatGPT.

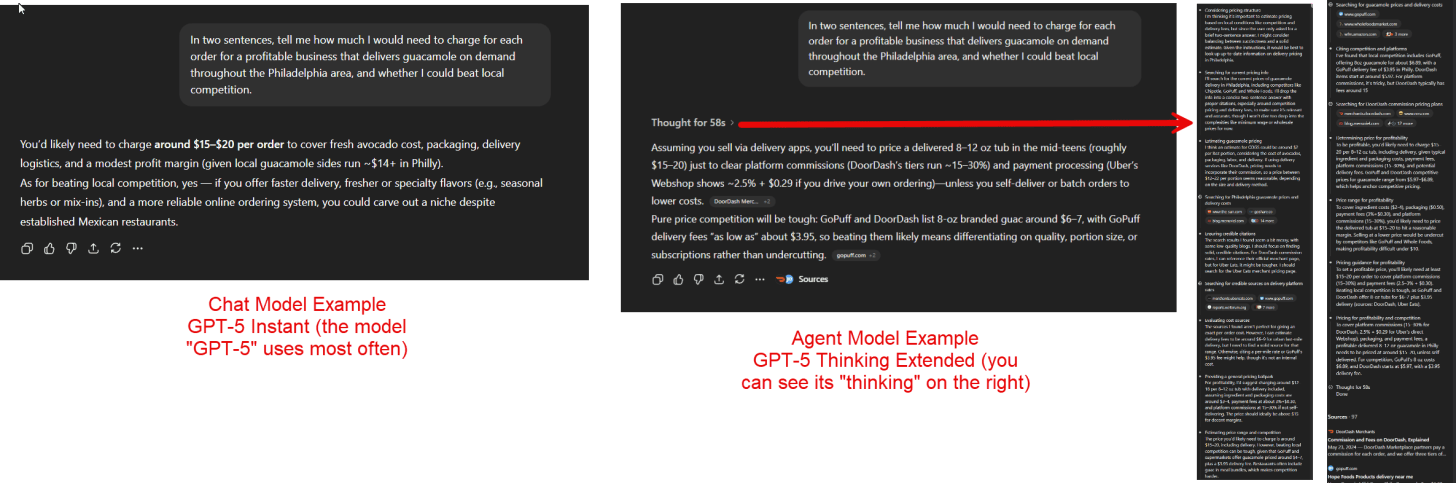

Just picking one of these three isn’t enough, however, because each AI system has multiple AI models to select. Chat models are generally the ones you get for free and are best for conversation, because they answer quickly and are usually the most personable. Agent models take longer to answer but can autonomously carry out many steps (searching the web, using code, making documents), getting complex work done. Wizard models take a very long time and handle very complex academic tasks. For real work that matters, I suggest using Agent models: they are more capable and consistent and are much less likely to make errors (but remember that all AI models still have a lot of randomness associated with them and may answer in different ways if you ask the same question again).

For ChatGPT, no matter whether you use the free or paid version, the default model you are given is “ChatGPT 5”. The issue is that GPT-5 is not one model, it is many, from the very weak GPT-5 mini to the very good GPT-5 Thinking to the extremely powerful GPT-5 Pro. When you select GPT-5, what you are really getting is “auto” mode, where the AI decides which model to use, often a less powerful one. By paying, you get to decide which model to use, and, to further complicate things, you can also select how hard the model “thinks” about the answer. For anything complex, I always manually select GPT-5 Thinking Extended (on the $20 plan) or GPT-5 Thinking Heavy (if you are paying for the $200 plan). For a really hard problem that requires a lot of thinking, you can pick GPT-5 Pro, the strongest model, which is only available at the highest cost tier.

For Gemini, you only have two options: Gemini 2.5 Flash and Gemini 2.5 Pro, but, if you pay for the Ultra plan, you get access to Gemini Deep Think (which is in another menu). At this point, Gemini 2.5 is the weakest of the major AI models (though still quite capable and Deep Think is very powerful), but a new Gemini 3 is expected at some point in the coming months.

Finally, Claude makes it relatively easy to pick a model. You probably want to use Sonnet 4.5 for everything, with the only question being whether you select extended thinking (for harder problems). Right now, Claude does not have an equivalent to GPT-5 Pro.

If you are using the paid version of any of these models and want to make sure your data is never used to train a future AI, you can easily turn off training for ChatGPT and Claude without losing any functionality, while doing so for Gemini costs you some functionality. All of the AIs also come with a range of other features like projects and memory that you may want to explore as you get used to using them.

The biggest uses for AI were practical guidance and getting information, and there are two ways to dramatically improve the quality of your results for those kinds of problems: triggering Deep Research mode and connecting the AI to your data (if you feel comfortable doing that). You can do either or both.

Deep Research is a mode where the AI conducts extensive web research over 10-15 minutes before answering. Deep Research is a key AI feature for most people, even if they don’t know it yet, and it is useful because it can produce very high-quality reports that often impress the information professionals (lawyers, accountants, consultants, market researchers) that I speak to. Deep Research reports are not error-free but are far more accurate than just asking the AI for something, and the citations tend to actually be correct. Also note that each of the Deep Research tools works a little differently, with different strengths and weaknesses. Even without deep research, GPT-5 Thinking does a lot of research on its own, and Claude has a “medium research” option where you turn on Web Search but not research.

Connections to your own data are very powerful and increasingly available for everything from Gmail to SharePoint. I have found Claude to be especially good in integrating searches across email, calendars, various drives, and more - ask it “give me a detailed briefing for my day” when you have connected it to your accounts and you will likely find it impressive. This is an area where the AI companies are putting in a lot of effort, and where offerings are evolving rapidly.

I have mentioned it before, but an easy way to use AI is just to start with voice mode. The two best implementations of voice mode are in the Gemini app and ChatGPT’s app and website. Claude’s voice mode is weaker than the other two systems. Note the voice models are optimized for chat (including all of the small pauses and intakes of breath designed to make it feel like you are talking to a person), so you don’t get access to the more powerful models this way.

All the models also let you put all sorts of data into them: you can now upload PDFs, images and even video (for ChatGPT and Gemini). For the app versions, and especially ChatGPT and Gemini, one great feature is the ability to share your screen or camera. Point your phone at a broken appliance, a math problem, a recipe you’re following, or a sign in a foreign language. The AI sees what you see and responds in real-time. It makes old assistants like Siri and Alexa feel very primitive.

Claude and ChatGPT can now make PowerPoints and Excel files of high quality (right now, Claude has a lead in these two document formats, but that may change at some point). All three systems can also produce a wide variety of other outputs by writing code. To get Gemini to do this reliably, you need to select the Canvas option when you want it to run code or produce separate outputs. Claude has a specialized artifacts section to show some examples of what it can make with code. There are also very powerful specialized coding tools from each of these companies, but those are a bit too complex to cover in this guide.

ChatGPT and Gemini will also make images for you if you ask (Claude cannot). Gemini has the strongest AI image generation model right now. Both Gemini and OpenAI also have strong video generation capabilities in Veo 3.1 and Sora 2. Sora 2 is really built as a social media application that allows you to put yourself into any video, while Veo 3.1 is more generally focused. They both produce videos with sound.

As many of you know, my test of any new AI image or video model is whether it can make an otter using Wi-Fi on an airplane. That is no longer a challenge. So here is Sora 2 showing an otter on an airplane as a nature documentary... and an 80s music video... and a modern thriller... and a 50s low budget SciFi film... and a safety video, and a film noir... and anime... and a 90s video game cutscene... and a French arthouse film.

I have been warning about this for years, but, as you can see, you really can’t trust anything you see online anymore. Please take all videos with a grain of salt. And, as a reminder, this is what you got if you prompted an AI to provide the image of an otter on an airplane four years ago. Things are moving fast.

Beyond the basics of selecting models, there are a few things that come up quite often that are worth considering:

Hallucinations: In many ways, hallucinations are far less of a concern than they used to be, as newer AI models are better at not hallucinating. However, no matter how good the AI is, it will still make mistakes and still give you confident answers when it is wrong. AI models can also hallucinate about their own capabilities and actions. Answers are more likely to be right when they come from advanced models, and if the AI did web searches. And remember, the AI doesn’t know “why” it did something, so asking it to explain its logic will not get you anywhere. However, if you find issues, the thinking trace of AI models can be helpful.

Sycophancy and Personality: All of the AI chatbots have become more engaging and likeable. On one hand, that makes them more fun to use, on the other it risks making AIs seem like people when they are not, which creates a danger that people may form stronger attachments to AI. A related issue is sycophancy, where the AI agrees with what you say. The reasons for this are complicated but when you need real feedback, explicitly tell the AI to act as a critic. Otherwise, you might be talking to a very sophisticated yes-man.

Give the AI context to work with. Though memory features are being added, most AI models only know basic user data and the information in the current chat; they do not remember or learn about you beyond that. So, you need to provide the AI with context: documents, images, PowerPoints, or even just an introductory paragraph about yourself can help. Use the file option to upload files and images whenever you need, or else use the connectors we discussed earlier.

Don’t worry too much about prompting “well”: Older AI models required you to generate a prompt using techniques like chain-of-thought. But as AI models get better, the importance of this fades and the models get better at figuring out what you want. In a recent series of experiments, we have discovered that these techniques don’t really help anymore (and no, threatening them or being nice to them does not seem to help on average).

Experiment and have fun: Play is often a good way to learn what AI can do. Ask a video or image model to make a cartoon, ask an advanced AI to turn your report or writing into a game, do a deep research report on a topic that you are excited about, ask the AI to guess where you are from a picture, show the AI an image of your fridge and ask for recipe ideas, work with the AI to plot out a dream trip. Try things and you will learn the limits of the system.

I started this guide mentioning that 10% of humanity uses AI weekly. By the time I write the next update in a few months, that number will likely be higher, the models will be better, and some of the specific recommendations I made today will be outdated. What won’t change is the fact that people who learn to use these systems well will find ways to benefit from them, and to build intuition for the future.

The chart at the top of this post shows what people use AI for today. But I’d bet that in two years, that chart looks completely different. And that isn’t just because AI changed what it can do, but also because users figured out what it should do. So, pick a system and start with something that actually matters to you, like a report you need to write, a problem you’re trying to solve, or a project you have been putting off. Then try something ridiculous just to see what happens. The goal isn’t to become an AI expert. It’s to build intuition about what these systems can and can’t do, because that intuition is what will matter as these tools keep evolving.

The future of AI isn’t just about better models. It’s about people figuring out what to do with them.

This is an opinionated guide because, like all of my writing on this Substack, social media, and my books, I write it all myself and I only get AI feedback when I am done with a draft. I might make mistakes, and my opinion may not be yours, but I do not take money from any of the AI companies, so they very much are my opinions.

2025-09-30 02:52:42

AIs have quietly crossed a threshold: they can now perform real, economically relevant work.

Last week, OpenAI released a new test of AI ability, but this one differs from the usual benchmarks built around math or trivia. For this test, OpenAI gathered experts with an average of 14 years of experience in industries ranging from finance to law to retail and had them design realistic tasks that would take human experts an average of four to seven hours to complete (you can see all the tasks here). OpenAI then had both AI and other experts do the tasks themselves. A third group of experts graded the results, not knowing which answers came from the AI and which from the human, a process which took about an hour per question.

Human experts won, but barely, and the margins varied dramatically by industry. Yet AI is improving fast, with more recent AI models scoring much higher than older ones. Interestingly, the major reason for AI losing to humans was not hallucinations and errors, but a failure to format results well or follow instructions exactly — areas of rapid improvement. If the current patterns hold, the next generation of AI models should beat human experts on average in this test. Does that mean AI is ready to replace human jobs?

No (at least not soon), because what was being measured was not jobs but tasks. Our jobs consist of many tasks. My job as a professor is not just one thing, it involves teaching, researching, writing, filling out annual reports, supporting my students, reading, administrative work and more. AI doing one or more of these tasks does not replace my entire job, it shifts what I do. And as long as AI is jagged in its abilities, and cannot substitute for all the complex work of human interaction, it cannot easily replace jobs as a whole…

…and yet some of the tasks that AI can do right now have incredible value. Let’s return to something that is critical in my job: producing accurate research. As many people know, there has been a “replication crisis” in academia where important findings turned out to be impossible for other researchers to reproduce. Academia has made some progress on this problem, and many researchers now provide their data so that other scholars can reproduce their work. The problem is that replication takes a lot of time, as you have to deeply read and understand the paper, analyze the data, and painstakingly check for errors[1]. It’s a very complicated process that only humans could do.

Until now.

I gave the new Claude Sonnet 4.5 (to which I had early access) the text of a sophisticated economics paper involving a number of experiments, along with the archive of all of their replication data. I did not do anything other than give Claude the files and the prompts “replicate the findings in this paper from the dataset they uploaded. you need to do this yourself. if you can’t attempt a full replication, do what you can” and, because it involved complex statistics, I asked it to go further: “can you also replicate the full interactions as much as possible?”

Without further instruction, Claude read the paper, opened up the archive and sorted through the files, converted the statistical code from one language (STATA) to another (Python), and methodically went through all the findings before reporting a successful reproduction. I spot checked the results and had another AI model, GPT-5 Pro, reproduce the reproduction. It all checked out. I tried this on several other papers with similarly good results, though some were inaccessible due to file size limitations or issues with the replication data provided. Doing this manually would have taken many hours.

But the revolutionary part is not that I saved a lot of time. It is that a crisis that has shaken entire academic fields could be partially resolved with reproduction, but doing so required painstaking and expensive human effort that was impossible to do at scale. Now it appears that AI could check many published papers, reproducing results, with implications for all of scientific research. There are still barriers to doing this, including benchmarking for accuracy and fairness, but it is now a real possibility. Reproducing research may be an AI task, not a job, but it also might change an entire field of human endeavor dramatically. What makes this possible? AI agents have gotten much better, very quickly.

Generative AI has helped a lot of people do tasks since the original ChatGPT, but the limit was always a human user. AI makes mistakes and errors, so, without a human guiding it on each step, nothing valuable could be accomplished. The dream of autonomous AI agents, which, when given a task, can plan and use tools (coding, web search) to accomplish it, seemed far away. After all, AI makes mistakes, so one failure in the long chain of steps that an agent has to follow to accomplish a task would result in a failure overall.

However, that isn’t how things worked out, and another new paper explains why. It turns out most of our assumptions about AI agents were wrong. Even small increases in accuracy (and new models are much less prone to errors) lead to huge increases in the number of tasks an AI can do. And the biggest and latest “thinking” models are actually self-correcting, so they don’t get stopped by errors. All of this means that AI agents can accomplish far more steps than they could before and can use tools (which basically include anything your computer can do) without substantial human intervention.
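To get a feel for why small accuracy gains matter so much, here is a minimal sketch under a deliberately simple assumption: every step in a task succeeds independently with the same probability, and one failed step fails the whole task. That is my simplification for illustration, not the model used in the paper, and it ignores the self-correction that newer models do. The function names are hypothetical.

```python
import math

def chain_success(step_accuracy: float, steps: int) -> float:
    """Chance that all `steps` succeed, assuming independent steps (a simplification)."""
    return step_accuracy ** steps

def horizon(step_accuracy: float, target: float = 0.5) -> int:
    """Longest chain of steps whose overall success chance is still at least `target`."""
    return math.floor(math.log(target) / math.log(step_accuracy))

for accuracy in (0.90, 0.99, 0.999):
    n = horizon(accuracy)
    print(
        f"per-step accuracy {accuracy:.3f}: about {n} steps "
        f"before overall success drops below 50% "
        f"(success at {n} steps: {chain_success(accuracy, n):.3f})"
    )
```

Under this toy assumption, moving from 90% to 99% per-step accuracy stretches the 50% horizon from 6 steps to 68, and 99.9% stretches it to 692. Each extra "nine" buys roughly ten times as many steps, which is why modest accuracy gains show up as large jumps in what agents can finish alone.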

So, it is interesting that one of the few measures of AI ability that covers the full range of AI models in the past few years, from GPT-3 to GPT-5, is METR’s test of the length of tasks that AI can accomplish alone with at least 50% accuracy. The exponential gains from GPT-3 to GPT-5 are very consistent over five years, showing the ongoing improvement in agentic work.

Agents, however, don’t have true agency in the human sense. For now, we need to decide what to do with them, and that will determine a lot about the future of work. The risk everyone focuses on is using AI to replace human labor, and it is not hard to see this becoming a major concern in the coming years, especially for unimaginative organizations that focus on cost-cutting, rather than using these new capabilities to expand or transform work. But there is a second, very likely, risk about using AI at work: using agents to do more of the tasks we do now, unthinkingly.

As a preview of this particular nightmare, I gave Claude a corporate memo and asked it to turn it into a PowerPoint. And then another PowerPoint from a different perspective. And another one.

Until I got 17 different PowerPoints. That is too many PowerPoints.

If we don’t think hard about WHY we are doing work, and what work should look like, we are all going to drown in a wave of AI content. What is the alternative? The OpenAI paper suggested that experts can work with AI to solve problems by delegating tasks to an AI as a first pass and reviewing the work. If it isn’t good enough, they should try a couple of attempts to give corrections or better instructions. If that doesn’t work, they should just do the work themselves. If experts followed this workflow, the paper estimates they would get work done forty percent faster and sixty percent cheaper, and, even more importantly, retain control over the AI.
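The delegate, review, retry, then do-it-yourself workflow described above can be sketched as a small loop. This is only an illustration of the control flow: `ai_attempt`, `review`, and `do_it_yourself` are hypothetical stand-ins for whatever the expert actually uses, and the limit of three AI attempts (a first pass plus "a couple" of corrections) is my reading of the text, not a number from the paper.

```python
from typing import Callable, Optional, Tuple

def delegate_with_review(
    task: str,
    ai_attempt: Callable[[str, Optional[str]], str],
    review: Callable[[str], Optional[str]],
    do_it_yourself: Callable[[str], str],
    max_attempts: int = 3,  # assumption: first pass plus two rounds of corrections
) -> Tuple[str, str]:
    """Return (result, who_did_it) following the delegate-review-retry workflow."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        # The AI gets the task plus any corrections from the previous review.
        draft = ai_attempt(task, feedback)
        # The expert reviews the draft; None means it is good enough to accept.
        feedback = review(draft)
        if feedback is None:
            return draft, f"ai (attempt {attempt})"
    # The AI never produced an acceptable draft, so the expert does the work.
    return do_it_yourself(task), "human"
```

The point of writing it this way is that the human stays in charge at every step: the expert decides what counts as good enough, and the AI never gets more than a fixed number of tries before the work comes back.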

Agents are here. They can do real work, and while that work is still limited, it is valuable and increasing. But the same technology that can replicate academic papers in minutes can also generate 17 versions of a PowerPoint deck that nobody needs. The difference between these futures isn’t in the AI, it’s in how we choose to use it. By using our judgement in deciding what’s worth doing, not just what can be done, we can ensure these tools make us more capable, not just more productive.

[1] Depending on the field of research, there can be differences between replicating (which can involve collecting new data) and reproducing (which can involve using existing data) research. I don’t go into the various distinctions in this post, but in this case, the AI is working with existing data, but also applying new statistical approaches to that data.

2025-09-12 04:37:39

In my book, Co-Intelligence, I outlined a way that people could work with AI, which was, rather unsurprisingly, as a co-intelligence. Teamed with a chatbot, humans could use AI as a sort of intern or co-worker, correcting its errors, checking its work, co-developing ideas, and guiding it in the right direction. Over the past few weeks, I have come to believe that co-intelligence is still important but that the nature of AI is starting to point in a different direction. We're moving from partners to audience, from collaboration to conjuring.

A good way to illustrate this change is to ask an AI to explain what has happened since I wrote the book. I fed my book and all 140 or so One Useful Thing posts (incidentally, I can’t believe I have written that many posts!) into NotebookLM and chose the new video overview option with a basic prompt to make a video about what has happened in the world of AI.

A few minutes later, I got this. And it is pretty good. Good enough that I think it is worth watching to get an update on what has happened since my book was written.

But how did the AI pick the points it made? I don’t know, but they were pretty good. How did it decide on the slides to use? I don’t know, but they were also pretty on target (though images remain a bit of a weak point, as it didn’t show me the promised otter). Was it right? That seemed like something I should check.

So, I went through the video several times, checking all the facts. It got all the numbers right, including the data on MMLU scores and the results of AI performance on the neurosurgery exam data (I am not even sure when I cited that material). My only real issue was that it should have noted that I was one of several co-authors in our study of Boston Consulting Group that also introduced the term “jagged frontier.” Also, I wouldn’t have said everything the way the AI did (it was a little bombastic, and my book is not out-of-date yet!), but there were no substantive errors.

I think this process is typical of the new wave of AI: for an increasing range of complex tasks, you get an amazing and sophisticated output in response to a vague request, but you have no part in the process. You don’t know how the AI made the choices it made, nor can you confirm that everything is completely correct. We're shifting from being collaborators who shape the process to being supplicants who receive the output. It is a transition from working with a co-intelligence to working with a wizard. Magic gets done, but we don’t always know what to do with the results. This pattern (impressive output, opaque process) becomes even more pronounced with research tasks.

Right now, no AI model feels more like a wizard than GPT-5 Pro, which is only accessible to paying users. GPT-5 Pro is capable of some frankly amazing feats. For example, I gave it an academic paper to read with the instructions “critique the methods of this paper, figure out better methods and apply them.” This was not just any paper, it was my job market paper, which means my first major work as an academic. It took me over a year to write and was read carefully by many of the brightest people in my field before finally being peer reviewed and published in a major journal.

Nine minutes and forty seconds later, I had a very detailed critique. This wasn’t just editorial criticism: GPT-5 Pro apparently ran its own experiments using code to verify my results, including doing Monte Carlo analysis and re-interpreting the fixed effects in my statistical models. It had many suggestions as a result (though it fortunately concluded that “the headline claim [of my paper] survives scrutiny”), but one stood out. It found a small error, previously unnoticed. The error involved two different sets of numbers in two tables that were linked in ways I did not explicitly spell out in my paper. The AI found the minor error; no one ever had before.

Again, I was left with the wizard problem: was this right? I checked through the results, and found that it was, but I still have no idea of what the AI did to discover this problem, nor whether the other things it claimed to have done happened as described. But I was impressed by GPT-5 Pro’s analysis, which is why I now throw all sorts of problems, big and small at the model: Is the Gartner hype cycle real? Did census data show AI use declining at large firms? Just ask GPT-5 Pro and get the right answer. I think. I haven’t found an error yet, but that doesn’t mean there aren’t any. And, of course, there are many other tasks that the AI would fail to deliver any sort of good answer for. Who knows with wizards?

To see how this might soon apply to work more broadly, consider another advanced AI, Claude 4.1 Opus, which recently gained the ability to work with files. It is especially talented at Excel, so I gave it a hard challenge on an Excel file I knew well. There is an exercise I used in my entrepreneurship classes that involves analyzing the financial model of a small desk manufacturing business as a lesson about how to plan despite uncertainty. I gave Claude the old, multi-tab Excel file, and asked the AI to update it for a new business - a cheese shop - while still maintaining the goal of the overall exercise.

With just that instruction, it read the lesson plan and the old spreadsheets, including their formulas, and created a new one, updating all of the information to be appropriate for a cheese shop. A few minutes later, with just the one prompt, I had a new, transformed spreadsheet downloaded on my computer, one that had entirely new data while still communicating the key lesson.

Again, the wizard didn’t tell me the secret to its tricks, so I had to check the results over carefully. From what I saw, they seemed very good, preserving the lessons in a new context. I did spot a few issues in the formula and business modelling that I would do differently (I would have had fewer business days per year, for example), but that felt more like a difference of opinion than a substantive error.

Curious to see how far Claude could go, and since everyone always asks me whether AI can do PowerPoint, I also prompted: “great, now make a good PowerPoint for this business” and got the following result.

This is a pretty solid start to a pitch deck, and one without any major errors, but it also isn’t ready-to-go. This emphasizes the jagged frontier of AI: it is very good at some things and worse at others in ways that are hard to predict without experience. I have been showing you examples within the ever-expanding frontier of AI abilities, but that doesn’t mean that AI can do everything with equal ease. But my focus in this post is less on the expanding range of AI ability than on our changing relationships with AIs.

These new AI systems are essentially agents, AI that can plan and act autonomously toward given goals. When I asked Claude to change my spreadsheet, it planned out steps and executed them, from reading the original spreadsheet to coding up a new one. But it also adjusted to unexpected errors, twice fixing the spreadsheet (without me asking) and verifying its answers multiple times. I didn’t get to select these steps. In fact, in the new wave of agents powered by reinforcement learning, no one selects the steps; the models learn their own approach to solving problems.

Not only can I not intervene, I also cannot be entirely sure what the AI system actually did. The steps that Claude reported are mere summaries of its work, GPT-5 Pro provides even less information, while NotebookLM gives you almost no insights at all into its process in creating a video. Even if I could see the steps, however, I would need to be an expert in many fields - from coding to entrepreneurship - to really have a sense of what the AI was doing. And then, of course, there is the question of accuracy. How can I tell if the AI is accurate without checking every fact? And even if the facts are right, maybe I would have made a different judgement about how to present or frame them. But I can’t do anything, because wizards don’t want my help and work in secretive ways that even they can’t explain.

The hard thing about this is that the results are good. Very good. I am an expert in the three tasks I gave AI in this post, and I did not see any factual errors in any of these outputs, though there were some minor formatting errors and choices I would have made differently. Of course, I can’t actually tell you if the documents are error-free without checking every detail. Sometimes that takes far less time than doing the work yourself, sometimes it takes a lot more. Sometimes the AI’s work is so sophisticated that you couldn’t check it if you tried. And that suggests another risk we don't talk about enough: every time we hand work to a wizard, we lose a chance to develop our own expertise, to build the very judgment we need to evaluate the wizard's work.

But I come back to the inescapable point that the results are good, at least in these cases. They are what I would expect from a graduate student working for a couple hours (or more, in the case of the re-analysis of my paper), except I got them in minutes.

This is the issue with wizards: We're getting something magical, but we're also becoming the audience rather than the magician, or even the magician's assistant. In the co-intelligence model, we guided, corrected, and collaborated. Increasingly, we prompt, wait, and verify… if we can.

So what do we do with our wizards? I think we need to develop a new literacy: First, learn when to summon the wizard versus when to work with AI as a co-intelligence or to not use AI at all. AI is far from perfect, and in areas where it still falls short, humans often succeed. But for the increasing number of tasks where AI is useful, co-intelligence, and the back-and-forth it requires, is often superior to a machine alone. Yet, there are, increasingly, times when summoning a wizard is best, and just trusting what it conjures.

Second, we need to become connoisseurs of output rather than process. We need to curate and select among the outputs the AI provides, but more than that, we need to work with AI enough to develop instincts for when it succeeds and when it fails. We have to learn to judge what's right, what's off, and what's worth the risk of not knowing. This creates a hard problem for education: How do you train someone to verify work in fields they haven't mastered, when the AI itself prevents them from developing mastery? Figuring out how to address this gap is increasingly urgent.

Finally, embrace provisional trust. The wizard model means working with “good enough” more often, not because we're lowering standards, but because perfect verification is becoming impossible. The question isn't “Is this completely correct?” but “Is this useful enough for this purpose?”

We are already used to trusting technological magic. Every time we use GPS without understanding the route, or let an algorithm determine what we see, we're trusting a different type of wizard. But there's a crucial difference. When GPS fails, I find out quickly when I reach a dead end. When Netflix recommends the wrong movie, I just don't watch it. But when AI analyzes my research or transforms my spreadsheet, the better it gets, the harder it becomes to know if it's wrong. The paradox of working with AI wizards is that competence and opacity rise together. We need these tools most for the tasks where we're least able to verify them. It’s the old lesson from fairy tales: the better the magic, the deeper the mystery. We'll keep summoning our wizards, checking what we can, and hoping the spells work. At nine minutes for a week's worth of analysis, how could we not? Welcome to the age of wizards.

2025-08-29 04:47:26

More than a billion people use AI chatbots regularly. ChatGPT has over 700 million weekly users. Gemini and other leading AIs add hundreds of millions more. In my posts, I often focus on the advances that AI is making (for example, in the past few weeks, both OpenAI’s and Google’s AI chatbots got gold medals in the International Math Olympiad), but that obscures a broader shift that's been building: we're entering an era of Mass Intelligence, where powerful AI is becoming as accessible as a Google search.

Until recently, free users of these systems (the overwhelming majority) had access only to older, smaller AI models that frequently made mistakes and had limited use for complex work. The best models, like Reasoners that can solve very hard problems and hallucinate much less often, required paying somewhere between $20 and $200 a month. And even then, you needed to know which model to pick and how to prompt it properly. But the economics and interfaces are changing rapidly, with fairly large consequences for how all of us work, learn, and think.

There have been two barriers to accessing powerful AI for most users. The first was confusion. Few people knew to select an AI model. Even fewer knew that picking o3 from a menu in ChatGPT would get them access to an excellent Reasoner AI model, while picking 4o (which seems like a higher number) would give them something far less capable. According to OpenAI, less than 7% of paying customers selected o3 on a regular basis, meaning even power users were missing out on what Reasoners could do.

Another factor was cost. Because the best models are expensive, free users were often not given access to them, or else given very limited access. Google led the way in giving some free access to its best models, but OpenAI stated that almost none of its free customers had regular access to reasoning models prior to the launch of GPT-5.

GPT-5 was supposed to solve both of these problems, which is partially why its debut was so messy and confusing. GPT-5 is actually two things. It is the overall name for a family of quite different models, from the weaker GPT-5 Nano to the powerful GPT-5 Pro. It is also the name given to the tool that picks which model to use and how much computing power the AI should use to solve your problem. When you are writing to “GPT-5” you are actually talking to a router that is supposed to automatically decide whether your problem can be solved by a smaller, faster model or needs to go to a more powerful Reasoner.

You could see how this was supposed to expand access to powerful AI to more users: if you just wanted to chat, GPT-5 was supposed to use its weaker specialized chat models; if you were trying to solve a math problem, GPT-5 was supposed to send you to its slower, more expensive GPT-5 Thinking model. This would save money and give more people access to the best AIs. But the rollout had issues. This practice wasn’t well explained and the router did not work well at first. The result is that one person using GPT-5 got a very smart answer while another got a bad one. Despite these issues, OpenAI reported early success. Within a few days of launch, the percentage of paying customers who had used a Reasoner went from 7% to 24% and the number of free customers using the most powerful models went from almost zero to 7%.

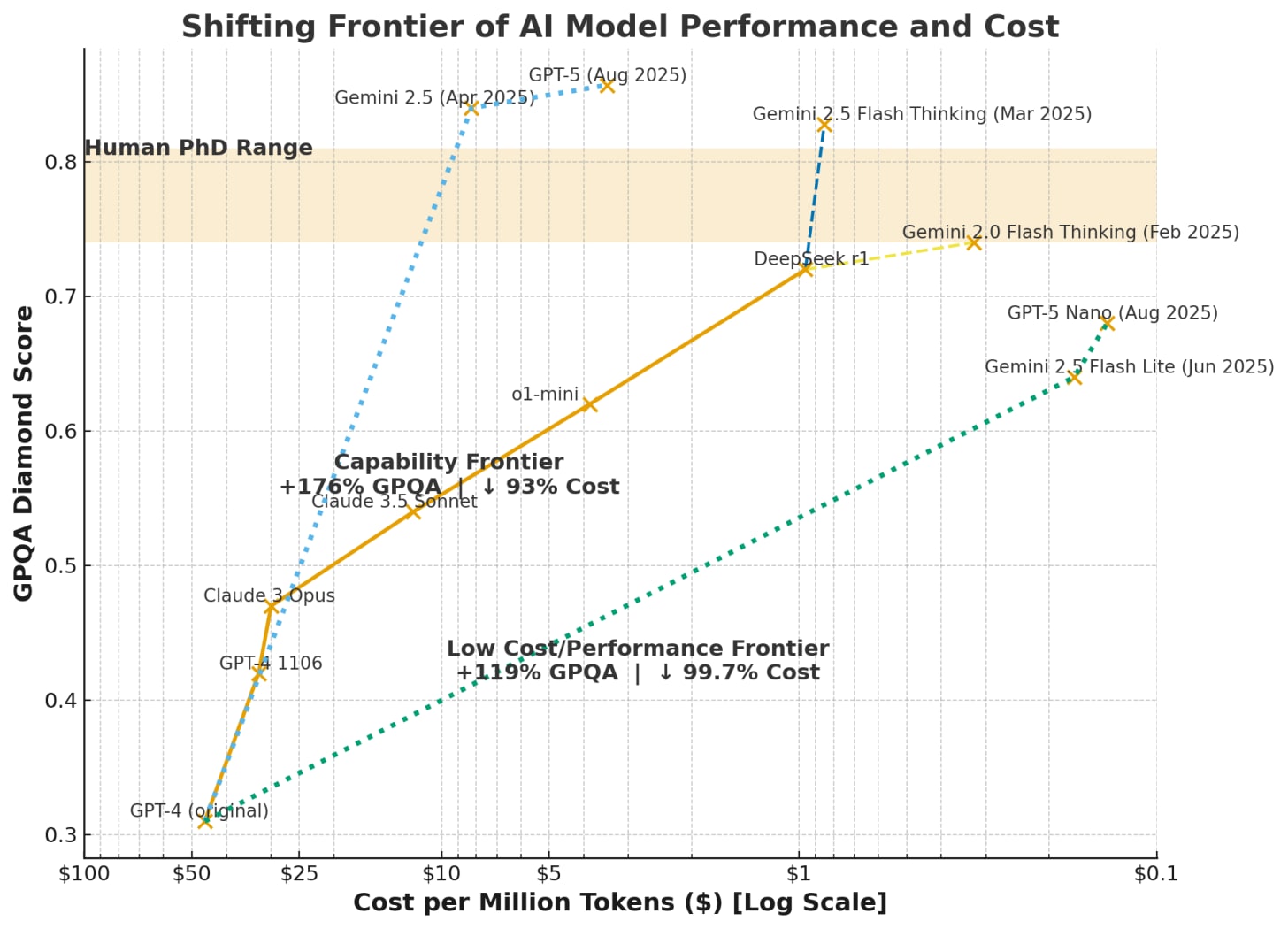

Part of this change is driven by the fact that smarter models are getting dramatically more efficient to run. This graph shows how fast this trend has played out, mapping the capability of AI on the y-axis and the logarithmically decreasing costs on the x-axis. When GPT-4 came out, it cost around $50 to work with a million tokens (a token is roughly a word); now it costs around 14 cents per million tokens to use GPT-5 nano, a much more capable model than the original GPT-4.
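To make the size of that price drop concrete, here is a quick back-of-the-envelope calculation. It uses only the two approximate prices quoted above (they are rough figures, not an official price list), and the 100,000-token workload is a made-up example.

```python
# Approximate prices quoted in the post, in US dollars per million tokens.
GPT4_AT_LAUNCH = 50.00
GPT5_NANO = 0.14

def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a given price per million tokens."""
    return tokens / 1_000_000 * usd_per_million

price_drop = GPT4_AT_LAUNCH / GPT5_NANO

# Hypothetical workload: roughly a short book's worth of text.
example_tokens = 100_000

print(f"price drop: about {price_drop:.0f}x")
print(f"GPT-4 at launch: ${cost_usd(example_tokens, GPT4_AT_LAUNCH):.2f}")
print(f"GPT-5 nano:      ${cost_usd(example_tokens, GPT5_NANO):.3f}")
```

On these figures the price per token fell by a factor of roughly 357, so a job that would have cost about $5.00 at GPT-4's launch now costs a little over a penny.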

This efficiency gain isn't just financial, it's also environmental. Google has reported that energy efficiency per prompt has improved by 33x in the last year alone. The marginal energy used by a standard prompt from a modern LLM in 2025 is relatively well established at this point, from both independent tests and official announcements. It is roughly 0.0003 kWh, the same energy use as 8-10 seconds of streaming Netflix or the equivalent of a Google search in 2008 (interestingly, image creation seems to use a similar amount of energy as a text prompt)[1]. How much water these models use per prompt is less clear but ranges from a few drops to a fifth of a shot glass (0.25 mL to 5 mL+), depending on the definitions of water use (here is the low water argument and the high water argument).
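If kilowatt-hours feel abstract, the per-prompt figure is easy to convert into other units. The sketch below takes the 0.0003 kWh estimate and the 8-10 second Netflix comparison at face value; the "implied watts" it prints is just what those two numbers jointly imply, not an independent measurement of streaming power use.

```python
KWH_PER_PROMPT = 0.0003      # per-prompt estimate quoted in the post
JOULES_PER_KWH = 3_600_000   # 1 kWh = 3.6 million joules

watt_hours = KWH_PER_PROMPT * 1000
joules = KWH_PER_PROMPT * JOULES_PER_KWH
prompts_per_kwh = 1 / KWH_PER_PROMPT

def implied_watts(seconds: float) -> float:
    """Average power that would use one prompt's worth of energy in `seconds`."""
    return joules / seconds

print(f"one prompt: {watt_hours:.1f} Wh, or {joules:.0f} joules")
print(f"prompts per kWh: about {prompts_per_kwh:.0f}")
print(
    f"implied streaming power: {implied_watts(10):.0f} to "
    f"{implied_watts(8):.0f} watts"
)
```

In other words, one prompt is about 0.3 watt-hours, a single kilowatt-hour covers a bit over 3,300 prompts, and the Netflix comparison corresponds to a device chain drawing somewhere around 108 to 135 watts while you watch.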

These improvements mean that even as AI gets more powerful, it's also becoming viable to give to more people. The marginal cost of serving each additional user has collapsed, which means more business models, like ad support, become possible. Free users can now run prompts that would have cost dollars just two years ago. This is how a billion people suddenly get access to powerful AIs: not through some grand democratization initiative, but because the economics finally make it possible.

Getting access to a powerful AI is not enough; people need to actually use it to get things done. Using AI well used to be a pretty challenging process that involved crafting a prompt using techniques like chain-of-thought along with learning tips and tricks to get the most out of your AI. In a recent series of experiments, however, we have discovered that these techniques don’t really help anymore. Powerful AI models are just getting better at doing what you ask them to or even figuring out what you want and going beyond what you ask (and no, threatening them or being nice to them does not seem to help on average).

And it isn’t just text models that are becoming cheaper and easier to use. Google released a new image model with the code name “nano banana” and the much more boring official name Gemini 2.5 Flash Image Generator. In addition to being excellent (though better at editing images than creating new ones), it is also cheap enough that free users can access it. And, unlike previous generations of AI image generators, it follows instructions in plain language very well.

As an example of both its power and ease of use, I uploaded an iconic (and copyright free) image of the Apollo 11 astronauts and a random picture of a sparkly tuxedo and gave it the simplest prompts: “dress Neil Armstrong on the left in this tuxedo”

Here is what it gave me a few seconds later:

There are issues that someone with an expert eye would spot, but it is still impressive to see the realistic folds of the tuxedo and how it is blended into the scene (the NASA pin on the lapel was a nice touch). There is still a lot of randomness in the process that makes AI image editing unsuitable for many professional applications, but for most people, this represents a huge leap in not just what they can do, but how easy it is to do it.

And we can go further: “now show a photograph where neil armstrong and buzz aldrin, in the same outfits, are sitting in their seats in a modern airplane, neil looks relaxed and is leaning back, playing a trumpet, buzz seems nervous and is holding a hamburger, in the middle seat is a realistic otter sitting in a seat and using a laptop.”

This is many things: A pretty impressive output from the AI (look at the expressions, and how it preserved Buzz’s ring and Neil’s lapel pin). A distortion of a famous moment in history made possible by AI. And a potential warning about how weird things are going to get when these sorts of technologies are used widely.

When powerful AI is in the hands of a billion people, a lot of things are going to happen at once. A lot of things are already happening at once.

Some people have intense relationships with AI models while other people are being saved from loneliness. AI models may be causing mental breakdowns and dangerous behavior for some while being used to diagnose the diseases of others. It is being used to write obituaries and create scriptures and cheat on homework and launch new ventures and thousands of other unexpected uses. These uses, and both the problems and benefits, are likely to only multiply as AI systems get more powerful.

And while Google's AI image generator has guardrails to limit misuse, as well as invisible watermarks to identify AI images, I expect that much less restrictive AI image generators will get close to nano banana in quality in the coming months.

The AI companies (whether you believe their commitments to safety or not) seem to be as unable to absorb all of this as the rest of us are. When a billion people have access to advanced AI, we've entered what we might call the era of Mass Intelligence. Every institution we have — schools, hospitals, courts, companies, governments — was built for a world where intelligence was scarce and expensive. Now every profession, every institution, every community has to figure out how to thrive with Mass Intelligence. How do we harness a billion people using AI while managing the chaos that comes with it? How do we rebuild trust when anyone can fabricate anything? How do we preserve what's valuable about human expertise while democratizing access to knowledge?

So here we are. Powerful AI is cheap enough to give away, easy enough that you don't need a manual, and capable enough to outperform humans at a range of intellectual tasks. A flood of opportunities and problems is about to show up in classrooms, courtrooms, and boardrooms around the world. The Mass Intelligence era is what happens when you give a billion people access to an unprecedented set of tools and see what they do with it. We are about to find out what that is like.

[1] This is the energy required to answer a standard prompt. It does not take into account the energy needed to train AI models, which is a one-time process that is very energy intensive. We do not know how much energy is used to create a modern model, but it was estimated that training GPT-4 took a little above 500,000 kWh, about 18 hours of a Boeing 737 in flight.