2026-02-13 07:13:45

It’s a dramatic shift from Musk’s long-standing goal of a permanent human presence on the red planet.

Elon Musk has long said settling Mars is SpaceX’s raison d’être, but the world’s richest man has now pivoted his attention to the moon. The company is targeting an uncrewed lunar landing in March 2027 and has ambitions to create a “self-growing city” on our nearest celestial neighbor.

The news marks a dramatic shift from Musk’s long-standing goal of a permanent human presence on the red planet, which he has framed as a way to hedge humanity’s future against a cataclysmic event on Earth. Only a year ago the billionaire labeled missions to the moon “a distraction.”

But in a surprise announcement posted to X on Super Bowl Sunday, Musk revealed the change in strategy, confirming a Wall Street Journal report earlier in the week that SpaceX was putting off plans for a Mars mission to focus on lunar landings instead.

“For those unaware, SpaceX has already shifted focus to building a self-growing city on the Moon, as we can potentially achieve that in less than 10 years, whereas Mars would take 20+ years,” wrote Musk. “The mission of SpaceX remains the same: extend consciousness and life as we know it to the stars.”

The practical advantages of this shift are clear. As Musk notes, Mars is only accessible when the planets align every 26 months, with each journey taking six months (or longer). Trips to the moon can launch every 10 days and would take just a few days to arrive.
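As a rough illustration of those cadences, the Python sketch below counts launch opportunities over a 10-year horizon. It assumes perfectly regular windows, every 26 months for Mars and every 10 days for the moon, with the first one at day zero. That is a simplification: real Mars windows vary somewhat from one opportunity to the next. The function name is hypothetical.

```python
DAYS_PER_YEAR = 365.25
DAYS_PER_MONTH = DAYS_PER_YEAR / 12  # average month length

MARS_SPACING_DAYS = 26 * DAYS_PER_MONTH  # a window roughly every 26 months
MOON_SPACING_DAYS = 10.0                 # a launch opportunity every ~10 days

def launch_opportunities(horizon_years: float, spacing_days: float) -> int:
    """Count evenly spaced launch windows in a horizon, including the one at day 0."""
    horizon_days = horizon_years * DAYS_PER_YEAR
    return int(horizon_days // spacing_days) + 1

for name, spacing in (("Mars", MARS_SPACING_DAYS), ("Moon", MOON_SPACING_DAYS)):
    print(f"{name}: {launch_opportunities(10, spacing)} windows in 10 years")
```

Under those assumptions a decade offers 5 Mars windows against 366 lunar ones, before even counting the difference between a six-month trip and one lasting a few days.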

Lunar landings are also a problem SpaceX already needs to solve. The company has a $4 billion contract with NASA to return astronauts to the moon using its Starship rocket. The Artemis III mission will attempt to land a crew on the moon in 2028, though it’s unclear whether SpaceX’s vehicle will be ready in time.

However, the pivot to the moon appears to be about more than just pragmatism. Musk has become increasingly focused on artificial intelligence and, in recent months, has suggested that his AI ambitions may overlap with his space ventures. In particular, he has floated the idea that space-based data centers could help solve the energy constraints currently holding back AI development.

Last week, Musk put his money where his mouth is by announcing that SpaceX had acquired his AI company xAI in a merger valuing the new entity at a whopping $1.25 trillion. In comments at an all-hands meeting at xAI on Tuesday evening, heard by The New York Times, Musk unveiled an ambitious vision for how the company could build a factory for AI data centers on the moon’s surface.

The plan includes a giant electromagnetic catapult called a “mass driver” to launch satellites from the lunar surface into space. He also described building “a self-sustaining city on the moon,” which could act as a stepping stone to Mars.

The pivot may also be in response to growing competition from Jeff Bezos, his chief rival in the private space race. The billionaire’s rocket company, Blue Origin, has finally started to deliver with its New Glenn launch vehicle, and sources told Ars Technica that Bezos wants his team to go “all in” on lunar exploration.

Crucially, Blue Origin is developing a crew transportation system that doesn’t require orbital refueling. SpaceX’s Starship, on the other hand, will require around 10 to 12 tanker flights to fill the vehicle with propellant before it sets off on a lunar mission, according to Space.com.

While Starship has a major payload advantage—more than 100 tons to the lunar surface—the relative simplicity of Blue Origin’s technology could allow it to land humans on the moon before its rival.

Despite the refocus on the moon, Musk insisted he hasn’t abandoned Mars. In his Sunday post, he emphasized that SpaceX still has plans to build a city on the red planet and missions to start this process will begin in five to seven years.

Given Musk’s record for overly ambitious timelines, his prognostications on both the moon and Mars should probably be taken with a pinch of salt. Nonetheless, it seems increasingly likely that humanity’s first off-Earth settlement will be a lot closer to home than we thought.

The post Elon Musk Says SpaceX Is Pivoting From Mars to the Moon appeared first on SingularityHub.

2026-02-11 04:23:25

The unexpectedly large impact of genetics could spur new efforts to find longevity genes.

Laura Oliveira fell in love with swimming at 70. She won her first competition three decades later. Longevity runs in her family. Her aunt Geny lived to 110. Her two sisters thrived and were mentally sharp beyond a century. They came from humble backgrounds, didn’t stick to a healthy diet—many loved sweets and fats—and lacked access to preventative screening or medical care. Extreme longevity seems to have been built into their genes.

Scientists have long sought to tease apart the factors that influence a person’s lifespan. The general consensus has been that genetics play a small role; lifestyle and environmental factors are the main determinants.

A new study examining two cohorts of twins is now challenging that view. After removing infections, injuries, and other factors that cut a life short, genetics account for roughly 55 percent of the variation in lifespan, far greater than previous estimates of 10 to 25 percent.

“The genetic contribution to human longevity is greater than previously thought,” wrote Daniela Bakula and Morten Scheibye-Knudsen at the University of Copenhagen, who were not involved in the study.

Dissecting the impact of outside factors versus genetics on lifespan isn’t just academic curiosity. It lends insight into what contributes to a long life, which bolsters the quest for genes related to healthy aging and strategies to combat age-related diseases.

“If we can understand why there are some people who can make it to 110 while smoking and drinking all their life, then maybe, down the road, we can also translate that to interventions or to medicine,” study author Ben Shenhar of the Weizmann Institute of Science told ScienceNews.

Eat well, work out, don’t smoke, and drink very moderately or not at all. These longevity tips are so widespread they’ve gone from medical advice to societal wisdom. Focusing on lifestyle factors makes sense. You can readily form healthy habits and potentially alter your genetic destiny, if only by a smidge, while the prevailing view held that genes hardly influence longevity.

Previous studies in multiple populations estimated the heritability of lifespan was roughly 25 percent at most. More recent work found even less genetic influence. The results poured cold water on efforts to uncover genes related to longevity, with some doubting their impact even if they could be found.

But the small role of genes in human longevity has had researchers scratching their heads. The estimated impact is far lower than in other mammals, such as wild mice, and is an outlier compared with other complex heritable traits in humans—ranging from psychiatric attributes to metabolism and immune system health—which are pegged at an average heritability of roughly 49 percent.

To find out why, the team dug deep into previous lifespan studies and found a potential culprit.

Most studies used data from people born in the 18th and 19th centuries, when accidents, infectious diseases, environmental pollution, and other hazards often caused an early demise. These outside factors likely masked intrinsic, or bodily, influences on longevity—for example, gradual damage to DNA and cellular health—and, in turn, led researchers to heavily underestimate the impact of genes on lifespan.

“Although susceptibility to external hazards can be genetically influenced, mortality in historical human populations was largely dominated by variation in exposure, medical care, and chance,” wrote Bakula and Scheibye-Knudsen.

The team didn’t set out to examine genetic influences on longevity. They were developing a mathematical model to gauge how aging varies in different populations. But by playing with the model, they realized that removing outside factors could vastly increase lifespan heritability.

To test the theory, they analyzed mortality data from Swedish twins—both identical and fraternal—born between 1900 and 1935. The time period encompassed some environmental extremes, including a deadly flu pandemic, a world war, and economic turmoil but also vast improvements in vaccination, sanitation, and other medical care.

Because identical twins share the same DNA, they’re a valuable resource for teasing apart the impact of nature versus nurture, especially if the twins were raised in different environments. Fraternal twins, meanwhile, share on average about half of the genetic variants that differ between people. By comparing lifespans between these two groups—with and without external factors added in using a mathematical model—the team estimated the impact of genes on longevity.
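The classic way to summarize this twin comparison is Falconer’s formula, h² = 2(r_MZ − r_DZ), where r_MZ and r_DZ are the lifespan correlations within identical and fraternal pairs. The Python sketch below implements only that textbook estimate. It is not the mortality model the researchers used, and the correlations in the example are invented for illustration.

```python
def falconer_heritability(r_mz: float, r_dz: float) -> float:
    """Falconer's twin-study estimate of heritability: h^2 = 2 * (r_MZ - r_DZ).

    Identical (MZ) twins share essentially all their genetic variants and
    fraternal (DZ) twins about half, so doubling the gap between their
    correlations attributes that gap to genes (assuming both kinds of twins
    share their environments to the same degree).
    """
    for r in (r_mz, r_dz):
        if not -1.0 <= r <= 1.0:
            raise ValueError("correlations must lie in [-1, 1]")
    # Clamp to [0, 1]: sampling noise can push the raw estimate outside that range.
    return min(1.0, max(0.0, 2.0 * (r_mz - r_dz)))

# Hypothetical twin correlations, chosen only to illustrate the arithmetic.
print(falconer_heritability(r_mz=0.55, r_dz=0.275))
```

With those made-up inputs, the estimate is 2 × (0.55 − 0.275) = 0.55, so 55 percent of lifespan variation would be attributed to genes. The formula rests on strong assumptions, such as identical and fraternal twins sharing their environments to the same degree. That is one reason modern studies use richer models.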

To further validate their model, the researchers applied it to another historical database of Danish twins born between 1890 and 1900, a period when deaths were often caused by infectious diseases. After excluding outside factors, results from both cohorts found the influence of genes accounted for roughly 55 percent of variation in lifespan, far higher than previous estimates. They unearthed similar results in a cohort of US siblings of centenarians.

Longevity aside, the analysis also found a curious discrepancy between the chances of inheriting various age-related diseases. Dementia and cardiovascular diseases are far more likely to run in families. Cancer, surprisingly, not so much. This suggests tumors are driven more by random mutations or environmental triggers.

The team emphasizes that the findings don’t mean longevity is completely encoded in your genes. According to their analysis, lifestyle factors could shift life expectancy by roughly five years, a small but not insignificant amount of time to spend with loved ones.

The estimates are hardly cut-and-dried. How genetics influence health and aging is complex. For example, genes that keep chronic inflammation at bay during aging could also increase chances of deadly infection earlier in life.

“Drawing a clear, bright line between intrinsic and extrinsic causes of death is not possible,” Bradley Willcox at the University of Hawaii, who was not involved in the study, told The New York Times. “Many deaths live in a gray zone where biology and environment collide.”

Although some experts remain skeptical, the findings could influence future research. Do genes have a larger impact on extreme longevity compared to average lifespan? If so, which ones and why? How much can lifestyle influence the aging process? According to Boston University’s Thomas Perls, who leads the New England Centenarian Study, the difference in lifespan for someone with only good habits versus no good habits could be more than 10 years.

The team stresses the analysis can’t cover everyone, everywhere, across all time. The current study mainly focused on Scandinavian twin cohorts, which hardly capture the genetic diversity and socioeconomic conditions of populations around the globe.

Still, the results suggest that future hunts for longevity-related genes could be made stronger by excluding external factors during analysis, potentially increasing the chances of finding genes that make outsized contributions to living a longer, healthier life.

“For many years, human lifespan was thought to be shaped almost entirely by non-genetic factors, which led to considerable skepticism about the role of genetics in aging and about the feasibility of identifying genetic determinants of longevity,” said Shenhar in a press release. “By contrast, if heritability is high, as we have shown, this creates an incentive to search for gene variants that extend lifespan, in order to understand the biology of aging and, potentially, to address it therapeutically.”

The post Your Genes Determine How Long You’ll Live Far More Than Previously Thought appeared first on SingularityHub.

2026-02-10 06:04:33

The strongest known form of quantum-secure communication is no longer limited to tabletop experiments.

Quantum communication could enable uncrackable transfer of information, but most approaches rely on trusted devices. Researchers have now shown that a method without this demanding requirement can operate over distances as large as 62 miles.

One of the central promises of a future quantum internet is provably secure communication. That’s thanks to one of the quirks of quantum physics: Observing a quantum state inevitably changes it. So if anyone attempts to intercept and read a message encoded in the quantum states of particles, they will alter it in the process, alerting the receiver to the breach.

Quantum communication speeds are too slow to transmit large amounts of information, so most schemes instead rely on an approach known as quantum key distribution. This involves using the quantum communication channel to share an encryption key between two parties, which they use to encode and decode messages sent over classical communication networks.
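The division of labor is easy to see in code. In this minimal Python sketch, `secrets.token_bytes` only stands in for the key bits the two parties would share after quantum key distribution. No quantum channel is simulated, which is an assumption of the sketch. The key is used as a one-time pad, the pairing that keeps the overall scheme information-theoretically secure. Practical systems may instead feed the key into a conventional cipher such as AES.

```python
import secrets

def one_time_pad(data: bytes, key: bytes) -> bytes:
    """XOR data with key; applying it twice with the same key restores the data."""
    if len(key) < len(data):
        raise ValueError("a one-time pad key must be at least as long as the message")
    return bytes(d ^ k for d, k in zip(data, key))

# Stand-in for key material both parties obtained over the quantum channel.
shared_key = secrets.token_bytes(64)

message = b"Transfer approved"
ciphertext = one_time_pad(message, shared_key)    # sent over an ordinary classical network
recovered = one_time_pad(ciphertext, shared_key)  # receiver decrypts with the same key
print(recovered.decode())
```

A one-time pad consumes one key bit per message bit and must never be reused. The rate at which quantum key distribution produces fresh key bits therefore directly limits how much can be sent this way.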

There have been impressive demonstrations of the technology’s potential, including an effort that beamed keys more than 8,000 miles via satellite and another that transmitted them more than 620 miles over optical fiber. But these feats used communication schemes that rely on assurances that the devices had no technical flaws and hadn’t been tampered with, which is hard to guarantee.

New research from China’s quantum communications supremo, Jian-Wei Pan, who was also behind the previous record-breaking research, has shown the ability to securely transmit keys over a distance of more than 62 miles even if the equipment used is compromised.

“The demonstration of device-independent [quantum key distribution] at the metropolitan scale helps close the gap between proof-of-principle quantum network experiments and real-world applications,” the researchers write in a paper reporting the results in Science.

Most quantum key distribution schemes send photons encoding quantum information over a series of trusted relays. In contrast, the device-independent scheme uses a pair of entangled photons, one of which stays with the sender while the other is sent to the receiver.

By carrying out a series of measurements on the entangled photons and subjecting them to a statistical test, the sender and receiver can verify if the particles are truly entangled and then use the data to extract a secret key only they can access. Crucially, the approach doesn’t rely on assumptions about the hardware used to generate the results.
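The article does not name the statistical test. Device-independent protocols are, however, typically built on a Bell test such as CHSH. That test combines four measured correlations into a value S that can never exceed 2 for classical, pre-programmed devices, while entangled particles can reach 2√2 (about 2.83). The Python sketch below computes only the ideal quantum prediction for a singlet state, with noise modeled by an assumed “visibility” parameter. A real experiment instead estimates each correlation from recorded outcomes.

```python
import math

def singlet_correlation(angle_a: float, angle_b: float, visibility: float = 1.0) -> float:
    """Quantum prediction for the +/-1 outcome correlation on a (noisy) singlet state.

    `visibility` in [0, 1] models noise: 1 is perfect entanglement, 0 is none.
    """
    return -visibility * math.cos(angle_a - angle_b)

def chsh_value(visibility: float = 1.0) -> float:
    """CHSH combination S = |E(a,b) - E(a,b') + E(a',b) + E(a',b')| at the standard angles."""
    a, a_prime = 0.0, math.pi / 2              # sender's two measurement settings
    b, b_prime = math.pi / 4, 3 * math.pi / 4  # receiver's two measurement settings

    def e(x: float, y: float) -> float:
        return singlet_correlation(x, y, visibility)

    return abs(e(a, b) - e(a, b_prime) + e(a_prime, b) + e(a_prime, b_prime))

for v in (1.0, 0.9, 0.7):
    s = chsh_value(v)
    verdict = "violates" if s > 2 else "does not violate"
    print(f"visibility {v}: S = {s:.3f} ({verdict} the classical bound of 2)")
```

Because S scales linearly with visibility here (S = 2√2 V), noise quickly erodes the violation. Any visibility below 1/√2, about 0.707, leaves no Bell violation and hence no device-independent guarantee.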

But the scheme has struggled to scale because it places strict demands on the efficiency with which quantum particles are detected and the strength of their entanglement. Any loss or noise can undermine security, so earlier experiments only operated over distances of a few hundred feet.

To achieve their latest results, Pan’s team used two network nodes, each consisting of a single rubidium atom trapped by lasers. Each atom is prepared in a specific quantum state and then excited so that it emits a photon entangled with the atom. Photons from the two nodes are then transmitted over optical fiber to a third node, where they interfere with each other and entangle the two atoms.

In a series of innovations, the team improved the creation and measurement of the entangled atoms. The changes resulted in reliable entanglement above 90 percent even at distances of up to 62 miles.

This enabled them to produce a positive key rate—essentially a guarantee that the protocol produces the secret bits that make up the key faster than they must be discarded due to error, noise, or interception by an adversary—up to the maximum distance they tested.
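To make “positive key rate” concrete, consider a standard asymptotic bound for CHSH-based device-independent QKD (Acín et al., 2007). It assumes collective attacks and unlimited data, and gives the secret bits per round as r = 1 − h(Q) − h((1 + √((S/2)² − 1))/2). Here Q is the error rate between the two parties’ raw bits, S is the CHSH value, and h is the binary entropy. The article does not say which bound Pan’s team used, so this textbook formula is not necessarily theirs. The Python sketch below only shows how errors and a weak Bell violation eat into the key until the rate hits zero.

```python
import math

def binary_entropy(p: float) -> float:
    """Shannon entropy h(p) of a biased coin, in bits."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def diqkd_key_rate(chsh_s: float, qber: float) -> float:
    """Asymptotic secret-key bits per round for a CHSH-based protocol (collective attacks).

    r = 1 - h(Q) - h((1 + sqrt((S/2)^2 - 1)) / 2); returns 0 when no key can be distilled.
    """
    s = min(chsh_s, 2 * math.sqrt(2))  # quantum maximum (Tsirelson's bound)
    if s <= 2.0:
        return 0.0  # no Bell violation, so no device-independent guarantee
    # Upper bound on what an eavesdropper can know, given the observed violation.
    eve_information = binary_entropy((1 + math.sqrt((s / 2) ** 2 - 1)) / 2)
    return max(0.0, 1 - binary_entropy(qber) - eve_information)

print(diqkd_key_rate(2.7, 0.02))  # strong violation, few errors: positive
print(diqkd_key_rate(2.3, 0.08))  # weak violation, more errors: 0.0
```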

Calculating a positive key rate typically relies on the assumption that the system can send an unlimited amount of data and therefore doesn’t always guarantee the scheme will be practical. But the researchers also tested how their protocol worked when restricted to a finite amount of data and found it could transmit a secure key over almost seven miles.

Steve Rolston, a quantum physicist at the University of Maryland, College Park, told The South China Morning Post that the work is a significant advance over previous efforts. However, he also noted that the data rates remain “abysmally small”—producing less than one bit of secure key every 10 seconds. The tests were also done on a coil of fiber in a laboratory rather than real-world telecom networks subject to environmental noise and temperature swings that can disrupt quantum states.
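A quick Python calculation shows what that rate means in practice. The sketch assumes the parties want a 256-bit key. That length is common for symmetric ciphers such as AES-256 and is used here purely as an example.

```python
def seconds_for_key(key_bits: int, bits_per_second: float) -> float:
    """Time needed to accumulate key_bits of secret key at a constant rate."""
    if bits_per_second <= 0:
        raise ValueError("rate must be positive")
    return key_bits / bits_per_second

MAX_RATE = 1 / 10  # "less than one bit of secure key every 10 seconds"
t = seconds_for_key(256, MAX_RATE)  # 256-bit key length is an assumption
print(f"at least {t:.0f} s, about {t / 60:.0f} minutes")
```

Since 0.1 bit per second is an upper bound, a single 256-bit key would take at least 2,560 seconds, roughly 43 minutes, to accumulate.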

Even so, the results mark an important milestone. By demonstrating device-independent quantum key distribution at city-scale distances, the study shows that the strongest known form of quantum-secure communication is no longer limited to tabletop experiments.

The post Scientists Send Secure Quantum Keys Over 62 Miles of Fiber—Without Trusted Devices appeared first on SingularityHub.

2026-02-08 02:02:27

Moltbook Was Pure AI Theater
Will Douglas Heaven | MIT Technology Review ($)

“As the hype dies down, Moltbook looks less like a window onto the future and more like a mirror held up to our own obsessions with AI today. It also shows us just how far we still are from anything that resembles general-purpose and fully autonomous AI.”

‘Quantum Twins’ Simulate What Supercomputers Can’t
Dina Genkina | IEEE Spectrum

“What analog quantum simulation lacks in flexibility, it makes up for in feasibility: quantum simulators are ready now. ‘Instead of using qubits, as you would typically in a quantum computer, we just directly encode the problem into the geometry and structure of the array itself,’ says Sam Gorman, quantum systems engineering lead at Sydney-based startup Silicon Quantum Computing.”

A New AI Math Startup Just Cracked 4 Previously Unsolved Problems
Will Knight | Wired ($)

“‘What AxiomProver found was something that all the humans had missed,’ Ono tells Wired. The proof is one of several solutions to unsolved math problems that Axiom says its system has come up with in recent weeks. The AI has not yet solved any of the most famous (or lucrative) problems in the field of mathematics, but it has found answers to questions that have stumped experts in different areas for years.”

Nasal Spray Could Prevent Infections From Any Flu Strain
Alice Klein | New Scientist ($)

“An antibody nasal spray has shown promise for protecting against flu in preliminary human trials, after first being validated in mice and monkeys. It may be useful for combatting future flu pandemics because it seems to neutralize any kind of influenza virus, including ones that spill over from non-human animals.”

A Peek Inside Physical Intelligence, the Startup Building Silicon Valley’s Buzziest Robot Brains
Connie Loizos | TechCrunch

“‘Think of it like ChatGPT, but for robots,’ Sergey Levine tells me, gesturing toward the motorized ballet unfolding across the room. …What I’m watching, he explains, is the testing phase of a continuous loop: data gets collected on robot stations here and at other locations—warehouses, homes, wherever the team can set up shop—and that data trains general-purpose robotic foundation models.”

This Is the Most Misunderstood Graph in AI
Grace Huckins | MIT Technology Review ($)

“To some, METR’s ‘time horizon plot’ indicates that AI utopia—or apocalypse—is close at hand. The truth is more complicated. …‘I think the hype machine will basically, whatever we do, just strip out all the caveats,’ he says. Nevertheless, the METR team does think that the plot has something meaningful to say about the trajectory of AI progress.”

AI Bots Are Now a Significant Source of Web Traffic
Will Knight | Wired ($)

“The viral virtual assistant OpenClaw—formerly known as Moltbot, and before that Clawdbot—is a symbol of a broader revolution underway that could fundamentally alter how the internet functions. Instead of a place primarily inhabited by humans, the web may very soon be dominated by autonomous AI bots.”

Fast-Charging Quantum Battery Built Inside a Quantum Computer
Karmela Padavic-Callaghan | New Scientist ($)

“Quach and his colleagues have previously theorized that quantum computers powered by quantum batteries could be more efficient and easier to make larger, which would make them more powerful. ‘This was a theoretical idea that we proposed only recently, but the new work could really be used as the basis to power future quantum computers,’ he says.”

Expansion Microscopy Has Transformed How We See the Cellular World
Molly Herring | Quanta Magazine

“Rather than invest in more powerful and more expensive technologies, some scientists are using an alternative technique called expansion microscopy, which inflates the subject using the same moisture-absorbing material found in diapers. ‘It’s cheap, it’s easy to learn, and indeed, on a cheap microscope, it gives you better images,’ said Omaya Dudin, a cell biologist at the University of Geneva who studies multicellularity.”

CRISPR Grapefruit Without the Bitterness Are Now in Development
Michael Le Page | New Scientist ($)

“It has been shown that disabling one gene via gene editing can greatly reduce the level of the chemicals that make grapefruit so bitter. …He thinks this approach could even help save the citrus industry. A bacterial disease called citrus greening, also known as huanglongbing, is having a devastating impact on these fruits. The insects that spread the bacteria can’t survive in areas with cold winters, says Carmi, but cold-hardy citrus varieties are so bitter that they are inedible.”

What We’ve Been Getting Wrong About AI’s Truth Crisis
James O’Donnell | MIT Technology Review ($)

“We were well warned of this, but we responded by preparing for a world in which the main danger was confusion. What we’re entering instead is a world in which influence survives exposure, doubt is easily weaponized, and establishing the truth does not serve as a reset button. And the defenders of truth are already trailing way behind.”

The post This Week’s Awesome Tech Stories From Around the Web (Through February 7) appeared first on SingularityHub.

2026-02-06 23:00:00

A new approach would help AI assess its own confidence, detect confusion, and decide when to think harder.

Have you ever had the experience of rereading a sentence multiple times only to realize you still don’t understand it? As taught to scores of incoming college freshmen, when you realize you’re spinning your wheels, it’s time to change your approach.

This process, becoming aware of something not working and then changing what you’re doing, is the essence of metacognition, or thinking about thinking.

It’s your brain monitoring its own thinking, recognizing a problem, and controlling or adjusting your approach. In fact, metacognition is fundamental to human intelligence and, until recently, has been understudied in artificial intelligence systems.

My colleagues Charles Courchaine, Hefei Qiu, and Joshua Iacoboni and I are working to change that. We’ve developed a mathematical framework designed to allow generative AI systems, specifically large language models like ChatGPT or Claude, to monitor and regulate their own internal “cognitive” processes. In some sense, you can think of it as giving generative AI an inner monologue, a way to assess its own confidence, detect confusion, and decide when to think harder about a problem.

Today’s generative AI systems are remarkably capable but fundamentally unaware. They generate responses without genuinely knowing how confident or confused they are, whether a response contains conflicting information, or whether a problem deserves extra attention. This limitation becomes critical in high-stakes applications such as medical diagnosis, financial advice, and autonomous vehicle decision-making, where an inability to recognize uncertainty can have serious consequences.

For example, consider a medical generative AI system analyzing symptoms. It might confidently suggest a diagnosis without any mechanism to recognize situations where it might be more appropriate to pause and reflect, like “These symptoms contradict each other” or “This is unusual, I should think more carefully.”

Developing such a capacity would require metacognition, which involves both the ability to monitor one’s own reasoning through self-awareness and to control the response through self-regulation.

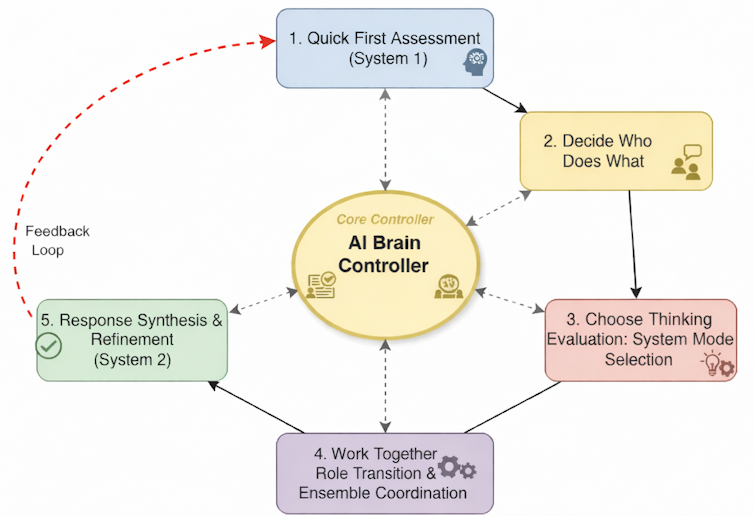

Inspired by neurobiology, our framework aims to give generative AI a semblance of these capabilities by using what we call a metacognitive state vector, which is essentially a quantified measure of the generative AI’s internal “cognitive” state across five dimensions.

One way to think about these five dimensions is to imagine giving a generative AI system five different sensors for its own thinking.

We quantify each of these concepts within an overall mathematical framework to create the metacognitive state vector and use it to control ensembles of large language models. In essence, the metacognitive state vector converts a large language model’s qualitative self-assessments into quantitative signals that it can use to control its responses.

For example, when a large language model’s confidence in a response drops below a certain threshold or the conflicts in the response exceed some acceptable levels, it might shift from fast, intuitive processing to slow, deliberative reasoning. This is analogous to what psychologists call System 1 and System 2 thinking in humans.
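A minimal Python sketch of that switching rule follows. It models only two of the five dimensions, confidence and conflict, because those are the ones named here. The class, the 0-to-1 scales, and the threshold values are all hypothetical, not the authors’ framework.

```python
from dataclasses import dataclass
from enum import Enum

class Mode(Enum):
    SYSTEM_1 = "fast, intuitive"
    SYSTEM_2 = "slow, deliberative"

@dataclass
class MetacognitiveState:
    """Hypothetical two-dimensional slice of the five-dimensional state vector."""
    confidence: float  # 0 = no confidence, 1 = fully confident
    conflict: float    # 0 = fully consistent, 1 = heavily contradictory

def choose_mode(state: MetacognitiveState,
                confidence_floor: float = 0.7,
                conflict_ceiling: float = 0.3) -> Mode:
    """Escalate to deliberate reasoning when confidence is low or conflict is high."""
    if state.confidence < confidence_floor or state.conflict > conflict_ceiling:
        return Mode.SYSTEM_2
    return Mode.SYSTEM_1

print(choose_mode(MetacognitiveState(confidence=0.92, conflict=0.05)))  # Mode.SYSTEM_1
print(choose_mode(MetacognitiveState(confidence=0.55, conflict=0.05)))  # Mode.SYSTEM_2
```

Using either signal alone as a trigger keeps the rule conservative: a confident but self-contradictory answer still gets a second, slower look.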

Imagine a large language model ensemble as an orchestra where each musician—an individual large language model—comes in at certain times based on the cues received from the conductor. The metacognitive state vector acts as the conductor’s awareness, constantly monitoring whether the orchestra is in harmony, whether someone is out of tune, or whether a particularly difficult passage requires extra attention.

When performing a familiar, well-rehearsed piece, like a simple folk melody, the orchestra easily plays in quick, efficient unison with minimal coordination needed. This is the System 1 mode. Each musician knows their part, the harmonies are straightforward, and the ensemble operates almost automatically.

But when the orchestra encounters a complex jazz composition with conflicting time signatures, dissonant harmonies, or sections requiring improvisation, the musicians need greater coordination. The conductor directs the musicians to shift roles: Some become section leaders, others provide rhythmic anchoring, and soloists emerge for specific passages.

This is the kind of system we’re hoping to create in a computational context by implementing our framework, orchestrating ensembles of large language models. The metacognitive state vector feeds a control system that acts as the conductor and can switch the ensemble into System 2 mode. The controller can then assign each large language model a different role, such as critic or expert, and coordinate their complex interactions based on the metacognitive assessment of the situation.
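A minimal Python sketch of that conductor logic follows. The `Model` type, the role tags, and the draft-critique-revise sequence are hypothetical illustrations of role assignment, not the authors’ architecture. Each “model” is simply a function from prompt to text, so real language model calls would have to be wrapped to fit.

```python
from typing import Callable, List

# Hypothetical interface: a "model" is any function from prompt text to answer text.
Model = Callable[[str], str]

def orchestrate(prompt: str, models: List[Model], deliberate: bool) -> str:
    """Answer with one fast pass (System 1) or an expert/critic loop (System 2)."""
    if not models:
        raise ValueError("need at least one model")
    if not deliberate:
        # System 1: a single quick answer, no coordination.
        return models[0](prompt)
    # System 2: the first model drafts an answer in the role of expert...
    draft = models[0](f"[expert] {prompt}")
    # ...every other model reviews that draft in the role of critic...
    critiques = [m(f"[critic] Check this answer to '{prompt}': {draft}") for m in models[1:]]
    # ...and the expert revises with all critiques in view.
    feedback = "\n".join(critiques)
    return models[0](f"[expert] Revise '{draft}' for '{prompt}' given:\n{feedback}")

# Stub models that log the role tag of each prompt they receive.
calls = []

def make_stub(name: str) -> Model:
    def model(prompt: str) -> str:
        calls.append((name, prompt.split()[0]))
        return f"answer from {name}"
    return model

ensemble = [make_stub("A"), make_stub("B"), make_stub("C")]
print(orchestrate("Do these symptoms fit?", ensemble, deliberate=True))
print(calls)
```

In practice, `deliberate` would be set by whatever monitors the metacognitive state, for example a confidence threshold like the one described earlier. The System 2 path costs several model calls instead of one, which is the resource trade-off the framework is meant to manage.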

The implications extend far beyond making generative AI slightly smarter. In health care, a metacognitive generative AI system could recognize when symptoms don’t match typical patterns and escalate the problem to human experts rather than risking misdiagnosis. In education, it could adapt teaching strategies when it detects student confusion. In content moderation, it could identify nuanced situations requiring human judgment rather than applying rigid rules.

Perhaps most importantly, our framework makes generative AI decision-making more transparent. Instead of a black box that simply produces answers, we get systems that can explain their confidence levels, identify their uncertainties, and show why they chose particular reasoning strategies.

This interpretability and explainability is crucial for building trust in AI systems, especially in regulated industries or safety-critical applications.

Our framework does not give machines consciousness or true self-awareness in the human sense. Instead, our hope is to provide a computational architecture for allocating resources and improving responses that also serves as a first step toward more sophisticated approaches for full artificial metacognition.

The next phase in our work involves validating the framework with extensive testing, measuring how metacognitive monitoring improves performance across diverse tasks, and extending the framework to start reasoning about reasoning, or metareasoning. We’re particularly interested in scenarios where recognizing uncertainty is crucial, such as in medical diagnoses, legal reasoning, and generating scientific hypotheses.

Our ultimate vision is generative AI systems that don’t just process information but understand their cognitive limitations and strengths. This means systems that know when to be confident and when to be cautious, when to think fast and when to slow down, and when they’re qualified to answer and when they should defer to others.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

The post Scientists Want to Give ChatGPT an Inner Monologue to Improve Its ‘Thinking’ appeared first on SingularityHub.

2026-02-06 07:42:51

Each finger can bend backwards for ultra-flexible crawling and grasping.

Here’s a party trick: Try opening a bottle of water with your thumb and pointer finger while holding it in the same hand, without spilling. It sounds simple, but the feat requires strength, dexterity, and coordination. Our hands have long inspired robotic mimics, but mechanical facsimiles still fall far short of their natural counterparts.

To Aude Billard and colleagues at the Swiss Federal Institute of Technology, trying to faithfully recreate the hand may be the wrong strategy. Why limit robots to human anatomy?

Billard’s team has now developed a prototype similar to Thing from The Addams Family.

Mounted on a robotic arm, the hand detaches at the wrist and transforms into a spider-like creature that can navigate nooks and crannies to pick up objects with its finger-legs. It then skitters on its fingertips back to the arm while holding on to its stash.

At a glance, the robot looks like a human hand. But it has an extra trick up its sleeve: It’s symmetrical, in that every finger is the same. The design essentially provides the hand with multiple thumbs. Any two fingers can pinch an object as opposing finger pairs. This makes complex single-handed maneuvers, like picking up a tube of mustard and a Pringles can at the same time, far easier. The robot can also bend its fingers forwards and backwards in ways that would break ours.
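Counting opposing pairs shows why symmetry helps. The Python sketch below makes a deliberate simplification. In the human-like case, only the thumb can oppose the other fingers, although real hands manage weaker pinches between other pairs. In the symmetric case, any two fingers can.

```python
from itertools import combinations

def opposing_pairs(n_fingers: int, symmetric: bool) -> list[tuple[int, int]]:
    """List finger pairs that can pinch against each other (fingers numbered 0..n-1).

    In the asymmetric (human-like) case, finger 0 is the thumb.
    """
    if symmetric:
        # Every finger is identical, so any two can oppose each other.
        return list(combinations(range(n_fingers), 2))
    # Simplified human-like hand: only the thumb opposes the other fingers.
    return [(0, finger) for finger in range(1, n_fingers)]

print(len(opposing_pairs(5, symmetric=False)))  # 4
print(len(opposing_pairs(5, symmetric=True)))   # 10
print(len(opposing_pairs(6, symmetric=True)))   # 15
```

Under this simplification, a five-fingered symmetric hand has 10 possible pinching pairs instead of 4, and a six-fingered one has 15.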

“The human hand is often viewed as the pinnacle of dexterity, and many robotic hands adopt anthropomorphic designs,” wrote the team. But by departing from anatomical constraints, the robot is both a hand and a walking machine capable of tasks that elude our hands.

If you’ve ever tried putting a nut on a bolt in an extremely tight space, you’re probably very familiar with the limits of our hands. Grabbing and orienting tiny bits of hardware while holding a wrench in position can be extremely frustrating, especially if you have to bend your arm or wrist at an uncomfortable angle for leverage.

Sculpted by evolution, our hands can dance around a keyboard, perform difficult surgeries, and do other remarkable things. But their design can be improved. For one, our hands are asymmetrical and only have one opposable thumb, limiting dexterity in some finger pairs. Try screwing on a bottle cap with your middle finger and pinkie, for example. And to state the obvious, wrist movement and arm length restrict our hands’ overall capabilities. Also, our fingers can’t fully bend backwards, limiting the scope of their movement.

“Many anthropomorphic robotic hands inherit these constraints,” wrote the authors.

Partly inspired by nature, the team re-envisioned the concept of a hand or a finger. Rather than just a grasping tool, a hand could also have crawling abilities, a bit like octopus tentacles that seamlessly switch between movement and manipulation. Combining the two could extend the hand’s dexterity and capabilities.

The team’s design process began with a database of standard hand models. Using a genetic algorithm, an optimization technique inspired by natural selection, the team ran simulations on how different finger configurations changed the hand’s abilities.
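The article gives no details of the team’s genetic algorithm, so the Python sketch below is a generic one. A population of candidate designs is scored, the fitter half survives, and offspring are made by mixing and randomly nudging their parents’ parameters. The genome of (finger count, joints per finger) and the toy fitness function are hypothetical stand-ins. In the real work, candidates were evaluated in simulation.

```python
import random

def genetic_search(fitness, bounds, pop_size=20, generations=30,
                   mutation_rate=0.3, seed=0):
    """Minimal genetic algorithm over integer genomes.

    bounds: one inclusive (low, high) pair per gene.
    Returns the best genome found and the best fitness seen in each generation.
    """
    rng = random.Random(seed)

    def random_genome():
        return tuple(rng.randint(lo, hi) for lo, hi in bounds)

    def crossover(a, b):
        # Uniform crossover: each gene comes from one parent at random.
        return tuple(rng.choice(pair) for pair in zip(a, b))

    def mutate(genome):
        # Nudge each gene up or down by one with probability mutation_rate.
        genes = []
        for gene, (lo, hi) in zip(genome, bounds):
            if rng.random() < mutation_rate:
                gene = min(hi, max(lo, gene + rng.choice((-1, 1))))
            genes.append(gene)
        return tuple(genes)

    population = [random_genome() for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: keep the fitter half
        children = [mutate(crossover(rng.choice(parents), rng.choice(parents)))
                    for _ in range(pop_size - len(parents))]
        population = parents + children  # elitism: survivors carry over unchanged
    population.sort(key=fitness, reverse=True)
    return population[0], history

# Placeholder objective, NOT the team's simulation: genome = (fingers, joints per finger).
# A real search would score each design by simulating grasping and crawling.
def toy_fitness(genome):
    fingers, joints = genome
    return -abs(fingers - 4) - abs(joints - 2)  # arbitrary target chosen for the demo

best, history = genetic_search(toy_fitness, bounds=[(3, 8), (1, 4)])
print(best, history[-1])
```

Keeping the parents unchanged (elitism) guarantees that the best score never gets worse from one generation to the next. The trade-off is slower exploration of unusual designs.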

By playing with the parameters, like how many fingers are needed to crawl smoothly, they zeroed in on a few guidelines. Five or six fingers gave the best performance, balancing grip strength and movement. Adding more digits caused the robot to stumble over its extra fingers.

In the final design, each three-jointed finger can bend towards the palm or to the back of the hand. The fingertips are coated in silicone for a better grip. Strong magnets at the base of the palm allow the hand to snap onto and detach from a robotic arm. The team made five- and six-fingered versions.

When attached to the arm, the hand easily pinches a Pringles can, tennis ball, and pen-shaped rod between two fingers. Its symmetrical design allows for some odd finger pairings, like using the equivalent of a ring and middle finger to tightly clutch a ball.

Other demos showcase its maneuverability. In one test, the robot twists off a mustard bottle cap while keeping the bottle steady. And because its fingers bend backwards, the hand can simultaneously pick up two objects, securing one on each side of its palm.

“While our robotic hand can perform common grasping modes like human hands, our design exceeds human capabilities by allowing any combination of fingers to form opposing finger pairs,” wrote the team. This allows “simultaneous multi-object grasping with fewer fingers.”

When released from the arm, the robot turns into a spider-like crawler. In another test, the six-fingered version grabs three blocks, none of which could be reached without detaching. The hand picks up the first two blocks by wrapping individual fingers around each. The same fingers then pinch the third block, and the robot skitters back to the arm on its remaining fingers.

The robot’s superhuman agility could let it explore places human hands can’t reach or traverse hazardous conditions during disaster response. It might also handle industrial inspection, like checking for rust or leakage in narrow pipes, or pick objects just out of reach in warehouses.

The team is also eyeing a more futuristic use: The hand could be adapted for prosthetics or even augmentation. Studies of people born with six fingers or those experimenting with an additional robotic finger have found the brain rapidly remaps to incorporate the digit in a variety of movements, often leading to more dexterity.

“The symmetrical, reversible functionality is particularly valuable in scenarios where users could benefit from capabilities beyond normal human function,” said Billard in a press release, but more work is needed to test the cyborg idea.

The post This Robotic Hand Detaches and Skitters About Like Thing From ‘The Addams Family’ appeared first on SingularityHub.