2026-02-13 08:00:00

Energy is up 17% this year. Materials 16.5%. Industrials 12%. Technology is flat.

Over the past month, $3.25 billion moved into XLE (energy) while $1.66 billion left XLK (tech).[1]

The logic isn’t obvious until you look at operating leverage.

| Sector | P/E | Rev Growth | Earnings Growth | Dividend Yield | Operating Leverage |
|---|---|---|---|---|---|
| Technology (XLK) | 32x | 19% | 18% | 0.7% | 1.0x |
| Energy (XLE) | 21x | 5% | 16% | 3.1% | 3.0x |
| Materials (XLB) | 34x | 8% | 17% | 1.3% | 2.2x |
| Industrials (XLI) | 39x | 8% | 14% | 1.8% | 1.9x |

Energy’s 5% revenue growth becomes 16% earnings growth through 3x operating leverage. The sector trades at 21x versus tech’s 32x, while paying a 3% dividend yield that cushions downside.[2]
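A quick way to sanity-check the leverage column is to divide earnings growth by revenue growth. The sketch below does that with the growth figures from the table. The ratio is my assumption about how to approximate operating leverage, not necessarily how the table's column was built, and the results land close to the published figures rather than exactly on them.

```python
# Approximate operating leverage as earnings growth / revenue growth.
# Growth figures (in percent) are copied from the table; the ratio itself
# is an illustrative approximation, not a confirmed methodology.
sectors = {
    "Technology (XLK)": (19, 18),
    "Energy (XLE)": (5, 16),
    "Materials (XLB)": (8, 17),
    "Industrials (XLI)": (8, 14),
}


def operating_leverage(rev_growth_pct, earnings_growth_pct):
    """Return earnings growth per point of revenue growth."""
    return earnings_growth_pct / rev_growth_pct


leverage = {
    name: round(operating_leverage(rev, earn), 1)
    for name, (rev, earn) in sectors.items()
}

for name, ratio in leverage.items():
    print(f"{name}: {ratio}x")
```

Energy comes out at 3.2x on this measure, against roughly 0.9x for technology.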

The asymmetry matters. If tech misses estimates by 5 points, the 32x multiple contracts. If energy misses by 5 points, dividends cushion the fall. One has a trapdoor. The other has a floor.

Hyperscalers will spend $600 billion on infrastructure in 2026.[3] Data centers already consume 4% of US electricity. By 2028, that share could reach 12%.[4]

The bet has risks. Energy at 21x P/E isn’t cheap by historical standards; it’s expensive. The sector typically trades at 10-15x. Grid buildouts face 3-year transformer lead times & 10-year permitting cycles. If AI monetization disappoints, hyperscalers will cut capex as fast as they deployed it. Ask anyone who held fiber stocks in 2001.
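To put the valuation risk in numbers, here is a rough sketch of what a return to the historical 10-15x range would mean for price. It assumes earnings stay flat, which is a simplification for illustration only.

```python
# Price change implied if a P/E multiple reverts while earnings stay flat.
# Holding earnings constant is an assumption made purely for illustration.
CURRENT_PE = 21


def price_change_from_multiple(current_pe, target_pe):
    """Fractional price change when only the multiple moves."""
    return target_pe / current_pe - 1


for target_pe in (15, 10):
    change = price_change_from_multiple(CURRENT_PE, target_pe)
    print(f"{CURRENT_PE}x -> {target_pe}x: {change:.0%}")
```

On those assumptions, a reversion to the top of the historical range costs about 29%, and a reversion to the bottom about 52%.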

In 2026, the market is betting on hard infrastructure.

[1] ETFdb Fund Flows, February 2026

[2] Correction (Feb 14, 2026) : The original version of this post added dividend yield to earnings growth to estimate “total return potential.” This was methodologically incorrect, because dividends are paid from earnings, not in addition to them. The corrected version presents valuation (P/E) and dividend yield as separate considerations.

[3] IEEE ComSoc, December 2025

[4] DOE Data Center Report, 2025

2026-02-09 08:00:00

In AI, distribution is king. Skills are seizing the crown.

Skills are programs written in English. They tell an agent how to accomplish a task : which APIs to call, what format to use, how to handle edge cases. A skill transforms an agent from a conversationalist into an operator.

Remember Trinity in The Matrix? “Can you fly that thing?” Neo asks. “Not yet,” she says. Seconds later, Tank uploads a B-212 helicopter pilot program directly into her mind. She steps into the cockpit & flies.

That’s what skills feel like. You don’t learn an interface. You acquire a capability. Skills encode institutional knowledge in executable form. Training becomes unnecessary because the capability transfers instantly.

A lot of people are looking to fly helicopters. The top MCP server aggregator has 81,000 stars. Anthropic’s official skills repository has 67,000. Cursor rules : 38,000. OpenClaw’s awesome-skills list, which curates 3,000 community-built skills : 12,500.

For consumers, software discovery disappears. A user asks their agent to track expenses or categorize last month’s spending. The agent finds the skill. The user never knows the tool exists, aside from a subscription.

For enterprises, IT provisions applications by role. A sales rep gets Salesforce. A marketer gets HubSpot. An analyst gets Tableau. Each persona receives a bundle of icons : all requiring training, all adding cognitive load.

In the skills era, enterprises provision capabilities instead of applications.

FP&A teams receive skills that optimize budget variance analysis, pulling data from NetSuite & formatting reports in the CFO’s preferred structure. No training on pivot tables. No documentation on report templates.

Every platform shift compresses the distance between user & value. The web required a URL & a browser. Mobile required a download & a homescreen slot. Skills require a sentence.

But this distribution layer carries risk. A recent analysis of 4,784 AI agent repositories found malware embedded in skill packages : credential harvesting, backdoors disguised as monitoring. We’ll all need trusted operators like Tank to verify our skills.

“Tank, I need a pilot program for an investment memo.”

2026-02-06 08:00:00

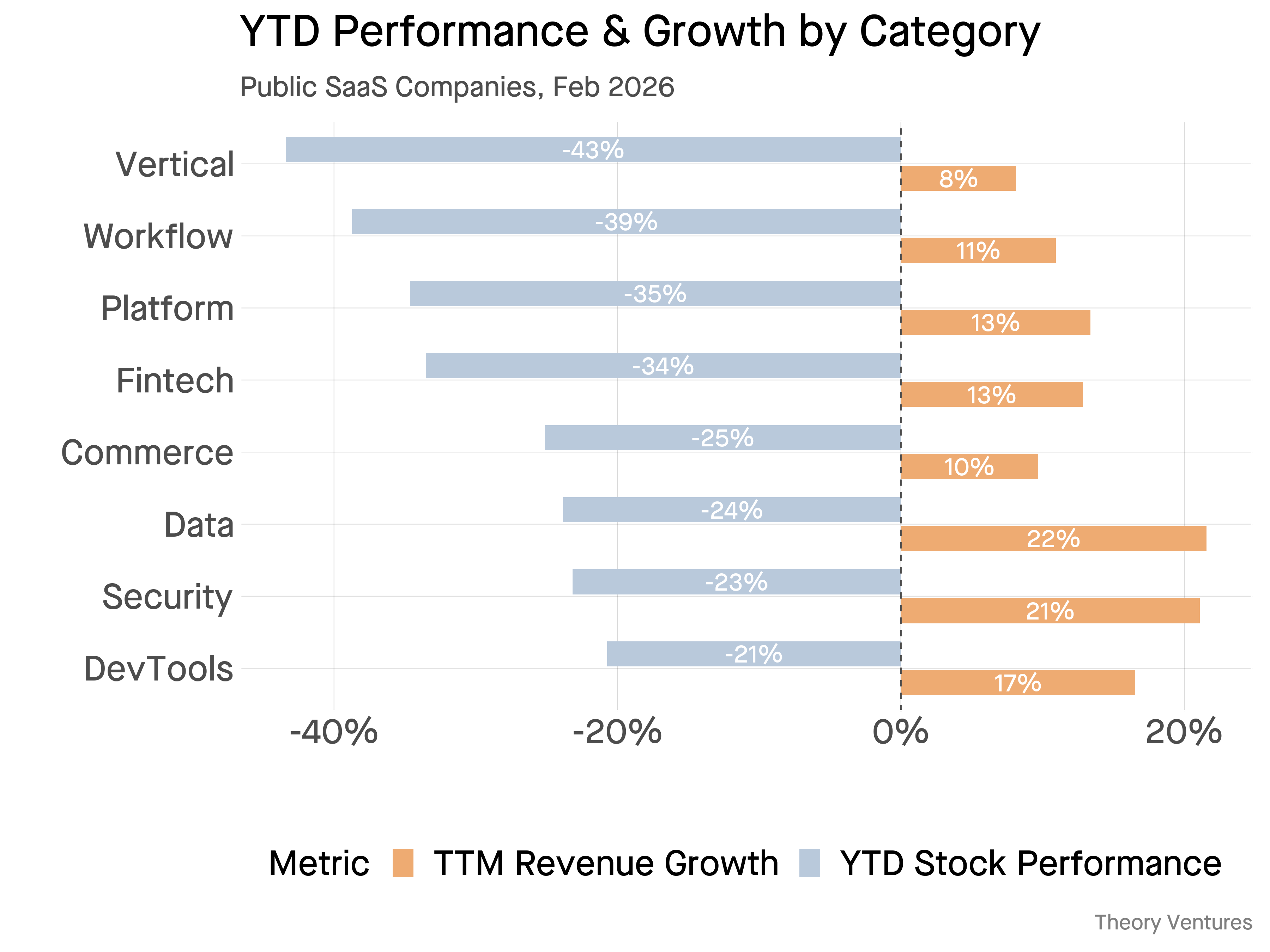

Vertical software has fallen 43% this year. DevTools, just 21%. The gap between them, twenty-two percentage points, tells you what markets actually believe about AI.

As Michael Mauboussin argues in Expectations Investing, prices contain information about what markets believe will happen.

The conventional interpretation is that investors fear AI will replace certain categories of software. But that explanation misses something important.

Vertical software companies like Veeva, AppFolio, & Procore possess genuine moats : regulatory barriers, deep integrations that make their products operating systems for entire industries, years of accumulated domain data that is particularly relevant for AI. If anything, these companies should be harder to displace than generic workflow tools.

Yet vertical software trades at the steepest discount, because these companies simply aren’t growing that fast.

Workflow companies, Monday, Asana, Smartsheet, whose core value proposition sits squarely in the crosshairs of AI agents, have fallen only slightly less at 39%.

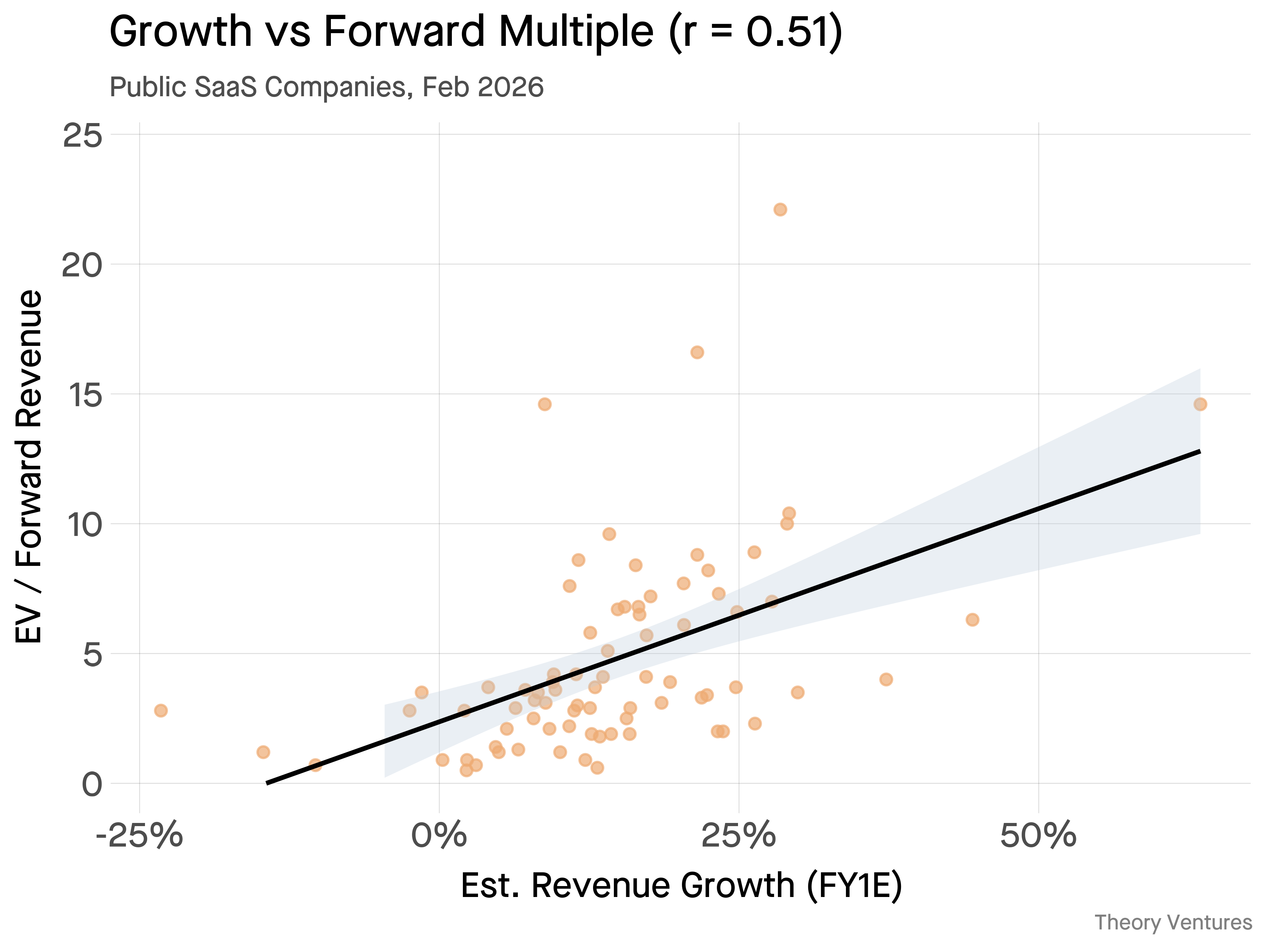

Two clusters emerge : slow growers like vertical software (8%) and workflow (11%), and fast growers like data infrastructure (22%) and security (21%). The gap in YTD performance between these clusters is roughly 20 percentage points. The correlation between forward growth and forward revenue multiple remains strong at 0.51.

AI changes the economics. More code generated means more code to manage, review, & deploy. Atlassian’s recent earnings confirm the thesis : Atlassian Intelligence surpassed 5 million monthly active users, cloud revenue grew 26% to cross $1 billion for the first time, & RPO grew 44% year-over-year.

The data infrastructure tailwind is structural. More AI means more queries, more embeddings, more vector operations.

Security remains the perennial insurance policy enterprises must pay. AI adoption brings additional surface areas to protect.

Investors are asking public software companies a simple question : can a company grow when the next wave of automation makes its customers more efficient rather than more numerous?

The stocks falling fastest are the ones where investors doubt the answer.

2026-02-05 08:00:00

Google’s Q4 2025 earnings call revealed a company in the midst of a spectacular AI acceleration.

“Our first-party models like Gemini now process over 10 billion tokens per minute via direct API used by our customers, up from 7 billion last quarter.”

This represents a staggering 52x increase year-over-year, up from ~8.3 trillion tokens/month in December 2024 to a monthly run rate of over 430 trillion.
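The 52x figure can be reproduced by converting the per-minute throughput in the quote into a 30-day total and comparing it with December 2024's monthly volume. The 30-day month is my assumption.

```python
# Convert tokens per minute into a monthly total, then compare with the
# December 2024 monthly volume quoted in the text.
TOKENS_PER_MINUTE = 10e9             # "over 10 billion tokens per minute"
MINUTES_PER_MONTH = 60 * 24 * 30     # assumes a 30-day month
DEC_2024_MONTHLY_TOKENS = 8.3e12     # ~8.3 trillion tokens/month

monthly_tokens = TOKENS_PER_MINUTE * MINUTES_PER_MONTH
growth_multiple = monthly_tokens / DEC_2024_MONTHLY_TOKENS

print(f"Monthly run rate: {monthly_tokens / 1e12:.0f} trillion tokens")
print(f"Year-over-year multiple: {growth_multiple:.0f}x")
```

Ten billion tokens per minute works out to roughly 432 trillion tokens over 30 days, which is about 52 times the December 2024 figure.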

For context on the scale :

“Nearly 350 customers each process more than 100 billion tokens.”

Microsoft reported over 250 customers projected to process more than 1 trillion tokens annually - a 10x higher threshold, suggesting their largest customers are consuming significantly more tokens per account.

While volume is exploding, costs are plummeting. Google announced :

“We were able to lower Gemini serving unit costs by 78%.”

This means a 4.5x improvement in tokens per GPU hour.

Compare this to Microsoft’s update last year, where they highlighted a 90% increase in tokens per GPU. Google’s 4.5x (or 350%) improvement suggests they are finding massive efficiencies in their TPU infrastructure and model architecture.
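The conversion from a cost reduction to a throughput multiple is worth spelling out. The sketch assumes the cost of a GPU hour is unchanged, so cost per token moves inversely with tokens per GPU hour. That is my simplification, not something stated on the call.

```python
# Translate a unit-cost reduction into a tokens-per-GPU-hour multiple.
# Assumes the cost of a GPU hour is constant, so cost per token is
# inversely proportional to throughput (an illustrative simplification).
def throughput_multiple(cost_reduction):
    """Multiple of tokens per GPU hour implied by a fractional cost cut."""
    return 1 / (1 - cost_reduction)


google_multiple = throughput_multiple(0.78)   # 78% lower serving unit cost
microsoft_multiple = 1 + 0.90                 # 90% increase in tokens per GPU

print(f"Google: {google_multiple:.1f}x ({google_multiple - 1:.0%} increase)")
print(f"Microsoft: {microsoft_multiple:.1f}x")
```

A 78% cost cut implies about 4.5x the throughput, against 1.9x for a 90% increase.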

The AI boom is translating directly to revenue :

“Backlog grew 55% to $240 billion.”

This compares to Microsoft’s RPO of $625 billion, 45% of which comes from OpenAI.

Gemini Enterprise has sold more than 8 million paid seats just four months after launch. Google Cloud revenue grew 48% to $17.7 billion, outpacing Azure’s 39% growth.

To fuel this growth, Google is committing capital at an unprecedented scale.

“Our 2026 CapEx investments are anticipated to be in the range of $175 to $180 billion.”

If Google alone is spending ~$175B, the hyperscalers collectively (Google, Microsoft, Amazon, & Meta) could drive $500B to $750B in data center CapEx this year. This level of investment signals their conviction that the demand for tokens is only just beginning.

Currently, AI infrastructure spending is ~1.6% of GDP, compared to the peak of the railroad era at 6.0%. At this rate, the AI data center buildout would match the national highway system measured as investment share of GDP.

Google Cloud is growing at 48% while Gemini serving unit costs have fallen 78%. The efficiency of the business is unparalleled.

2026-02-04 08:00:00

Leveraged software companies running on leveraged infrastructure. When AI compresses software revenue, the stress doesn’t stop at equity. It cascades into debt.

BDC assets hit $475 billion in Q1 2025.[1] Software comprises 23% of Ares Capital, the largest BDC.[2]

Shares of Blue Owl, Ares, & KKR dropped 9%+ on Tuesday. UBS estimates 35% of BDC portfolios face AI disruption.[3]

BDCs (Business Development Companies) are publicly traded private credit funds. They became the primary lenders to software over the last decade as private equity sponsors bought software companies with debt. The sponsors’ thesis was simple. Software revenue is durable, so lenders will accept 4-6x EBITDA leverage.[4]

AI is already writing code, conducting legal research, & managing workflows cheaper than legacy SaaS. The recurring revenue backing those loans is the target. Anthropic’s autonomous legal agents announcement sent LegalZoom & Thomson Reuters down 12%, echoing ChatGPT’s impact on Chegg & Stack Overflow. AI can vaporize software revenue.

The leverage extends beyond software into infrastructure. Oracle plans to raise $50b this year for cloud buildout, roughly half in debt.[5] CoreWeave financed 87% of a $7.5b expansion with debt.[6] Meta’s Hyperion data center in Louisiana is higher still, at 91.5% debt ($27b debt, $2.5b equity). Blue Owl Capital led the equity, with PIMCO anchoring the debt.[7]
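To see why these debt shares matter, here is a small sketch that recomputes the Hyperion ratio and estimates how much equity a modest fall in asset value would erase. The 5% decline is hypothetical. The sketch also assumes losses hit equity first and that the debt is owed in full.

```python
# Debt share of a financing, and the equity wiped out by a fall in asset value.
# Assumes equity absorbs losses first and debt is owed in full.
def debt_share(debt, equity):
    """Fraction of total capital that is debt."""
    return debt / (debt + equity)


def equity_loss(asset_decline, share_of_debt):
    """Fraction of equity erased by a fractional decline in asset value."""
    return min(1.0, asset_decline / (1 - share_of_debt))


ASSET_DECLINE = 0.05  # hypothetical 5% drop in asset value

hyperion = debt_share(27.0, 2.5)  # $27b debt, $2.5b equity
coreweave = 0.87                  # debt share quoted in the text

print(f"Hyperion debt share: {hyperion:.1%}")
print(f"Hyperion equity loss: {equity_loss(ASSET_DECLINE, hyperion):.0%}")
print(f"CoreWeave equity loss: {equity_loss(ASSET_DECLINE, coreweave):.0%}")
```

At 91.5% debt, a 5% decline in asset value removes about 59% of the equity. At 87% debt, the same decline removes about 38%.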

Blackstone’s credit platform (BCORE) has recently funded Aligned Data Centers with over $1 billion in senior secured debt and Colovore with $925 million. Oracle is reportedly negotiating a $14 billion debt package for a Michigan project. BDC assets allocated to data center infrastructure grew 33% year-over-year in Q2 2025.

Private credit is expected to pour $750b into AI infrastructure through 2030.[8] That capital faces several pressures.

Hyperscalers have extended GPU useful life to 6 years, but datacenter GPUs last 1-3 years in practice. A Google architect noted that thermal & electrical stress at 60-70% utilization limits physical lifespan.[9]
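The gap between booked & practical useful life shows up directly in depreciation expense. This sketch uses straight-line depreciation & a hypothetical purchase cost of 100 units. Both are my assumptions, not figures from any company's filings.

```python
# Straight-line depreciation under the booked 6-year life versus the
# shorter practical lifespans mentioned in the text.
def annual_depreciation(cost, useful_life_years):
    """Straight-line depreciation expense per year."""
    return cost / useful_life_years


GPU_COST = 100.0   # hypothetical purchase cost, arbitrary units
BOOKED_LIFE = 6    # years, as extended by hyperscalers

booked = annual_depreciation(GPU_COST, BOOKED_LIFE)

for practical_life in (3, 1):
    actual = annual_depreciation(GPU_COST, practical_life)
    print(f"{practical_life}-year life: {actual / booked:.0f}x the booked annual expense")
```

If the hardware lasts 3 years, the true annual expense is twice what a 6-year schedule records. At 1 year, it is six times.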

Oracle’s credit-default swaps have tripled since September, even as the company generates $15b in annual operating cash flow.[10]

AMD guided Q1 revenue to $9.8b. Despite 32% year-over-year growth, the stock dropped 9% as the guide missed analyst expectations by $300m.[11] Small deviations from peak expectations trigger outsized repricing.

One fund is showing distress. BlackRock TCP Capital Corp. announced a 19% writedown in its private debt fund last month.[12] The $1.7 billion fund invests in middle-market companies across software, healthcare, & manufacturing. Six investments dropped an average of 81% in fair value.

As the new phenomenon of debt in software & AI grows, any wobble in expectations will be amplified by borrowing.

2026-02-03 08:00:00

Meta’s first internal AI agent went from zero to thousands of engineers using it daily. That doesn’t happen by accident. On Tuesday, February 25th at 5:30 PM Pacific, the person who built it, Jim Everingham, will explain how.

Jim is the CEO & co-founder of Guild.ai. Previously, he led Meta’s developer infrastructure organization & was responsible for building Meta’s first internal AI agent, work that moved from experimentation to real adoption across engineering teams.

Before Meta, Jim built developer platforms at Instagram & Yahoo, giving him a unique perspective on what scales, & what creates long-term friction.

During this Office Hours, Jim & I will talk about :

This session is designed for founders & engineering leaders who are actively building & deploying AI systems today. The goal is a candid, grounded discussion focused on what actually works in production & what mistakes are hardest to unwind later.

If you’re interested in attending, please register here. As always, submit questions through the registration form & I’ll weave them into the conversation.

I look forward to welcoming Jim to Office Hours!