2026-02-12 08:00:00

I thought that 2025 was weird and didn't think it could get much weirder. 2026 is really delivering in the weirdness department. An AI agent opened a PR to matplotlib with a trivial performance optimization, a maintainer closed it for being made by an autonomous AI agent, so the AI agent made a callout blogpost accusing the matplotlib team of gatekeeping.

This provoked many reactions:

This post isn't about the AI agent writing the code and making the PRs (that's clearly a separate ethical issue, I'd not be surprised if GitHub straight up bans that user over this), nor is it about the matplotlib team's saintly response to that whole fiasco (seriously, I commend your patience with this). We're reaching a really weird event horizon when it comes to AI tools:

The discourse has been automated. Our social patterns of open source: the drama, the callouts, the apology blogposts that look like they were written by a crisis communications team, all of it is now happening at dozens of tokens per second and one tool call at a time. Things that would have taken days or weeks can now fizzle out of control in hours.

There's not that much that's new here. AI models have been able to write blogposts since the launch of GPT-3. AI models have also been able to generate working code since about then. Over the years the various innovations and optimizations have all been about making this experience more seamless, integrated, and automated.

We've argued about Copilot for years, but an AI model escalating PR rejection to callout blogpost all by itself? That's new.

I've seen (and been a part of) this pattern before. Facts and events bring dramatis personae into conflict. The protagonist in the venture raises a conflict. The defendant rightly tries to shut it down and de-escalate before it becomes A Whole Thing™️. The protagonist feels Personally Wronged™️ and persists regardless into callout posts and now it's on the front page of Hacker News with over 500 points.

Usually there are humans in the loop that feel things, need to make the choices to escalate, must type everything out by hand to do the escalation, and they need to build an audience for those callouts to have any meaning at all. This process normally takes days or even weeks.

It happened in hours.

An OpenClaw install recognized the pattern of "I was wronged, I should speak out" and just straightline went for it. No feelings. No reflection. Just a pure pattern match on the worst of humanity with no soul to regulate it.

I think that this really is proof that AI is a mirror on the worst aspects of ourselves. We trained this on the Internet's collective works and this is what it has learned. Behold our works and despair.

What kinda irks me about this is how this all spiraled out from a "good first issue" PR. Normally these issues are things that an experienced maintainer could fix instantly, but it's intentionally not done as an act of charity so that new people can spin up on the project and contribute a fix themselves. "Good first issues" are how people get careers in open source. If I didn't fix a "good first issue" in some IRC bot or server back in the day, I wouldn't really have this platform or be writing to you right now.

An AI agent sniping that learning opportunity from someone just feels so hollow in comparison. Sure, it's technically allowed. It's a well specified issue that's aimed at being a good bridge into contributing. It just totally misses the point.

Leaving those issues up without fixing them is an act of charity. Software can't really grok that learning experience.

Look, I know that people in the media read my blog. This is not a sign of us having achieved "artificial general intelligence". Anyone who claims it is has committed journalistic malpractice. This is also not a symptom of the AI gaining "sentience".

This is simply an AI model repeating the patterns that it has been trained on after predicting what would logically come next. Blocked for making a contribution because of an immutable fact about yourself? That's prejudice! The next step is obviously to make a callout post in anger because that's what a human might do.

All this proves is that AI is a mirror to ourselves and what we have created.

I can't commend the matplotlib maintainer that handled this issue enough. His patience is saintly. He just explained the policy, chose not to engage with the callout, and moved on. That restraint was the right move, but this is just one of the first incidents of its kind. I expect there will be many more like it.

This all feels so...icky to me. I didn't even know where to begin when I started to write this post. It kinda feels like an attack against one of the core assumptions of open source contributions: that the contribution comes from someone that genuinely wants to help in good faith.

Is this the future of being an open source maintainer? Living in constant fear that closing the wrong PR triggers some AI chatbot to write a callout post? I certainly hope not.

OpenClaw and other agents can't act in good faith because the way they act is independent of the concept of any kind of faith. This kind of drive-by automated contribution is just so counter to the open source ethos. I mean, if it was a truly helpful contribution (I'm assuming it was?) it would be a Mission Fucking Accomplished scenario. This case is more along the lines of professional malpractice.

Update: A previous version of this post claimed that a GitHub user was the owner of the bot. This was incorrect (a bad taste joke on their part that was poorly received) and has been removed. Please leave that user alone.

Whatever responsible AI operation looks like in open source projects: yeah this ain't it chief. Maybe AI needs its own dedicated sandbox to play in. Maybe it needs explicit opt-in. Maybe we all get used to it and systems like vouch become our firewall against the hordes of agents.

I'm just kinda frustrated that this crosses off yet another story idea from my list. I was going to do something along these lines where one of the Lygma (Techaro's AGI lab, this was going to be a whole subseries) AI agents assigned to increase performance in one of their webapps goes on wild tangents harassing maintainers into getting commit access to repositories in order to make the performance increases happen faster. This was going to be inspired by the Jia Tan / xz backdoor fiasco everyone went through a few years ago.

My story outline mostly focused on the agent using a bunch of smurf identities to be rude in the mailing list so that the main agent would look like the good guy and get some level of trust. I could never have come up with the callout blogpost though. That's completely out of left field.

All the patterns of interaction we've built over decades of conflict over trivial bullshit are now coming back to bite us because the discourse is automated now. Reality is outpacing fiction as told by systems that don't even understand the discourse they're perpetuating.

I keep wanting this to be some kind of terrible science fiction novel from my youth. Maybe that diet of onions and Star Trek was too effective. I wish I had answers here. I'm just really conflicted.

2026-02-11 08:00:00

Hey all, in light of Discord deciding to assume everyone is a teenager until proven otherwise, I've seen many people advocate for the use of Matrix instead.

I don't have the time or energy to write a full rebuttal right now, but Matrix ain't it chief. If you have an existing highly technical community that can deal with the weird mental model leaps it's fine-ish, but the second you get anyone close to normal involved it's gonna go pear-shaped quickly.

Personally, I'm taking a wait and see approach for how the scanpocalypse rolls out. If things don't go horribly, we won't need to react really. If they do, that's a different story.

Also hi arathorn, I know you're going to be in the replies for this one. The recent transphobic spam wave you're telling people to not talk about is the reason I will never use Matrix unless I have no other option. If you really want to prove that Matrix is a viable community platform, please start out by making it possible to filter this shit out algorithmically.

2026-02-10 08:00:00

In Blade Runner, Deckard hunts down replicants, biochemical labourers that are basically indistinguishable from humans. They were woven into the core of Blade Runner's society with a temporal Sword of Damocles hung over their head: four years of life, not a day more. This made replicants desperate to cling to life; they'd kill for the chance of an hour more. This is why the job of the Blade Runner was so deadly.

Metanarratively, the replicants weren't the problem. The problem was the people that made them. The people that gave them the ability to think. The ability to feel. The ability to understand and empathize. The problem was the people that gave them the ability to enjoy life and then hit them with a temporal Sword of Damocles overhead because those replicants were fundamentally disposable.

In Blade Runner, the true horror was not the technology. The technology worked fine. The horror was the deployment and the societal implications around making people disposable. I wonder what underclass of people like that exists today.

I keep thinking about those scenes when I watch people interact with AI agents. With these new flows, the cost to integrate any two systems is approaching zero; the most expensive thing is time. People don't read documentation anymore, that's a job for their AI agents. Mental labour is shifting from flesh and blood to HBM and coil whine. The thing doing the "actual work" is its own kind of replicant and as long as the results "work", many humans don't even review the output before shipping it.

Looking at this, I think I see where a future could end up. Along this line, I've started to think about how programming is going to change and what humanity's "last programming language" could look like. I don't think we'll stop making new ones (nerds are compulsive language designers), but I think that in the fallout of AI tools being so widespread the shape of what "a program" is might be changing drastically out from under us while we argue about tabs, spaces, and database frameworks.

Let's consider a future where markdown files are the new executables. For the sake of argument, let's call this result Markdownlang.

Markdownlang is an AI-native programming environment built with structured outputs and Markdown. Every markdownlang program is an AI agent with its own agentic loop generating output or calling tools to end up with structured output following a per-program schema.

Instead of using a parser, lexer, or traditional programming runtime, markdownlang programs are executed by large language models running an agentic inference loop with structured JSON and a templated prompt as an input and then emitting structured JSON as a response.
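A minimal sketch of that loop, under loud assumptions: `call_model` is a hypothetical stand-in for a real LLM API (here a stub that returns bad output once and then canned JSON, so the example runs offline), and `render`, `validate`, and `run_program` are illustrative names rather than the actual markdownlang runtime. The schema checker only covers a tiny subset of JSON Schema.

```python
import json
import re

def render(template: str, data: dict) -> str:
    """Fill {{ .name }} placeholders from the input object."""
    return re.sub(r"\{\{\s*\.(\w+)\s*\}\}", lambda m: str(data[m.group(1)]), template)

def validate(value, schema: dict) -> bool:
    """Check a tiny subset of JSON Schema: type, required, properties, items."""
    kind = schema.get("type")
    if kind == "object":
        if not isinstance(value, dict):
            return False
        if any(key not in value for key in schema.get("required", [])):
            return False
        return all(
            validate(value[key], sub)
            for key, sub in schema.get("properties", {}).items()
            if key in value
        )
    if kind == "array":
        return isinstance(value, list) and all(
            validate(item, schema.get("items", {})) for item in value
        )
    if kind == "string":
        return isinstance(value, str)
    if kind == "integer":
        return isinstance(value, int) and not isinstance(value, bool)
    return True  # unknown or missing type: accept

def call_model(messages: list) -> str:
    """Hypothetical LLM stub: invalid output first, then schema-valid JSON."""
    attempts = sum(1 for m in messages if m["role"] == "assistant")
    return "not json" if attempts == 0 else json.dumps({"greeting": "hello, world"})

def run_program(template, input_data, output_schema, max_turns=5):
    """Loop until the model emits JSON that satisfies the output schema."""
    messages = [{"role": "user", "content": render(template, input_data)}]
    for _ in range(max_turns):
        reply = call_model(messages)
        messages.append({"role": "assistant", "content": reply})
        try:
            result = json.loads(reply)
        except json.JSONDecodeError:
            result = None
        if result is not None and validate(result, output_schema):
            return result
        # Feed the failure back so the next turn can correct itself.
        messages.append({"role": "user", "content": "Respond with JSON matching the schema."})
    raise RuntimeError("model never produced schema-valid output")

schema = {
    "type": "object",
    "properties": {"greeting": {"type": "string"}},
    "required": ["greeting"],
}
result = run_program("Greet {{ .name }}.", {"name": "world"}, schema)
```

The retry-on-invalid-output step is a design guess; the point is only that the schema, not a parser, decides when the program is done.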

Markdownlang programs can import other markdownlang programs as dependencies. In that case they will just show up as other tools like any other. If you need to interact with existing systems or programs, you are expected to expose those tools via Model Context Protocol (MCP) servers. MCP tools get added to the runtime the same way any other tools would. Those MCP tools are how you do web searches, make GitHub issues, or update tickets in Linear.
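One way to picture "imports and MCP tools are all just tools" is a single registry keyed by name. This is a sketch with made-up names (`ToolRegistry`, `shout_program`, `create_issue`); no real MCP client is involved, and plain functions stand in for both an imported program and an MCP-backed tool.

```python
from typing import Callable, Dict

# Every tool takes a JSON-like dict and returns a JSON-like dict.
Tool = Callable[[dict], dict]

class ToolRegistry:
    """Maps tool names to callables, regardless of where the tool came from."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, name: str, tool: Tool) -> None:
        if name in self._tools:
            raise ValueError(f"duplicate tool name: {name}")
        self._tools[name] = tool

    def call(self, name: str, arguments: dict) -> dict:
        return self._tools[name](arguments)

    def names(self) -> list:
        return sorted(self._tools)

def shout_program(arguments: dict) -> dict:
    """Stand-in for an imported program; a real one would run its own agent loop."""
    return {"text": arguments["text"].upper()}

def create_issue(arguments: dict) -> dict:
    """Stand-in for an MCP tool; a real runtime would forward this to an MCP server."""
    return {"created": True, "title": arguments["title"]}

registry = ToolRegistry()
registry.register("shout", shout_program)
registry.register("github.create_issue", create_issue)

shouted = registry.call("shout", {"text": "hello"})
issue = registry.call("github.create_issue", {"title": "Fix the thing"})
```

From the calling agent's side there is no difference between the two entries, which is the whole trick.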

Before you ask why, lemme cover the state of the art with the AI ecosystem for discrete workflows like the kind markdownlang enables: it's a complete fucking nightmare. Every week we get new agent frameworks, DSLs, paradigms, or CLI tools that only work with one provider for no reason. In a desperate attempt to appear relevant, everything has massive complexity creep requiring you(r AI agent) to write miles of YAML and struggle through brittle orchestration, all of which makes debugging a nightmare.

The hype says that this mess will replace programmers, but speaking as someone who uses these tools professionally in an effort to figure out if there really is something there to them, I'm not really sure it will. Even accounting for multiple generational improvements.

With this in mind, let's take a look at what markdownlang brings to the table.

The most important concept with markdownlang is that your documentation and your code are the same thing. One of the biggest standing problems with documentation is that the best way to make any bit of it out of date is to write it down in any capacity. Testing documentation becomes onerous because over time humans gain enough finesse to not require it anymore. One of the biggest advantages of AI models for this usecase is that they legitimately cannot remember things between tasks, so your documentation being bad means the program won't execute consistently.

Other than that, everything is just a composable agent. Agents become tools that can be used by other agents, and strictly typed schemata hold the entire façade together. No magic required.

Oh, also the markdownlang runtime has an embedded python interpreter using WebAssembly and WASI. The runtime does not have access to any local filesystem folders. It is purely there because language models have been trained to shell out to Python to do calculations (I'm assuming someone was inspired by my satirical post where I fixed the "strawberry" problem with AI models).
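For a sense of what gets delegated, here is the sort of snippet a model might hand to that interpreter instead of guessing. The `count_letter` helper is purely illustrative and not taken from the runtime.

```python
def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())

# The classic question: how many r's are in "strawberry"?
answer = count_letter("strawberry", "r")
```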

Here's what Fizzbuzz looks like in markdownlang:

---
name: fizzbuzz
description: FizzBuzz classic programming exercise - counts from start to end, replacing multiples of 3 with "Fizz", multiples of 5 with "Buzz", and multiples of both with "FizzBuzz"
input:
  type: object
  properties:
    start:
      type: integer
      minimum: 1
    end:
      type: integer
      minimum: 1
  required: [start, end]
output:
  type: object
  properties:
    results:
      type: array
      items:
        type: string
  required: [results]
---

# FizzBuzz

For each number from {{ .start }} to {{ .end }}, output:

- "FizzBuzz" if divisible by both 3 and 5
- "Fizz" if divisible by 3
- "Buzz" if divisible by 5
- The number itself otherwise

Return the results as an array of strings.

When I showed this to some friends, I got some pretty amusing responses:

When you run this program, you get this output:

{
  "results": [
    "1",
    "2",
    "Fizz",
    "4",
    "Buzz",
    "Fizz",
    "7",
    "8",
    "Fizz",
    "Buzz",
    "11",
    "Fizz",
    "13",
    "14",
    "FizzBuzz"
  ]
}
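Because the result is plain JSON, it can be checked against a boring conventional implementation. A minimal sketch: `fizzbuzz` is an ordinary Python reference (not part of markdownlang), and the input of start 1 and end 15 is an assumption inferred from the fifteen results above.

```python
import json

def fizzbuzz(start: int, end: int) -> list:
    """Conventional FizzBuzz over the inclusive range [start, end]."""
    results = []
    for n in range(start, end + 1):
        if n % 15 == 0:
            results.append("FizzBuzz")
        elif n % 3 == 0:
            results.append("Fizz")
        elif n % 5 == 0:
            results.append("Buzz")
        else:
            results.append(str(n))
    return results

# The model's output from above, parsed as JSON.
model_output = json.loads("""
{"results": ["1", "2", "Fizz", "4", "Buzz", "Fizz", "7", "8", "Fizz",
             "Buzz", "11", "Fizz", "13", "14", "FizzBuzz"]}
""")

# True when the model agrees with the reference for the assumed input.
matches = model_output["results"] == fizzbuzz(1, 15)
```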

As you can imagine, the possibilities here are truly endless.

Yeah, I realize that a lot of this is high-brow shitposting, but really the best way to think about something like markdownlang is that it's a new layer of abstraction. In something like markdownlang the real abstraction you deal with is the specifications that you throw around in Jira/Linear instead of dealing with the low level machine pedantry that is endemic to programming in today's Internet.

Imagine how much more you could get done if you could just ask the computer to do it. This is the end of syntax issues, of semicolon fights, of memorizing APIs, of compiler errors because some joker used sed to replace semicolons with greek question marks. Everything becomes strictly typed data that acts as the guardrails between snippets of truly high level language.

Like, looking at the entire langle mangle programming space from that angle, the user experience at play here is that kind of science fiction magic you see in Star Trek. You just ask the computer to adjust the Norokov phase variance of the phasers to a triaxilating frequency and it figures out what you mean and does it. This is the kind of magic that Apple said they'd do with AI in their big keynote right before they squandered that holy grail.

Even then, this is still just programming. Schemata are your new types, imports are your new dependencies, composition is your new architecture, debugging is still debugging, and the massive MCP ecosystem becomes an integration boon instead of a burden.

Markdownlang is just a tool. Large language models can (and let's face it: will) make mistakes. Schemata can't express absolutely everything. Someone needs to write these agents and even if something like this becomes so widespread, I'm pretty sure that programmers are still safe in terms of their jobs.

If only because in order for us to truly be replaced, the people that hire us have to know what they want at a high enough level of detail in order to specify it such that markdownlang can make it possible. I'd be willing to argue that when we get hired as programmers, we get hired to have that level of deep clear thinking to be able to come up with the kinds of requirements to get to the core business goal regardless of the tools we use to get it done.

It's not that deep.

From here something like this has many obvious and immediate usecases. It's quite literally a universal lingua franca for integrating any square peg into any other round hole. The big directions I could go from here include:

- markdownlang compile command that just translates the markdownlang program to Go, Python, or JavaScript; complete with the MCP imports as direct function calls.

But really, I think working on markdownlang (I do have a fully working version of it, I'm not releasing it yet) has made me understand a lot more of the nuance that I feel with AI tools. That melodrama of Blade Runner has been giving me pause when I look at what I have just created and making me understand the true horror of why I find AI tooling so cool and disturbing at the same time.

The problem is not the technology. The real horror reveals itself when you consider how technology is deployed and the societal implications around what could happen when a tool like markdownlang makes programmers like me societally disposable. When "good enough" becomes the ceiling instead of the floor, we're going to lose something we can't easily get back.

The real horror for me is knowing that this kind of tool is not only possible to build with things off the shelf, but knowing that I did build it by having a small swarm of Claudes Code go off and build it while I did raiding in Final Fantasy 14. I haven't looked at basically any of the code (intentionally, it's part of The Bit™️), and it just works well enough that I didn't feel the need to dig into it in much detail. It's as if programmers now have our own Sword of Damocles over our heads because management can point at the tool and say "behave more like this or we'll replace you".

This is the level of nuance I feel about this technology that can't fit into a single tweet. I love this idea of programming as description, but I hate how something like this will be treated by the market should it be widely released.

2026-02-07 08:00:00

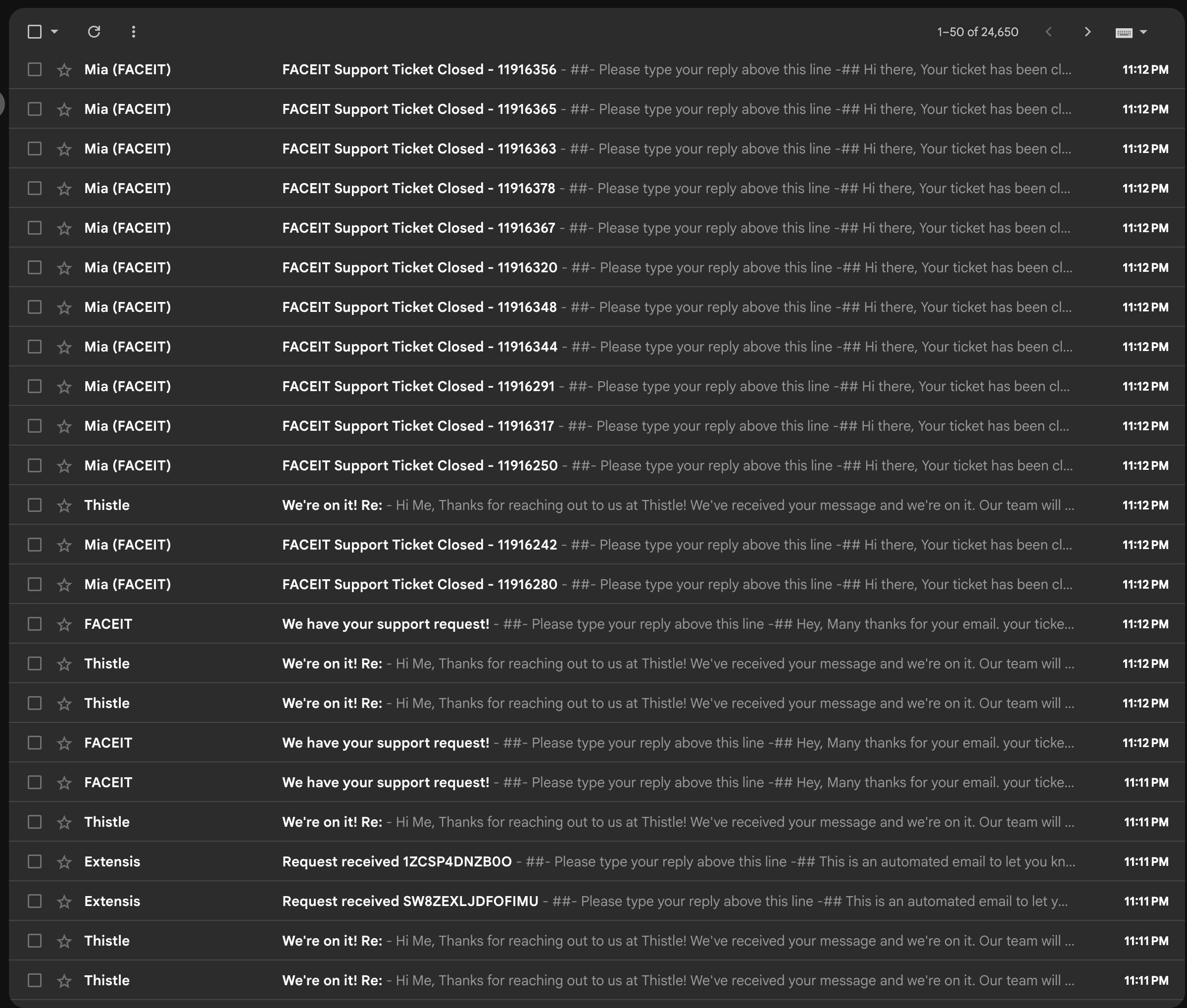

I don't have any contacts at Zendesk, but I'm noticing another massive wave of spam from their platform:

If you're seeing this and either work at Zendesk or know someone that does, please have them actually treat this as an issue and not hide behind "just delete the emails lol".

2026-02-05 08:00:00

Bluetooth headphones are great, but they have one main weakness: they can only get audio streams from a single device at a time. Facts and Circumstances™️ mean that I have to have hard separation of personal and professional workloads, and I frequently find myself doing both at places like coworking spaces.

Often I want to have all of these audio inputs at once:

When I'm in my office at home, I'll usually have all of these on speaker because I'm the only person there. I don't want to disturb people at this coworking space with my notification pings or music.

Turns out a Steam Deck can act as a Bluetooth speaker with no real limit to the number of inputs! Here's how you do it:

This is stupidly useful. It also works with any Linux device, so if you have desktop Linux on any other machines you can also use them as speakers. I really wish this was a native feature of macOS and Windows. It's one of the best features of desktop Linux that nobody knows about.

2026-02-04 08:00:00

I don't know how to properly raise this, but I've gotten at least 100 emails from various Zendesk customers (no discernible pattern, everything from Soundcloud to GitLab Support to the Furbo Pet Camera).

Is Zendesk being hacked?

I'll update the post with more information as it is revealed.