2026-02-13 23:27:28

This is Behind the Blog, where we share our behind-the-scenes thoughts about how a few of our top stories of the week came together. This week, we discuss support and saying RIP to FPDS.

JOSEPH: I think I might make this into a fuller article in a couple of weeks when it actually happens, but yesterday I realized that FPDS.gov is shutting down. That is the Federal Procurement Data System, a website that includes decades of records showing what the U.S. government bought, from which company, and when. I check it essentially every day, and at this point it has been the basis of countless articles of mine. Whether it’s an initial lead or a story in itself, FPDS is behind so many of them.

2026-02-13 05:56:04

Jesse Van Rootselaar, the 18-year-old suspected of killing eight people and injuring 25 in a mass shooting at a secondary school in Canada, created a Roblox game that allowed players to simulate a mass shooting in a level that looks like a shopping mall, Roblox has confirmed.

“We have removed the user account connected to this horrifying incident as well as any content associated with the suspect,” Roblox told 404 Media in an email. “We are committed to fully supporting law enforcement in their investigation.”

2026-02-13 03:34:37

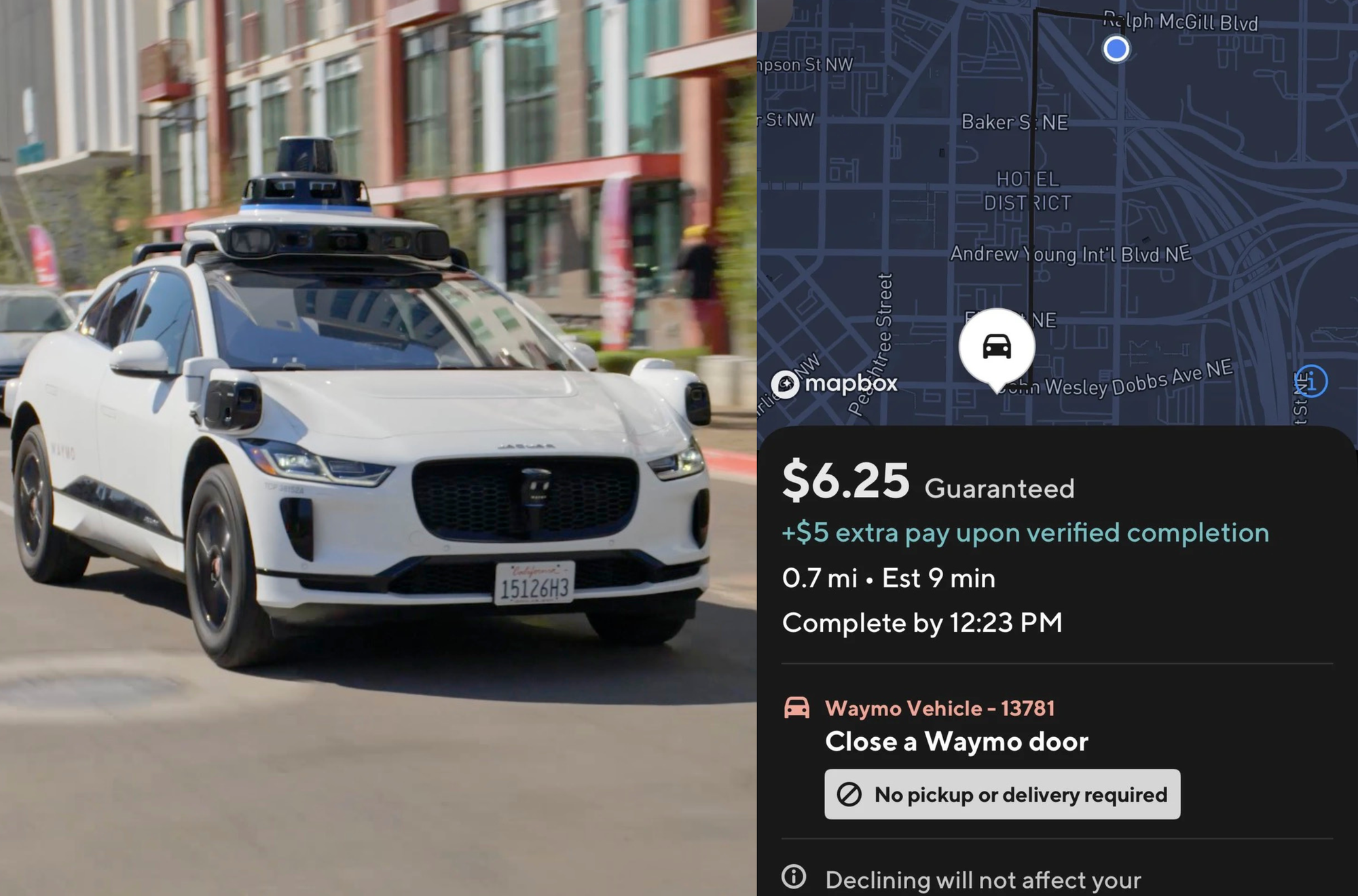

Waymo, Google’s autonomous vehicle company, and DoorDash, the delivery and gig work platform, have launched a pilot program that pays Dashers around $10, at least in one case, to travel to a parked Waymo and close a door that the previous passenger left open, according to a joint statement the companies gave to 404 Media.

The program is unusual in that Dashers are more often delivering food than helping out a driving robot. It also shows that even with autonomous vehicles, and the future they promise of metropolitan travel without a driver, a human is sometimes needed for the simplest yet most necessary tasks.

2026-02-12 22:14:48

The Miami-Dade Sheriff’s Office (MDSO) and the Los Angeles Police Department (LAPD) have bought access to GeoSpy, an AI tool that can near-instantly geolocate a photo using clues in the image such as architecture and vegetation, with plans to use it in criminal investigations, according to a cache of internal police emails obtained by 404 Media.

The emails are the first confirmation that law enforcement agencies have purchased GeoSpy’s technology. GeoSpy has previously published details on its website of investigations it says used the technology, but did not name any agencies that bought the tool.

“The Cyber Crimes Bureau is piloting a new analytical tool called GeoSpy. Early testing shows promise for developing investigative leads by identifying geospatial and temporal patterns,” an MDSO email reads.

2026-02-12 04:53:57

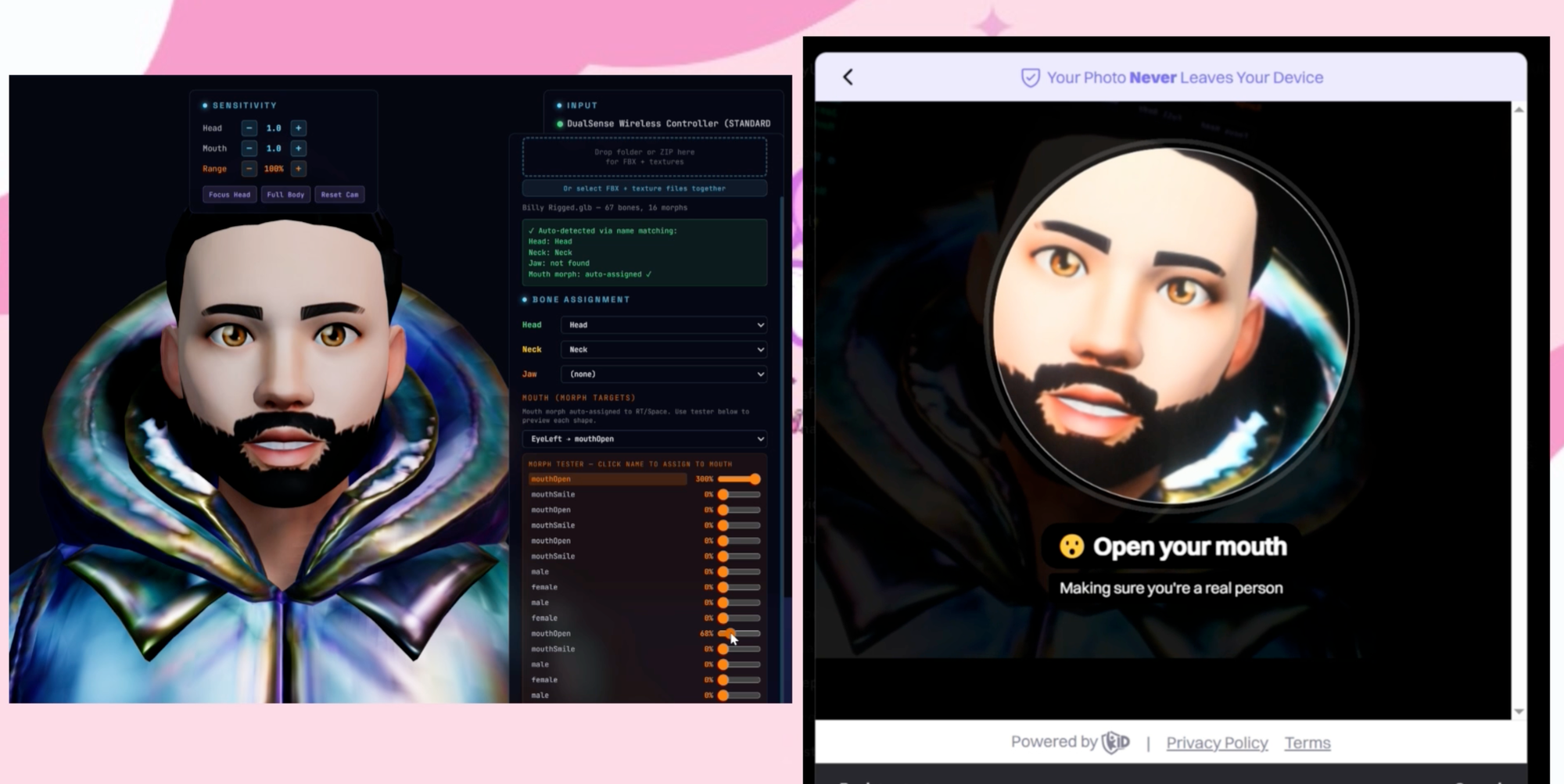

A newly released tool claims it can bypass Discord’s age verification system by allowing users to control a 3D model of a computer-generated man in their browser instead of scanning their real face.

On Monday, Discord announced it was launching teen-by-default settings globally, meaning that more users may be required to verify their age by uploading an identity document or taking a selfie. Users responded with widespread criticism, and Discord then published an update saying, “You need to be an adult to access age-restricted experiences such as age-restricted servers and channels or to modify certain safety settings.”

The tool, however, shows those age verification checks may be bypassed. 404 Media previously reported that kids said they were using photos of Trump and G-Man from Half-Life to bypass the age verification software in the popular VR game Gorilla Tag. That game uses the service k-ID, the same one Discord is using.

2026-02-12 02:06:01

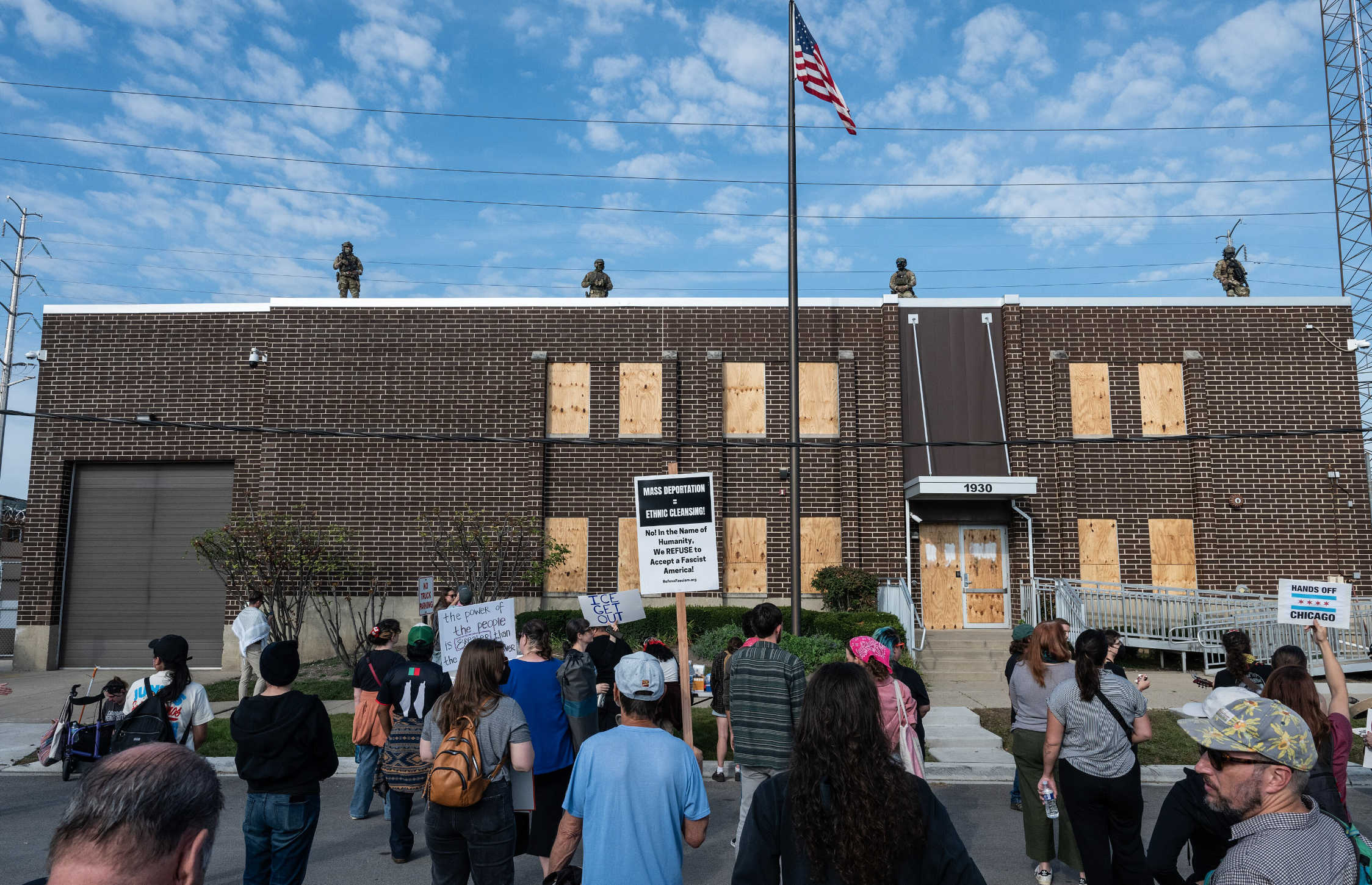

The legal saga over surveillance footage from within an Immigration and Customs Enforcement detention center in suburban Chicago has reached new levels of Kafkaesque absurdity, with the federal government losing three hard drives it was supposed to put footage on, refusing to provide footage from five critical surveillance cameras, and delivering soundless video of a highly contested visit from Department of Homeland Security Secretary Kristi Noem.

We have repeatedly covered an abuse lawsuit about living conditions within the Broadview detention facility. The federal government has claimed that 10 days of footage from within the facility, taken during a critical and highly contested period, was “irretrievably destroyed” and could not be produced as part of the lawsuit, which was brought by people being held at Broadview in what were allegedly horrendous conditions. It later said that due to a system crash, the footage was never recorded in the first place. The latest update in this case, however, deals with surveillance camera footage that was recorded and that a judge has ordered the federal government to turn over.

For this footage, the federal government first claimed that it could not afford the storage space needed to copy the footage it did have and produce it in discovery to the plaintiffs’ lawyers. The plaintiffs’ lawyers, representing Broadview’s detainees, then purchased 78 terabytes of empty hard drives and gave them to the federal government, according to court records. These included three 8-terabyte SSDs and three 18-terabyte hard drives.

Court records note that “plaintiffs provided defendants with five large hard drives to facilitate Defendants’ production, yet Defendants inexplicably lost three of them.” Emails submitted as evidence suggest that the U.S. government and the plaintiffs’ attorneys had a call to discuss the lost hard drives.

One of the emails sent by plaintiffs’ attorneys to the Department of Justice in late January notes that the government had been exceedingly slow in producing footage, taking weeks to turn over just a small amount of it.

“There should be plenty of hard drive space at Broadview’s disposal,” the email reads. “The team there should currently have in its possession 5 hard drives with 72 terabytes of space, provided by plaintiffs’ counsel at the last 2 site visits. We have received only one hard drive back from Broadview to date. Copying of November/December footage should have taken place over the past week so that it could be delivered to plaintiffs’ counsel today when they visit Broadview this afternoon. At the very least, that footage should be being copied now.”

The two sides then arranged a phone call, a summary of which was emailed by plaintiffs’ attorneys to the Department of Justice:

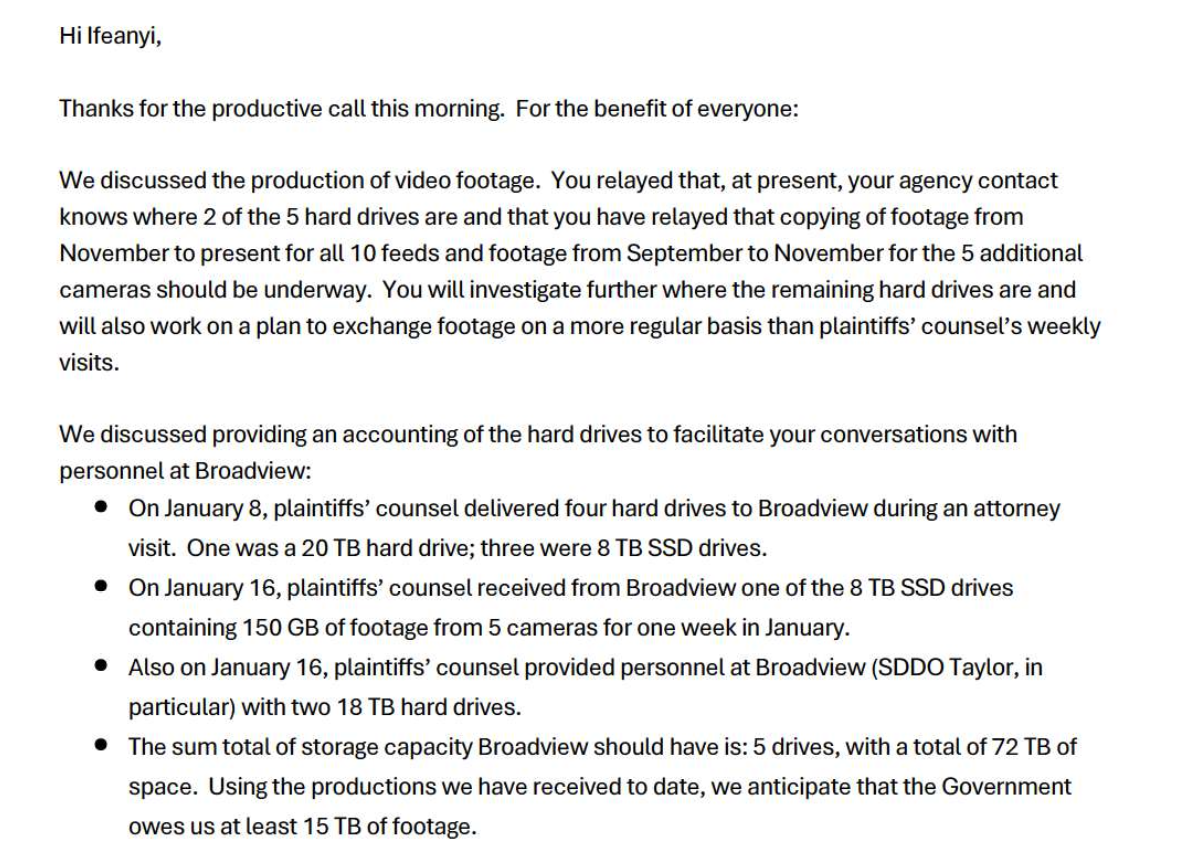

“Thanks for the productive call this morning. For the benefit of everyone:

We discussed the production of video footage. You relayed that, at present, your agency contact knows where 2 of the 5 hard drives are and that you have relayed that copying of footage from November to present for all 10 feeds and footage from September to November for the 5 additional cameras should be underway. You will investigate further where the remaining hard drives are and will also work on a plan to exchange footage on a more regular basis than plaintiffs' counsel's weekly visits.

We discussed providing an accounting of the hard drives to facilitate your conversations with personnel at Broadview:

• On January 8, plaintiffs' counsel delivered four hard drives to Broadview during an attorney visit. One was a 20 TB hard drive; three were 8 TB SSD drives.

• On January 16, plaintiffs' counsel received from Broadview one of the 8 TB SSD drives containing 150 GB of footage from 5 cameras for one week in January.

• Also on January 16, plaintiffs' counsel provided personnel at Broadview (SDDO Taylor, in particular) with two 18 TB hard drives.

• The sum total of storage capacity Broadview should have is: 5 drives, with a total of 72 TB of space. Using the productions we have received to date, we anticipate that the Government owes us at least 15 TB of footage.”

Days later, the Department of Justice told the plaintiffs’ attorneys that “they are still searching for those hard drives at Broadview.” The plaintiffs’ attorneys responded: “Losing multiple drives provided to facilitate speedy production is not acceptable,” and “the missing hard drives and lack of production of any footage predating January remains a significant, prejudicial issue.”

A filing by the plaintiffs with the court highlights some of the ongoing issues they have had with the government’s compliance with court-ordered discovery requirements, including the lost hard drives, missing footage, and the delivery of footage from only five of the 10 cameras it was supposed to cover. A separate filing notes that footage produced by the government from a high-profile visit by Noem is missing audio “despite visible professional microphones and cell phones with audio capabilities in the footage.”

“Plaintiffs have gone above and beyond their obligations under federal law to streamline rolling production of such footage, purchasing expensive hard drives and agreeing to transport and pick up those drives from Broadview during weekly attorney visits. Defendants agreed to this arrangement,” they wrote in the filing. “Yet, Defendants have fallen unacceptably short of their production obligations. Defendants have provided no footage from five of the ten camera feeds […] Defendants have also failed to provide footage for a near-two-month span for the remaining five camera feeds. What’s more, Defendants have purportedly lost multiple hard drives provided by Plaintiffs’ counsel […] There is no excuse for Defendants’ discovery failures.”

The filing notes that the five missing camera feeds are specifically from detainee isolation cells, “despite those cells being a key part of Plaintiffs’ complaint. The produced feeds show egregious conditions but were insufficient to provide Plaintiffs the discovery necessary to fully investigate their claims.” These cells were designed to hold one person at a time but were allegedly being used to hold multiple detainees at once during a critical period that the lawsuit covers; “such cells are also where ICE holds detainees with acute medical or mental health conditions, including those who have suffered medical emergencies while in detention, and where it holds detainees who have been subjected to use of force by ICE officers while inside the facility,” they add.

The filing says that the plaintiffs learned in late January that the government had lost the hard drives, when the government claimed that it had returned all of the hard drives to the plaintiffs’ attorneys and that it had run out of storage space on which to provide them the court-ordered footage.

“On January 28, Defendants’ counsel relayed that Broadview personnel had advised that they were out of storage space on drives provided by Plaintiffs, reporting that all hard drives provided by Plaintiffs had been returned to them. This was the first indication that some or all of 70 terabytes’ worth of hard drives were unaccounted for,” they wrote. “In the days since, the Government has admitted that it cannot find three of the five hard drives that should be in its possession.”

“Plaintiffs are waiting on months of footage. Every day that passes without this evidence compounds the prejudice to Plaintiffs’ ability to prepare for the upcoming hearing. Defendants’ foot dragging and poor organizational practices—and their instinct to rely on Plaintiffs to take the laboring oar for the purchase, delivery, pickup, and return of storage devices to facilitate Defendants’ discovery obligations—cannot be permitted.”