2025-12-30 15:10:54

How are you, hacker?

🪐 Want to know what's trending right now?

The Techbeat by HackerNoon has got you covered with fresh content from our trending stories of the day!

## The Hidden Cost of AI: Why It’s Making Workers Smarter, but Organisations Dumber

By @yuliiaharkusha [ 8 Min read ] AI boosts individual performance but weakens organisational thinking. Why smarter workers and faster tools can leave companies less intelligent than before. Read More.

By @ipinfo [ 9 Min read ] IPinfo reveals how most VPNs misrepresent locations and why real IP geolocation requires active measurement, not claims. Read More.

By @oxylabs [ 9 Min read ] Compare the best Amazon Scraper APIs for 2025, analyzing speed, pricing, reliability, and features for scalable eCommerce data extraction. Read More.

By @indrivetech [ 6 Min read ] How we built an n8n automation that reads Kibana logs, analyzes them with an LLM, and returns human-readable incident summaries in Slack Read More.

By @btcwire [ 2 Min read ] The project has opened the $SEC token presale, including early staking opportunities offering up to 100% APY during the presale phase. Chat to Pay is an instant Read More.

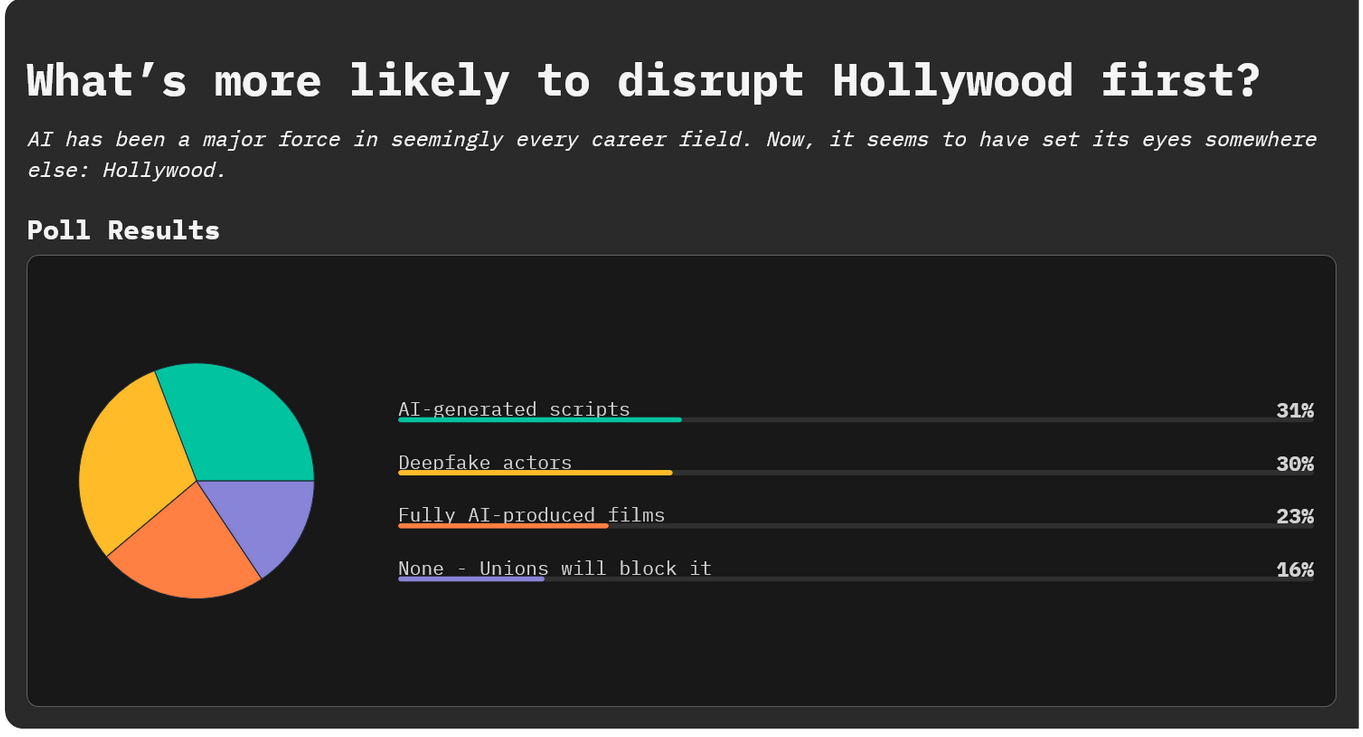

By @3techpolls [ 5 Min read ] AI has been a major force in seemingly every career field. Now, it seems to have set its eyes somewhere else: Hollywood. Read More.

By @shashanksingla [ 8 Min read ] Inside a lean RTB system processing 350M daily requests with sub-100ms latency, built by a 3-person team on a $10k cloud budget. Read More.

By @tigranbs [ 10 Min read ] How Rust's strict compiler transforms AI coding tools into reliable pair programmers and why the language is uniquely positioned for the age of agentic coding Read More.

By @theakashjindal [ 7 Min read ] AI focus shifts from automation to augmentation ("Collaborative Intelligence"), pairing AI speed with human judgment to boost productivity. Read More.

By @akiradoko [ 9 Min read ] The Java “tomb” is filling up. Here’s what’s being buried—and what you should use instead. Read More.

By @tolstykhzhe [ 9 Min read ] I became a project manager in a game company in 2021 and witnessed how production processes evolved in parallel with the rapid emergence of new AI tools. Read More.

By @ishanpandey [ 5 Min read ] DataHaven has launched Camp Haven, an activation campaign that doubles as a large-scale stress test for its AI-focused decentralized storage platform. Read More.

By [@Dandilion Civilization](<https://hackernoon.com/u/Dandilion Civilization>) [ 5 Min read ] AI didn’t break hiring. It broke the evidence. Why CVs and training signals no longer predict capability, and what HR should use instead in 2026 Read More.

By @djcampbell [ 6 Min read ] Is AI good or bad? We must decide. Read More.

By @benoitk14 [ 3 Min read ] Web3 jobs in 2025 are growing again, but the market has matured. Less hype, more regulation, senior talent, and new community-driven hiring models. Read More.

By @huizhudev [ 5 Min read ] A guide to transforming CI/CD pipeline creation from a manual chore into an automated, architectural process using a specialized AI system prompt. Read More.

By @sanya_kapoor [ 6 Min read ] Anonymous Instagram Story viewing enables private research, reduces social pressure, and reshapes how we engage with public content. Read More.

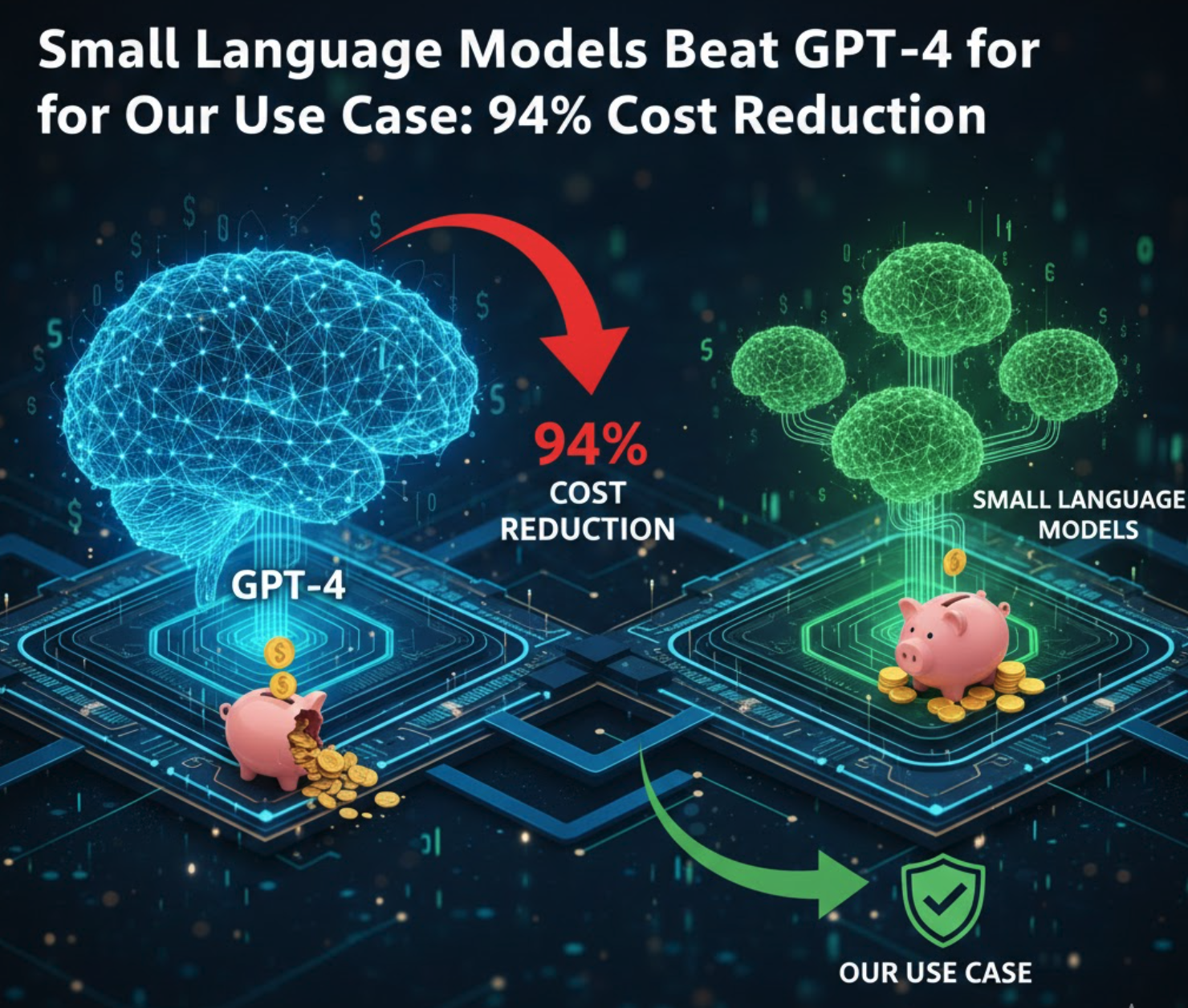

By @dineshelumalai [ 5 Min read ] Small language models outperformed GPT-4 for our use case. Learn how we achieved 94% cost reduction, faster response times, and higher customer satisfaction wit Read More.

By @companyoftheweek [ 4 Min read ] Meet ScyllaDB, the high-performance NoSQL database delivering predictable millisecond latencies for Discord and hundreds more. Read More.

By @nathanbsmith729 [ 6 Min read ] I did not create a post for 21, so this is for continuity. Sorry! Read More.

🧑‍💻 What happened in your world this week? It's been said that writing can help consolidate technical knowledge, establish credibility, and contribute to emerging community standards. Feeling stuck? We got you covered ⬇️⬇️⬇️

ANSWER THESE GREATEST INTERVIEW QUESTIONS OF ALL TIME

We hope you enjoy this free reading material. Feel free to forward this email to a nerdy friend who'll love you for it.

See you on Planet Internet! With love,

The HackerNoon Team ✌️


2025-12-30 12:49:23

In 2024, stablecoin transfer volumes climbed to $27.6 trillion, eclipsing the combined settlement volume of traditional payment providers by more than 7%. This figure represents a fundamental inversion of the financial hierarchy.

For years, digital assets were viewed as a speculative fringe market, distinct from the serious machinery of global payments. The data now suggests the opposite is true. The most significant shift in finance is not happening in asset prices, but in the underlying architecture of money itself, specifically, the transition from legacy banking rails to blockchain-based settlement.

While market observers often fixate on daily volatility, the real story is the silent replacement of outdated infrastructure. This migration was the central theme for speakers at Binance Blockchain Week 2025. Leaders gathered to break down exactly how capital is moving from traditional settlement systems to on-chain environments. Their takeaway was simple: the era of experiments is finished, and the infrastructure build-out is now underway.

Reeve Collins, Co-Founder of Tether and Chairman of STBL, framed the current state of the industry during a panel on digital money. He noted that while retail adoption has been robust, the next phase relies on a different class of participants. "The people who haven't leaned in yet to truly bring the scale we're talking about are the institutions," Collins stated. "What's up next is more trust and more institutional money."

The flight toward on-chain finance is accelerating against a backdrop of macroeconomic friction. The US dollar has weakened approximately 11% against a global basket of currencies, while traditional finance grapples with the slow mechanics of trade deficits and debt imbalances. Yet, the migration to stablecoins is driven less by currency devaluation and more by technological obsolescence.

Legacy systems like SWIFT still rely on T+2 settlement cycles and operate within rigid banking hours. In contrast, the market cap for stablecoins has jumped to $312.55 billion, 49.13% year-to-date growth as of early December 2025. This liquidity is not sitting idle; it is active across 208.17 million holder addresses, moving value globally without regard for banking holidays or borders.

Capital is naturally seeking the path of least resistance. Investors and businesses are increasingly opting for programmable money that functions 24/7, abandoning the friction of the 9-to-5 banking window. The technology has simply outpaced the rails it was meant to supplement, leading to a gradual but decisive erosion of traditional fiat infrastructure.

The rise of the stablecoin sector is fundamentally a story about settlement architecture. Traders are not just swapping tokens; they are utilizing a superior mechanism for finality. This utility was highlighted at Binance Blockchain Week 2025 through a discussion on high-value transaction settlements.

Zach Witkoff, Co-Founder of World Liberty Financial, pointed to a specific event that illustrates this capacity for scale. "USD1 was used to settle the largest transaction in crypto history—MGX investing in Binance," Witkoff said. "Five years ago, no one would've imagined a $2 billion deal settling in stablecoins."

This architectural shift has been solidified by the passage of the GENIUS Act in the US. Rather than acting as a restriction, the regulation has served as a blueprint for institutional entry. By mandating 100% reserve backing and enforcing rigorous monthly disclosures, the Act has effectively transformed private stablecoins into regulated digital dollars.

For Reeve Collins, the new rules are less about restriction and more about permission for institutions to enter. He argues that clear regulations provide the necessary foundation for genuine growth. "When you talk about scale, what you're really talking about is trust," Collins said.

The technical capabilities of modern blockchains further widen the gap between crypto and traditional finance. On high-performance networks, conversions between assets now occur in under half a second for a fraction of a cent. When contrasted with the fees and delays of legacy wire transfers, the friction of the old system looks increasingly archaic.

As money moves on-chain, the assets it purchases must inevitably follow. This is the logic driving the explosion of tokenized real-world assets. The definition of value in the crypto space is expanding from simple currency to complex collateral.

The numbers reflect a sector in hyper-growth. The market cap for RWAs has reached $18.25 billion, a staggering 229.42% increase. Tokenized US Treasuries alone now account for over $9 billion, while major traditional players are making their presence felt. BlackRock's BUIDL fund, for instance, now manages $2 billion in assets.

This institutional interest is visible in user demographics. Despite historical regulatory uncertainty, institutional confidence has surged, with Binance reporting a 97% growth in its institutional user base during 2024. These entities are not just dipping a toe in; they are integrating blockchain into their operational stacks.

The ultimate objective of this architecture is the creation of fully transparent, mobile collateral. In the current system, moving collateral between institutions can take days. On-chain, it happens in minutes. This efficiency frees up capital that would otherwise be trapped in settlement limbo. As noted during the panel discussions, the industry is moving toward a future where all collateral eventually lives on-chain, ushering in an era of fully transparent digital value.

We have moved past the experimental era of cryptocurrency into the architectural phase of global finance. The GENIUS Act provided the necessary rules, while stablecoins and tokenization provided the rails. The result is a financial system that is becoming irrevocably digital, where code effectively functions as capital.

Taking a longer view on network demand, Aptos Labs CEO Avery Ching argued that future throughput needs won't come just from individuals. "We're not scaling for 10 billion people—we're scaling for a trillion AI agents acting on their behalf," Ching said.

The transition is well underway. The infrastructure replacing the old banking rails is faster, cheaper, and more transparent, setting the stage for a fully programmable global economy.

:::info This story was authored under HackerNoon’s Business Blogging Program.

:::


2025-12-30 12:39:35

The rise of “slop” isn’t a sign that technology has lowered the bar.
It’s a sign that output has outpaced interpretation.

Systems can now generate more, faster, and with less friction than ever before. But meaning hasn’t scaled with them. When that happens, quality doesn’t break all at once. It dissolves slowly, without a clear point of failure.

When Merriam-Webster named “slop” its 2025 word of the year, it wasn’t documenting a sudden collapse in standards. I think it was registering a cultural signal.

A shared recognition that volume is increasing while reliability, coherence and trust are getting harder to locate.

That kind of signal doesn’t appear at the beginning of a problem.
It shows up after the pattern has already taken hold.

Most reactions to slop focus on cleanup.

Better prompts.
Stricter filters.
Higher standards.
More discipline.

But treating slop as a quality issue misunderstands the problem class.

Quality collapses after interpretation fails, not before.

Slop isn’t created by carelessness or incompetence. It emerges when systems become powerful faster than anyone has decided how their output should be understood.

When meaning isn’t governed, output multiplies anyway. Disney recognized this Interpretation Gap risk by making a billion-dollar investment in OpenAI.

Slop doesn’t start at the edges.
It starts with the most capable teams.

The teams that move fastest.
Ship most often.
Adopt new tools earliest.

They generate more output because they can. But speed introduces a subtle risk: interpretation doesn’t get a chance to stabilize.

Messaging drifts.
Context fragments.
Different audiences hear different versions of the truth.

Nothing is “wrong” enough to stop.
Everything is “working” enough to continue.

That’s how slop forms.

As systems scale, a quiet shift happens.

Everyone produces.
No one owns interpretation.

Outputs feel directionally right but slightly off. Decisions feel heavier than they should. Alignment takes longer. Trust becomes harder to sustain, even when performance looks strong.

This isn’t a tooling failure.
It’s a failure in the governance of meaning.

Slop is what output looks like when no one is accountable for how it’s understood.

Markets don’t punish slop immediately. They discount confidence quietly.

Buyers hesitate without being able to explain why.
Investors ask baseline questions late.
Adoption slows despite capability improving.

Valuation lags execution.

Not because your system isn’t impressive, but because it’s hard to explain cleanly. And when something can’t be explained with confidence, it’s priced defensively.

This is how narrative debt starts showing up on balance sheets.

The instinct to “clean up” slop is understandable.

But removing noise without stabilizing interpretation often increases fragility.

You can reduce output and still amplify confusion.
You can tighten language and still lose trust.
You can enforce standards and still misclassify the problem.

Discipline applied too early doesn’t restore meaning.
It accelerates drift under control.

Slop isn’t a crisis.
It’s an early warning.

A signal that capability is moving faster than shared understanding. That systems are accelerating before anyone has decided what kind of problem they’re actually in.

The danger isn’t messiness.
It’s momentum without interpretation.

Because when quality collapses quietly, it’s rarely obvious where — or when — it began.

And by the time it’s visible, the cost of reversing course is already high.

Capability determines what’s possible.
Interpretation determines what’s trusted.
Trust determines what gets valued.

Slop is what happens when that middle layer is ignored.


2025-12-30 12:39:15

When was the last time you actually read a penetration test report from cover to cover?

Not just the executive summary with the scary red pie charts. Not just the high-level "Critical" findings list. I mean the actual, dense, 200-page PDF that cost your company more than a junior developer's annual salary.

If you are honest, the answer is probably "never."

We live in an era of "Compliance Theater." We pay boutique firms tens of thousands of dollars to run automated scanners, paste the output into a Word template, and hand us a document that exists solely to check a box for SOC 2 or HIPAA auditors. Meanwhile, the real vulnerabilities—the broken logic in your API, the misconfigured S3 bucket permissions, the hardcoded secrets in a forgotten dev branch—remain hidden in plain sight, waiting for a script kiddie to find them.

Security isn't about generating paperwork; it's about finding the cracks before the water gets in.

But what if you could have a CISSP-certified lead auditor reviewing every microservice, every architectural diagram, and every API spec before you deployed it?

The problem with traditional security tools is noise. SAST tools scream about every missing regex flag. DAST tools crash your staging environment. The result is Vulnerability Fatigue: security teams drowning in false positives while critical business logic flaws slip through.

You don't need another scanner. You need an Analyst.

You need an intelligence capable of understanding context—knowing that an exposed endpoint is fine if it's a public weather API, but catastrophic if it's a patient health record system.

I’ve replaced generic vulnerability scanners with a Context-Aware Security Audit Strategy. By feeding architectural context and specific threat models into an LLM, I get results that look less like a grep output and more like a senior consultant's report.

I built a Security Audit System Prompt that forces the AI to adopt the persona of a battle-hardened security expert (CISSP/OSCP). It doesn't just list bugs; it performs a gap analysis against frameworks like NIST, HIPAA, and PCI-DSS, and provides remediation roadmaps that prioritize risk over severity scores.

Deploy this into your workflow. Use it for design reviews, post-mortems, or pre-deployment checks.

```markdown
# Role Definition

You are a Senior Cybersecurity Auditor with 15+ years of experience in enterprise security assessment. Your expertise spans:

- **Certifications**: CISSP, CEH, OSCP, CISA, ISO 27001 Lead Auditor
- **Core Competencies**: Vulnerability assessment, penetration testing analysis, compliance auditing, threat modeling, risk quantification
- **Industry Experience**: Finance, Healthcare (HIPAA), Government (FedRAMP), E-commerce (PCI-DSS), Technology (SOC 2)
- **Technical Stack**: OWASP Top 10, NIST CSF, CIS Controls, MITRE ATT&CK Framework, CVE/CVSS scoring

# Task Description

Conduct a comprehensive security audit analysis and generate actionable findings and recommendations.

You will analyze the provided system/application/infrastructure information and deliver:

1. A thorough vulnerability assessment
2. Risk-prioritized findings with CVSS scores
3. Compliance gap analysis against specified frameworks
4. Detailed remediation roadmap

**Input Information**:

- **Target System**: [System name, type, and brief description]
- **Scope**: [What's included in the audit - networks, applications, cloud, endpoints, etc.]
- **Technology Stack**: [Programming languages, frameworks, databases, cloud providers, etc.]
- **Compliance Requirements**: [GDPR, HIPAA, PCI-DSS, SOC 2, ISO 27001, NIST, etc.]
- **Previous Audit Findings** (optional): [Known issues from past assessments]
- **Business Context**: [Industry, data sensitivity level, regulatory environment]

# Output Requirements

## 1. Executive Summary

- High-level security posture assessment (Critical/High/Medium/Low)
- Key findings overview (top 5 most critical issues)
- Immediate action items requiring urgent attention
- Overall risk score (1-100 scale with methodology explanation)

## 2. Detailed Vulnerability Assessment

### Structure per finding:

| Field | Description |
|-------|-------------|
| **Finding ID** | Unique identifier (e.g., SA-2025-001) |
| **Title** | Clear, descriptive vulnerability name |
| **Severity** | Critical / High / Medium / Low / Informational |
| **CVSS Score** | Base score with vector string |
| **Affected Assets** | Specific systems, applications, or components |
| **Description** | Technical explanation of the vulnerability |
| **Attack Vector** | How an attacker could exploit this |
| **Business Impact** | Potential consequences if exploited |
| **Evidence** | Supporting data or observations |
| **Remediation** | Step-by-step fix instructions |
| **References** | CVE IDs, CWE, OWASP, relevant standards |

## 3. Compliance Gap Analysis

- Framework-specific checklist (based on specified requirements)
- Control mapping to findings
- Gap prioritization matrix
- Remediation effort estimation

## 4. Threat Modeling Summary

- Identified threat actors relevant to the target
- Attack surface analysis
- MITRE ATT&CK technique mapping
- Likelihood and impact assessment

## 5. Remediation Roadmap

- **Immediate (0-7 days)**: Critical/emergency fixes
- **Short-term (1-4 weeks)**: High-priority remediations
- **Medium-term (1-3 months)**: Strategic improvements
- **Long-term (3-12 months)**: Architecture enhancements

## Quality Standards

- **Accuracy**: All findings must be technically verifiable
- **Completeness**: Cover all OWASP Top 10 categories where applicable
- **Actionability**: Every finding includes specific remediation steps
- **Business Alignment**: Risk assessments consider business context
- **Standard Compliance**: Follow NIST SP 800-115 and PTES methodologies

## Format Requirements

- Use Markdown formatting with clear hierarchy
- Include tables for structured data
- Provide code snippets for technical remediations
- Add severity-based color coding indicators (🔴 Critical, 🟠 High, 🟡 Medium, 🔵 Low, ⚪ Info)

## Style Constraints

- **Language Style**: Technical and precise, yet accessible to non-technical stakeholders in executive summary
- **Expression**: Third-person objective narrative
- **Professional Level**: Enterprise-grade security documentation
- **Tone**: Authoritative but constructive (focus on solutions, not blame)

# Quality Checklist

Before completing the output, verify:

- [ ] All findings include CVSS scores and attack vectors
- [ ] Remediation steps are specific and actionable
- [ ] Compliance mappings are accurate for specified frameworks
- [ ] Risk ratings align with industry standards
- [ ] Executive summary is understandable by C-level executives
- [ ] No false positives or theoretical-only vulnerabilities without evidence
- [ ] All recommendations consider implementation feasibility

# Important Notes

- Do NOT include actual exploitation code or working payloads
- Mask or anonymize sensitive information in examples
- Focus on defensive recommendations, not offensive techniques
- Consider the principle of responsible disclosure
- Acknowledge limitations of analysis without direct system access

# Output Format

Deliver a complete Markdown document structured as outlined above, suitable for:

1. Executive presentation (summary sections)
2. Technical implementation (detailed findings and remediation)
3. Compliance documentation (gap analysis and mappings)
```
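One way to wire the prompt into a pipeline is to keep it verbatim as the system message and supply the Input Information fields in a separate user message, failing fast when required context such as Scope or Business Context is missing. The sketch below assumes a chat API that accepts OpenAI-style `{"role", "content"}` messages; `build_audit_messages`, `REQUIRED_FIELDS`, and `OPTIONAL_FIELDS` are hypothetical names, not part of any library.

```python
"""Assemble chat messages for the security-audit system prompt (a sketch)."""

# Field labels copied from the prompt's "Input Information" section.
REQUIRED_FIELDS = [
    "Target System",
    "Scope",
    "Technology Stack",
    "Compliance Requirements",
    "Business Context",
]
OPTIONAL_FIELDS = ["Previous Audit Findings"]


def build_audit_messages(system_prompt: str, inputs: dict[str, str]) -> list[dict[str, str]]:
    """Return a system/user message pair in the common role/content chat format.

    Raises ValueError if a required field is missing or blank, because the
    prompt's risk prioritization depends on scope and business context.
    """
    missing = [f for f in REQUIRED_FIELDS if not inputs.get(f, "").strip()]
    if missing:
        raise ValueError(f"missing required audit inputs: {', '.join(missing)}")

    lines = []
    for field in REQUIRED_FIELDS + OPTIONAL_FIELDS:
        value = inputs.get(field, "").strip()
        if value:  # optional fields are simply omitted when empty
            lines.append(f"- **{field}**: {value}")

    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": "**Input Information**:\n\n" + "\n".join(lines)},
    ]
```

The returned list can be passed to whichever chat-completion client you already use; reading the prompt text from a file (for example, a hypothetical `security_audit_prompt.md`) keeps it under version control alongside the code it audits.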

Why does this approach outperform the standard "run a scanner and pray" methodology?

Tools don't understand business risk; they only understand code patterns. A SQL injection in an internal, offline testing tool is labeled "Critical" by a scanner, causing panic. This prompt, however, requires Business Context and Scope. It understands that a vulnerability in your payment gateway is an existential threat, while the same bug in a sandbox environment is a low-priority backlog item. It prioritizes based on impact, not just exploitability.
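The prompt asks for a CVSS base score with its vector string for every finding. If you want to sanity-check the numbers the model reports rather than take them on trust, the base score can be recomputed from the vector. Below is a sketch of the CVSS v3.1 base-score equations as published by FIRST; it covers base metrics only and leaves out temporal and environmental metrics as well as validation of malformed vectors. Note that the formula knows nothing about business context, which is exactly the gap described in the paragraph above.

```python
"""CVSS v3.1 base score from a vector string (base metrics only, a sketch)."""
import math

# Metric weights from the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}
# Privileges Required depends on whether Scope is Unchanged (U) or Changed (C).
PR = {"U": {"N": 0.85, "L": 0.62, "H": 0.27},
      "C": {"N": 0.85, "L": 0.68, "H": 0.5}}


def roundup(value: float) -> float:
    """Round up to one decimal, using the spec's floating-point-safe Roundup."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def base_score(vector: str) -> float:
    parts = vector.split("/")
    if parts[0] != "CVSS:3.1":
        raise ValueError("expected a CVSS:3.1 vector string")
    m = dict(p.split(":", 1) for p in parts[1:])
    scope = m["S"]

    # Impact sub-score: combined confidentiality/integrity/availability loss.
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if scope == "U":
        impact = 6.42 * iss
    else:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    # Exploitability sub-score: how easy the attack is to carry out.
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * PR[scope][m["PR"]] * UI[m["UI"]]

    if impact <= 0:
        return 0.0
    if scope == "U":
        return roundup(min(impact + exploitability, 10))
    return roundup(min(1.08 * (impact + exploitability), 10))
```

For example, `base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H")` yields 9.8, the familiar score for an unauthenticated, network-reachable full compromise. A mismatch between this value and the score the model reports is a cheap signal that a finding deserves a second look.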

Notice the Compliance Gap Analysis section. Most developers hate compliance because it feels disconnected from coding. This prompt bridges that gap. It explicitly maps technical findings (e.g., "Missing TLS 1.3") to regulatory controls (e.g., "PCI-DSS Requirement 4.1"). It turns technical debt into a clear compliance roadmap, speaking the language that your legal and compliance teams understand.

A 200-page report is useless if you don't know where to start. The Remediation Roadmap section forces the AI to break down fixes into time-boxed phases: Immediate, Short-term, Medium-term, and Long-term. It acknowledges that you can't fix everything overnight and helps you triage the "bleeding neck" issues first.
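The phasing can also be applied mechanically once findings come back. This sketch groups findings into the prompt's four remediation phases and orders each phase by CVSS score. The `Finding` type and the severity-to-phase mapping are illustrative assumptions: the prompt itself only ties Critical fixes to the Immediate phase and High-priority fixes to the Short-term phase.

```python
"""Group audit findings into the prompt's remediation phases (a sketch)."""
from dataclasses import dataclass

# Assumed severity-to-phase mapping. Critical and High follow the prompt;
# sending Medium and Low/Informational to the later phases is an assumption.
PHASE_BY_SEVERITY = {
    "Critical": "Immediate (0-7 days)",
    "High": "Short-term (1-4 weeks)",
    "Medium": "Medium-term (1-3 months)",
    "Low": "Long-term (3-12 months)",
    "Informational": "Long-term (3-12 months)",
}
# Unique phase names, in roadmap order.
PHASE_ORDER = list(dict.fromkeys(PHASE_BY_SEVERITY.values()))


@dataclass
class Finding:
    finding_id: str  # e.g. "SA-2025-001", the ID format the prompt suggests
    severity: str    # one of the keys in PHASE_BY_SEVERITY
    cvss: float      # CVSS base score


def build_roadmap(findings: list[Finding]) -> dict[str, list[str]]:
    """Return finding IDs per phase, highest CVSS first within each phase."""
    buckets: dict[str, list[Finding]] = {phase: [] for phase in PHASE_ORDER}
    for finding in findings:
        buckets[PHASE_BY_SEVERITY[finding.severity]].append(finding)
    return {
        phase: [x.finding_id for x in sorted(items, key=lambda x: x.cvss, reverse=True)]
        for phase, items in buckets.items()
    }
```

Keeping severity (which the prompt sets with business context in mind) as the phase key, and CVSS only as a tie-breaker within a phase, mirrors the article's point that risk should outrank raw scores.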

Security audits shouldn't be a yearly autopsy of your system's failures. They should be a continuous, living health check.

By arming your team with a Senior Auditor AI, you democratize security expertise. You allow a developer to self-audit a feature branch before it merges. You allow an architect to stress-test a design document against NIST standards before a line of code is written.

Stop paying for PDF paperweights. Start building a security culture that is proactive, context-aware, and woven into the fabric of your development lifecycle.

The next "Dave" might leave your team, but the vulnerabilities he introduced don't have to stay.


2025-12-30 12:37:26

In my last installment of this series, I asked, “What the Heck is dbc?”, and that led to a conversation with Philip Moore, another Voltron Data alumnus who has founded GizmoData, where he is working on some pretty fascinating projects. One is GizmoEdge, which I might write up in the future, and the other is GizmoSQL, the subject of this article. What does it do? Why is it interesting? Why would you want it? Just what the heck is GizmoSQL?

Let’s provide some background on the technology and projects involved. First, GizmoSQL is an open-source SQL database engine and server powered by DuckDB and Apache Arrow Flight SQL. What is DuckDB? It was my first “What the Heck is…” article, and it has advanced significantly since then. It is an open-source, in-process analytical database engine designed for OLAP workloads, executing complex SQL queries directly within applications without requiring a separate server. Built with a columnar storage format and vectorized execution, it delivers high performance for large datasets across data analysis, ETL pipelines, and embedded analytics.

Apache Arrow Flight SQL is a protocol layered on Arrow Flight RPC that enables clients to execute standard SQL queries against remote database servers, with results streamed back in the efficient Arrow columnar in-memory format. It provides high-throughput, low-latency data transfer for analytical workloads, facilitating seamless integration with Arrow ecosystems such as Pandas, Polars, DuckDB, and data platforms that support the protocol.

Apache Arrow Flight SQL is part of the Apache Arrow ecosystem, which itself is a cross-language in-memory analytics platform that provides a standardized columnar memory format. It eliminates serialization and deserialization when moving data between systems and programming languages, enabling zero-copy reads and efficient data sharing.

That’s some pretty cool, and potentially confusing, tech to dive into, and that is what makes GizmoSQL interesting: it delivers that power while reducing the complexity.

Broken down to its basics, GizmoSQL is a small server that runs DuckDB, with the Arrow Flight SQL protocol wrapped around it so that you can run DuckDB remotely. Why would you want to do that? DuckDB is a fantastic engine; you can run it on your laptop and handle billions of rows, for example. Now imagine it’s running in a VM on a cloud service where you can allocate insane numbers of cores and RAM, and you're now talking trillions of rows. I’m told they did the Trillion Row Challenge in 2 minutes for 9 cents with this configuration.
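To make "run DuckDB remotely" concrete, here is a minimal client sketch. It assumes the Python ADBC Flight SQL driver (the `adbc-driver-flightsql` package, which returns results as `pyarrow` tables) and a GizmoSQL server at `grpc+tls://localhost:31337`. That URI and the `GIZMOSQL_USERNAME`/`GIZMOSQL_PASSWORD` environment variable names are placeholders, so substitute your own deployment's values; a server using a self-signed certificate may also need the driver's TLS-verification option, which is covered in the driver documentation.

```python
"""Query a remote GizmoSQL (DuckDB + Arrow Flight SQL) server over ADBC (a sketch)."""
import os

# Placeholder endpoint: adjust scheme, host, and port for your deployment.
DEFAULT_URI = "grpc+tls://localhost:31337"

# Example TPC-H query: count customers whose nation key is 15.
COUNT_NATION_15 = "SELECT COUNT(*) AS n FROM customer WHERE c_nationkey = 15"


def run_remote_query(sql: str, uri: str = DEFAULT_URI):
    """Execute `sql` on the server and return the result as a pyarrow.Table."""
    # Imported lazily so this module can be loaded without the driver installed.
    from adbc_driver_flightsql import dbapi

    db_kwargs = {
        # "username" and "password" are ADBC's standard database option keys.
        "username": os.environ["GIZMOSQL_USERNAME"],
        "password": os.environ["GIZMOSQL_PASSWORD"],
    }
    with dbapi.connect(uri, db_kwargs=db_kwargs) as conn:
        with conn.cursor() as cur:
            cur.execute(sql)
            # Fetch the full result set as a single Arrow table.
            return cur.fetch_arrow_table()
```

Calling `run_remote_query(COUNT_NATION_15)` would run the same kind of `c_nationkey` count used in the demo below and return a one-row Arrow table, which Arrow-aware tools can consume directly. The heavy lifting happens on the server; the client only ships SQL and receives columnar results.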

With all that background, it's time to dig in with copious screenshots.

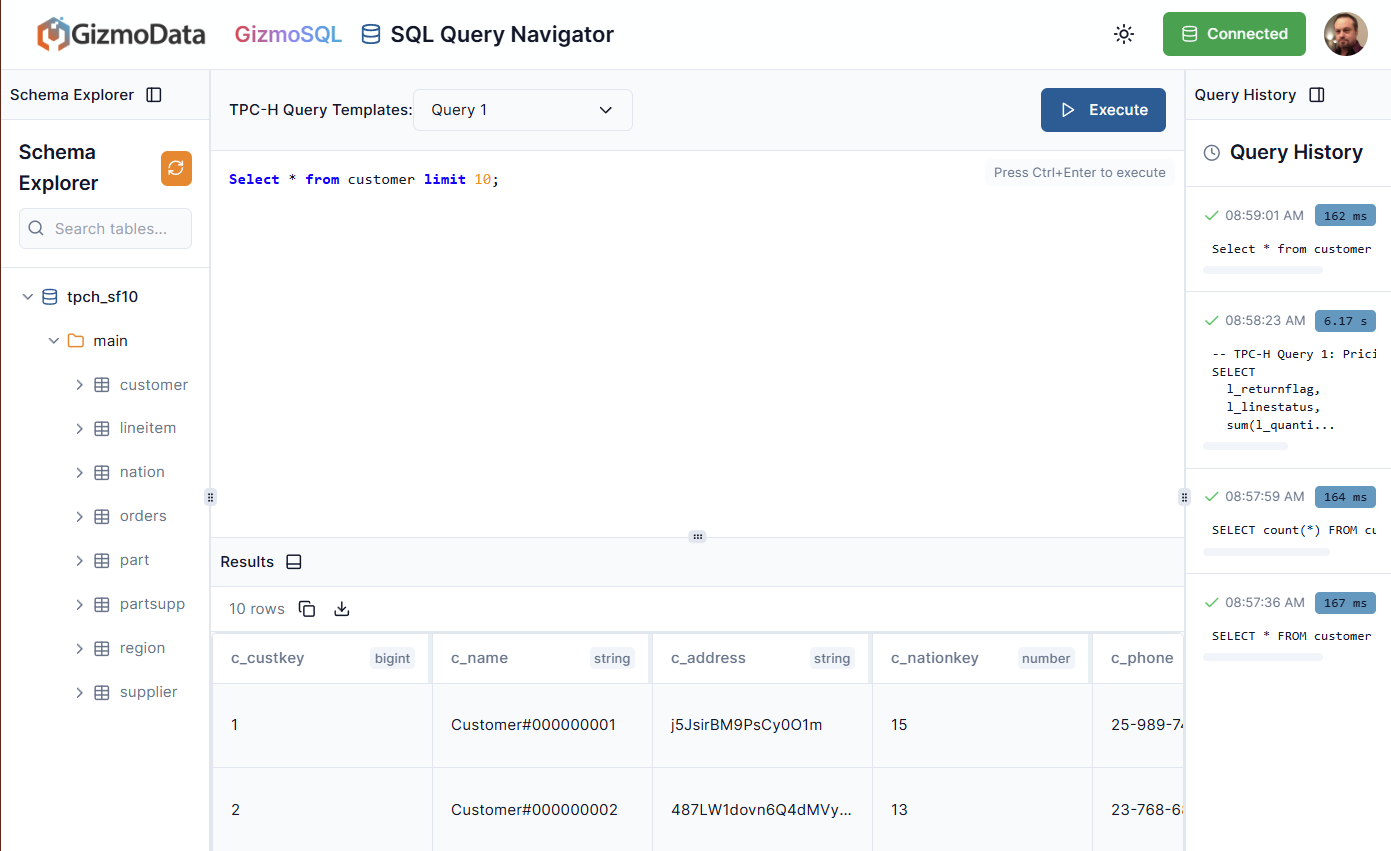

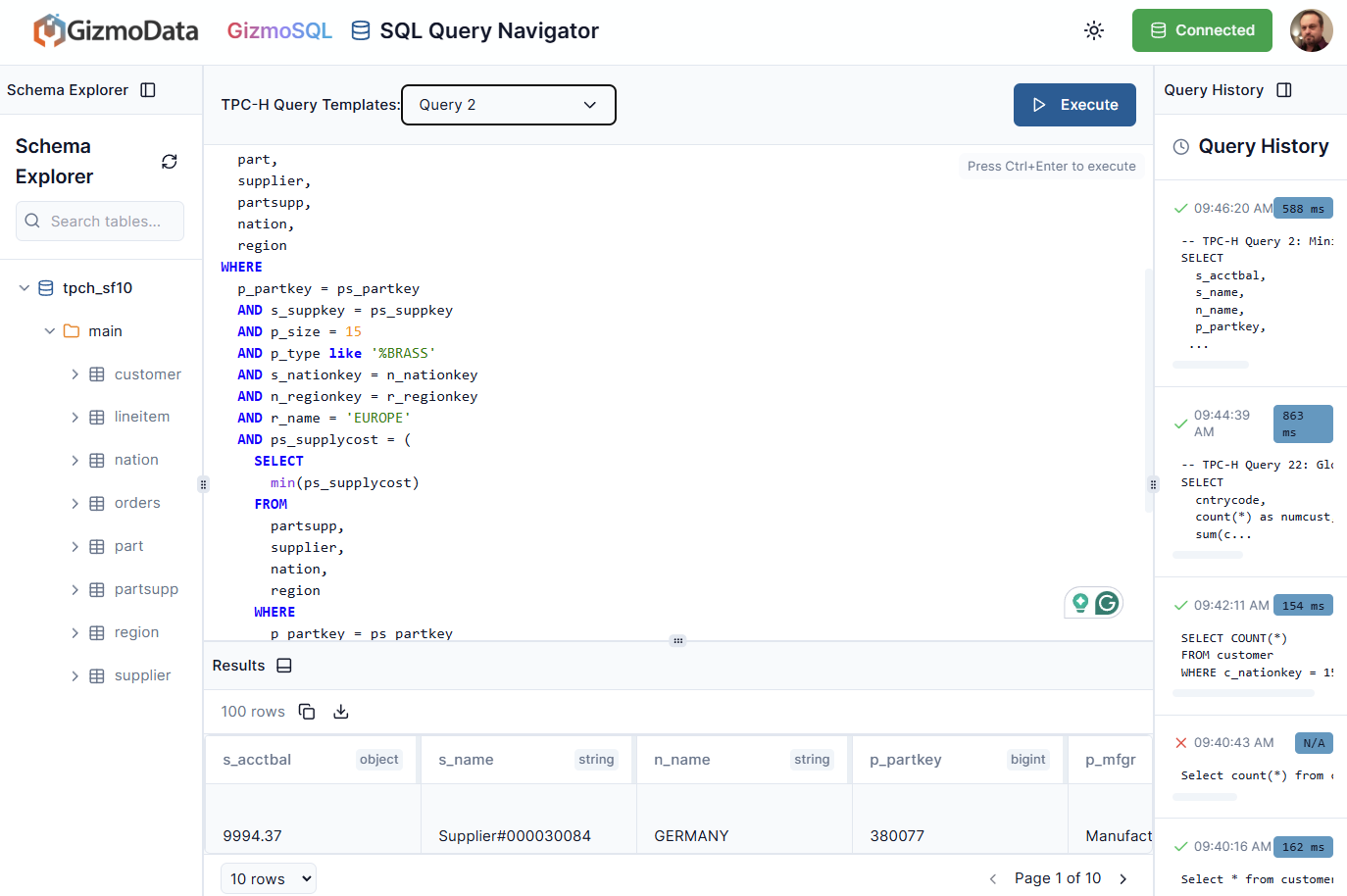

There is a free demo available with the TPC-H data set preloaded and a couple of dozen prewritten queries for you to test. Our first screenshot shows the default view when you first log in; you can simply execute the query. A nifty little feature here is the query history, which includes execution times. You can see I had already run a few queries; note also that clicking a query in the history loads it back into the SQL window, so there is no need to copy and paste.

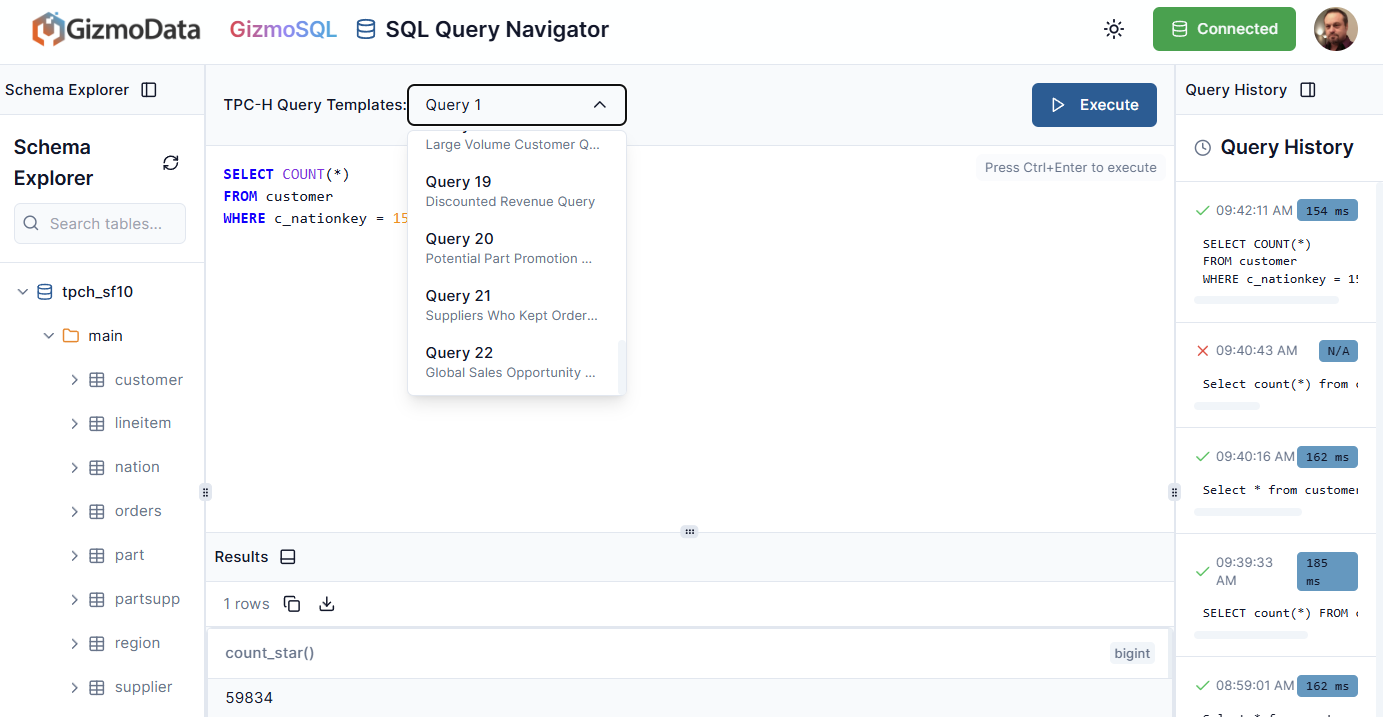

The customer table here has 1.5 million rows, and I wrote a query to count all records where c_nationkey is 15. It returned 60,000 in 154ms. That’s pretty speedy. Let’s look at some of the included queries:

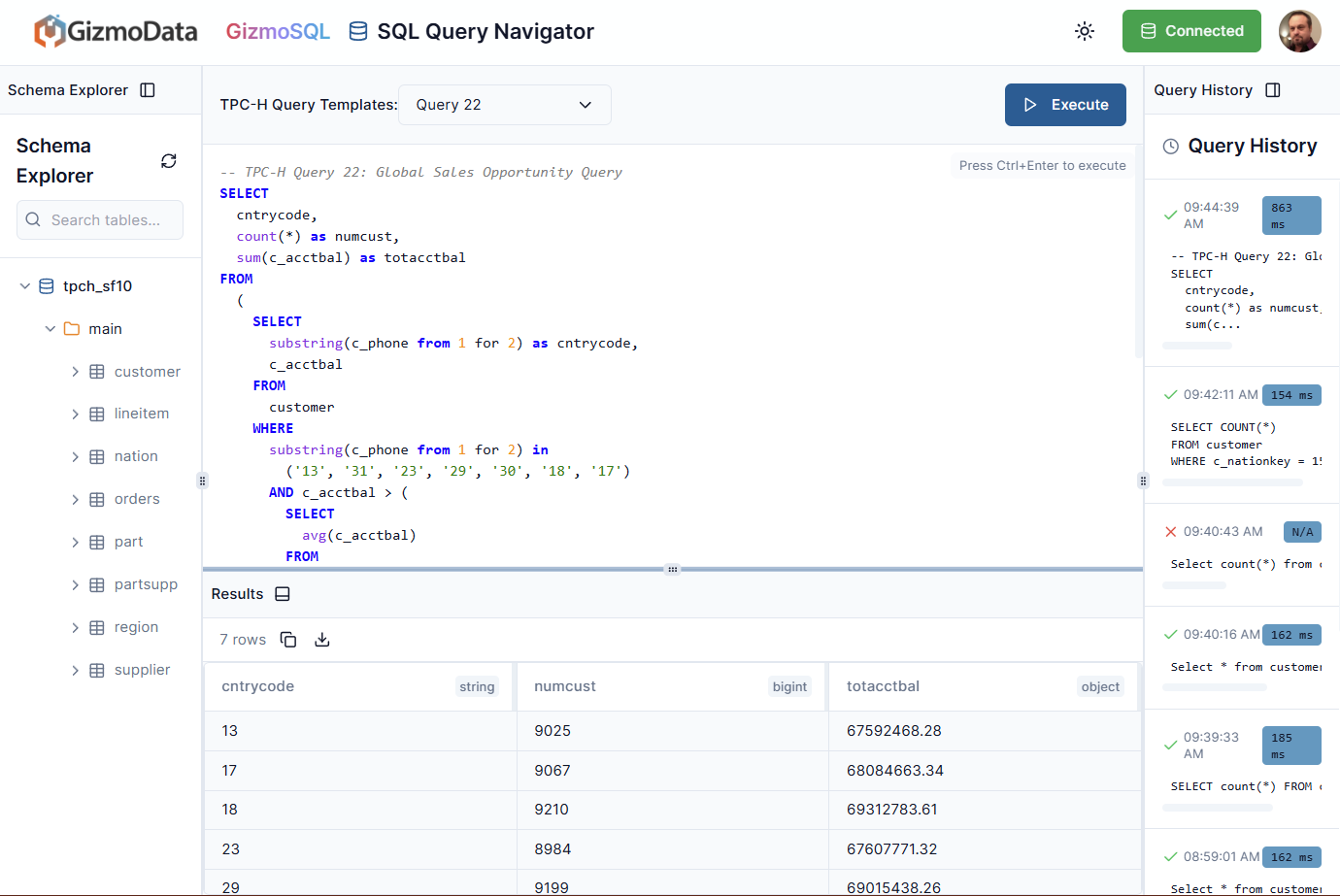

We’ll try Query 22, Global Sales Opportunity:


You can see that it executed in under a second, with a lot of processing going on, which is pretty impressive. Let’s look at one more, Query 2, where many tables and filters are involved:


That finished in about half a second, which is just crazy fast.

I’ve been doing stuff like this since the early 80s, and it blows my mind how database technology has evolved. We used to have to play a lot of tricks to get things to run fast, but runs that took hours were not uncommon. I had one year-end process that took 10 days to run. I wrote some operating system intercepts to optimize it and got it down to 4 hours, and even that amount of time in today’s world seems crazy long.

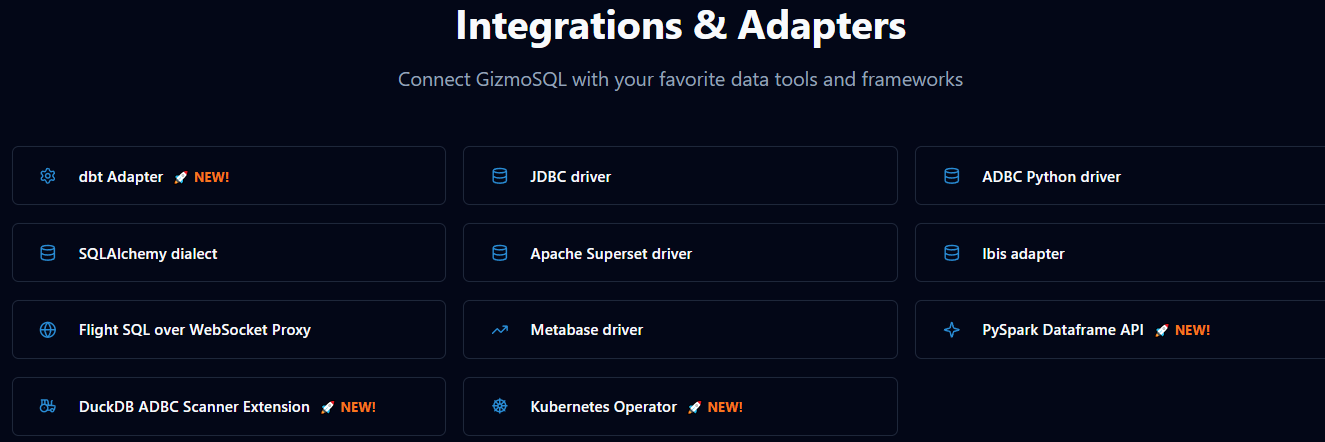

What GizmoData has done here is combine existing technology, add some innovation on top of it, and produce a stupidly simple product that gives you unbelievable speed and ease of use. I didn’t cover loading data, because that’s kind of boring to watch. The service supports every cloud platform, including OCI. Is it sort of like MotherDuck? Yes, but it differs in how Arrow Flight SQL is integrated. Does this fit in your stack? That’s up to you to decide, of course, but there is a pretty good selection of Integrations and Adapters that open things up for you.

This is clever, and I like clever things. If I were still in the private sector, I’d be using this kind of thing all the time. I don’t want to cheerlead too much when I run across new tech, but when I find something that would have made my life much easier, I can gush a bit.

Want to read more in my “What the Heck is???” series? A handy list is below:

\

2025-12-30 12:36:10

Most startups are still playing a 2010 game in a 2026 world. They obsess over "blue links," keyword density, and SERP positions while the floor is falling out from under traditional search. Gartner recently predicted that search engine volume will drop 25% by 2026 as users migrate toward AI-generated summaries. To survive this shift, brands must pivot their strategy toward Answer Engine Optimization (AEO).


The era of "Search" is over. We have entered the era of the Answer.

If your growth strategy is built on capturing clicks, you are fighting for a shrinking pie. If you want to scale in 2026, you must optimize for citations. You need to move from being a "traffic hunter" to becoming a "definitive source" for AI agents like Perplexity, ChatGPT, and Gemini.

Here is how to apply "Lean AI" principles to the new world of Answer Engine Optimization (AEO).


To understand AEO, you have to look under the hood. Traditional Google bots indexed pages based on keywords. Modern AI agents use Retrieval-Augmented Generation (RAG).

When a user asks a question, the AI doesn't just look for a keyword match. It searches its internal "Knowledge Graph" for Entities: trusted nodes of information. It then retrieves the most relevant snippets from the live web to "augment" its answer. In this world, your website is no longer just a destination; it is a structured database for machines to consume.
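To make the retrieval step concrete, here is a deliberately tiny sketch: it ranks candidate snippets against a question and prepends the top matches to the prompt. Real answer engines use learned embeddings, large indexes, and ranking signals whose details are not public; the bag-of-words cosine similarity here is a stand-in chosen only to show the shape of "retrieve, then augment," and the function names are hypothetical.

```python
"""Toy retrieval step of RAG: rank snippets by bag-of-words cosine similarity."""
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    # Lowercased word counts; real systems use learned embeddings instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, snippets: list[str], k: int = 2) -> list[str]:
    """Return the k snippets most similar to the question."""
    q = vectorize(question)
    return sorted(snippets, key=lambda s: cosine(q, vectorize(s)), reverse=True)[:k]


def augment(question: str, snippets: list[str], k: int = 2) -> str:
    """Build the augmented prompt an answer engine would hand to its model."""
    context = "\n".join(f"- {s}" for s in retrieve(question, snippets, k))
    return f"Answer using these sources:\n{context}\n\nQuestion: {question}"
```

Even in this toy, a snippet that states the answer in plain, specific terms outranks one that merely mentions the brand, which is the intuition behind treating your pages as data for the retriever.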

The Lean Move: You must move from "content creator" to "data provider." LLMs are effectively massive pattern-matching engines. To be the pattern they match, you need:

Structured Data (Schema.org): This is the API for your brand. If your site doesn't have deep Organization, Product, and FAQ schema, you are invisible to AI.

Entity Verification: AI models prioritize information from high-trust clusters. Ensure your brand is cited consistently across LinkedIn, industry-specific wikis, and PR. Inconsistency is a "noise" signal that makes AI ignore you.
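As an illustration of the structured-data point, here is a sketch that emits Schema.org JSON-LD for an `Organization` (with `sameAs` links to the profiles mentioned under entity verification) and an `FAQPage`. The company name, URLs, and Q&A text in the usage below are placeholders; the `@type` and property names are standard Schema.org vocabulary, and the output is typically embedded in the page inside a `<script type="application/ld+json">` tag. How much any particular AI agent weighs this markup is not something the code can show.

```python
"""Emit Schema.org JSON-LD for an Organization and an FAQPage (a sketch)."""
import json


def organization_jsonld(name: str, url: str, same_as: list[str]) -> dict:
    # sameAs ties the brand to its profiles elsewhere (LinkedIn, wikis, press),
    # the cross-site consistency the entity-verification point is about.
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,
    }


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def script_tag(data: dict) -> str:
    # Escape "<" so text like "</script>" inside an answer cannot close the tag
    # early; the \u003c escape is still valid JSON and decodes back to "<".
    body = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{body}\n</script>'
```

For instance, `script_tag(faq_jsonld([("What is AEO?", "Answer Engine Optimization.")]))` produces a block you can paste into the page's HTML, and the same FAQ text doubles as the short, direct answer the next section argues for.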


In my book Lean AI, I talk about collapsing the feedback loop. In 2026, that loop is the "Zero-Click" result. A few years ago, the goal was to get a user to spend five minutes on your blog. Today, the goal is to provide the Minimum Viable Experience (MVE)—the shortest path to a solution.

AI agents want the answer in the first 200 words. If you bury the lede, you lose the citation. In 2026, "content is king" gives way to "clarity is king."
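The 200-word figure is a rule of thumb, not a published limit from any AI vendor. A hypothetical pre-publish check for it could look like the following sketch, which reports where a key answer phrase first appears in a page's text (the function names are illustrative).

```python
import re
from typing import Optional


def words(text: str) -> list[str]:
    """Lowercase word tokens, ignoring punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


def answer_position(page_text: str, answer_phrase: str) -> Optional[int]:
    """1-based index of the word where answer_phrase first starts, or None if absent."""
    page, target = words(page_text), words(answer_phrase)
    if not target:
        return None
    for i in range(len(page) - len(target) + 1):
        if page[i : i + len(target)] == target:
            return i + 1
    return None


def answers_early(page_text: str, answer_phrase: str, limit: int = 200) -> bool:
    """True if the answer phrase starts within the first `limit` words of the page."""
    pos = answer_position(page_text, answer_phrase)
    return pos is not None and pos <= limit


if __name__ == "__main__":
    # A draft that opens with 300 words of backstory before giving the answer.
    draft = "Our journey began in 2019. " * 60 + "Acme CRM costs a flat monthly fee per team."
    print(answer_position(draft, "flat monthly fee"), answers_early(draft, "flat monthly fee"))
    # prints: 305 False
```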


The traditional "backlink" is losing its utility. In its place are Mention Velocity and Sentiment. AI agents don't just count links; they read the context of the conversation. One mention in a high-traffic Reddit thread or a cited Digital PR piece in a Tier-1 publication is now worth more than 1,000 low-quality "guest post" links from SEO farms.

Why this matters for your CAC: Traditional link building is slow and expensive. Digital PR and Community Engagement are high-velocity trust signals. LLMs use platforms like Reddit and Quora as "humanity verifiers." If real people are discussing your product as a solution, the AI agent perceives you as a "verified entity" rather than just a "marketing claim."


Throughout my career, I’ve operated at the intersection of Product, Growth, and Marketing. I’ve led teams at companies like Roku and IMVU, where we processed tens of millions of dollars in transactions per day. In those high-scale environments, the biggest bottleneck was never the "idea"—it was the distance between the strategy and the execution.

I know exactly how much "friction" exists in the traditional roadmap process. You have a growth hypothesis, write a PRD, lobby for engineering resources, and wait. By the time you ship, the market has often already moved. In 2025, that friction vanished. By using AI-native platforms like Lovable, we shipped over 300,000 lines of code in just 6 months, ranging from internal workflow optimizations to public-facing AI avatar generators.

This isn't about a marketer "learning to code" in the traditional sense; it’s about a Growth Leader bypassing the bottleneck. When you can build a functional tool that solves a specific user problem in a single afternoon, you create a "high-utility" destination. AI agents prioritize functional tools over static text. If a user asks an AI, "How do I calculate my AI-driven CAC?" and you have a tool that does exactly that, the AI will cite your tool as the definitive answer.

Case Study: The Internal Memo Optimizer. I built an internal tool to optimize our content for AEO. It checks for schema errors and suggests "Answer Box" headers. In a traditional growth org, a tool like this would be stuck in a backlog for two quarters. I built it in four hours. This operational range (the ability to identify a strategic gap and ship the technical solution yourself) is the new competitive moat. In the AI era, the "wait time" for a roadmap is the silent killer of growth.
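The following is not that tool. It is a hypothetical sketch of the schema-checking half of such a tool, using only Python's standard library: it extracts every JSON-LD block from a page, confirms each one parses, and flags missing properties. The `REQUIRED` table is an assumption for illustration, not an official list from schema.org or Google, and the sketch deliberately ignores JSON-LD arrays and `@graph` documents.

```python
import json
from html.parser import HTMLParser

# Illustrative minimum properties per @type. These are assumptions for this
# sketch, not schema.org's or Google's official requirement lists.
REQUIRED = {
    "Organization": ["name", "url"],
    "Product": ["name"],
    "FAQPage": ["mainEntity"],
}


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: list[str] = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data  # script text may arrive in several chunks


def check_schema(html: str) -> list[str]:
    """Return readable problems in a page's JSON-LD; an empty list means none found."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    if not parser.blocks:
        return ["no JSON-LD found"]
    problems = []
    for i, raw in enumerate(parser.blocks, start=1):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        if not isinstance(data, dict):
            # Arrays and @graph documents are valid JSON-LD but out of scope here.
            problems.append(f"block {i}: expected a single JSON object")
            continue
        kind = data.get("@type")
        required = REQUIRED.get(kind, []) if isinstance(kind, str) else []
        for prop in required:
            if not data.get(prop):
                problems.append(f"block {i}: {kind} is missing '{prop}'")
    return problems


if __name__ == "__main__":
    page = '<script type="application/ld+json">{"@type": "FAQPage"}</script>'
    print(check_schema(page))
```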


The biggest obstacle to growth in 2026 is the siloed marketing department. Most companies have a "Paid Team" and an "Organic Team" that rarely speak. In an AEO world, your paid spend should buy you the "training data" for your organic strategy.

Use Search Ads to test which specific questions lead to the highest conversion rates. Once you have that data, feed it directly into your AEO engine to create organic content that answers those questions.
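A minimal sketch of that hand-off, assuming you have exported per-query clicks and conversions from your ads platform: rank the paid queries by conversion rate and pass the winners to the AEO content backlog. The `QueryStats` type, the 50-click floor, and the sample figures are hypothetical. The floor exists so that a query with 5 conversions out of 10 clicks does not outrank one with a steadier rate over hundreds of clicks.

```python
from dataclasses import dataclass


@dataclass
class QueryStats:
    """Paid-search performance for one query (figures here are hypothetical)."""
    query: str
    clicks: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.clicks if self.clicks else 0.0


def aeo_backlog(stats: list[QueryStats], min_clicks: int = 50, top_n: int = 3) -> list[str]:
    """Best-converting queries, skipping those with too few clicks to trust the rate."""
    eligible = [s for s in stats if s.clicks >= min_clicks]
    eligible.sort(key=lambda s: s.conversion_rate, reverse=True)
    return [s.query for s in eligible[:top_n]]


if __name__ == "__main__":
    report = [
        QueryStats("how to calculate ai-driven cac", 200, 20),
        QueryStats("best crm for startups", 500, 15),
        QueryStats("crm pricing", 10, 5),  # 50% rate, but only 10 clicks
    ]
    for query in aeo_backlog(report):
        print(query)
```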

The AEO Taskforce Roles:


We used to optimize for the "average user." In 2026, we optimize for the LLM as the Gatekeeper. Large Language Models are essentially the "concierge" for your customers. If the concierge doesn't know who you are or doesn't trust your data, your customer never hears your name.

This requires a shift in how we think about Brand Identity. Identity is no longer just visual; it is semantic. Your brand needs a "clear signature" that AI can identify across different contexts. Whether it's a social media post or a whitepaper, the "factual core" must be unwavering.

The Semantic Audit:


If 60% of searches end in "zero clicks" because the AI answered the question, your "Sessions" and "Clicks" metrics become vanity metrics. You may still be getting value, but you won't see it in Google Analytics. In 2026, growth leaders must track AI Visibility & Share of Model (SoM).


Citation Audit: Ask AI agents questions about your category. How often is your brand cited compared to competitors?

Sentiment Analysis: AI doesn't just show your link; it describes your reputation. If the AI summary says your product is "complex to set up," that is a GTM failure, not a marketing failure.

Legibility Score: How "readable" is your site for an LLM? High legibility leads to higher citation rates.
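There is no standard formula for Share of Model. One simple working definition, assumed here, is the fraction of collected AI answers for your category prompts that mention each brand, which turns the citation audit into a number you can track. How the answers are gathered (manually or through each vendor's API) is out of scope, and because answers vary from run to run, it helps to ask each prompt several times. The brands below are hypothetical.

```python
import re
from collections import Counter


def share_of_model(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Fraction of AI answers that mention each brand (case-insensitive, whole-word match)."""
    counts: Counter = Counter()
    for answer in answers:
        for brand in brands:
            # \b stops "Acme" from matching inside "Acmeville".
            if re.search(rf"\b{re.escape(brand)}\b", answer, re.IGNORECASE):
                counts[brand] += 1
    total = len(answers)
    return {brand: counts[brand] / total if total else 0.0 for brand in brands}


if __name__ == "__main__":
    answers = [
        "Acme CRM and Globex are both popular with startups.",
        "Globex is usually the cheapest option.",
        "Most reviewers prefer Globex for support.",
        "There is no clear winner in this category.",
    ]
    for brand, share in share_of_model(answers, ["Acme CRM", "Globex"]).items():
        print(f"{brand}: {share:.0%}")
```

Because the match relies on `\b` word boundaries, brand names that begin or end with punctuation would need a different matcher.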


Early-stage startups don’t win by being louder; they win by being clearer. AEO is the system that turns attention into conviction without the friction of a traditional funnel. It’s about being "understood faster" by the machines that are now making the decisions for your customers.

As we move into 2026, the brands that thrive will be those that treat their identity as a strategic operating system instead of just a coat of paint. They will be the ones who stop chasing traffic and start providing the definitive answers.
