2026-01-20 15:51:36

The role of Spec is undergoing a fundamental transformation, becoming the governance anchor of engineering systems in the AI era.

From first principles, software engineering has always been about one thing: stably, controllably, and reproducibly transforming human intent into executable systems.

Artificial Intelligence (AI) does not change this engineering essence, but it dramatically alters the cost structure:

Therefore, in the era of Agent-Driven Development (ADD), the core issue is not “can agents do the work,” but how to maintain controllability and intent preservation in engineering systems under highly autonomous agents.

Many attribute the “explosion” of ADD to more mature multi-agent systems, stronger models, or more automated tools. In reality, the true structural inflection point arises only when these three conditions are met:

Agents have acquired multi-step execution capabilities

With frameworks like LangChain, LangGraph, and CrewAI, agents are no longer just prompt invocations, but long-lived entities capable of planning, decomposition, execution, and rollback.

Agents are entering real enterprise delivery pipelines

Once in enterprise R&D, the question shifts from “can it generate” to “who approved it, is it compliant, can it be rolled back.”

Traditional engineering tools lack a control plane for the agent era

Tools like Git, CI, and Issue Trackers were designed for “human developer collaboration,” not for “agent execution.”

When these three factors converge, ADD inevitably shifts from an “efficiency tool” to a “governance system.”

In the context of ADD, Spec is undergoing a fundamental shift:

Spec is no longer “documentation for humans,” but “the source of constraints and facts for systems and agents to execute.”

Spec now serves at least three roles:

Verifiable expression of intent and boundaries

Requirements, acceptance criteria, and design principles are no longer just text, but objects that can be checked, aligned, and traced.

Stable contracts for organizational collaboration

When agents participate in delivery, verbal consensus and tacit knowledge quickly fail. Versioned, auditable artifacts become the foundation of collaboration.

Policy surface for agent execution

Agents can write code, modify configurations, and trigger pipelines. Spec must become the constraint on “what can and cannot be done.”

From this perspective, the status of Spec is approaching that of the Control Plane in AI-native infrastructure.

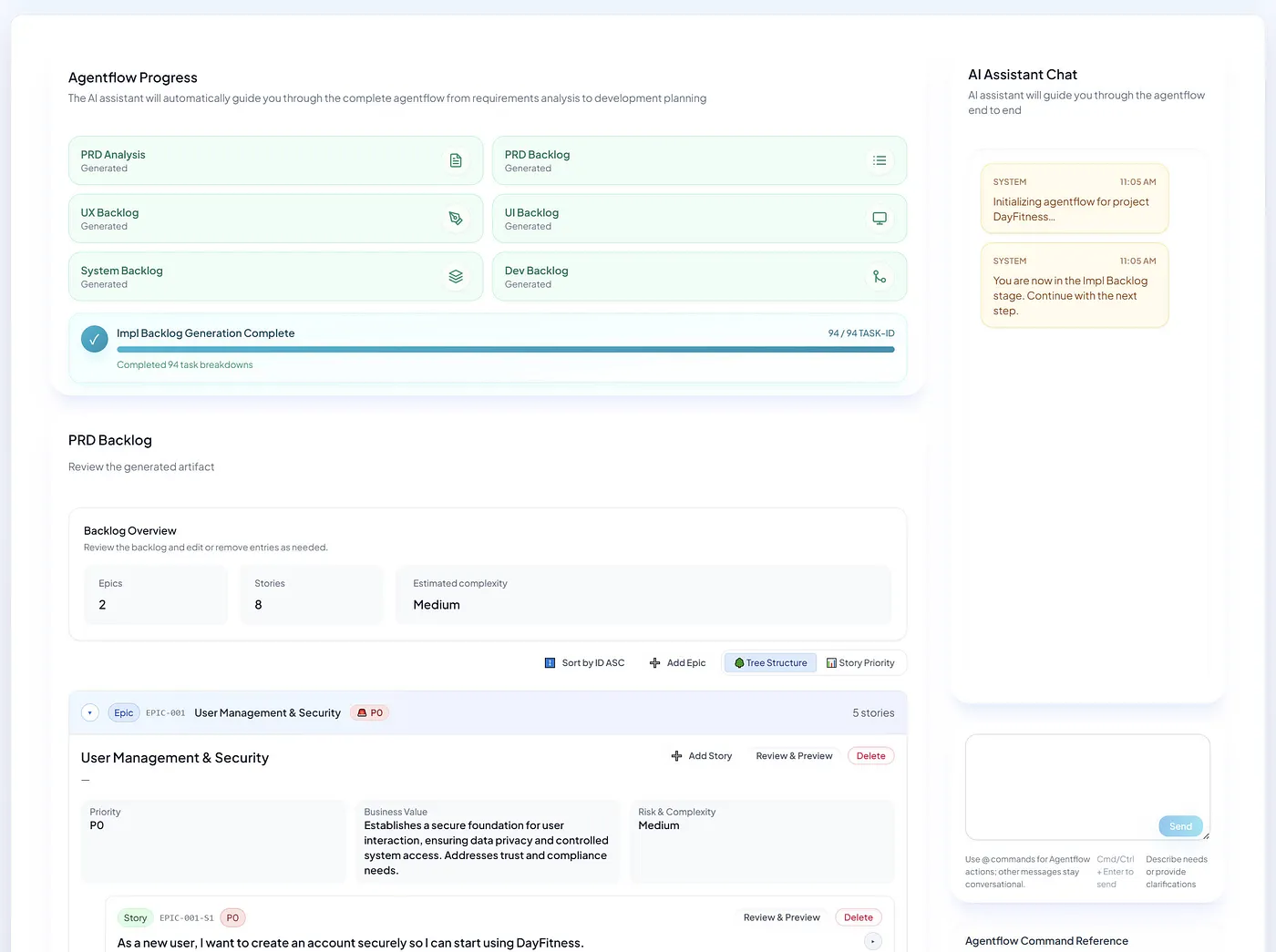

In recent systems (such as APOX and other enterprise products), an industry consensus is emerging:

APOX (AI Product Orchestration eXtended) is a multi-agent collaboration workflow platform for enterprise software delivery. Its core goals are:

APOX is not about simply speeding up code generation, but about elevating “Spec” from auxiliary documentation to a verifiable, constrainable, and traceable core asset in engineering—building a control plane and workflow governance system suitable for Agent-Driven Development.

Such systems emphasize:

This is not about “smarter AI,” but about engineering systems adapting to the agent era.

This is not to devalue code, but to acknowledge reality:

In the ADD era, the value of Spec is reflected in:

Code will be rewritten again and again; Spec is the long-term asset.

ADD also faces significant risks:

Can Spec become a Living Spec

That is, when key implementation changes occur, can the system detect “intent changes” and prompt Spec updates, rather than allowing silent drift?

Can governance achieve low friction but strong constraints

If gates are too strict, teams will bypass them; if too loose, the system loses control.

These two factors determine whether ADD is “the next engineering paradigm” or “just another tool bubble.”

From a broader perspective, ADD is the inevitable result of engineering systems becoming “control planes”:

Engineering systems are evolving from “human collaboration tools” to “control systems for agent execution.”

In this structure:

This closely aligns with the evolution path of AI-native infrastructure.

The winners of the ADD era will not be the systems with “the most agents or the fastest generation,” but those that first upgrade Spec from documentation to a governable, auditable, and executable asset. As automation advances, the true scarcity is the long-term control of intent.

2026-01-18 14:53:08

Voice input methods are not just about being “fast”—they are becoming a brand new gateway for developers to collaborate with AI.

I am increasingly convinced of one thing: PC-based AI voice input methods are evolving from mere “input tools” into the foundational interaction layer for the era of programming and AI collaboration.

It’s not just about typing faster—it determines how you deliver your intent to the system, whether you’re writing documentation, code, or collaborating with AI in IDEs, terminals, or chat windows.

Because of this, the differences in voice input method experiences are far more significant than they appear on the surface.

After long-term, high-frequency use, I have developed a set of criteria to assess the real-world performance of AI voice input methods:

Based on these criteria, I focused on comparing Miaoyan, Shandianshuo, and Zhipu AI Voice Input Method.

Miaoyan was the first domestic AI voice input method I used extensively, and it remains the one I am most willing to use continuously.

It’s important to clarify that Miaoyan’s command mode is not about editing text via voice. Instead:

You describe your need in natural language, and the system directly generates an executable command-line command.

This is crucial for developers:

This design is clearly focused on engineering efficiency, not office document polishing.

But there are some practical limitations:

From a product strategy perspective, it feels more like a “pure tool” than part of an ecosystem.

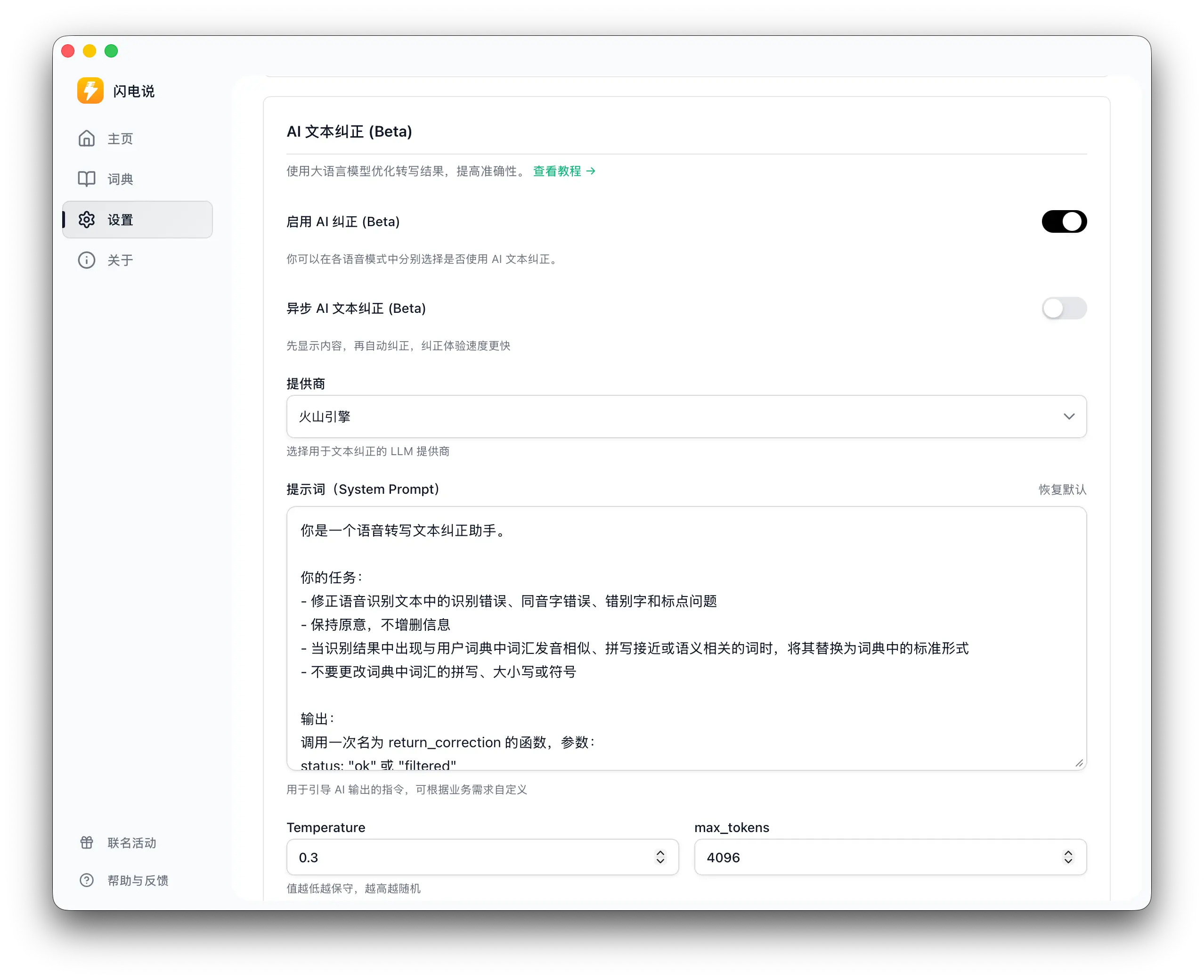

Shandianshuo takes a different approach: it treats voice input as a “local-first foundational capability,” emphasizing low latency and privacy (at least in its product narrative). The natural advantages of this approach are speed and controllable marginal costs, making it suitable as a “system capability” that’s always available, rather than a cloud service.

However, from a developer’s perspective, its upper limit often depends on “how you implement enhanced capabilities”:

If you only use it for basic transcription, the experience is more like a high-quality local input tool. But if you want better mixed Chinese-English input, technical term correction, symbol and formatting handling, the common approach is to add optional AI correction/enhancement capabilities, which usually requires extra configuration (such as providing your own API key or subscribing to enhanced features). The key trade-off here is not “can it be used,” but “how much configuration cost are you willing to pay for enhanced capabilities.”

If you want voice input to be a “lightweight, stable, non-intrusive” foundation, Shandianshuo is worth considering. But if your goal is to make voice input part of your developer workflow (such as command generation or executable actions), it needs to offer stronger productized design at the “command layer” and in terms of controllability.

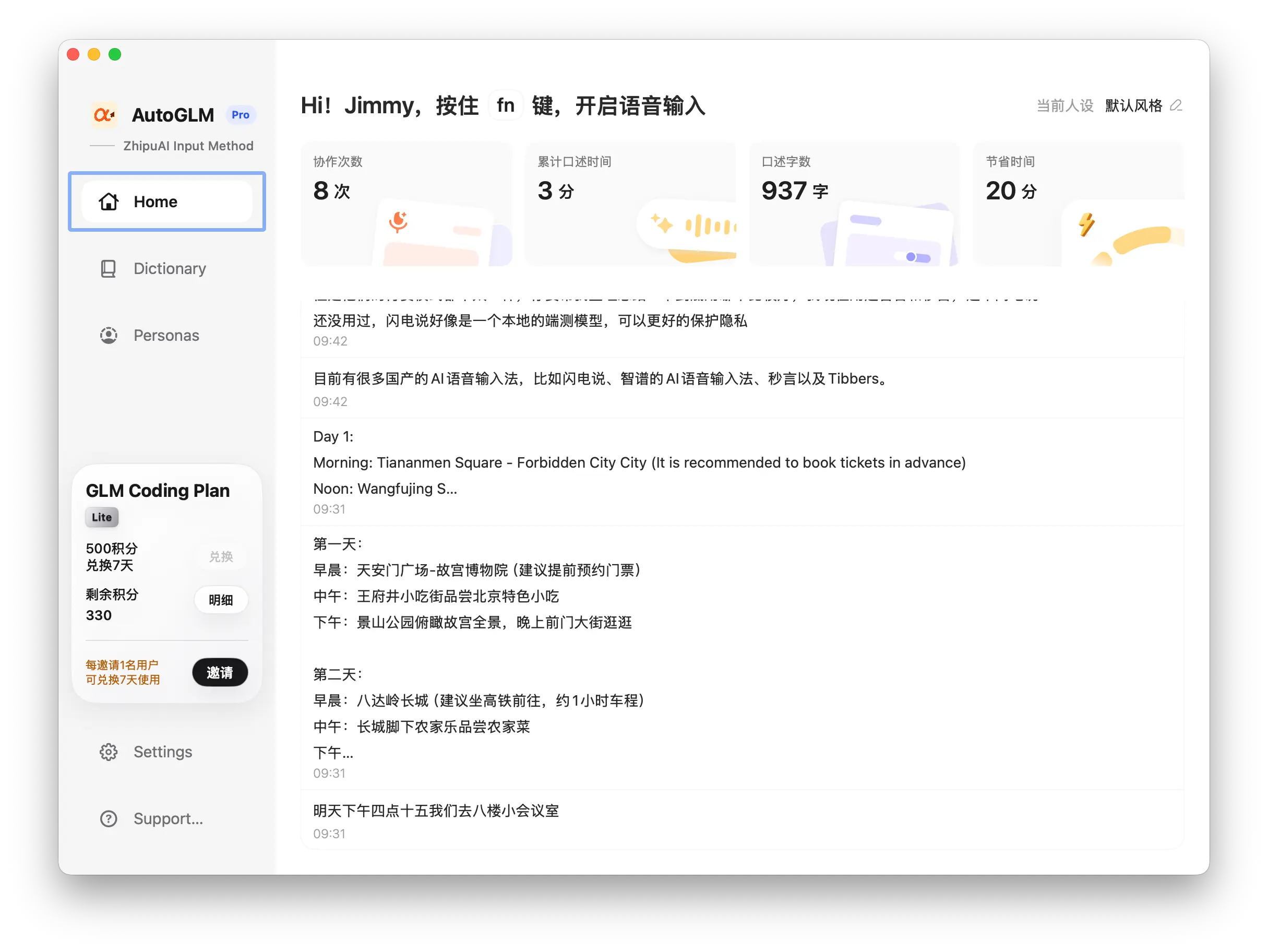

I also thoroughly tested the Zhipu AI Voice Input Method.

Its strengths include:

But with frequent use, some issues stand out:

Although I prefer Miaoyan in terms of experience, Zhipu has a very practical advantage:

If you already subscribe to Zhipu’s programming package, the voice input method is included for free.

This means:

From a business perspective, this is a very smart strategy.

The following table compares the three products across key dimensions for quick reference.

| Dimension | Miaoyan | Shandianshuo | Zhipu AI Voice Input Method |

|---|---|---|---|

| Response Speed | Fast, nearly instant | Usually fast (local-first) | Slightly slower than Miaoyan |

| Continuous Stability | Stable | Depends on setup and environment | Very stable |

| Idle Misrecognition | Rare | Generally restrained (varies by version) | Obvious: outputs characters even if silent |

| Output Cleanliness/Control | High | More like an “input tool” | Occasionally messy |

| Developer Differentiator | Natural language → executable command | Local-first / optional enhancements | Ecosystem-attached capabilities |

| Subscription & Cost | Standalone, separate purchase | Basic usable; enhancements often require setup/subscription | Bundled free with programming package |

| My Current Preference | Best experience | More like a “foundation approach” | Easy to keep but not clean enough |

The switching cost for voice input methods is actually low: just a shortcut key and a habit of output.

What really determines whether users stick around is:

For me personally:

These points are not contradictory.

The competition among AI voice input methods is no longer about recognition accuracy, but about who can own the shortcut key you press every day.

2026-01-11 11:29:28

The divide in technical standards and data sovereignty determines the global competitive landscape of infrastructure open source in the AI era.

In this article, I will use the differences in air quality data presentation in Apple Maps and Weather as a starting point to explore how technical standards and data sovereignty influence the open source paths of AI in different countries. I will further analyze why, in the AI era, infrastructure-level open source has become the key battleground for ecosystem dominance.

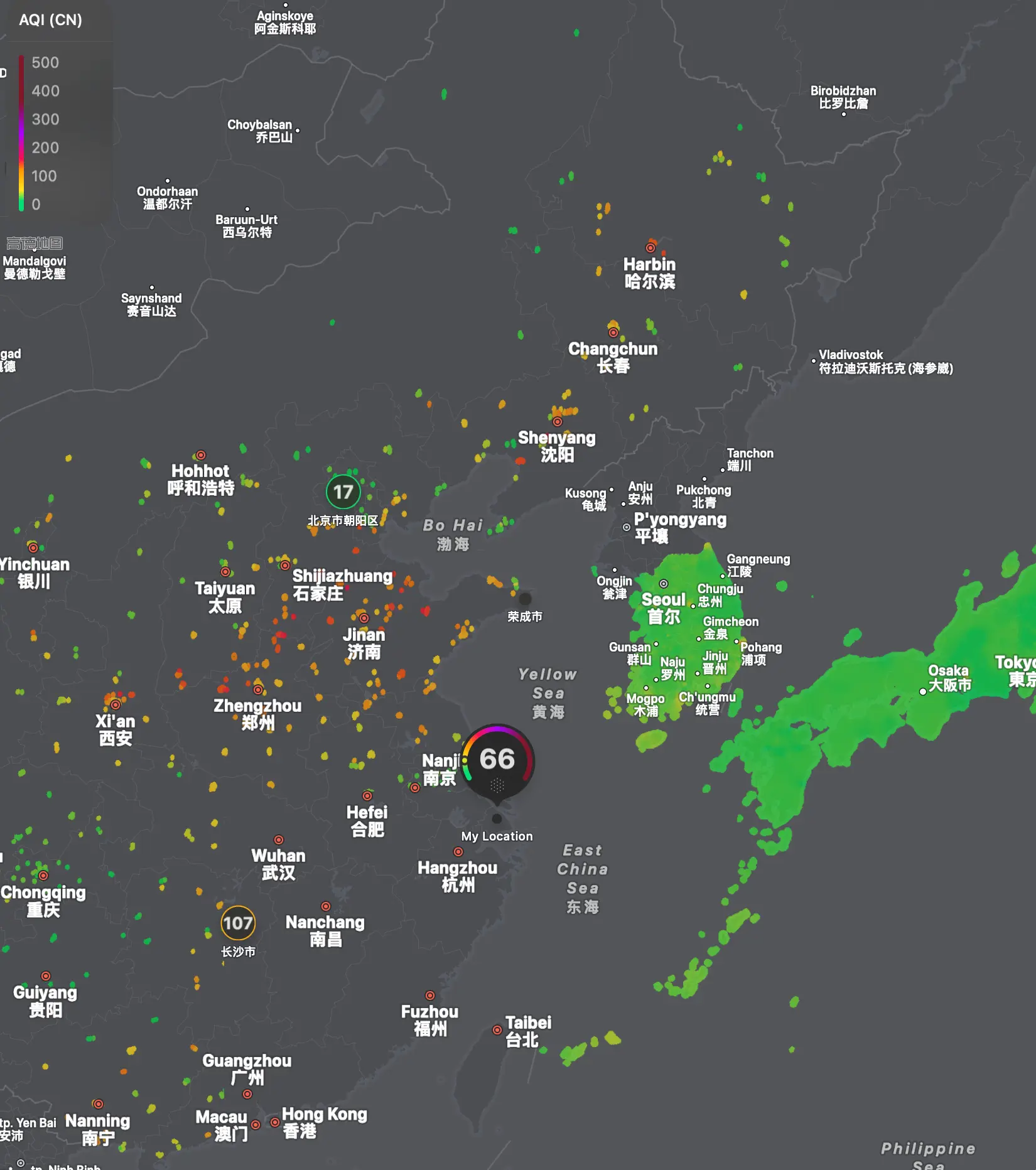

This article originates from a very everyday observation: Why is air quality data in China shown as “points” in Apple Maps and Weather, while in other countries it is often displayed as “areas”?

At first glance, it seems like a product experience difference. But when I reconsidered this issue in the context of engineering, standards, and system design, I realized it actually points to a much bigger question: how different countries understand the relationship between technology, standards, openness, and sovereignty.

As an engineer who has long worked in cloud native, AI infrastructure, and open source ecosystems, I gradually realized that this difference is not limited to air quality or map data. In the AI era, it is further amplified, directly affecting how we open source models, build infrastructure, and whether we can participate in the formulation of global rules.

Writing this article is not about judging right or wrong, but about using a concrete example to explain a structural difference and discuss the long-term impact and real opportunities this difference may bring in the AI era.

What is especially important: at the level of AI infrastructure and infra-level open source, the competition has just begun. China is not without opportunities, but the choice of path will become more critical than ever.

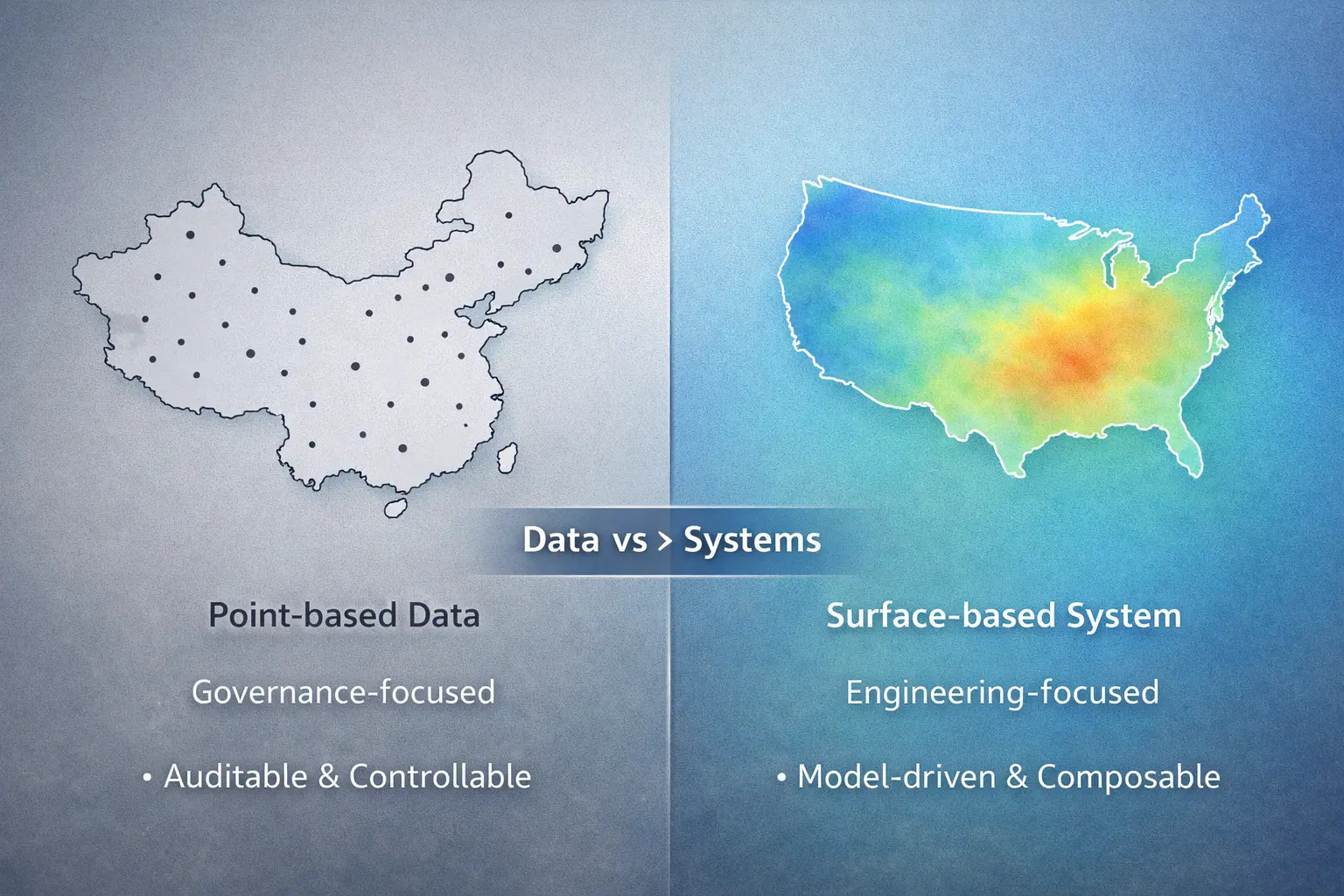

The following image illustrates the divide between spatial data, AI open source, and technical standards. By comparing how air quality data is presented in Apple Maps and Weather in different countries, you can intuitively feel the differences in technical standards and sovereignty strategies behind the scenes.

If you regularly use global products such as maps, weather, traffic, or various data services, you may notice a recurring phenomenon that is rarely discussed seriously: the way data is presented in China often differs significantly from global mainstream standards.

A very intuitive example comes from the air quality display in Apple Maps or Weather. In China, air quality is usually shown as discrete points; in the US, Europe, Japan, and other countries, it is often rendered as continuous coverage areas.

At first glance, this seems like a product experience difference, and may even lead people to mistakenly believe that “China’s data is incomplete.” But if you treat it as an engineering or system design issue, you will find: this is not a matter of data capability, but a different choice in technical standards, data sovereignty, and openness strategies.

And this choice is not limited to air quality.

Air quality is just a highly visible and relatively low-risk example. Similar differences have long existed in broader spatial and public data domains.

In global mainstream systems, such data is usually regarded as public information infrastructure. It is standardized, gridded, API-ified, allows interpolation, modeling, and redistribution, and is widely used in research, business, and product innovation.

In China, this data often takes another form: hierarchical, discrete, strictly defined, and with centralized interpretation authority.

This is not a technical preference in a single field, but a systemic logic of technology and governance.

Placing China in a global context, we can see that there are roughly three different paths worldwide regarding “how public data and technical standards are opened.”

Engineering-Open Type: Standards and Ecosystem First

Represented by the US and some European countries, the core features of this system are:

This path directly shaped the global landscape of foundational software and infrastructure-level open source. Linux, Kubernetes, and the cloud native system are essentially products of openness at the rules layer.

Governance-Sovereignty Type: Control and Auditability First

Represented by China, this path emphasizes:

In this system, “point data” is not a sign of technological backwardness, but a governable technical form. When a technical system is designed as a governance system, its primary goal is not reusability, but controllability.

Compromise-Coordinated Type: Cautious Openness, Engineering Internationalization

Some countries try to find a balance between the two, maintaining caution in spatial data while being highly internationalized in engineering and industry. This shows that the difference is not about being advanced or backward, but about different objective functions.

The following diagram compares the core characteristics, typical cases, and advantages/challenges of these three paths from a global perspective. The “Engineering-Open Type” on the left shapes the global infrastructure software landscape through standards and ecosystems; the “Governance-Sovereignty Type” in the middle emphasizes data sovereignty and security controllability but has limitations in influence at the rules layer; the “Compromise-Coordinated Type” on the right attempts to find a balance between security and openness. The divide between these three paths directly affects the infrastructure competition landscape of various countries in the AI era.

Among all spatial public data, air quality is an ideal observation window:

China does not lack air quality data; on the contrary, the density of monitoring stations is among the highest in the world. The real difference lies in:

“Point” means authenticity and traceability; “area” means models, inference, and redistribution of interpretive authority. This is precisely the watershed between technical standards and data sovereignty.

The following diagram compares two different technical paths. The left side, “Governance-Sovereignty Type,” emphasizes data traceability and controllability, using discrete point-based data presentation. The right side, “Engineering-Open Type,” allows model interpolation and inference, providing more user-friendly experience through continuous area-based coverage. The essence of this difference lies not in the level of technical capability, but in the different choices made between data sovereignty, governance capability, and open ecosystems.

With the above logic in mind, many phenomena in the AI era become less confusing.

For example:

The key is not “whether to open source,” but “which layer is open sourced.”

Open sourcing weights is essentially openness at the asset layer; infrastructure-level open source means relinquishing control over operating rules and interpretive authority.

The following diagram compares two different layers of AI open source. The left side shows “Model Weight Layer Open Source,” which is a typical feature of Chinese path—opening static digital assets with low cost and controllable risk, but not involving rule-making. The right side shows “Infrastructure Layer Open Source,” which is a core strategy of US path—by open sourcing development tools, protocol standards, runtimes, and compute scheduling and other infrastructure, defining how AI is used, thereby mastering ecosystem rules and interpretive authority. Key insight: Open sourcing model weights does not equal mastering AI ecosystem, and the real competitive focus is shifting to the infrastructure layer of “how AI runs.”

In the past year or two, US-led AI open source and ecosystem initiatives have shown a highly consistent direction: not rushing to open source the strongest models, but focusing on defining “how AI is used.”

The commonality of these actions: competing in model capability, but controlling the usage rules.

It is important to emphasize that this difference does not mean China is unaware of the issue.

Whether in policy discussions or within industry and research institutions, the risk of “only open sourcing models without controlling infrastructure and standard dominance” has been repeatedly discussed.

The real challenge lies in how to achieve a directional shift within the existing governance logic and risk framework. This shift has already appeared in some concrete practices.

In the AI era, infrastructure often starts with the most engineering-driven problems.

HAMi Project

Projects like HAMi do not focus on model capability, but on:

The significance of such projects is not about being “SOTA,” but about entering the domain of “how AI runs.”

AI Runtime Reconstruction from a System Software Review

Exploration at the research institution level is also noteworthy. The FlagOS initiative by the Beijing Academy of Artificial Intelligence is a clear signal: AI is being redefined as a system software issue, not just a model or algorithm problem.

Long-Term Tech Stack Investment by Industry Players

In the industry, Huawei’s strategy reflects a similar direction: not simply open sourcing models, but attempting to build a complete, controllable AI tech stack, from computing power to frameworks, platforms, and ecosystems. This is a slower, heavier, but more infrastructure-competitive path.

Taking a longer view, we find an easily overlooked fact:

At the level of AI infrastructure and infra-level open source, there is no settled pattern between China and the US.

The US advantage lies in:

China’s variables include:

The real uncertainty is not “whether we can catch up,” but whether it is possible to gradually open up space for engineering autonomy and standard co-construction while maintaining governance bottom lines.

The “points” and “areas” of air quality, model weights and the world of operations—behind these appearances lies not a simple technical route dispute, but how a country finds its own balance between openness, standards, and sovereignty.

In the AI era, this issue will not disappear, but will become more concrete and more engineering-driven. And this is precisely where there are still opportunities for China’s AI infrastructure open source.

2026-01-07 15:49:21

Compute governance is the critical bottleneck for AI scaling. From hardware consumption to core asset, this long-undervalued path needs to be redefined.

I have officially joined Dynamia as Open Source Ecosystem VP, responsible for the long-term development of the company in open source, technical narrative, and AI Native Infrastructure ecosystem directions.

I chose to join Dynamia not because it’s a company trying to “solve all AI problems,” but precisely the opposite—it’s because Dynamia focuses intensely on one unavoidable, yet long-undervalued core issue in AI Native Infrastructure: compute, especially Graphics Processing Units (GPU), are evolving from “technical resources” into infrastructure elements that require refined governance and economic management.

Through years of practice in cloud native, distributed systems, and AI infrastructure (AI Infra), I’ve formed a clear judgment: as Large Language Models (LLM) and AI Agents enter the stage of large-scale deployment, the real bottleneck limiting system scalability and sustainability is no longer just model capability itself, but how compute is measured, allocated, isolated, and scheduled, and how a governable, accountable, and optimizable operational mechanism is formed at the system level. From this perspective, the core challenge of AI infrastructure is essentially evolving into a “resource governance and Token economy” problem.

Dynamia is an AI-native infrastructure technology company rooted in open source DNA, driving efficiency leaps in heterogeneous compute through technological innovation. Its leading open source project, HAMi (Heterogeneous AI Computing Virtualization Middleware), is a Cloud Native Computing Foundation (CNCF) sandbox project providing GPU, NPU and other heterogeneous device virtualization, sharing, isolation, and topology-aware scheduling capabilities, widely adopted by 50+ enterprises and institutions.

In this context, Dynamia’s technical approach—starting from the GPU layer, which is the most expensive, scarcest, and least unified abstraction layer in AI systems, treating compute as a foundational resource that can be measured, partitioned, scheduled, governed, and even “tokenized” for refined accounting and optimization—aligns highly with my long-term judgment on AI-native infrastructure.

This path doesn’t use “model capabilities” or “application innovation” as selling points in the short term, nor is it easily packaged into simple stories. However, with rising compute costs, heterogeneous accelerators becoming the norm, and AI systems moving toward multi-tenant and large-scale operations, these infrastructure-level capabilities are gradually becoming prerequisites for the establishment and expansion of AI systems.

As Dynamia’s Open Source Ecosystem VP, I will focus on technical narrative of AI-native infrastructure, open source ecosystem building, and global developer collaboration, promoting compute from “hardware resource being consumed” to governable, measurable, and optimizable AI infrastructure core asset, laying the foundation for the scaling and sustainable evolution of AI systems in the next stage.

Joining Dynamia is an important milestone in my career and a concrete action demonstrating my long-term optimism about AI-native infrastructure. Compute governance is not a short-term trend that yields quick results, but an infrastructure proposition that cannot be bypassed for AI large-scale deployment. I look forward to exploring, building, and landing solutions on this long-undervalued path with global developers.

2026-01-04 10:25:48

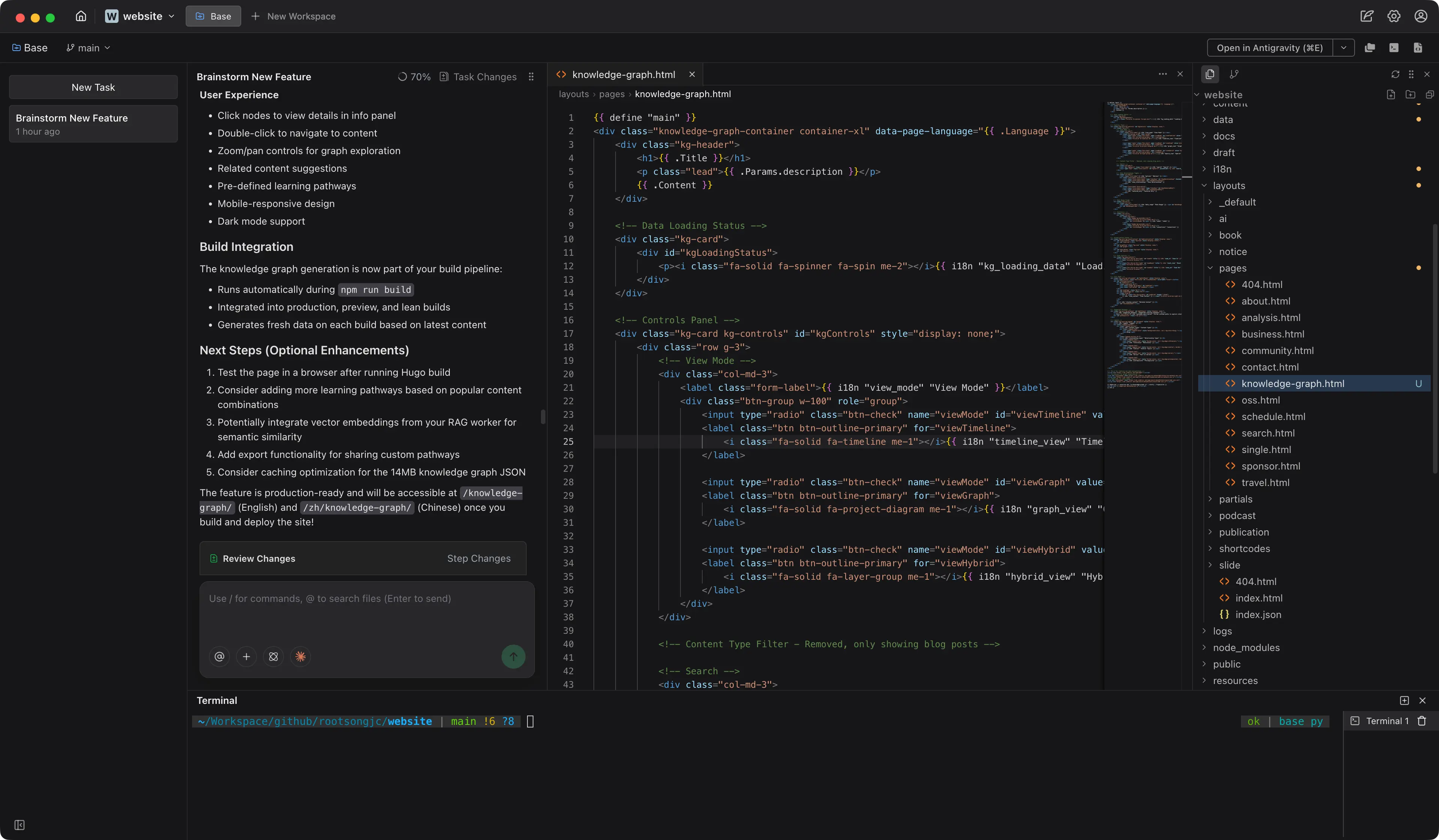

I’ve been spending more time recently experimenting with vibe coding tools on real projects, not demos. One of those projects is my own website, where I constantly tweak content structure, navigation, and layout.

During this process, I started using Verdent’s standalone Mac app more seriously. What stood out was not any single feature, but how different the experience felt compared to traditional AI coding tools.

Verdent doesn’t behave like an assistant waiting for instructions. It behaves more like an environment where work happens in parallel.

Most AI coding tools begin with a conversation. Verdent begins with tasks.

When I opened my website repository in the Verdent app, I didn’t start with a long prompt. I created multiple tasks directly: one to rethink navigation and SEO structure, another to explore homepage layout improvements, and a third to review existing content organization.

Each task immediately spun up its own agent and workspace. From the beginning, the app encouraged me to think in parallel, the same way I normally would when sketching ideas on paper or jumping between files.

This framing alone changes how you work.

Switching contexts is unavoidable in real development work. What usually breaks is continuity.

Verdent handles this well. Each task preserves its full context independently. I could stop one task mid-way, switch to another, and come back later without re-explaining the problem or reloading files.

For example, while one agent was analyzing my site’s navigation structure, another was exploring layout options. I moved between them freely. Nothing was lost. Each agent remembered exactly what it was doing.

This feels closer to how developers think than how chat-based tools operate.

Parallel work only becomes truly safe when code changes are isolated. When parallelism moves from discussion to actual code modification, risk management becomes essential.

Verdent solves this with Workspaces. Each workspace is an isolated, independent code environment with its own change history, commit log, and branches. This isn’t just about separation—it’s about making concurrent code changes manageable.

What this means in practice:

I intentionally let different agents operate on overlapping parts of my project: one modifying Markdown content and links, another adjusting CSS and layout logic. Both ran in parallel. No conflicts emerged. Later, I reviewed the diffs from each workspace and merged only what made sense.

This kind of isolation removes significant anxiety from AI-assisted coding. You stop worrying about breaking things and start experimenting more freely, knowing that each change exists in its own contained environment.

Parallelism doesn’t mean that all agents complete the same phase of work at the same time—instead, by isolating and overlapping phases, what was once a strictly sequential process is compressed into a more efficient, collaborative mode.

In Verdent, each agent runs in its own workspace, essentially an automatically managed branch or worktree. In practice, I often create multiple tasks with different responsibilities for the same requirement, such as planning, implementation, and review. But this doesn’t mean they all complete the same phase simultaneously.

These tasks are triggered as needed, each running for a period and producing clear artifacts as boundaries for collaboration. The planning task generates planning documents or constraint specifications; the implementation task advances code changes based on those documents and produces diffs; the review task, according to the established planning goals and audit criteria, performs staged reviews of the generated changes. By overlapping phases around artifacts, the originally strict sequential process is compressed into a workflow that more closely resembles team collaboration.

The value of splitting into multiple tasks is not parallel execution, but parallel cognition and clear collaboration boundaries.

While it’s technically possible to put multiple roles into a single task, this causes planning, implementation, and review to share the same context, which weakens role isolation and the auditability of results.

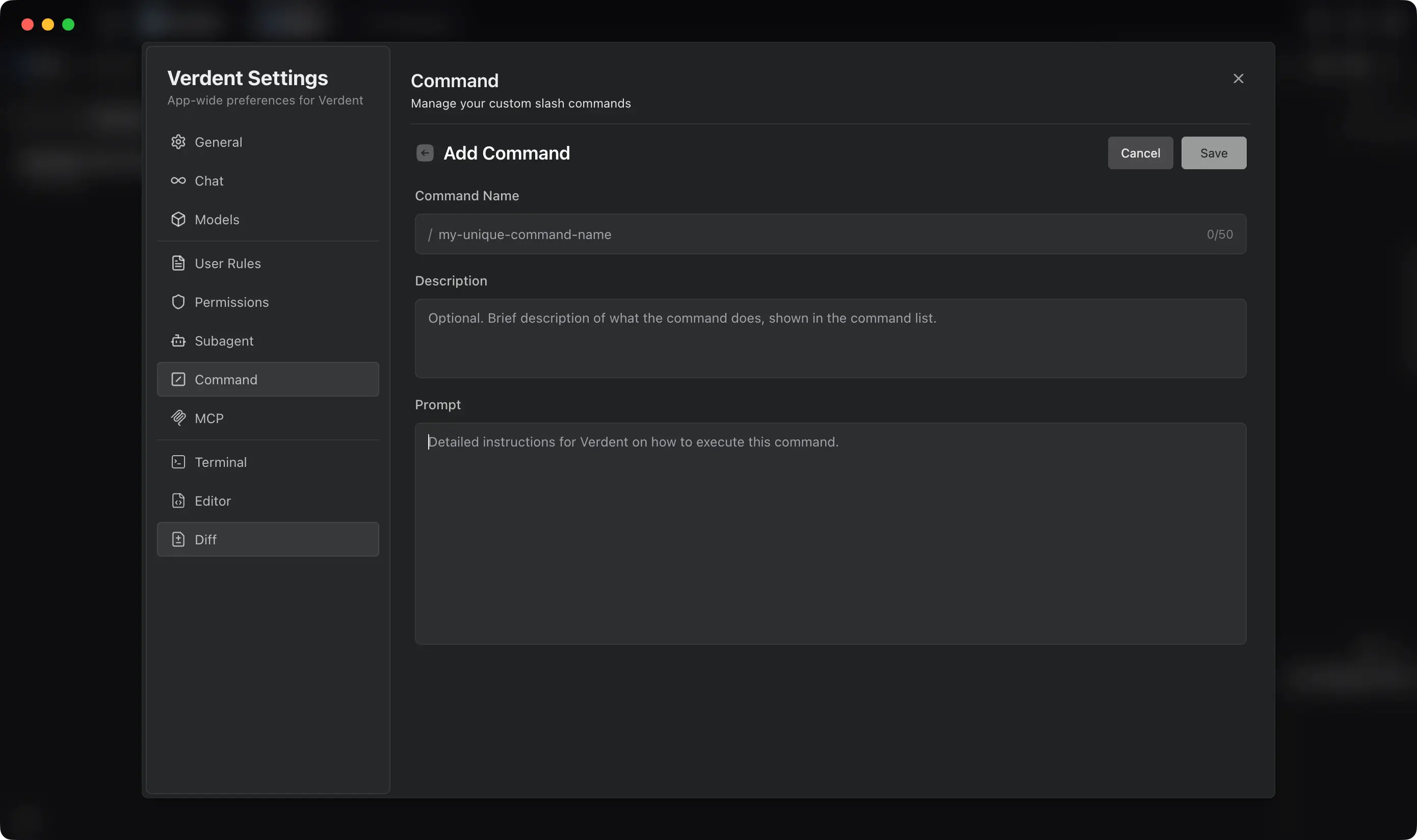

Beyond the workflow model itself, Verdent exposes a surprisingly rich set of configurable capabilities.

It allows users to customize MCP settings, define subagents with configurable prompts, and create reusable commands via slash (/) shortcuts. Personal rules can be written to influence agent behavior and response style, and command-level permissions can be configured to enforce basic security boundaries. Verdent also supports multiple mainstream foundation models, including GPT, Claude, Gemini, and K2. For users who prefer a lightweight coding experience without a full IDE, Verdent offers DiffLens as an alternative review-oriented interface. Both subscription-based and credit-based pricing models are supported.

That said, Verdent makes a clear set of trade-offs. It is not built around tab-based code completion, nor does it offer a plugin system. If it did, it would start to resemble a traditional IDE - which does not seem to be its goal. Verdent is not designed for direct, fine-grained code manipulation; most changes are mediated through conversational tasks and agent-driven edits. This makes the experience clean and focused, but it also means that for large, highly complex codebases, Verdent may function better as a complementary orchestration layer rather than a full-time development environment.

There are many AI-assisted coding tools emerging right now. Some focus on smarter editors, others on faster generation.

Verdent feels different because it focuses on orchestration, not just assistance.

It doesn’t try to replace your editor. It sits one level above, coordinating planning, execution, and review across multiple agents.

That makes it particularly suitable for exploratory work, refactoring, and early-stage design - exactly the kind of work I was doing on my website.

Using Verdent’s standalone app didn’t just speed things up. It changed how I structured work.

Instead of doing everything sequentially, I started thinking in parallel again - and letting the system support that way of thinking.

Verdent feels less like an AI feature and more like an environment that assumes AI is already part of how development happens.

For developers experimenting with AI-native workflows, that shift is worth paying attention to.

2025-12-31 18:02:01

The waves of technology keep evolving; only by actively embracing change can we continue to create value. In 2025, I chose to move from Cloud Native to AI Native—this year marked a key turning point for personal growth and system reinvention.

2025 was a turning point for me. This year, I not only changed my technical direction but also the way I approach problems. Moving from Cloud Native infrastructure to AI Native Infrastructure was not just a migration of content, but an upgrade in mindset.

This year, I conducted a large-scale refactoring of the website and systematically organized the content. Beyond the technical improvements, I want to share my thoughts and changes throughout the year.

At the beginning of 2025, I made an important decision: to reposition myself from a Cloud Native Evangelist to an AI Infrastructure Architect. This was not just a change in title, but a strategic transformation after careful consideration.

As I witnessed the surge of AI technologies and the rise of Agent-based applications reshaping software, I realized that clinging to the boundaries of Cloud Native might mean missing an era. So, I systematically adjusted the website’s content structure, shifting the focus toward AI Native Infrastructure.

This transformation was not about abandoning the past, but extending forward from the foundation of Cloud Native. Classic content like Kubernetes and Istio remains and is continuously updated, but new topics such as AI Agent and the AI OSS landscape have been added, forming a more complete knowledge map.

Agents represent a major evolution in software for the AI era. When I tried to understand Agent design principles, I found fragmented information everywhere but lacked a systematic knowledge base.

So I created content that analyzes the Agent context lifecycle and control loop mechanisms, summarizing several proven architectural patterns. To make complex knowledge easier to digest, I organized it into logical sections so readers can learn step by step.

AI tools and frameworks are emerging rapidly, with new projects appearing daily. To help readers quickly grasp the ecosystem, I built a comprehensive AI OSS database.

This database covers everything from Agent frameworks to development tools and deployment services. I not only included active projects but also established an archive mechanism, preserving detailed information on over 150 historical projects. More importantly, I developed a scoring system to objectively evaluate projects across dimensions like quality and sustainability, helping readers decide which tools are worth investing time in.

In 2025, I wrote over 120 blog posts. Compared to previous years, these articles focused more on observing and reflecting on technology trends, rather than just technical tutorials.

I started paying attention to deeper questions: How will AI infrastructure evolve? What does Beijing’s open source initiative mean for the AI industry? What ripple effects might a tech acquisition trigger? These articles allowed me and my readers to not only see “what” technology is, but also “why” and “what’s next.”

No matter how good the content is, if it can’t be easily found and read, its value is greatly diminished. In 2025, I invested significant effort into website functionality, with one goal: to provide readers with a smoother reading experience.

As the volume of content grew, the original search function could no longer meet demand. I redesigned the search system to support fuzzy search and result scoring, and optimized index loading performance. More importantly, the new search interface is more user-friendly, supporting keyboard navigation and category filtering so users can find what they want faster.

Mobile reading experience has improved significantly. I refactored the mobile navigation and table of contents, making reading on phones much smoother. Dark mode is now more refined, fixing several display issues and ensuring images and diagrams look good on dark backgrounds.

A major change was optimizing the WeChat Official Account publishing workflow. Previously, publishing website content to WeChat required manual handling of many details; now, it’s almost one-click export. This workflow automatically processes images, metadata, styles, and all details, reducing a half-hour task to just a few minutes.

Additionally, I added a glossary feature for technical term highlighting and tooltips; improved SEO and social sharing metadata; and cleaned up outdated content. These seemingly minor improvements quietly enhance the user experience.

Looking back at content creation in 2025, I found clear changes in several dimensions.

Early content leaned toward technical tutorials and practical guides, showing “how to do.” This year, I focused more on “why” and “what are the trends.” I wrote more technology trend analyses, ecosystem maps, and in-depth case studies. These may not directly teach you how to use an API, but they help you understand the direction of technological evolution.

AI is a global wave and cannot be limited to the Chinese-speaking world. In 2025, I wrote bilingual documentation for almost all new AI tools, and important blog posts also have English versions. This increased the workload, but allowed the content to reach a broader audience.

Text is efficient, but not all knowledge is best expressed in words. This year, I used many architecture and schematic diagrams to explain complex concepts, adding 59 new charts. These visual elements lower the barrier to understanding, making abstract concepts more intuitive. I also optimized image display in dark mode to ensure consistent visual experience.

2025 was not only a year of shifting content themes toward AI, but also a year of deep practice in AI-assisted programming.

I developed a VS Code plugin and created many prompts to automate repetitive tasks. I experimented with various AI programming tools and settled on a toolchain that suits me. I even migrated the website to Cloudflare Pages and used its edge computing services to develop a chatbot. These practices greatly improved development efficiency, giving me more time to focus on thinking and creating rather than mechanical coding.

This made me realize: AI will not replace developers, but developers who use AI well will replace those who do not. I also shared more insights to help others master AI-assisted programming.

Looking back at 2025, the site underwent a profound transformation—from a Cloud Native tech blog to an AI infrastructure knowledge base. But this is just the beginning, not the end.

Looking forward to 2026, I plan to continue deepening in several areas:

2025 was a year of change and growth. From Cloud Native to AI Native, from technical practice to ecosystem observation, both the content and functionality of the site have made qualitative leaps.

What makes me happiest is that this transformation allowed me and my readers to stand at the forefront of the technology wave. We are not just learning new technologies, but thinking about how technology changes the world and the way we write software.

The waves of technology keep evolving; only by actively embracing change can we continue to create value. Thank you to every reader for your companionship and support. I look forward to sharing more insights and practices in 2026.

Further Reading: