2025-12-29 20:17:41

WinterFest 2025: The Festival of Artisanal Software is back with a fantastic new collection of carefully crafted software for writing, research, and thinking.

Innovative software often comes from small teams, crafted with imagination and a vision of a better way to work. There are no bundles, games, or prices that are too good to be true: just fresh software with fantastic support at great, sustainable prices.

Software artisans from around the globe have come together for this time-limited sale to bring you innovative apps to assist you with everyday work. This incredible catalog of productivity software includes:

These amazing deals don’t come around often, so act today to start 2026 off with the best software available from this terrific group of developers.

Visit the WinterFest website to learn more about these amazing deals.

Our thanks to WinterFest for sponsoring MacStories this week.

2025-12-24 00:40:26

Enjoy the latest episodes from MacStories’ family of podcasts:

This week, Federico and John look ahead to 2026 and what it will mean for apps, smarter Siri, and more.

On AppStories+, Federico and John update listeners on their latest app experiments and holiday hardware projects.

This week, Brendon, Federico, and John pick their top handheld consoles of 2025.

On NPC XL, Federico, John, and Brendon share their HOTY Honorable Mentions and trends they expect for 2026.

Visit AppStories.net to learn more about, and subscribe to, the extended, high-bitrate audio version of AppStories that is delivered early each week.

The Contenders:

NPC XL is a weekly members-only version of NPC with extra content, available exclusively through our new Patreon for $5/month. Each week on NPC XL, Federico, Brendon, and John record a special segment or deep dive about a particular topic that is released alongside the “regular” NPC episodes. You can subscribe here.

MacStories launched its first podcast in 2017 with AppStories. Since then, the lineup has expanded into a family of weekly shows that also includes MacStories Unwind, Magic Rays of Light, Comfort Zone, NPC: Next Portable Console, and First, Last, Everything. Collectively, they cover a broad range of the modern media world, from Apple’s streaming service and videogame hardware to apps, for a growing audience that appreciates our thoughtful, in-depth approach to media.

If you’re interested in advertising on our shows, you can learn more here or by contacting our Managing Editor, John Voorhees.

2025-12-20 02:34:41

Enjoy the latest episodes from MacStories’ family of podcasts:

This week, Federico and John reveal the winners of the 2025 MacStories Selects Awards, which celebrate the exceptional design, innovation, and creativity of apps across the iPhone, iPad, Mac, and Apple Watch.

On AppStories+, John has some Apple Music discovery tips for Federico, and they reveal the iPhone features they don’t use.

This week, handhelds are shipping for the holidays, AYANEO makes a bold bet on a phone, a new Strix Halo tablet one-ups the ASUS ROG Flow Z13, and John dips a toe in the Bazzite waters.

On NPC XL, Federico jumps into the Bazzite mini PC world, while Brendon is revisiting the iPod on handheld consoles.

Chris reflects on a big year of changes, Matt has turned his garage into a mini-factory, and the gang buys clothes, but in a techy way.

On Cozy Zone, we draft fonts…for real this time!

This week, Federico and John close out the year by sharing their favorite music of 2025.

This episode is sponsored by:

For even more from the Comfort Zone crew, you can subscribe to Cozy Zone. Cozy Zone is a weekly bonus episode of Comfort Zone where Matt, Niléane, and Chris invite listeners to join them in the Cozy Zone where they’ll cover extra topics, invent wilder challenges and games, and share all their great (and not so great) takes on tech. You can subscribe to Cozy Zone for $5 per month here or $50 per year here.

We deliver MacStories Unwind+ to Club MacStories subscribers ad-free with high bitrate audio every week. To learn more about the benefits of a Club MacStories subscription, visit our Plans page.

2025-12-19 05:07:45

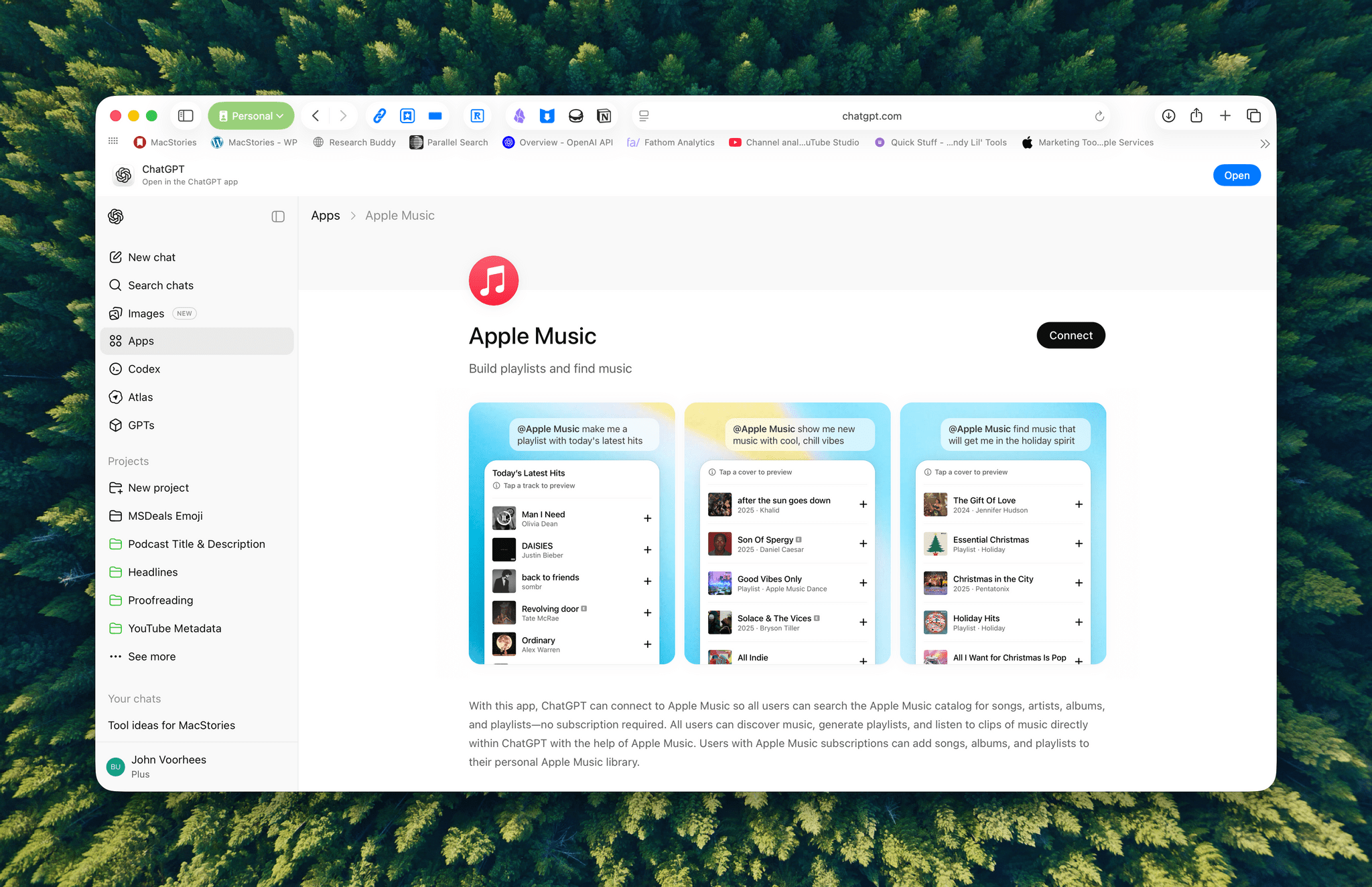

Developers may now submit ChatGPT apps, which were announced earlier this year at OpenAI’s DevDay, for review and publication. OpenAI’s blog post explains that:

“Apps extend ChatGPT conversations by bringing in new context and letting users take actions like order groceries, turn an outline into a slide deck, or search for an apartment.”

Under the hood, OpenAI is using the Model Context Protocol (MCP), which was pioneered by Anthropic late last year and donated to the Agentic AI Foundation last week.

Apps are currently available in the web version of ChatGPT from the sidebar or tools menu and, once connected, can be accessed by @mentioning them. Early participants include Adobe, which preannounced its apps last week, Apple Music, Spotify, Zillow, OpenTable, Figma, Canva, Expedia, Target, AllTrails, Instacart, and others.

I was hoping the Apple Music app would allow me to query my music library directly, but that’s not possible. Instead, it allows ChatGPT to do things like search Apple Music’s full catalog and generate playlists, which is useful but limited.

ChatGPT’s Apple Music app lets you create playlists.

Currently, there’s no way for developers to complete transactions inside ChatGPT. Instead, sales can be kicked to another app or the web, although OpenAI says it is exploring ways to offer transactions inside ChatGPT. Developers who want to submit an app must follow OpenAI’s app submission guidelines (sound familiar?) and can learn more from a variety of resources that OpenAI has made available.

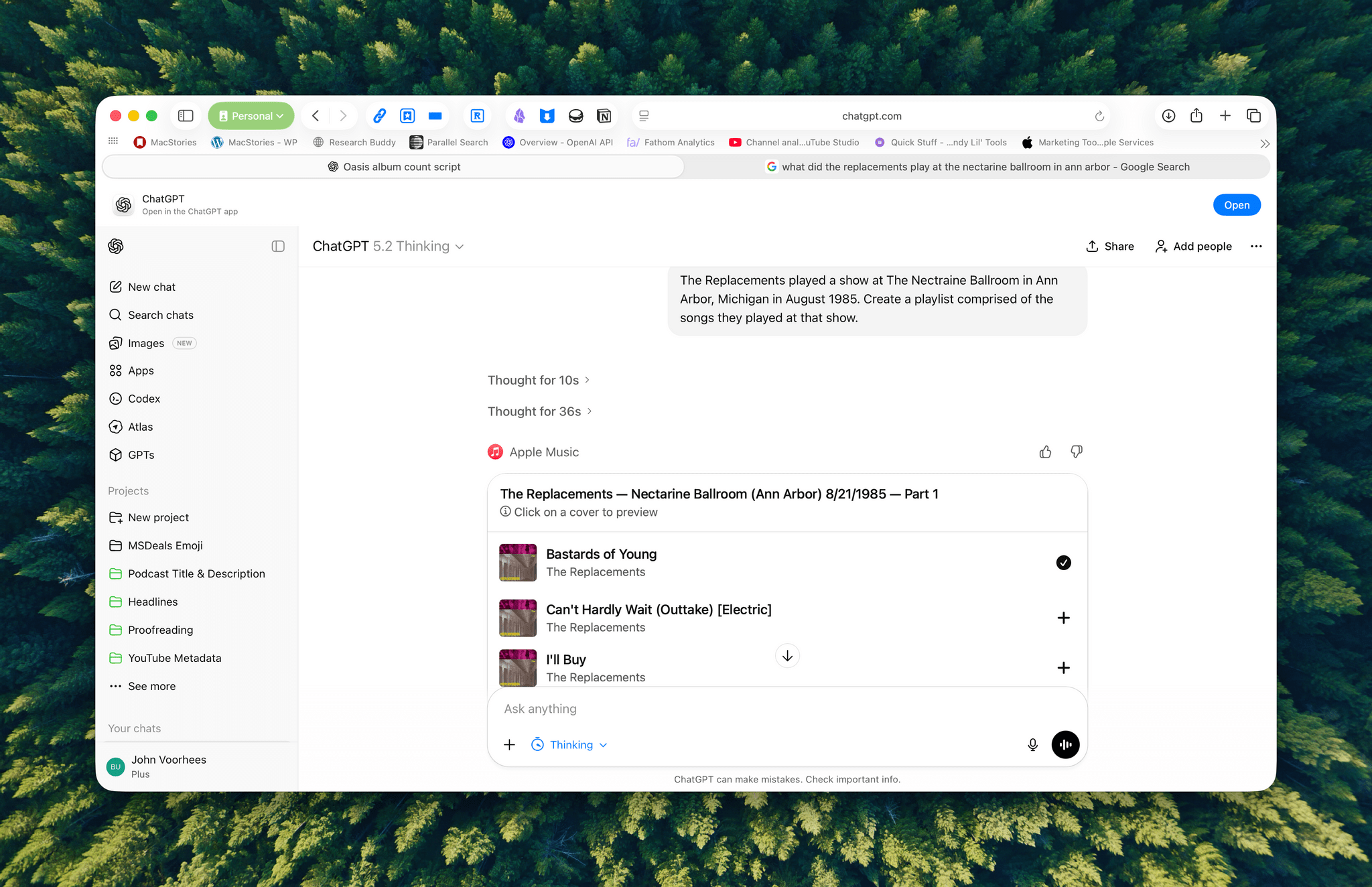

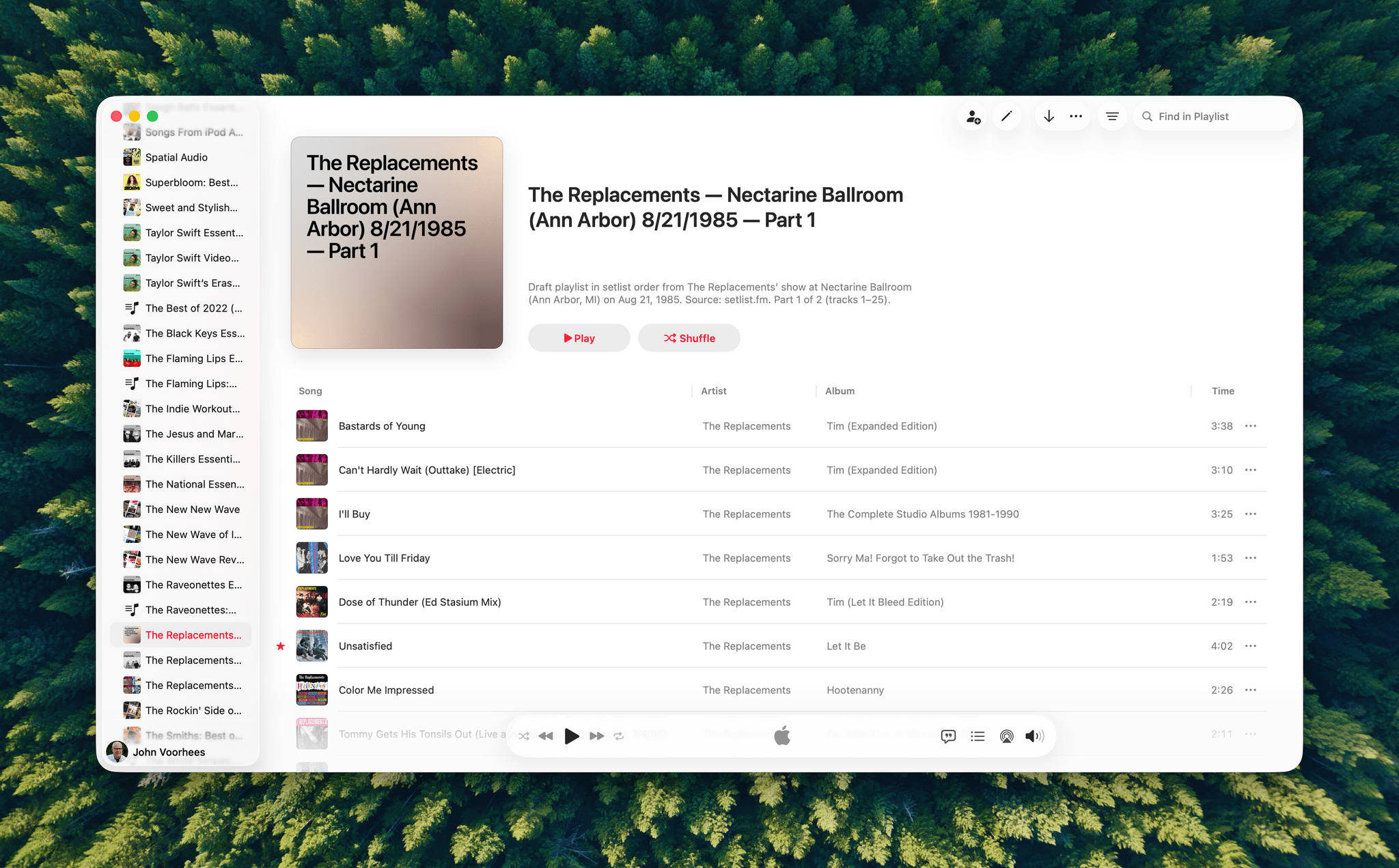

A playlist generated by ChatGPT from a 40-year-old setlist.

I haven’t spent a lot of time with the apps that are available, but despite the lack of access to your library, the Apple Music integration can be useful when combined with ChatGPT’s world knowledge. I asked it to create a playlist of the songs that The Replacements played at a show I saw in 1985, and while I don’t recall the exact setlist, ChatGPT matched what’s on Setlist.fm, a user-maintained wiki of live shows. I could have made this playlist myself, but it was convenient to have ChatGPT do it instead, even if the Apple Music integration is limited to 25-song playlists, which meant that The Replacements’ setlist was split into two playlists.
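The split described above comes down to simple chunking. As a rough sketch, assuming the 25-song cap behaves as a hard per-playlist limit (the function name and the placeholder setlist below are hypothetical and are not part of ChatGPT’s or Apple Music’s actual tooling):

```python
from typing import List

# Per-playlist cap mentioned in the post; treated here as a fixed assumption.
MAX_SONGS_PER_PLAYLIST = 25


def split_into_playlists(songs: List[str], limit: int = MAX_SONGS_PER_PLAYLIST) -> List[List[str]]:
    """Split an ordered setlist into consecutive playlists of at most `limit` songs."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return [songs[i:i + limit] for i in range(0, len(songs), limit)]


# Hypothetical 32-song setlist with placeholder titles, not the actual 1985 show.
setlist = [f"Song {n}" for n in range(1, 33)]
playlists = split_into_playlists(setlist)

for number, playlist in enumerate(playlists, start=1):
    print(f"Playlist {number}: {len(playlist)} songs")
```

Any setlist longer than 25 songs but no longer than 50 ends up as two playlists under this assumption, with the running order preserved across the split.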

We’re still in the early days of MCP, and participation by companies will depend on whether they can make incremental sales to users via ChatGPT. Still, there’s clearly potential for apps embedded in chatbots to take off.

2025-12-18 23:50:27

Our desk setups. Federico (left) and John (right).

John: As 2025 comes to an end, Federico and I thought we’d cap off the year with a final update on our setups. We just went through this in November, but both Federico and I decided to take advantage of Black Friday sales to improve our setups in very different ways. Let’s take a look.

My changes were primarily to my office setup. I’ve wanted a gaming PC for a long time, but I never had a good place to set one up. The solution was to go with a high-end mini PC, the GMKtec EVO-X2, which features a Strix Halo processor, 64GB of RAM, and a 2TB SSD. It came with Windows installed, but after a few days, I installed Bazzite, an open-source Linux distribution that offers a SteamOS-like experience, which makes it dead simple to access my Steam videogame library.

Two things kept me from getting a PC earlier. The first was space, which the EVO-X2 takes care of nicely because it’s roughly the size of the Mac mini before its recent redesign.

The second and bigger issue, though, was my Studio Display. It’s an excellent screen, but it’s showing its age with its 60Hz refresh rate and 600 nits of brightness. Plus, with one Thunderbolt port for connecting to your Mac and three USB-C ports, the Studio Display is limiting. Without HDMI or DisplayPort, connecting it to other video sources like a PC or game console is nearly impossible.

The GMKtec EVO-X2 mini PC, Switch 2, and 8BitDo Ultimate 2 controller

So I also bought a deeply discounted ASUS ROG Swift 32” 4K OLED Gaming Monitor, which is attached to my desk using a VIVO VESA desk mount. I’d wanted a bigger screen for work anyway, and with its 240Hz refresh rate and bright OLED panel, the ASUS has been excellent. Where the ASUS display really shines, though, is when it’s connected to my GMKtec and Nintendo Switch 2. As I covered on NPC: Next Portable Console recently, the mini PC, which also allows me to stream games to my handhelds over my local network, combined with a great monitor was the missing link in my setup, delivering a flexibility I just didn’t have before.

Along with the gaming part of my desktop setup, I updated my desktop lighting with two Philips Hue Play Wall Washer lights, which cast light against the wall behind my desk, and a Hue Play HDMI Sync Box 8K, which syncs that light with what’s onscreen. In fact, the Sync Box 8K works with all the Hue lights in my office, allowing me to create a more immersive environment when I’m gaming.

I’ve been using a handful of other accessories lately, too, including:

That’s it from me for 2025, folks. Enjoy the holidays! Things will be a little quieter at MacStories over the next couple of weeks as we unwind and spend time with family and friends, but we’ll be back with lots more before long.

Federico: For this final update to my setup before the end of the year, I focused on two key areas: audio and my living room TV setup.

The biggest – literally – upgrade for me this month has been switching from my previous LG 65” TV to a flagship LG G5 77” model. I’d been keeping an eye on this TV for a while: it’s LG’s first model to use Tandem OLED technology, and it boasts higher brightness in both SDR and HDR with reduced reflections thanks to the new panel. I took advantage of an incredible Black Friday deal in Italy to buy it at 50% off, and we love it. The TV rests almost flush against the wall thanks to its compact design, but since it’s not completely flush, it allowed us to re-install our Philips Hue Gradient Light Strip behind it. Since I was in a renovation mood and I also wanted to future-proof my setup for the Steam Machine in 2026, I also upgraded to a Hue Bridge Pro and replaced my previous Hue Sync Box with the latest 8K edition that is certified for HDMI 2.1 connections. Speaking of gaming: as I discussed this week on NPC, I got a Beelink SER9 Pro mini PC and installed Bazzite on it to get a taste for SteamOS in the living room; this one will eventually be replaced by a more powerful Steam Machine.

The other area of improvement was audio. I recently realized that I wanted to fully take advantage of Apple Music and Spotify’s support for lossless playback with wireless headphones, which is something that, alas, Apple’s AirPods Max do not support. So after much research, I decided to treat myself to a pair of Bowers & Wilkins Px8 S2, which are widely considered some of the best Bluetooth headphones that you can buy right now. But you may be wondering: how do you even connect these headphones to Apple devices that do not support Qualcomm’s aptX Lossless or Adaptive codecs? That’s where the BT-W6 Bluetooth dongle comes in. In researching this field, I came across this relatively new category of small Bluetooth adapters that plug into an iPhone’s USB-C port (they work on a Mac or iPad, too) and essentially override the device’s built-in Bluetooth chip. Once headphones are paired with the dongle rather than the phone, wireless streaming from Apple Music or Spotify will use aptX Lossless instead of the lossy AAC and SBC codecs that Apple devices support natively. The difference in audio quality is outstanding, and it makes me appreciate the Px8 S2 for all they have to offer.

While I was at it, I also took advantage of another deal for a Sonos Move 2 portable speaker; we’ll have to decide whether this one will be permanently docked on my desk or next to a record player that Silvia is getting me for Christmas. (We don’t like surprises for each other, especially when it comes to furniture-adjacent shopping.)

So that’s my update before we go on break for a couple of weeks. I can already feel that, when I’m back, I’ll have some changes to cover on the software front. But we’ll talk about those in 2026.

2025-12-18 00:12:23

In the depths of the pandemic, I bought an iRobot Roomba j7 vacuum. At the time, it was one of the nicer models iRobot offered, but it was expensive. It did a passable job in areas with few obstacles, but it filled up fast, had a hard time positioning itself on its base, and frequently got clogged with debris, requiring me to partially disassemble and clean it regularly. The experience was bad enough that I’d written off robot vacuums as nice-to-have appliances that weren’t a great value.

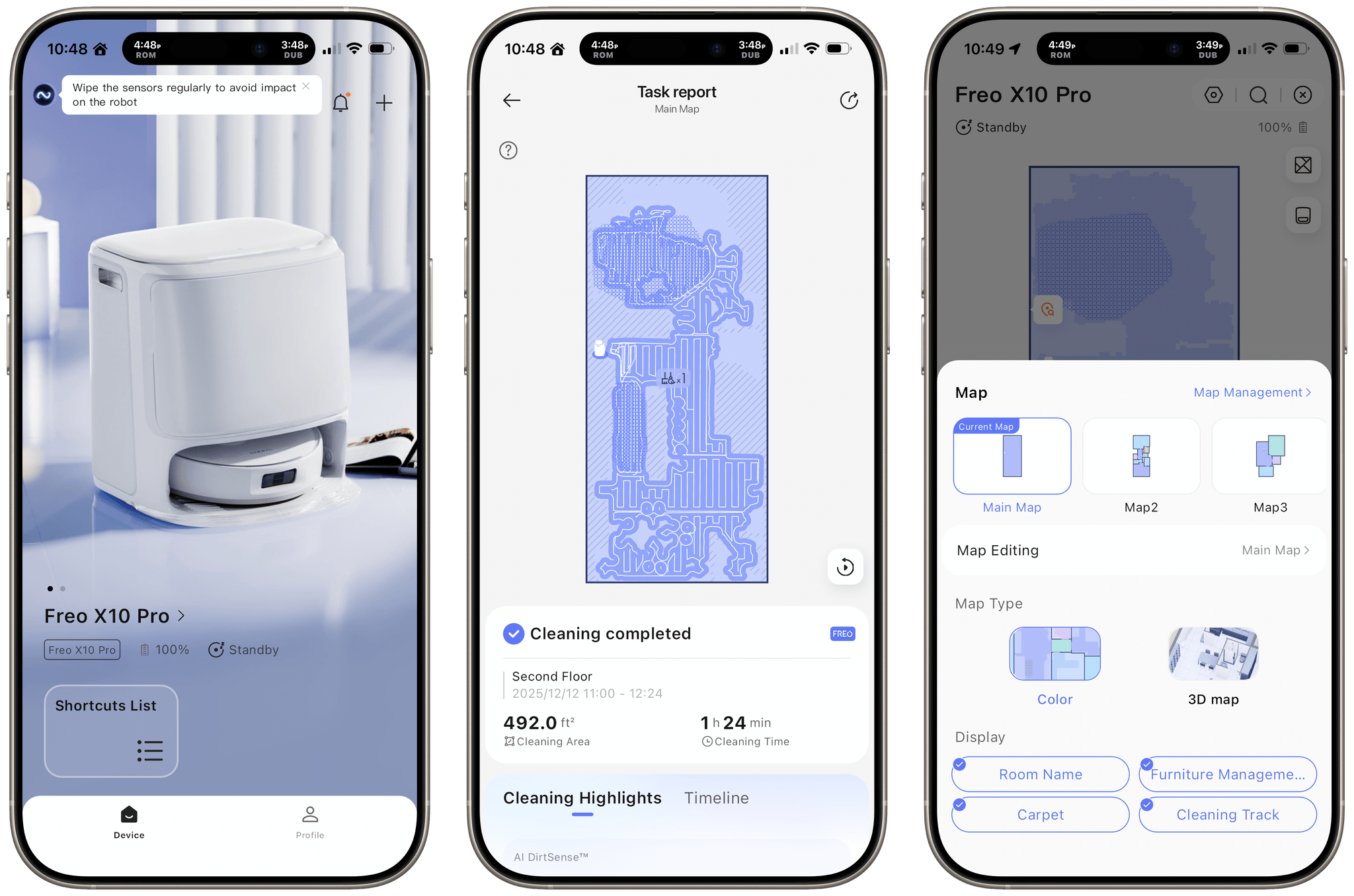

So, when Narwal contacted me to see if I wanted to test its new Freo X10 Pro, I was hesitant at first. However, I’d seen a couple of glowing early reviews online, so I thought I’d see if the passage of time had been good to robo-vacuums, and boy, has it. The Narwal Freo X10 Pro is not only an excellent vacuum cleaner, but a mopping champ, too.

The Freo X10 Pro navigating around my living room.

For context, I live in a three-story condo, which isn’t ideal for robot vacuums. For the past two months or so, the Freo X10 Pro has been stationed in my kitchen tucked away in a nook next to my refrigerator on the second floor. I picked that spot because the second floor is our main living space where we spend most of our time, and as a result, it’s the floor that needs cleaning most often.

To use the vacuum on the first or third floors, the Freo X10 Pro needs to be carried upstairs or downstairs. The vacuum’s app handles that just fine because it can store up to four maps. And, although Narwal’s device is heavier than my old Roomba, it’s not difficult to move between floors. In fact, I look forward to it because the Narwal talks, announcing that it is suspended in the air whenever I pick it up, which makes me laugh every single time.

Ready for action.

As a practical matter, though, I’ve found that I only move the Narwal to another floor for vacuuming, not mopping. That’s because the Freo X10 Pro needs to return to its base station frequently to clean its mopping pads. That’s not a big deal when it’s on the same floor as its base station. However, if I carry the Narwal to the third floor, I need to fetch it for a second-floor self-cleaning five or six times during a mopping session, which is a hassle. In contrast, vacuuming can be done in one shot, which is a much better experience.

The Narwal uses LiDAR to navigate around your home. During setup, it drives around your home mapping obstacles, which didn’t take long. Vacuuming the roughly 500 square feet of vacuumable space on our second floor takes about 70 minutes, while mopping takes close to 90. That’s faster than our old Roomba could navigate the same space, and it finishes the task using only about 30% of its battery, allowing me to vacuum or mop multiple floors on one charge.
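For a sense of what those numbers imply, here is a back-of-the-envelope sketch. It assumes battery drain scales linearly with the area cleaned and that every run resembles the 500-square-foot second floor. Neither assumption comes from Narwal, and the function names are hypothetical:

```python
def coverage_rate(square_feet: float, minutes: float) -> float:
    """Average area cleaned per minute, in square feet."""
    return square_feet / minutes


def runs_per_charge(battery_percent_per_run: int) -> int:
    """Whole number of similar runs that fit in one full charge (linear-drain assumption)."""
    if not 0 < battery_percent_per_run <= 100:
        raise ValueError("battery_percent_per_run must be between 1 and 100")
    return 100 // battery_percent_per_run


# Figures from the review: about 500 sq ft, 70 minutes to vacuum, 90 to mop, roughly 30% battery.
vacuum_rate = coverage_rate(500, 70)
mop_rate = coverage_rate(500, 90)
full_runs = runs_per_charge(30)

print(f"Vacuuming: about {vacuum_rate:.1f} sq ft per minute")
print(f"Mopping: about {mop_rate:.1f} sq ft per minute")
print(f"Similar-sized runs per charge: {full_runs}")
```

Under those assumptions, one charge covers about three floors of this size, which lines up with the observation that multiple floors can be cleaned without recharging.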

The faceplate of the Narwal’s base station is magnetic, providing access to the bag that collects the dirt it vacuums up.

At the end of a vacuuming run, the Freo X10 Pro returns to its base station, and while it charges, the dirt it collected is sucked into a bag in the base station, which Narwal says will last 120 days before needing to be replaced. When the bag fills up, you throw it out and replace it with a fresh one, which after two months I haven’t had to do yet.

The more frequent maintenance task is filling the Narwal’s tank with clean water and emptying the dirty water tank after a mopping run. The tanks hold about three mopping runs’ worth of water, but I typically empty and rinse the dirty water tank every time the Freo X10 Pro is finished mopping. The clean water tank uses little tablets that dissolve in the water to help it clean, which are sold separately. I got a box of 24 on Amazon that should last 4-5 months, but Narwal should throw at least a few in the box with the robot.

The Narwal’s clean and dirty water tanks.

Overall, I’ve been impressed with the Freo X10 Pro’s cleaning power. Its 11,000 Pa of suction and 8N of mop pressure don’t mean much to me, but what I can say is that using the Freo X10 Pro to vacuum once per week and vacuum and mop a second day of the week has kept our house clean. The Freo X10 Pro won’t magically reach areas that are narrower than it, which means you’ll still need to do a little manual vacuuming. However, the Narwal does a meticulous job criss-crossing the areas it can reach, deftly navigating around furniture and across rugs and hardwood floors.

The Freo X10 Pro’s mop works well, too, lifting up and out of the way when it’s not in use. I was curious how wet the robot would leave our floors, and while you can see dampness in its wake, the water evaporates quickly. However, because it takes so many trips up and down the stairs to mop our other floors, I’ve found that it’s more convenient to mop those floors myself.

The Narwal is short enough that it fits under nearly every piece of furniture in our house.

About the only area where the Roomba j7 has a slight leg up on the Narwal is with electrical cords and charging cables. That may come down to the difference between a camera-driven vacuum and one that navigates by LiDAR, but whatever the case, I do need to make sure there aren’t charging cables in the way of the Freo X10 Pro more than I ever had to with the Roomba. The Freo X10 Pro also has trouble with our rather lightweight bath mats, but that was true of the Roomba too, and they’re easily moved when it’s time to clean.

Another nice feature of the Freo X10 Pro is that it’s relatively quiet, at least compared to our old Roomba. I can still hear the Freo X10 Pro from other parts of the house, but it doesn’t knock into furniture loudly or rumble across the floor the way the Roomba did.

Like other robot vacuums, the Narwal is controlled using an app or the buttons on the robot itself. There’s a lot going on in Narwal’s app, which can be a little confusing at first. As a practical matter, though, once I had it map our home and set up a vacuuming and mopping schedule, I haven’t used the app much. It sends me push notifications when a cleaning session is completed and when the robot gets stuck somewhere, which happens occasionally. That said, you can use the app to tweak your cleaning routines, track the wear on replaceable parts, and more if you want.

One thing that hasn’t changed over the years since I got that first robot vacuum is the fact that robot vacuums debut at high prices but are frequently discounted, given the intense competition among their makers. When I started using the Freo X10 Pro, it was retailing for $700. However, in the months since, and even now as this is published, it’s been significantly discounted whether you buy directly from Narwal or a third-party retailer like Amazon.

However, what’s different about the Narwal is that it’s a far better value than my Roomba j7 was when it was brand new. It’s faster, quieter, better at cleaning, and also mops my floors. The Freo X10 Pro can’t climb stairs or reach every tight spot around my house, but it’s significantly cut back the amount of time we spend vacuuming and mopping our floors. In fact, the floors are cleaner simply by virtue of the fact that Narwal’s robot takes care of it unfailingly on a schedule. It doesn’t procrastinate, putting off chores like I do. Instead, it just goes about its business, cleaning up a couple of times a week, which makes it well worth this kind of investment to me.

The Freo X10 Pro is available directly from Narwal. The list price on Narwal’s website is $699.99, but the company is currently running a sale reducing the price to $419.99. You can also buy the Freo X10 Pro from retailers like Amazon.